David Natingga - Data Science Algorithms in a Week - Second Edition

Year: 2018, publisher: Packt Publishing, genre: Computer.

- Book:Data Science Algorithms in a Week - Second Edition

- Author: David Natingga

- Publisher:Packt Publishing

- Genre: Computer

- Year:2018

Data Science Algorithms in a Week - Second Edition: summary, description and annotation

Build a strong foundation of machine learning algorithms in 7 days

Key Features

- Use Python and its wide array of machine learning libraries to build predictive models

- Learn the basics of the 7 most widely used machine learning algorithms within a week

- Know when and where to apply data science algorithms using this guide

Machine learning applications are highly automated and self-modifying: they continue to improve over time with minimal human intervention as they learn from training data. To address the complex nature of various real-world data problems, specialized machine learning algorithms have been developed. Through algorithmic and statistical analysis, these models can also be leveraged to gain new knowledge from existing data.

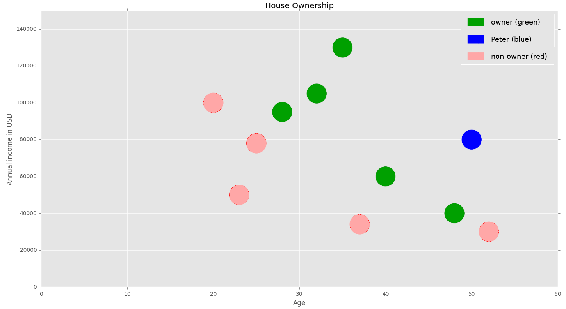

Data Science Algorithms in a Week addresses the problems of accurate and efficient data classification and prediction. Over the course of seven days, you will be introduced to seven algorithms, along with exercises that will help you understand different aspects of machine learning. You will see how to pre-cluster your data to optimize classification on large datasets. The book also guides you in predicting data based on existing trends in your dataset. It covers k-nearest neighbors, Naive Bayes, decision trees, random forest, k-means, regression, and time-series analysis.
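As a taste of the first algorithm in that list, here is a minimal sketch of k-nearest neighbors classification in plain Python. It is not the book's code: the function name `knn_classify`, the `(features, label)` data layout, and the toy data are assumptions made for illustration. It uses `math.dist`, so it needs Python 3.8 or later.

```python
import math
from collections import Counter


def knn_classify(training_data, query, k=3):
    """Classify `query` by majority vote among its k nearest training points.

    training_data: list of (feature_tuple, label) pairs (a hypothetical layout
    chosen for this sketch).
    """
    # Sort the training points by Euclidean distance to the query and keep k.
    neighbors = sorted(
        training_data,
        key=lambda item: math.dist(item[0], query),
    )[:k]
    # Majority vote among the labels of the k nearest neighbors.
    votes = Counter(label for _, label in neighbors)
    return votes.most_common(1)[0][0]


# Toy data invented for illustration: (temperature, wind speed) -> label.
data = [
    ((10, 3), "cold"),
    ((12, 5), "cold"),
    ((25, 2), "warm"),
    ((28, 4), "warm"),
    ((30, 1), "warm"),
]
print(knn_classify(data, (27, 3), k=3))  # warm
```

Choosing an odd `k` reduces the chance of tied votes in a two-class problem; on a tie, this sketch simply returns whichever label `Counter.most_common` lists first.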

By the end of this book, you will understand how to choose machine learning algorithms for clustering, classification, and regression, and know which is best suited for your problem.

What you will learn

- Understand how to identify a data science problem correctly

- Implement well-known machine learning algorithms efficiently using Python

- Classify your datasets accurately using Naive Bayes, decision trees, and random forests

- Devise an appropriate prediction solution using regression

- Work with time series data to identify relevant data events and trends

- Cluster your data using the k-means algorithm
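The k-means item above can be illustrated with a short sketch of Lloyd's algorithm, which alternates an assignment step and a centroid-update step until no point changes cluster. This is not taken from the book; the function name `k_means` and the toy data are hypothetical, and seeding with the first `k` points is a simplifying assumption (practical implementations usually use random or k-means++ initialization).

```python
import math


def k_means(points, k, max_iterations=100):
    """Cluster points with Lloyd's k-means algorithm.

    Assumption for this sketch: the first k points are the initial centroids.
    Returns (centroids, assignments); assignments[i] is the cluster of points[i].
    """
    centroids = list(points[:k])
    assignments = None
    for _ in range(max_iterations):
        # Assignment step: each point joins the cluster of its nearest centroid.
        new_assignments = [
            min(range(k), key=lambda c: math.dist(p, centroids[c]))
            for p in points
        ]
        if new_assignments == assignments:
            break  # no point changed cluster, so the algorithm has converged
        assignments = new_assignments
        # Update step: move each centroid to the mean of its assigned points.
        for c in range(k):
            members = [p for p, a in zip(points, assignments) if a == c]
            if members:  # an empty cluster keeps its previous centroid
                centroids[c] = tuple(sum(axis) / len(members) for axis in zip(*members))
    return centroids, assignments


# Toy data invented for illustration: two well-separated groups of points.
data = [(1.0, 1.0), (1.5, 2.0), (1.0, 0.5), (8.0, 8.0), (9.0, 8.5), (8.5, 9.0)]
centroids, labels = k_means(data, k=2)
print(labels)  # [0, 0, 0, 1, 1, 1]
print(centroids)
```

Even though both initial centroids here come from the same group, the second centroid drifts toward the distant points after one update, and the algorithm settles on the two natural clusters. k-means can still converge to a poor local optimum with unlucky seeding, which is why libraries typically run several initializations.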

This book is for aspiring data science professionals who are familiar with Python and have a little background in statistics. You'll also find this book useful if you're currently working with data science algorithms in some capacity and want to expand your skill set.

Downloading the example code for this book

You can download the example code files for all Packt books you have purchased from your account at http://www.PacktPub.com. If you purchased this book elsewhere, you can visit http://www.PacktPub.com/support and register to have the files e-mailed directly to you.