Disclaimer

The information in this eBook is not meant to be applied as is in a production environment. By applying it to a production environment, you take full responsibility for your actions.

The author has made every effort to ensure that the information within this book was correct at the time of publication. The author does not assume and hereby disclaims any liability to any party for any loss, damage, or disruption caused by errors or omissions, whether such errors or omissions result from accident, negligence, or any other cause.

No part of this eBook may be reproduced or transmitted in any form or by any means, electronic or mechanical, recording or by any information storage and retrieval system, without written permission from the author.

Acknowledgments

Special thanks to Austin Kodra for the edits.

Copyright

Intermediate Tutorials for Machine Learning

Copyright 2020 Derrick Mwiti. All Rights Reserved.

Other Things to Learn

Learn Python

Learn Data Science

Learn Deep Learning

Intermediate Tutorials for Machine Learning

Top 10 Tricks for TensorFlow and Google Colab Users

Google's Colab is a truly innovative product for machine learning. It enables machine learning engineers to run Notebooks and easily share them with colleagues. Another key advantage is access to GPUs and TPUs.

In this piece, we'll highlight some tips and tricks that will help you get the best out of Google's Colab.

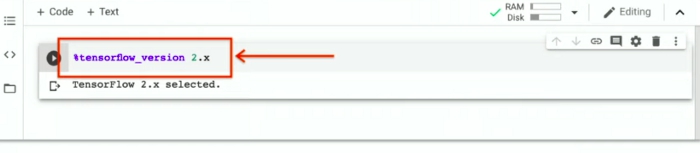

10. Specify TensorFlow version

When this was written, Colab was about to make TF 2 its default TensorFlow version. This means that Notebooks written for TF 1 would probably fail after the switch. To ensure that your code doesn't break, it's recommended that you specify the TF version in all your Notebooks. This way, your Notebooks keep working even if the default version changes.

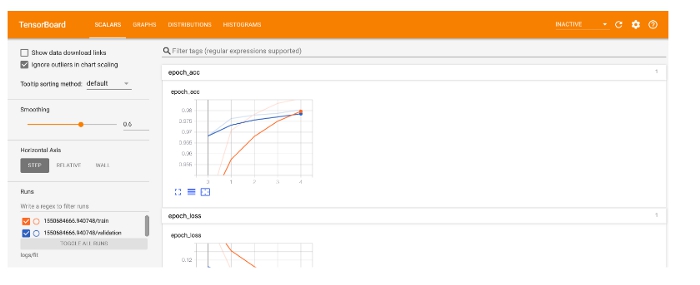
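One way to specify the version is to pin it with `pip` in the Notebook's first cell. A cheap complement is a guard that stops the Notebook early if the runtime's TensorFlow major version is not the one the Notebook was written for. The sketch below uses only the standard library; the function name `require_tf_major_version` is hypothetical, and it only inspects a version string such as `tf.__version__`, so it makes no assumptions about the TensorFlow API itself.

```python
import re


def require_tf_major_version(version: str, expected_major: int) -> int:
    """Check that a version string such as tf.__version__ has the expected major number.

    Returns the parsed major version, or raises RuntimeError on a mismatch so the
    Notebook stops before any version-specific code runs.
    """
    match = re.match(r"\s*(\d+)\.", version)
    if match is None:
        raise ValueError(f"Unrecognised version string: {version!r}")
    major = int(match.group(1))
    if major != expected_major:
        raise RuntimeError(
            f"This Notebook was written for TensorFlow {expected_major}.x, "
            f"but the runtime provides {version}"
        )
    return major
```

In a Notebook you would run `import tensorflow as tf` followed by `require_tf_major_version(tf.__version__, 2)` in the first cell, so a version mismatch surfaces as one clear error instead of a confusing failure halfway through.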

9. Use TensorBoard

Google Colab supports TensorBoard out of the box, so you can display it right inside a Notebook. It is a great tool for visualizing your model's performance. Use it!

8. Train TFLite Models on Colab

When building mobile machine learning models, you can take advantage of Colab's resources to train them. The alternative is training your model on other, more expensive cloud solutions or on your laptop, which might not have the compute power needed.

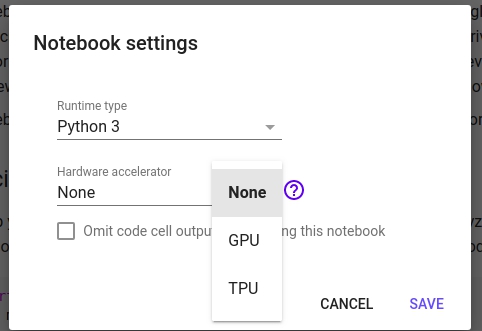

7. Use TPUs

If your model needs more processing power, change your runtime type to TPU. However, only use TPUs when you really need them: they are resource-intensive, so their availability is limited.

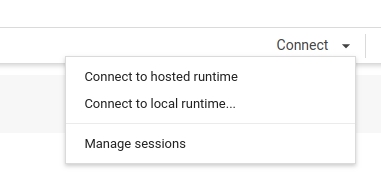

6. Use Local Runtimes

If you have your own powerful hardware accelerator, Colab allows you to connect to it as a local runtime. Just click the Connect drop-down and select your preferred runtime.

5. Use Colab Scratchpad

Sometimes you might want to test something out quickly. Instead of creating a new Notebook that you don't intend to keep on your Drive, you can use the scratchpad, an empty Notebook that isn't saved to your Drive.

4. Copy Data to Colab's VM

To speed up data loading, it's recommended that you copy your data files onto Colab's VM instead of loading them from elsewhere.
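A minimal sketch of this tip, assuming the data lives in a Google Drive folder that is already mounted in the Notebook (Colab's `google.colab.drive.mount` function is the usual way to do that). The helper copies the folder onto the VM's local disk once, and later cells read from the local copy. The function name and both paths in the usage comment are hypothetical placeholders.

```python
import shutil
from pathlib import Path


def copy_to_local(src_dir: str, dst_dir: str) -> Path:
    """Copy a dataset directory onto the VM's local disk once and return the local path.

    If the destination already exists, the copy is skipped, so re-running the cell is cheap.
    """
    src, dst = Path(src_dir), Path(dst_dir)
    if not src.is_dir():
        raise FileNotFoundError(f"Source directory not found: {src}")
    if not dst.exists():
        shutil.copytree(src, dst)
    return dst


# Hypothetical usage in a Colab cell, after mounting Drive:
# local_dir = copy_to_local("/content/drive/MyDrive/datasets/my_dataset", "/content/data")
# ...then build the input pipeline from local_dir instead of the Drive path.
```

For datasets made of many small files, copying a single archive and unpacking it on the VM (for example with `shutil.unpack_archive`) is often faster than copying the files one by one.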

3. Check Your RAM and Resource Limits

Since Colab's resources are free, they are not guaranteed. Therefore, keep an eye on your RAM and disk usage to ensure that you don't run out of resources.
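Besides watching Colab's own usage indicators, you can check resources from a cell. A minimal sketch, assuming a Linux VM (which Colab runtimes are): RAM figures come from `/proc/meminfo`, and disk figures from the standard library's `shutil.disk_usage`. The helper names are hypothetical.

```python
import shutil

GIB = 1024 ** 3


def parse_meminfo(text: str) -> dict:
    """Parse /proc/meminfo-style text into {field: value}; sizes there are in kB (KiB)."""
    info = {}
    for line in text.splitlines():
        if ":" not in line:
            continue
        key, rest = line.split(":", 1)
        parts = rest.split()
        if parts:
            info[key.strip()] = int(parts[0])
    return info


def resource_report(path: str = "/", meminfo_path: str = "/proc/meminfo") -> dict:
    """Return total/available RAM and total/free disk in GiB for a Linux VM."""
    with open(meminfo_path) as f:
        mem = parse_meminfo(f.read())
    disk = shutil.disk_usage(path)
    # Older kernels lack MemAvailable; fall back to MemFree as a rough substitute.
    available_kib = mem.get("MemAvailable", mem.get("MemFree", 0))
    return {
        "ram_total_gib": mem["MemTotal"] * 1024 / GIB,
        "ram_available_gib": available_kib * 1024 / GIB,
        "disk_total_gib": disk.total / GIB,
        "disk_free_gib": disk.free / GIB,
    }


# Example: print a one-line summary in a Colab cell.
# r = resource_report()
# print(f"RAM {r['ram_available_gib']:.1f}/{r['ram_total_gib']:.1f} GiB available, "
#       f"disk {r['disk_free_gib']:.1f}/{r['disk_total_gib']:.1f} GiB free")
```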

2. Close Tabs when Done

Closing tabs when done ensures that you disconnect from the VM and therefore save resources.

1. Use GPUs only when Needed

Since GPU access is restricted, save your GPU usage for when you really need it. However, if you need to work in Colab with fewer restrictions than the free version allows, you can check out the Pro version.
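To tell whether an attached GPU is actually being used, you can query `nvidia-smi`, which is available on Colab's GPU runtimes. A minimal sketch, assuming the `--query-gpu` and `--format=csv,noheader,nounits` options of `nvidia-smi`; the helper names and the dictionary keys are hypothetical.

```python
import shutil
import subprocess

# Fields requested from nvidia-smi, in this order.
QUERY_FIELDS = "name,utilization.gpu,memory.used,memory.total"


def _to_int(field: str):
    """Convert a numeric field, or return None for placeholders such as '[N/A]'."""
    try:
        return int(field)
    except ValueError:
        return None


def parse_gpu_query(output: str) -> list:
    """Parse `nvidia-smi --query-gpu=... --format=csv,noheader,nounits` output, one GPU per line."""
    gpus = []
    for line in output.strip().splitlines():
        name, util, used, total = [field.strip() for field in line.split(",")]
        gpus.append({
            "name": name,
            "utilization_pct": _to_int(util),
            "memory_used_mib": _to_int(used),
            "memory_total_mib": _to_int(total),
        })
    return gpus


def gpu_status() -> list:
    """Return per-GPU stats, or an empty list when no NVIDIA driver/GPU is available."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(
        ["nvidia-smi", f"--query-gpu={QUERY_FIELDS}", "--format=csv,noheader,nounits"],
        capture_output=True,
        text=True,
        check=False,
    )
    if result.returncode != 0:
        return []
    return parse_gpu_query(result.stdout)


# Example: call it between training steps or right after a training cell.
# print(gpu_status())
```

If utilization stays near zero while your code runs, the workload probably doesn't need a GPU, and switching the runtime type back to CPU is worth considering.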

I hope that you will find these Colab tips and tricks for TensorFlow helpful.

Federated Learning

Advancements in the power of machine learning have brought with them major data privacy concerns. This is especially true when it comes to training machine learning models with data obtained from the interaction of users with devices such as smartphones.

So the big question is, how do we train and improve these on-device machine learning models without sharing personally identifiable data? That is the question that we'll seek to answer in this look at a technique known as federated learning.

Centralized Machine Learning

The traditional process for training a machine learning model involves uploading data to a server and using that to train models. This way of training works just fine as long as the privacy of the data is not a concern.

However, when it comes to training machine learning models where personally identifiable data is involved (on-device, or in industries with particularly sensitive data like healthcare), this approach becomes unsuitable.

Training models on a centralized server also means that you need enormous amounts of storage space, as well as world-class security to avoid data breaches. But imagine if you were able to train your models with data that's stored locally on a user's device.

Machine Learning on Decentralized Data

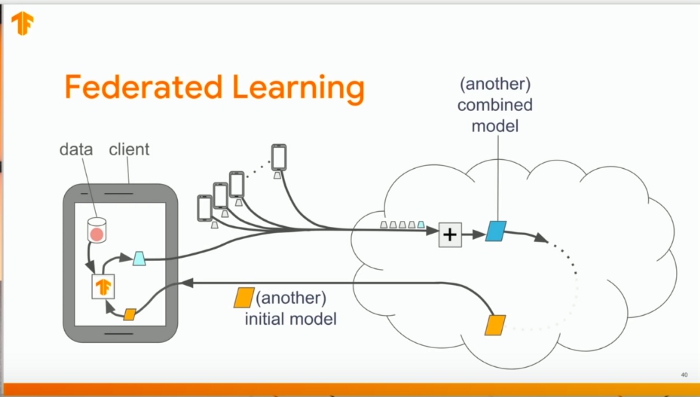

Enter: federated learning.

Federated learning is a model training technique that enables devices to learn collaboratively from a shared model. The shared model is first trained on a server using proxy data. Each device then downloads the model and improves it using the data stored on that device.

The device trains the model on its locally available data. The changes made to the model are summarized as an update, and only that update is sent to the cloud; the training data itself never leaves the device. To make uploading these updates faster, they are compressed using random rotations and quantization. On the server, the updates sent by the devices are averaged to obtain a single combined model. This is repeated for several iterations until a high-quality model is obtained.
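The loop described above can be sketched as a toy, single-process NumPy simulation. It rests on several assumptions: the "model" is a linear-regression weight vector, each device runs a few steps of gradient descent, each device's update is weighted by its number of examples (the usual federated averaging choice), and compression is a dense random orthogonal rotation followed by 8-bit uniform quantization. These are simplifying choices for readability, not necessarily what a production system uses, and all function names are hypothetical. Because the weights sum to one, adding the weighted average of the updates to the global model is the same as averaging the devices' local models (up to quantization error).

```python
import numpy as np


def local_update(global_w, X, y, lr=0.1, epochs=5):
    """Device side: a few epochs of full-batch gradient descent on local data
    (linear regression with squared error), returning only the change to the model."""
    w = global_w.copy()
    for _ in range(epochs):
        grad = 2 * X.T @ (X @ w - y) / len(y)
        w -= lr * grad
    return w - global_w


def random_rotation(dim, rng):
    """Random orthogonal matrix via QR of a Gaussian matrix (the sign fix makes it uniform)."""
    q, r = np.linalg.qr(rng.normal(size=(dim, dim)))
    return q * np.sign(np.diag(r))


def compress(delta, rotation, bits=8):
    """Rotate the update, then uniformly quantize each coordinate to 2**bits levels (bits <= 16)."""
    z = rotation @ delta
    lo, hi = z.min(), z.max()
    scale = (hi - lo) / (2 ** bits - 1) if hi > lo else 1.0
    codes = np.round((z - lo) / scale).astype(np.uint16)
    return codes, lo, scale  # this is what gets uploaded


def decompress(codes, lo, scale, rotation):
    """Server side: de-quantize, then undo the rotation (its inverse is its transpose)."""
    return rotation.T @ (codes * scale + lo)


def federated_round(global_w, clients, rng, bits=8):
    """One round of federated averaging with compressed updates."""
    rotation = random_rotation(len(global_w), rng)  # devices and server must share this matrix
    total = sum(len(y) for _, y in clients)
    avg_delta = np.zeros_like(global_w)
    for X, y in clients:
        codes, lo, scale = compress(local_update(global_w, X, y), rotation, bits)
        # Weight each device by its number of local examples.
        avg_delta += (len(y) / total) * decompress(codes, lo, scale, rotation)
    return global_w + avg_delta


# Usage on synthetic data: three devices holding different amounts of data.
rng = np.random.default_rng(0)
true_w = np.array([2.0, -3.0, 0.5])
clients = []
for n in (50, 80, 120):
    X = rng.normal(size=(n, 3))
    clients.append((X, X @ true_w + 0.01 * rng.normal(size=n)))

w = np.zeros(3)  # stands in for the server's initial (proxy-trained) model
for _ in range(30):
    w = federated_round(w, clients, rng)
print(np.round(w, 2))  # should end up close to true_w
```

Why rotate before quantizing? Uniform quantization uses one range (min to max) for all coordinates, so a single large coordinate wastes precision on the others. A random rotation tends to spread the update's magnitude evenly across coordinates, which narrows that range and reduces the quantization error.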

Compared to centralized machine learning, federated learning has several specific advantages:

Ensuring privacy, since the data remains on the user's device.

Lower latency, because the updated model can be used to make predictions on the user's device.

Smarter models, given the collaborative training process.

Less power consumption, as models are trained on the user's device.