Sushil C. Dimri - Algorithms

Here you can read online Sushil C. Dimri - Algorithms full text of the book (entire story) in english for free. Download pdf and epub, get meaning, cover and reviews about this ebook. year: 2021, publisher: De Gruyter, genre: Children. Description of the work, (preface) as well as reviews are available. Best literature library LitArk.com created for fans of good reading and offers a wide selection of genres:

Romance novel

Science fiction

Adventure

Detective

Science

History

Home and family

Prose

Art

Politics

Computer

Non-fiction

Religion

Business

Children

Humor

Choose a favorite category and find really read worthwhile books. Enjoy immersion in the world of imagination, feel the emotions of the characters or learn something new for yourself, make an fascinating discovery.

- Book:Algorithms

- Author:

- Publisher:De Gruyter

- Genre:

- Year:2021

- Rating:4 / 5

- Favourites:Add to favourites

- Your mark:

- 80

- 1

- 2

- 3

- 4

- 5

Algorithms: summary, description and annotation

We offer to read an annotation, description, summary or preface (depends on what the author of the book "Algorithms" wrote himself). If you haven't found the necessary information about the book — write in the comments, we will try to find it.

Algorithms — read online for free the complete book (whole text) full work

Below is the text of the book, divided by pages. System saving the place of the last page read, allows you to conveniently read the book "Algorithms" online for free, without having to search again every time where you left off. Put a bookmark, and you can go to the page where you finished reading at any time.

Font size:

Interval:

Bookmark:

De Gruyter Textbook

ISBN 9783110693416

e-ISBN (PDF) 9783110693607

e-ISBN (EPUB) 9783110693751

Bibliographic information published by the Deutsche Nationalbibliothek

The Deutsche Nationalbibliothek lists this publication in the Deutsche Nationalbibliografie; detailed bibliographic data are available on the Internet at http://dnb.dnb.de.

2021 Walter de Gruyter GmbH, Berlin/Boston

An algorithm is a finite sequence of computational steps that produces the desired output when applied on a certain problem with necessary input values. In algorithm, every step must be clear, simple, definite, and unambiguous. shows diagrammatic representation of algorithm.

Figure 1.1: Algorithm.

An algorithm is defined as an ultimate group of instructions to achieve a given set of goals to fulfill the given criteria.

Input: Zero or more quantities must be passed externally as input to algorithm.

Output: Minimum one quantity must be formed as an output.

Finiteness: An algorithm must be well defined in a finite set of steps.

Definiteness: Each and every instruction of a given algorithm must be precise and unambiguous.

Effectiveness: Every statement/instruction specifically contributes something in solution, defined by an algorithm.

Example 1.1: We will discuss an example of a sorting algorithm.

Input: Set of elements (sequence)

where elements are in random fashion.

Output: Elements in ascending order, that is:

Analysis of the algorithms allows us to take decisions about the value of one algorithm over another. When an algorithm is executed in computer, it requires central processing unit (CPU, for performing its operations) and memory (for storing program and data). There are two parameters on which we can analyze the performance of an algorithm. These are time complexity and space complexity. As compared to space analysis, the analysis of time requirements for an algorithm is important, but whenever necessary, both the complexities are used.

The amount of memory required by a program to run to conclusion is known as space complexity. Space is a kind of resource that is reusable, and we can easily increase the space (memory) of the system. But time is a resource that is limited, not reusable, and nonpurchasable.

Time complexity completely relies on the size of the input. The sum of time of CPU needed by the algorithm (program) to execute to conclusion is known as time complexity. It should be noted that the same algorithm can take different time to run. There are three kinds of time complexity: best, worst, and average case complexity of the algorithm. Best-case complexity is the minimum amount of time taken by the algorithm for n-sized input. Average-case complexity is the time taken by the algorithm having typical input data of size n. Worst-case complexity is the maximum amount of time required by the algorithm for n-sized input. These terms will be discussed later in this chapter.

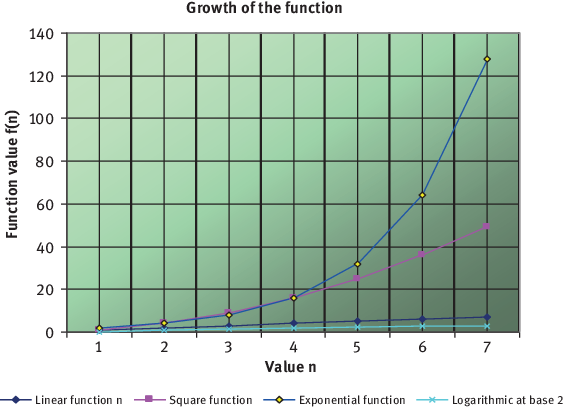

The time complexity of an algorithm is generally a function of the input size n. Growth order of a function indicates how fast a function grows with respect to change in input size n. Some useful popular functions are:

Linear function: n

Square function: n2

Exponential function: 2n

Logarithmic function: log2n

shows how various functions grow with the input size n. The graph is evidence for how fast exponential function grows in comparison to other functions.

Figure 1.2: Plotting function values.

Whenever we learn about an algorithm, we are keen to depict them as per their preciseness and efficiency. In other words, we are mainly concerned about the growth of the running time of an algorithm (not in the exact running time). Scientifically, this phenomenon is called asymptotic running time. There is an acute need to design a method to find out the rate of growth of functions so that we can compare algorithms. Asymptotic notation is an innovative way to provide the suitable methodology for categorizing functions according to the rate of growth of function.

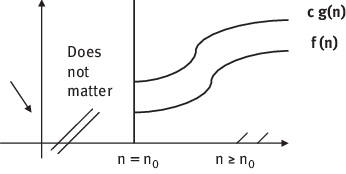

Let f(n) and g(n) are two positive functions. A function f(n) is said to be in O(g(n)) signified by f(n)=O(g(n)). If f(n) is bounded above by some positive constant multiple of g(n) for large values of n, i.e. there exists some positive constant c and some nonnegative whole number n0 (allude ) with the end goal that

Figure 1.3: Growth of the Function f(n) and g(n) for Big oh notation.

Example 1.2:

500 n+1=f(n) says

500 n+1500 n+n{ n1}

501 n501 n2

500 n+1501 n2, n1

So c=501, and n0=1

500 n+1O(n2)

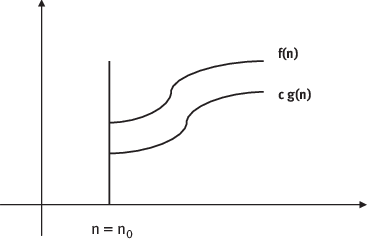

Let f(n) and g(n) are two positive functions. The function f(n) is said to be in (g(n)) (Omega of g(n)) meant by f(n)=(g(n)); if function f(n) is lower bounded by some positive multiple of g(n) for some large value of n, i.e. there exist a positive constant c and some nonnegative whole number n0 (allude ), with the end goal that

Figure 1.4: Growth of the Function f(n) and g(n) for Omega notation.

Example 1.3:

f(n)=(n3)

g(n)=(n2)

n3n2 v n0

n31.n2,where n00

Hence, c=1, n0=0, n3(n2)

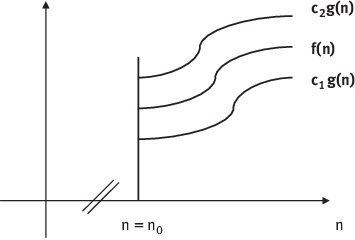

Let f(n) and g(n) are two positive functions. A function f(n) is said to be in (g(n)) (Theta of g(n)), which means f(n)=(g(n)). The function f(n) is limited above and below by some positive constant multiple of g(n) for large value of n, i.e. there exist positive constants c1 and c2 and some nonnegative whole number n0 (allude ), with the end goal that

Figure 1.5: Growth of the Function f(n) and g(n) for Theta notation.

Example 1.4:

For upper bound:

For lower bound:

So, we have

This implies that

Theorem 1.1:

Font size:

Interval:

Bookmark:

Similar books «Algorithms»

Look at similar books to Algorithms. We have selected literature similar in name and meaning in the hope of providing readers with more options to find new, interesting, not yet read works.

Discussion, reviews of the book Algorithms and just readers' own opinions. Leave your comments, write what you think about the work, its meaning or the main characters. Specify what exactly you liked and what you didn't like, and why you think so.