Mark Beckner

Quick Start Guide to Azure Data Factory, Azure Data Lake Server, and Azure Data Warehouse

ISBN 978-1-5474-1735-3

e-ISBN (PDF) 978-1-5474-0127-7

e-ISBN (EPUB) 978-1-5474-0129-1

Library of Congress Control Number: 2018962033

Bibliographic information published by the Deutsche Nationalbibliothek

The Deutsche Nationalbibliothek lists this publication in the Deutsche Nationalbibliografie; detailed bibliographic data are available on the Internet at http://dnb.dnb.de.

© 2019 Mark Beckner

Published by Walter de Gruyter Inc., Boston/Berlin

www.degruyter.com

About De|G PRESS

Five Stars as a Rule

De|G PRESS, the startup born out of one of the world's most venerable publishers, De Gruyter, promises to bring you unbiased, valuable, and meticulously edited work on important topics in the fields of business, information technology, computing, engineering, and mathematics. By selecting the finest authors to present, without bias, the information professionals need on their chosen topic, in the depth you would hope for, we wish to satisfy your needs and earn our five-star ranking.

In keeping with these principles, the books you read from De|G PRESS will be practical and efficient and, if we have done our job right, will yield many returns on their price.

We invite businesses to order our books in bulk in print or electronic form as a best solution to meeting the learning needs of your organization, or parts of your organization, in a most cost-effective manner.

There is no better way to learn about a subject in depth than from a book that is efficient, clear, well organized, and information rich. A great book can provide life-changing knowledge. We hope that with De|G PRESS books you will find that to be the case.

Acknowledgments

Thanks to my editor, Jeff Pepper, who worked with me to come up with this quick start approach, and to Triston Arisawa for jumping in to verify the accuracy of the numerous exercises that are presented throughout this book.

About the Author

Mark Beckner is an enterprise solutions expert. With over 20 years of experience, he leads his firm Inotek Group, specializing in business strategy and enterprise application integration with a focus in health care, CRM, supply chain and business technologies.

He has authored numerous technical books, including Administering, Configuring, and Maintaining Microsoft Dynamics 365 in the Cloud, Using Scribe Insight, BizTalk 2013 Recipes, BizTalk 2013 EDI for Health Care, BizTalk 2013 EDI for Supply Chain Management, Microsoft Dynamics CRM API Development, and more. Beckner also helps up-and-coming coders, programmers, and aspiring tech entrepreneurs reach their personal and professional goals.

Mark has a wide range of experience, including specialties in BizTalk Server, SharePoint, Microsoft Dynamics 365, Silverlight, Windows Phone, SQL Server, SQL Server Reporting Services (SSRS), .NET Framework, .NET Compact Framework, C#, VB.NET, ASP.NET, and Scribe.

Beckner's expertise has been featured in Computerworld, Entrepreneur, IT Business Edge, SD Times, UpStart Business Journal, and more.

He graduated from Fort Lewis College with a bachelor's degree in computer science and information systems. Mark and his wife, Sara, live in Colorado with their two children, Ciro and Iyer.

Introduction

A challenge has been presented to me: to distill the essence of Azure Data Factory (ADF), Azure Data Lake Server (ADLS), and Azure Data Warehouse (ADW) into a short, fast quick start guide. There's a tremendous amount of territory to cover when it comes to diving into these technologies! What I hope to accomplish in this book is the following:

- Lay out the steps that will set up each environment and perform basic development within it.

- Show how to move data between the various environments and components, including local SQL and Azure instances.

- Eliminate some of the elusive aspects of the various features (e.g., check out the overview of External Tables).

- Save you time!

I guarantee that this book will help you fully understand how to set up an ADF pipeline integration with multiple sources and destinations, and that doing so will require very little of your time. You'll know how to create an ADLS instance and move data in a variety of formats into it. You'll be able to build a data warehouse that can be populated with an ADF process or by using external tables. And you'll have a fair understanding of permissions, monitoring the various environments, and doing development across components.

Dive in. Have fun. There is a ton of value packed into this little book!

Chapter 1

Copying Data to Azure SQL Using Azure Data Factory

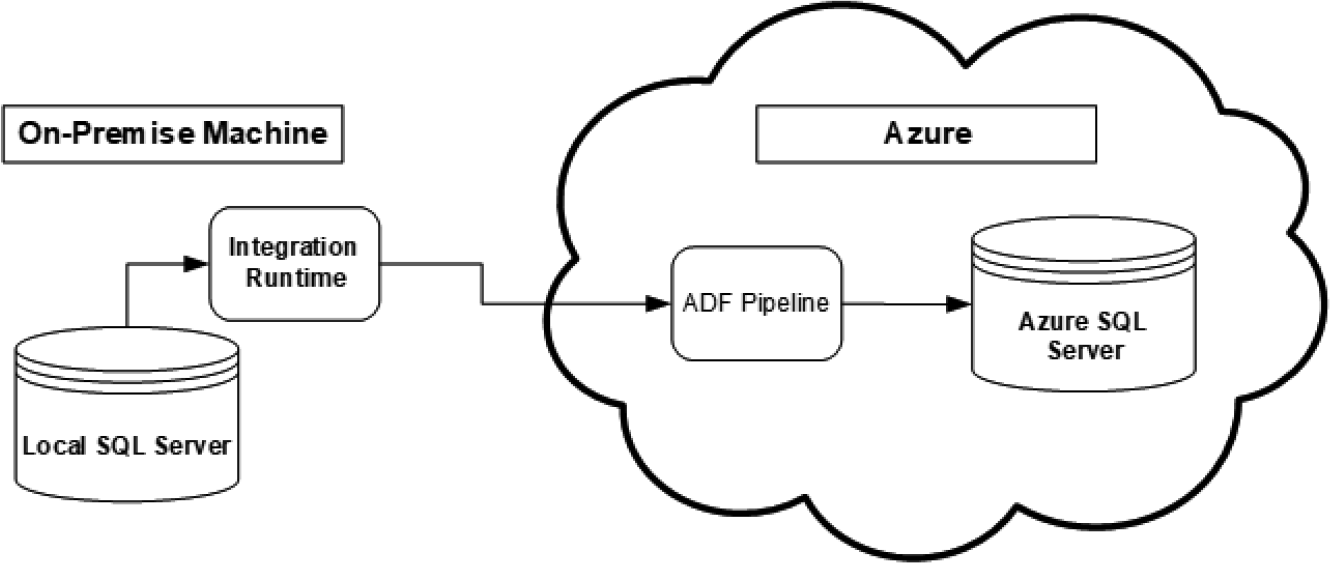

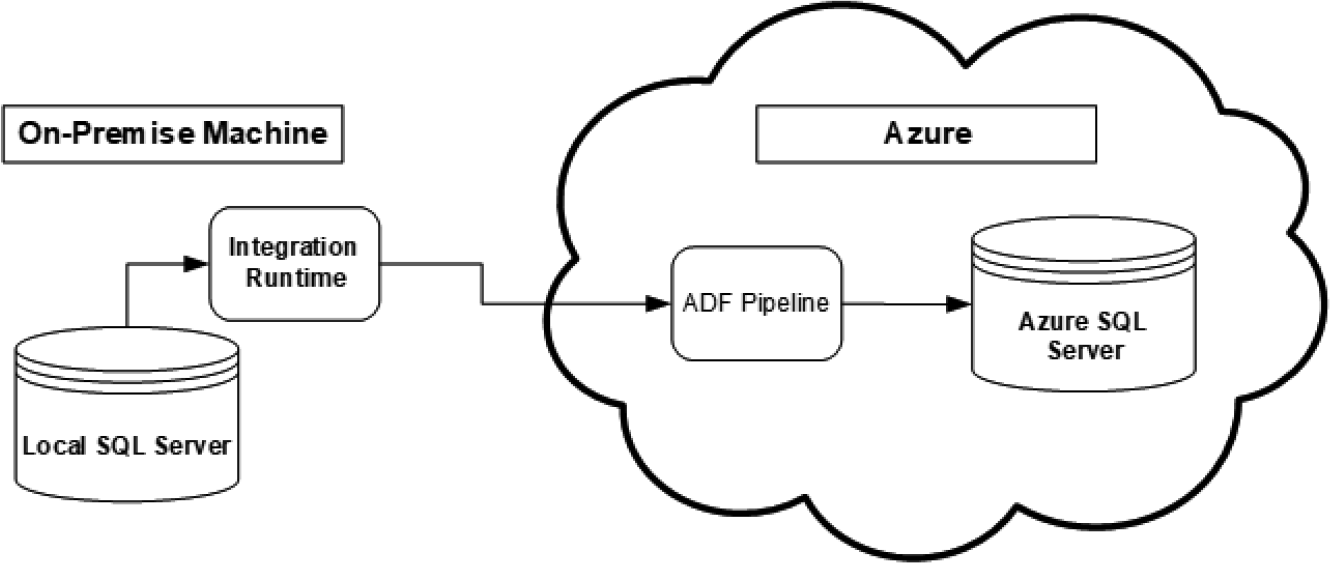

In this chapter we'll build out several components to illustrate how data can be copied between data sources using Azure Data Factory (ADF). The easiest way to illustrate this is by using a simple on-premises local SQL instance with data that will be copied to a cloud-based Azure SQL instance. We'll create an ADF pipeline that uses an integration runtime component to establish the connection with the local SQL database. A simple map will be created within the pipeline to show how the columns in the local table map to the Azure table. The full flow of this model is shown in Figure 1.1.

Figure 1.1: Components and flow of data being built in this chapter
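The idea of a column map between a source table and a destination table can be sketched outside of ADF. The following is only an illustration, not the ADF pipeline itself: two in-memory SQLite databases stand in for the local SQL instance and the Azure SQL instance, and every column name, the `COLUMN_MAP` dictionary, and the `copy_rows` helper are hypothetical names chosen for this example.

```python
import sqlite3

# Hypothetical column map: source (Customers) column -> destination (Contacts) column.
# The real column names are not given in the text; these are assumptions.
COLUMN_MAP = {
    "CustomerID": "ContactID",
    "FirstName": "First",
    "LastName": "Last",
}


def copy_rows(source, dest, column_map):
    """Copy every row from Customers to Contacts, renaming columns per column_map."""
    src_cols = ", ".join(column_map.keys())
    dst_cols = ", ".join(column_map.values())
    placeholders = ", ".join("?" for _ in column_map)
    rows = source.execute(f"SELECT {src_cols} FROM Customers").fetchall()
    dest.executemany(
        f"INSERT INTO Contacts ({dst_cols}) VALUES ({placeholders})", rows
    )
    dest.commit()
    return len(rows)


# In-memory SQLite stands in for the local SQL instance (assumption).
local_db = sqlite3.connect(":memory:")
local_db.execute(
    "CREATE TABLE Customers (CustomerID INTEGER, FirstName TEXT, LastName TEXT)"
)
local_db.executemany(
    "INSERT INTO Customers VALUES (?, ?, ?)",
    [(1, "Ada", "Lovelace"), (2, "Alan", "Turing")],
)

# In-memory SQLite stands in for the Azure SQL instance (assumption).
cloud_db = sqlite3.connect(":memory:")
cloud_db.execute("CREATE TABLE Contacts (ContactID INTEGER, First TEXT, Last TEXT)")

copied = copy_rows(local_db, cloud_db, COLUMN_MAP)
```

In ADF the same three pieces appear as a source dataset, a sink dataset, and the mapping defined on the copy activity; the integration runtime plays the role that the direct `local_db` connection plays here.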

Creating a Local SQL Instance

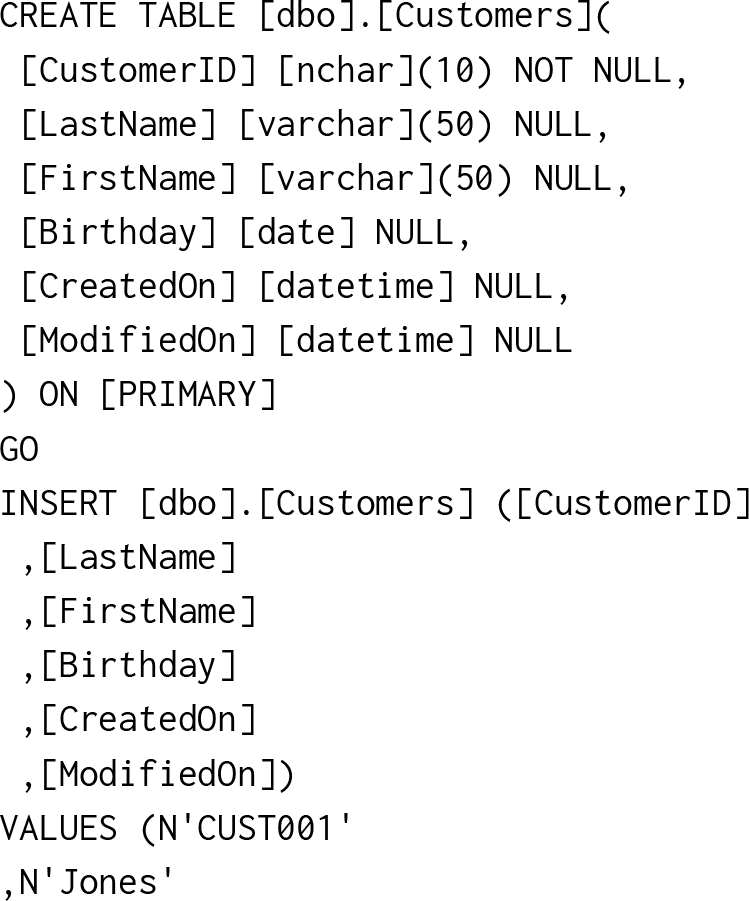

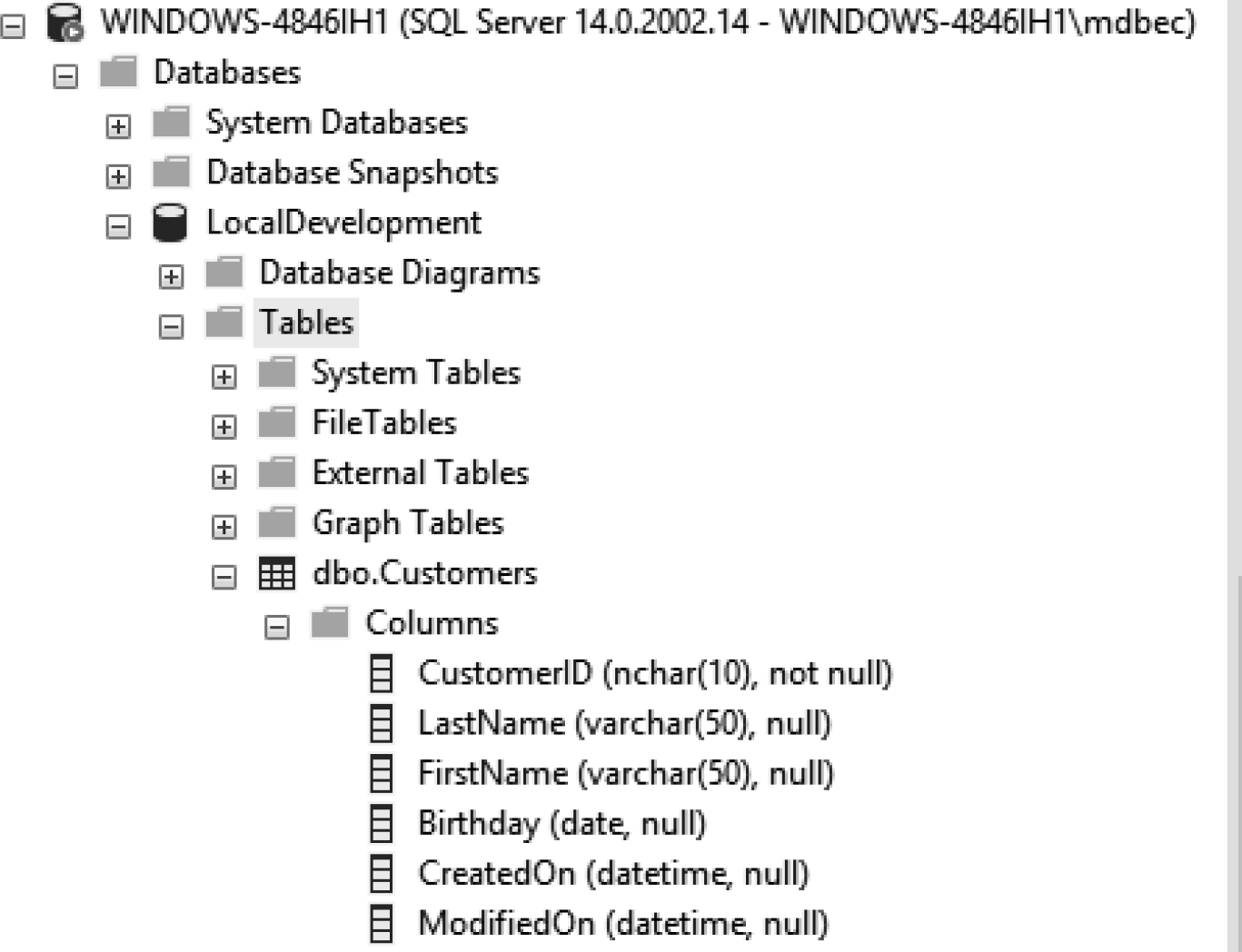

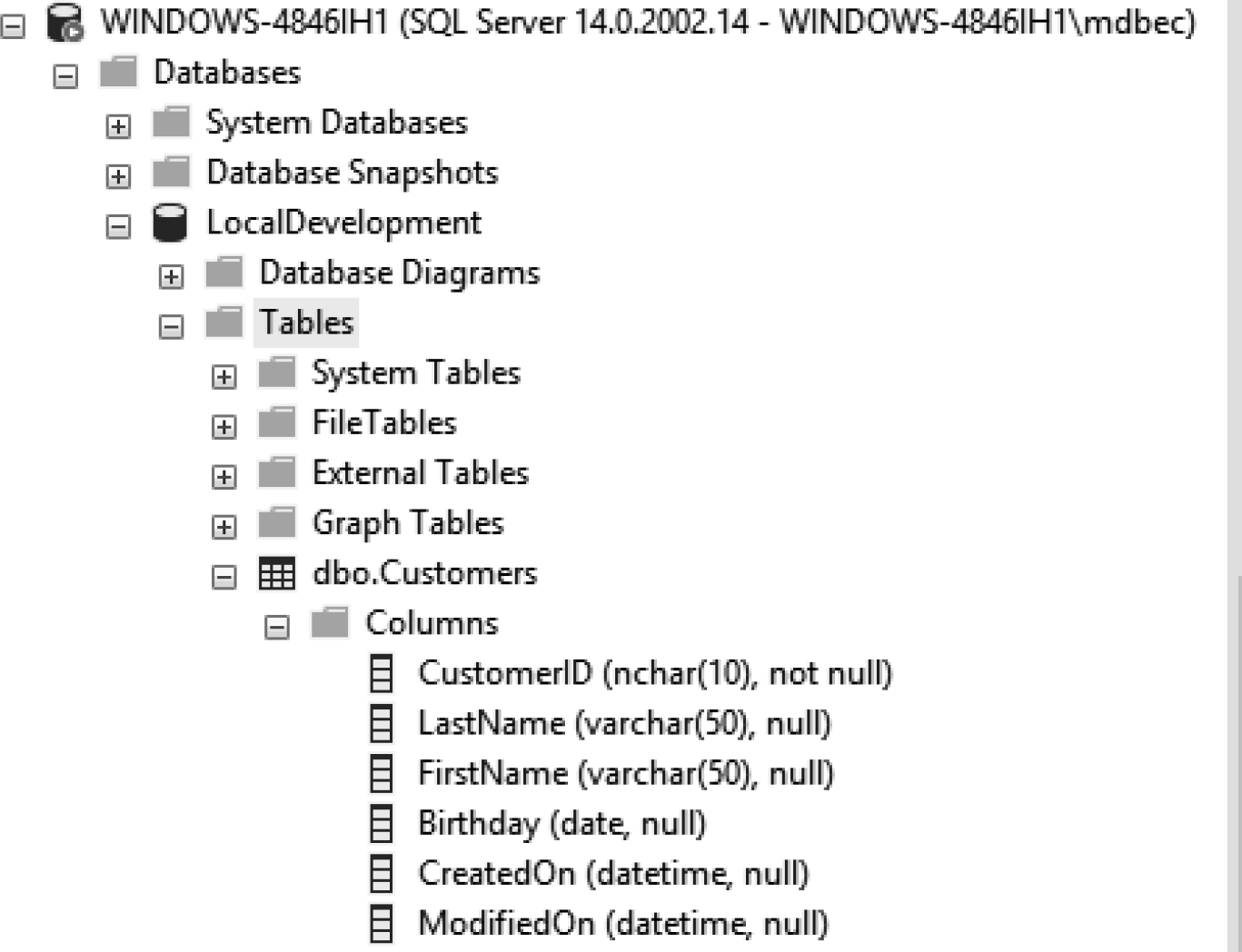

To build this simple architecture, a local SQL instance will need to be in place. We'll create a single table called Customers. By putting a few records into the table, we can then use it as the base to load data into the new Azure SQL instance you create in the next section of this chapter. The table script and the script to load records into this table are shown in Listing 1.1.

Figure 1.2: Creating a table on a local SQL Server instance

Listing 1.1: The Local SQL Customers Table with Data

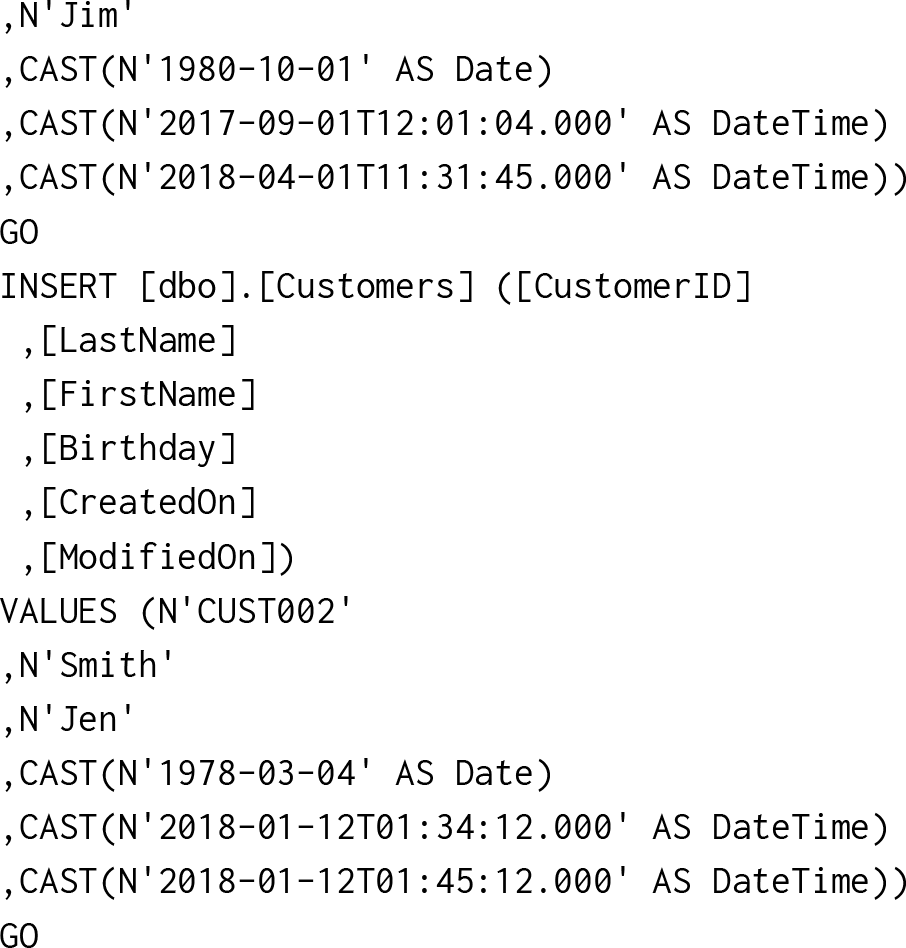

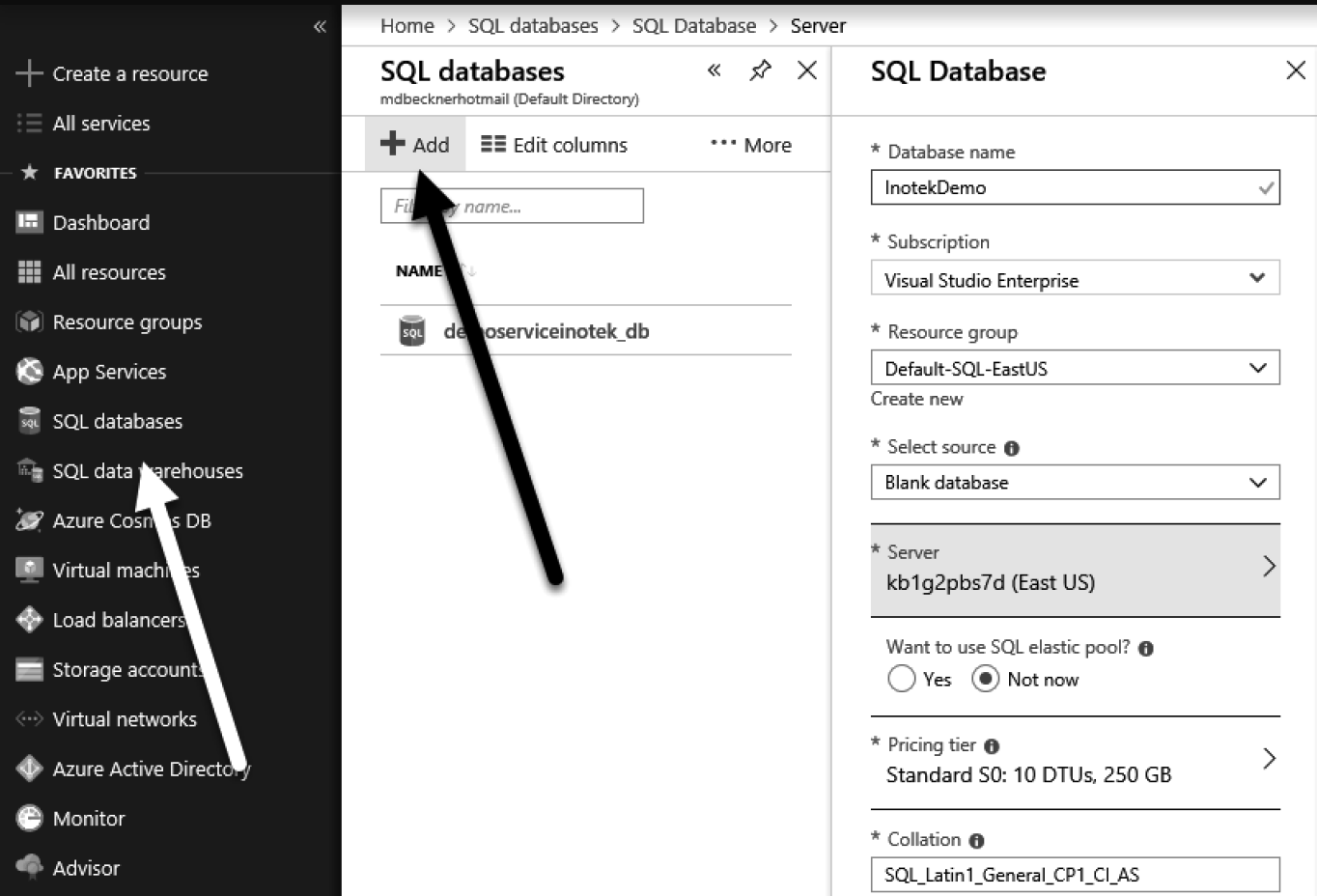
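For readers who want to experiment before a SQL Server instance is available, the shape of such a script can be approximated as follows. This is a rough sketch under stated assumptions: it uses an in-memory SQLite database rather than SQL Server, and the column names and sample rows are hypothetical placeholders, not the contents of Listing 1.1.

```python
import sqlite3

# Hypothetical schema; the actual column list is the one in Listing 1.1.
CREATE_CUSTOMERS = """
CREATE TABLE Customers (
    CustomerID INTEGER PRIMARY KEY,
    FirstName  TEXT NOT NULL,
    LastName   TEXT NOT NULL
)
"""

# Hypothetical sample records used only to seed the table.
SEED_ROWS = [
    ("John", "Doe"),
    ("Jane", "Smith"),
    ("Sam", "Jones"),
]

# In-memory SQLite stands in for the local SQL Server instance (assumption).
conn = sqlite3.connect(":memory:")
conn.execute(CREATE_CUSTOMERS)
conn.executemany(
    "INSERT INTO Customers (FirstName, LastName) VALUES (?, ?)", SEED_ROWS
)
conn.commit()

# Confirm the seed data landed before using the table as a copy source.
row_count = conn.execute("SELECT COUNT(*) FROM Customers").fetchone()[0]
first_row = conn.execute(
    "SELECT CustomerID, FirstName, LastName FROM Customers ORDER BY CustomerID"
).fetchone()
```

Checking the row count after seeding is a cheap way to confirm that the source table is ready before any copy process is pointed at it.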

Creating an Azure SQL Database

Now we'll create an Azure SQL database. It will contain a single table called Contacts. To begin with, this Contacts table will contain its own record, separate from data in any other location, but it will eventually be populated with the copied data from the local SQL database. To create the Azure database, you'll need to log into the Azure portal.
