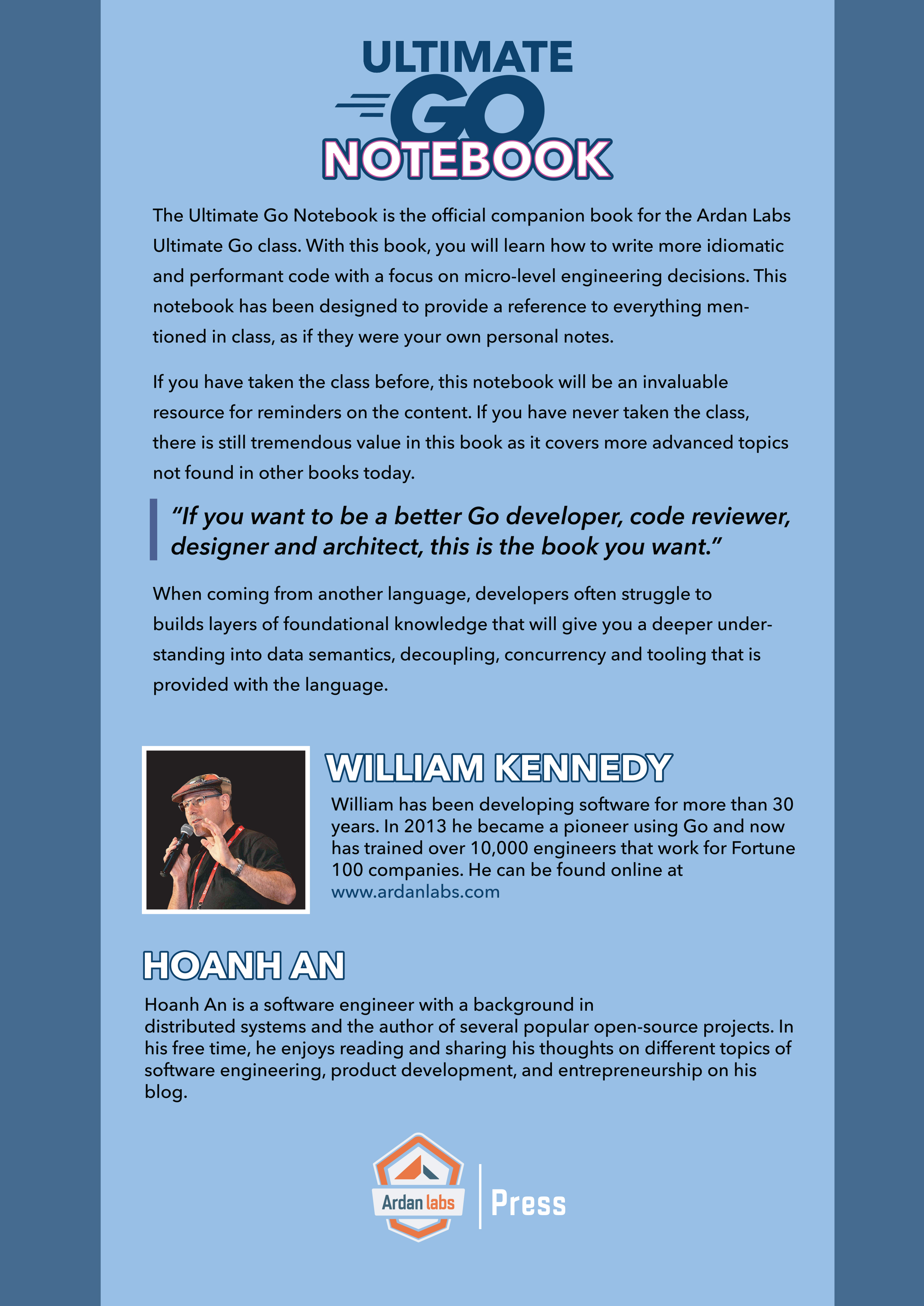

William Kennedy - Ultimate Go Notebook

Year: 2021. Genre: Computer.




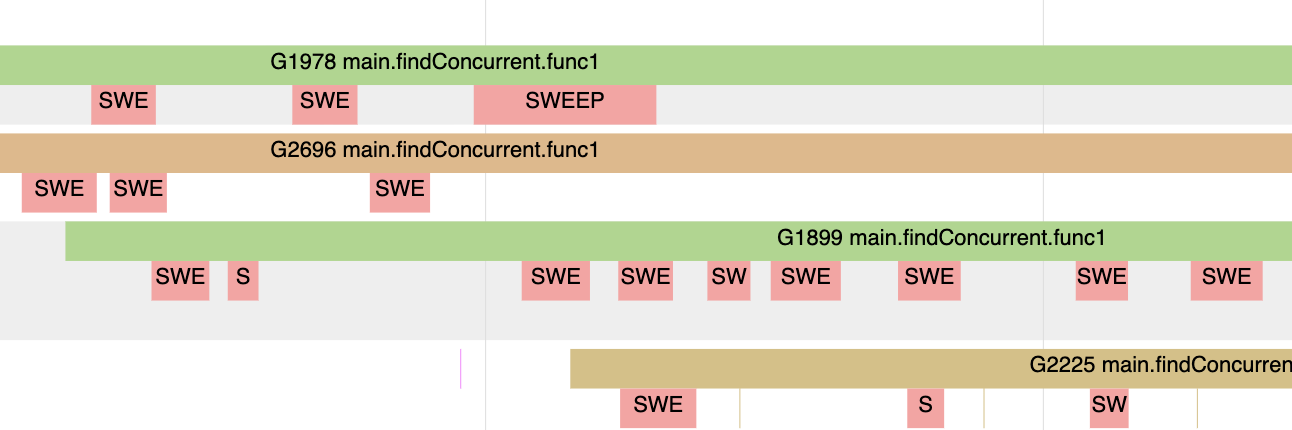

Figure 18

Figure 18 shows the STW latency, where all the Goroutines are stopped. This happens twice on every collection. If your application is healthy, the collector should be able to keep the total STW time at or below 100 microseconds for the majority of collections.
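The STW time per collection can be read back from the runtime. The following is a minimal sketch, not taken from the book: it forces a collection with `runtime.GC` and reads the most recent entry of `runtime.MemStats.PauseNs`, which holds the sum of all pauses in a cycle. The helper name `lastPause` is an assumption made for illustration.

```go
package main

import (
	"fmt"
	"runtime"
	"time"
)

// lastPause forces a collection and returns the number of completed
// collections along with the total stop-the-world pause time the
// runtime recorded for the most recent one.
func lastPause() (uint32, time.Duration) {
	runtime.GC() // force a collection so there is at least one pause to read

	var ms runtime.MemStats
	runtime.ReadMemStats(&ms)

	// PauseNs is a circular buffer. The runtime documents the most
	// recent entry as living at index (NumGC+255)%256.
	pause := time.Duration(ms.PauseNs[(ms.NumGC+255)%256])
	return ms.NumGC, pause
}

func main() {
	n, pause := lastPause()
	fmt.Printf("collections: %d, last STW pause: %v\n", n, pause)

	// The 100 microsecond threshold is the one quoted in the text.
	if pause > 100*time.Microsecond {
		fmt.Println("last pause exceeded 100 microseconds")
	}
}
```

A forced collection on an almost empty heap is not representative of a loaded application, so treat the number as a way to locate the statistic rather than as a benchmark.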

You now know the different phases of the collector, how memory is sized, how pacing works, and the different latencies the collector inflicts on your running application. With all that knowledge, the question of how you can be sympathetic with the collector can finally be answered.

Being Sympathetic

Being sympathetic with the collector is about reducing stress on heap memory.

Remember, stress can be defined as how fast the application is allocating heap memory within a given amount of time. When stress is reduced, the latencies being inflicted by the collector will be reduced. It's the GC latencies that are slowing down your application. The way to reduce GC latencies is by identifying and removing unnecessary allocations from your application. Doing this will help the collector to:

* Maintain the smallest heap possible.
* Stay within the goal for every collection.
* Minimize the duration of every collection, STW and Mark Assist.

All these things help reduce the amount of latency the collector will inflict on your running application. That will increase the performance and throughput of your application. The pace of the collection has nothing to do with it.

Conclusion

If you take the time to focus on reducing allocations, you are doing what you can as a Go developer to be sympathetic with the garbage collector. You are not going to write zero-allocation applications, so it's important to recognize the difference between allocations that are productive (those helping the application) and those that are not productive (those hurting the application). Then put your faith and trust in the garbage collector to keep the heap healthy and your application running consistently.
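One way to spot a non-productive allocation is to count allocations for two versions of the same work. The sketch below is an illustration rather than an example from the book: `joinConcat`, `joinBuilder`, `allocsFor`, and `sampleWords` are hypothetical names, and `testing.AllocsPerRun` is used here outside of a test simply as a convenient counter.

```go
package main

import (
	"fmt"
	"strings"
	"testing"
)

// sink keeps results reachable so the compiler cannot discard the work.
var sink string

// joinConcat builds the result with +=, which allocates a new string
// on nearly every iteration. The intermediate strings are garbage.
func joinConcat(words []string) string {
	var s string
	for _, w := range words {
		s += w
	}
	return s
}

// joinBuilder sizes a strings.Builder once up front, so the only heap
// allocation is the buffer that becomes the final result.
func joinBuilder(words []string) string {
	n := 0
	for _, w := range words {
		n += len(w)
	}
	var b strings.Builder
	b.Grow(n)
	for _, w := range words {
		b.WriteString(w)
	}
	return b.String()
}

// allocsFor reports the average number of heap allocations per call.
func allocsFor(join func([]string) string, words []string) float64 {
	return testing.AllocsPerRun(100, func() { sink = join(words) })
}

// sampleWords returns a hypothetical input of 100 short strings.
func sampleWords() []string {
	words := make([]string, 100)
	for i := range words {
		words[i] = "gopher"
	}
	return words
}

func main() {
	words := sampleWords()
	fmt.Println("concat allocs per call: ", allocsFor(joinConcat, words))
	fmt.Println("builder allocs per call:", allocsFor(joinBuilder, words))
}
```

Both functions return the same string. The allocation for the final result is productive, while the intermediate strings created by `joinConcat` only add stress to the heap.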

Having a garbage collector is a nice tradeoff. I will take the cost of garbage collection so I don't have the burden of memory management. Go is about allowing you as a developer to be productive while still writing applications that are fast enough. The garbage collector is a big part of making that a reality.

Pacing

The collector has a pacing algorithm which is used to determine when a collection is to start. The algorithm depends on a feedback loop that the collector uses to gather information about the running application and the stress the application is putting on the heap.
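The stress the feedback loop responds to is an allocation rate, so it can be approximated from inside a program. The sketch below is a rough illustration only: `allocRate` and `allocateMiB` are hypothetical helpers, and the measurement covers every Goroutine in the process, not just the work passed in.

```go
package main

import (
	"fmt"
	"runtime"
	"time"
)

// sink keeps the buffers reachable so they are allocated on the heap.
var sink [][]byte

// allocRate runs work and returns the bytes of heap memory allocated
// while it ran, together with the rate in bytes per second.
func allocRate(work func()) (uint64, float64) {
	var before, after runtime.MemStats

	runtime.ReadMemStats(&before)
	start := time.Now()
	work()
	elapsed := time.Since(start)
	runtime.ReadMemStats(&after)

	// TotalAlloc is cumulative and never decreases, so the difference
	// is the heap memory allocated by the process during work.
	bytes := after.TotalAlloc - before.TotalAlloc
	return bytes, float64(bytes) / elapsed.Seconds()
}

// allocateMiB is a hypothetical workload that allocates n buffers of 1 MiB.
func allocateMiB(n int) {
	sink = sink[:0]
	for i := 0; i < n; i++ {
		sink = append(sink, make([]byte, 1<<20))
	}
}

func main() {
	bytes, rate := allocRate(func() { allocateMiB(64) })
	fmt.Printf("allocated %d bytes, about %.0f bytes/sec\n", bytes, rate)
}
```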

Stress can be defined as how fast the application is allocating heap memory within a given amount of time. It's that stress that determines the pace at which the collector needs to run. Before the collector starts a collection, it calculates the amount of time it believes it will take to finish the collection. Then, once a collection is running, latencies will be inflicted on the running application that will slow down application work. Every collection adds to the overall latency of the application.

One misconception is thinking that slowing down the pace of the collector is a way to improve performance. The idea is that if you can delay the start of the next collection, then you are delaying the latency it will inflict. Being sympathetic with the collector isn't about slowing down the pace.

You could decide to change the GC Percentage value to something larger than 100. This will increase the amount of heap memory that can be allocated before the next collection has to start, which could slow down the pace of collection. Don't consider doing this.
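To make the effect of the GC Percentage concrete, here is a sketch of the arithmetic only, not a recommendation to change the value. It assumes a simplified model in which the next collection starts once the heap has grown by the given percentage over the live heap; newer Go releases factor in additional inputs. The function name and the 4 MB live heap are hypothetical. In a real program the value is set through the `GOGC` environment variable or `debug.SetGCPercent`.

```go
package main

import "fmt"

// nextCollectionHeap returns the heap size in MB at which the next
// collection would start under the simplified model
// goal = live + live*gcPercent/100.
func nextCollectionHeap(liveHeapMB float64, gcPercent int) float64 {
	return liveHeapMB * (1 + float64(gcPercent)/100)
}

func main() {
	const liveHeapMB = 4 // hypothetical live heap after the last collection

	for _, pct := range []int{50, 100, 200} {
		goal := nextCollectionHeap(liveHeapMB, pct)
		fmt.Printf("GC Percentage %3d: next collection at %.0f MB\n", pct, goal)
	}
}
```

A larger percentage only moves the trigger further out. It does nothing about the allocations that are filling the heap.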

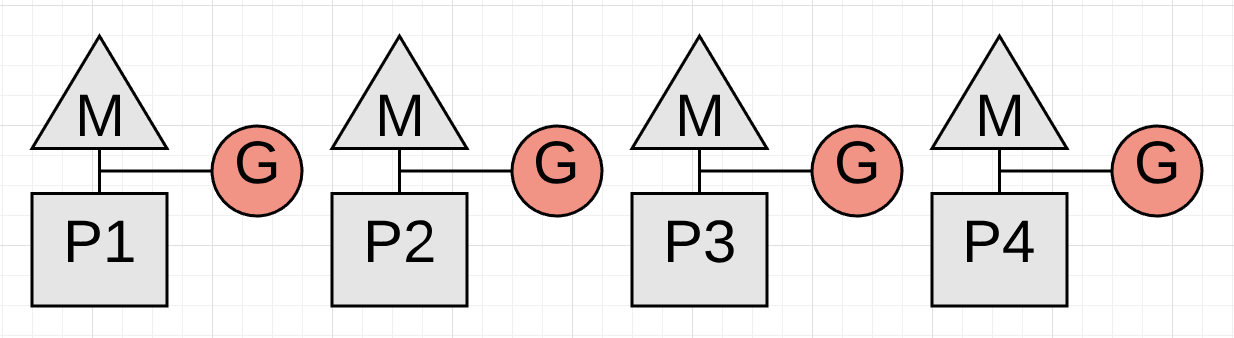

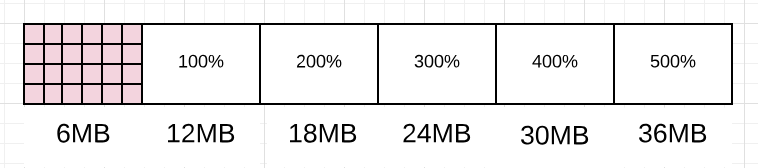

Figure 14

Figure 14 shows how changing the GC Percentage would change the amount of heap memory allowed to be allocated before the next collection has to start. You can visualize how the collector could be slowed down as it waits for more heap memory to become in-use.

Attempting to directly affect the pace of collection has nothing to do with being sympathetic with the collector. It's really about getting more work done between each collection or during the collection. You affect that by reducing the amount or the number of allocations any piece of work is adding to heap memory.

Note: The idea is also to achieve the throughput you need with the smallest heap possible.

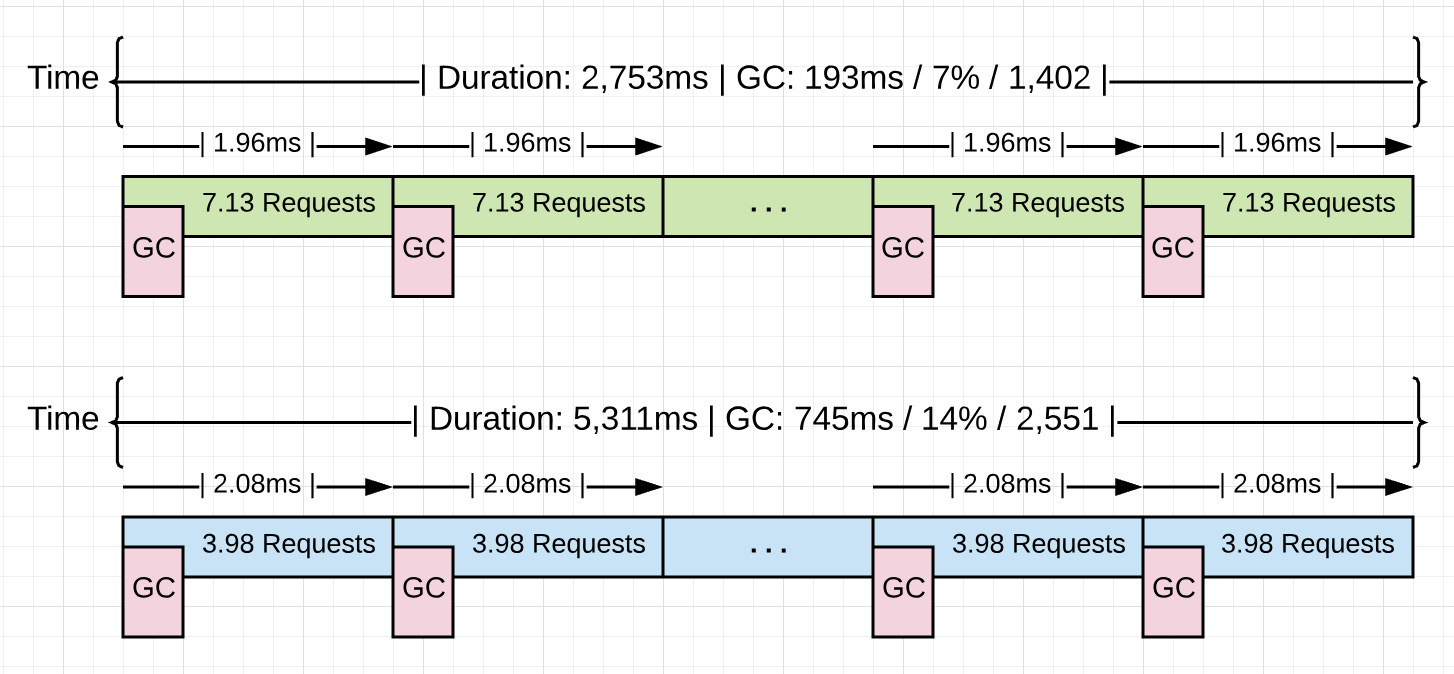

Remember, minimizing the use of resources like heap memory is important when running in cloud environments.

Figure 15

Figure 15 shows some statistics of a running Go application. The version in blue shows stats for the application without any optimizations when 10k requests are processed through the application. The version in green shows stats after 4.48GB of non-productive memory allocations were found and removed from the application for the same 10k requests.

Look at the average pace of collection for both versions (2.08ms vs 1.96ms). They are virtually the same, at around 2.0ms.

What fundamentally changed between these two versions is the amount of work that is getting done between each collection. The application went from processing 3.98 to 7.13 requests per collection. That is a 79.1% increase in the amount of work getting done at the same pace. As you can see, the collection did not slow down with the reduction of those allocations, but remained the same. The win came from getting more work done in between each collection. Adjusting the pace of the collection to delay the latency cost is not how you improve the performance of your application.
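The 79.1% figure follows directly from the two requests-per-collection values quoted above. A small sketch of that arithmetic, with `percentIncrease` as a hypothetical helper name:

```go
package main

import "fmt"

// percentIncrease returns how much larger after is than before,
// expressed as a percentage of before.
func percentIncrease(before, after float64) float64 {
	return (after - before) / before * 100
}

func main() {
	// 3.98 and 7.13 are the requests per collection quoted in the text.
	fmt.Printf("%.1f%% more requests per collection\n", percentIncrease(3.98, 7.13))
	// prints "79.1% more requests per collection"
}
```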

Improving performance is about reducing the amount of time the collector needs to run, which in turn will reduce the amount of latency cost being inflicted. The latency costs inflicted by the collector have been explained, but let me summarize them again for clarity.

Collector Latency Costs

There are two types of latencies every collection inflicts on your running application. The first is the stealing of CPU capacity. The effect of this stolen CPU capacity is that your application is not running at full throttle during the collection. The application Goroutines are now sharing Ps with the collector's Goroutines or helping with the collection (Mark Assist).

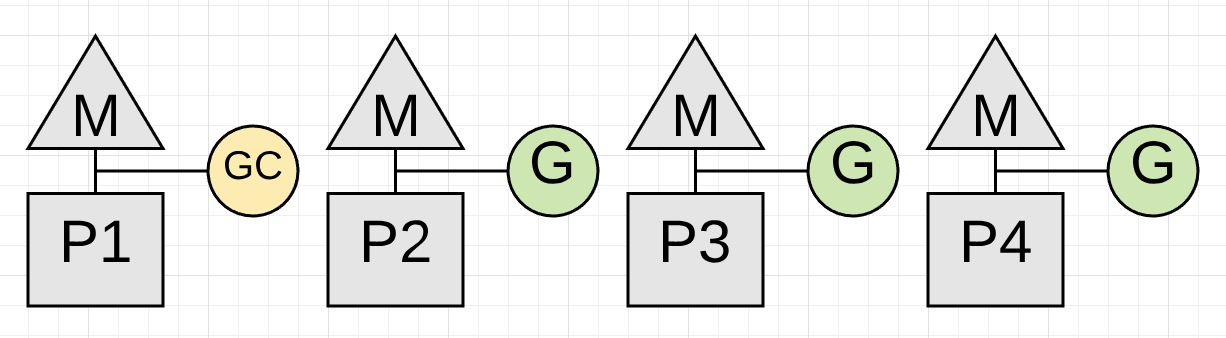

Figure 16

Figure 16 shows how the application is only using 75% of its CPU capacity for application work. This is because the collector has dedicated P1 for itself. This is true for the majority of the collection.
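The 75% figure is simple arithmetic over the Ps. The sketch below assumes a machine with four Ps, which is what one dedicated P leaving 75% implies; `appCapacity` is a hypothetical helper. The same function covers the moment when one more P is diverted into a Mark Assist.

```go
package main

import "fmt"

// appCapacity returns the percentage of Ps left for application work
// when some Ps are dedicated to the collector and some are running
// Goroutines that have been diverted into a Mark Assist.
func appCapacity(totalPs, dedicated, assisting int) float64 {
	return float64(totalPs-dedicated-assisting) / float64(totalPs) * 100
}

func main() {
	// Assumption: four Ps in total.
	fmt.Println(appCapacity(4, 1, 0)) // the collector holds one P
	fmt.Println(appCapacity(4, 1, 1)) // one more P is busy with a Mark Assist
}
```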

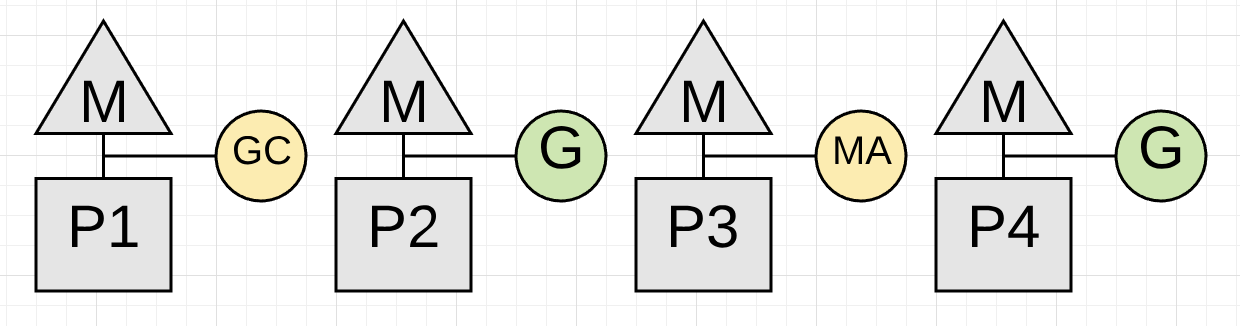

Figure 17

Figure 17 shows how the application at this moment in time (typically for just a few microseconds) is now only using half of its CPU capacity for application work. This is because the Goroutine on P3 is performing a Mark Assist and the collector has dedicated P1 for itself.

Note: Marking usually takes 4 CPU-milliseconds per MB of live heap (e.g., to estimate how many milliseconds the Marking phase will run for, take the live heap size in MB and divide by 0.25 * the number of CPUs).
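The rule of thumb in the note can be written as a one-line function. This is only a sketch of that estimate: `estimateMarkMS` is a hypothetical name, and the 100 MB live heap and 4 CPUs are made-up inputs.

```go
package main

import "fmt"

// estimateMarkMS applies the note's rule of thumb: live heap size in
// MB divided by (0.25 * number of CPUs) gives the approximate Marking
// time in milliseconds.
func estimateMarkMS(liveHeapMB float64, numCPU int) float64 {
	return liveHeapMB / (0.25 * float64(numCPU))
}

func main() {
	// Hypothetical inputs: a 100 MB live heap on a 4 CPU machine.
	fmt.Printf("estimated Marking time: %.0f ms\n", estimateMarkMS(100, 4))
}
```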

Marking actually runs at about 1 MB/ms, but only has a quarter of the CPUs.

The second latency that is inflicted is the amount of STW latency that occurs during the collection. The STW time is when no application Goroutines are performing any of their application work. The application is essentially stopped.

Figure 8
