Manoj Kukreja - Data Engineering with Apache Spark, Delta Lake, and Lakehouse: Create scalable pipelines that ingest, curate, and aggregate complex data in a timely and secure way

Year: 2021, publisher: Packt Publishing, genre: Computer.

- Book: Data Engineering with Apache Spark, Delta Lake, and Lakehouse: Create scalable pipelines that ingest, curate, and aggregate complex data in a timely and secure way

- Author: Manoj Kukreja

- Publisher: Packt Publishing

- Genre: Computer

- Year: 2021

- Rating:4 / 5

Data Engineering with Apache Spark, Delta Lake, and Lakehouse: Create scalable pipelines that ingest, curate, and aggregate complex data in a timely and secure way: summary, description and annotation

Understand the complexities of modern-day data engineering platforms and explore strategies to deal with them with the help of use case scenarios led by an industry expert in big data

Key Features

- Become well-versed with the core concepts of Apache Spark and Delta Lake for building data platforms

- Learn how to ingest, process, and analyze data that can be later used for training machine learning models

- Understand how to operationalize data models in production using curated data

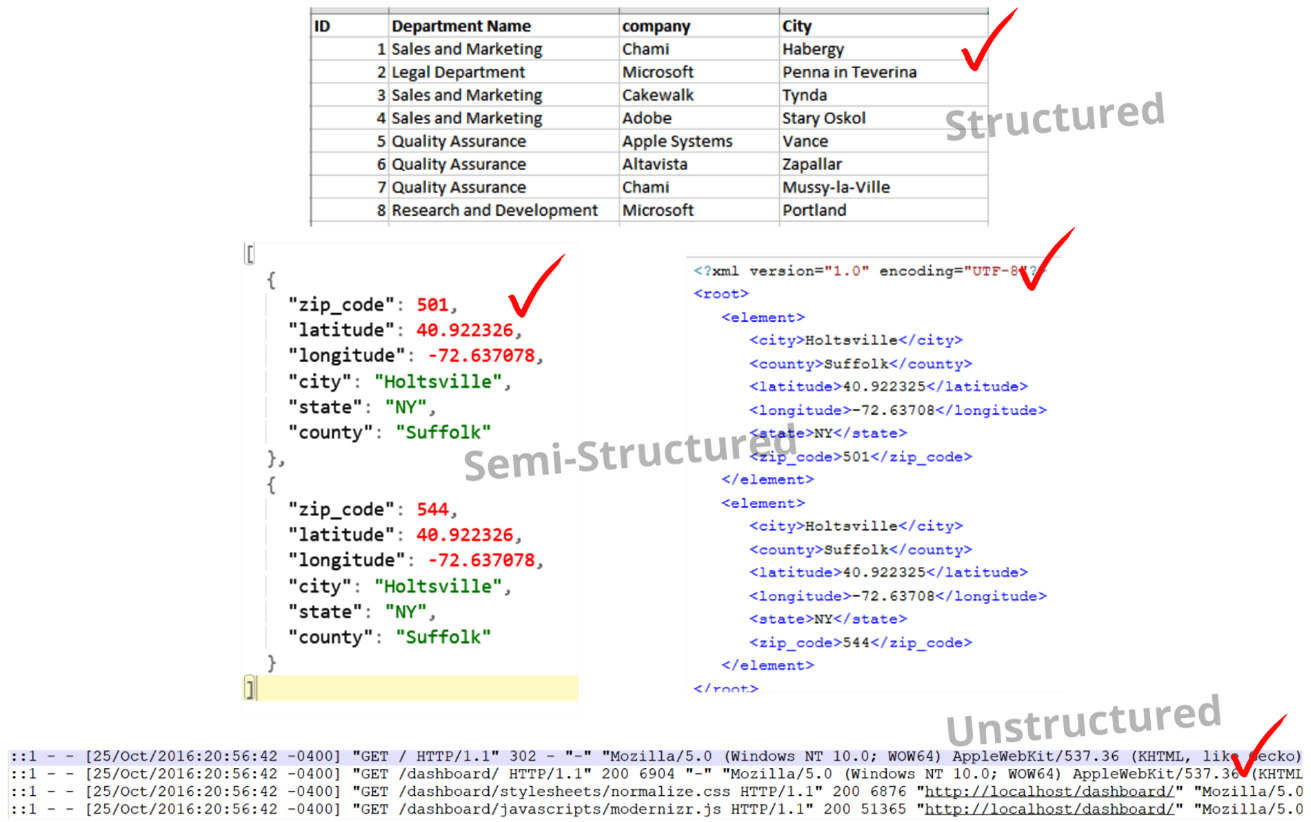

In the world of ever-changing data and schemas, it is important to build data pipelines that can auto-adjust to changes. This book will help you build scalable data platforms that managers, data scientists, and data analysts can rely on.

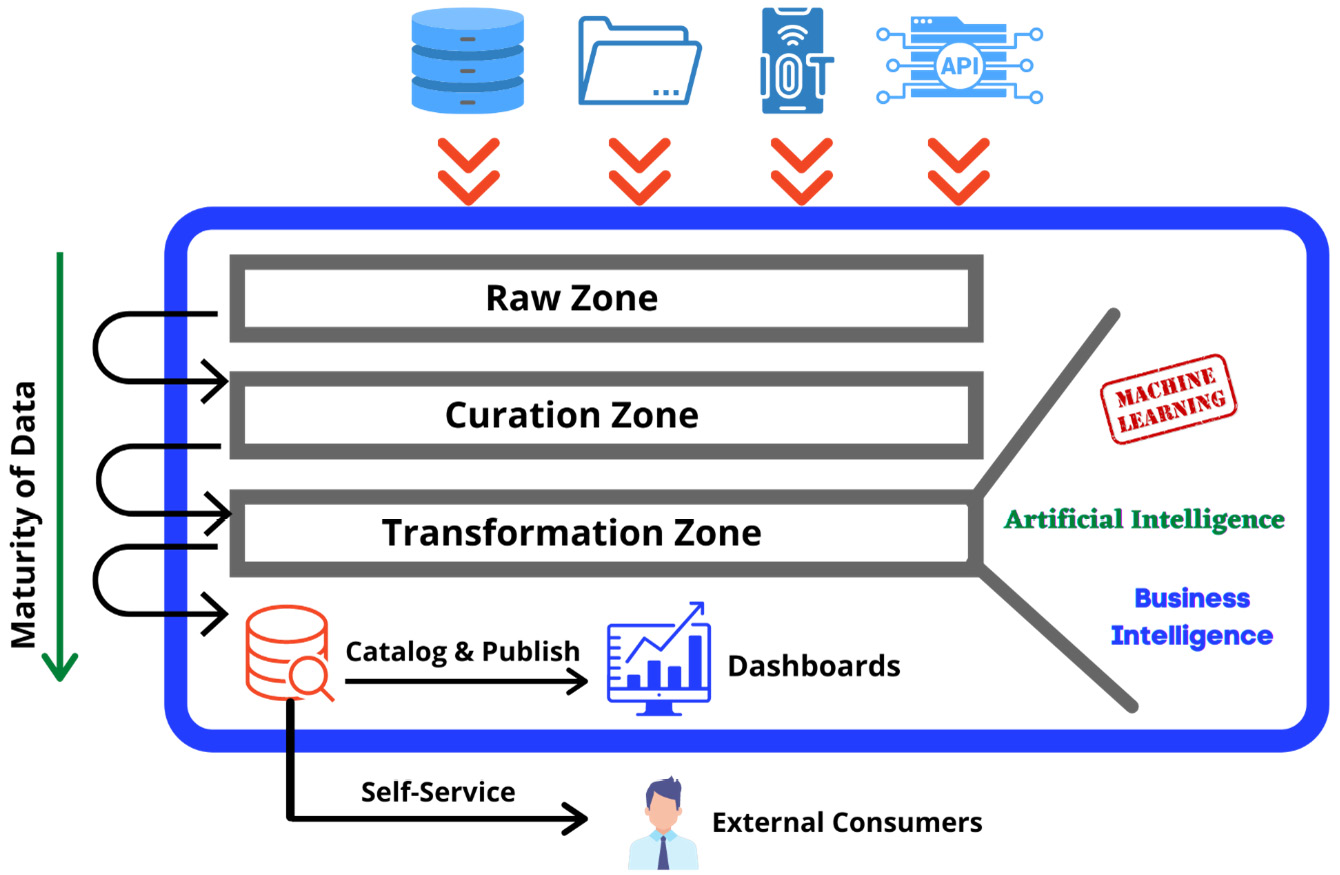

Starting with an introduction to data engineering, along with its key concepts and architectures, this book will show you how to use Microsoft Azure Cloud services effectively for data engineering. You'll cover data lake design patterns and the different stages through which the data needs to flow in a typical data lake. Once you've explored the main features of Delta Lake to build data lakes with fast performance and governance in mind, you'll advance to implementing the lambda architecture using Delta Lake. Packed with practical examples and code snippets, this book takes you through real-world examples based on production scenarios faced by the author in his 10 years of experience working with big data. Finally, you'll cover data lake deployment strategies that play an important role in provisioning the cloud resources and deploying the data pipelines in a repeatable and continuous way.

By the end of this data engineering book, you'll know how to effectively deal with ever-changing data and create scalable data pipelines to streamline data science, ML, and artificial intelligence (AI) tasks.

What you will learn

- Discover the challenges you may face in the data engineering world

- Add ACID transactions to Apache Spark using Delta Lake

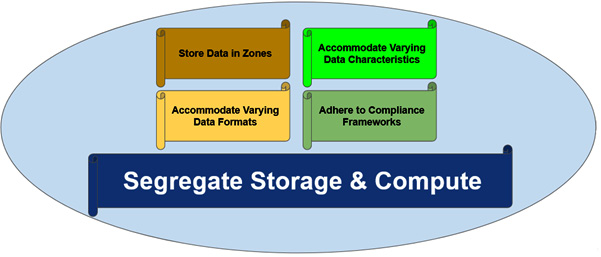

- Understand effective design strategies to build enterprise-grade data lakes

- Explore architectural and design patterns for building efficient data ingestion pipelines

- Orchestrate a data pipeline for preprocessing data using Apache Spark and Delta Lake APIs

- Automate deployment and monitoring of data pipelines in production

- Get to grips with securing, monitoring, and managing data pipelines efficiently

This book is for aspiring data engineers and data analysts who are new to the world of data engineering and are looking for a practical guide to building scalable data platforms. If you already work with PySpark and want to use Delta Lake for data engineering, you'll find this book useful. Basic knowledge of Python, Spark, and SQL is expected.

Table of Contents

- The Story of Data Engineering and Analytics

- Discovering Storage and Compute Data Lake Architectures

- Data Engineering on Microsoft Azure

- Understanding Data Pipelines

- Data Collection Stage - The Bronze Layer

- Understanding Delta Lake

- Data Curation Stage - The Silver Layer

- Data Aggregation Stage - The Gold Layer

- Deploying and Monitoring Pipelines in Production

- Solving Data Engineering Challenges

- Infrastructure Provisioning

- Continuous Integration and Deployment (CI/CD) of Data Pipelines
