Machine Learning: Foundations, Methodologies, and Applications

Series Editors

Kay Chen Tan

Department of Computing, Hong Kong Polytechnic University, Hong Kong, China

Dacheng Tao

University of Technology, Sydney, Australia

Books published in this series focus on the theory and computational foundations, advanced methodologies and practical applications of machine learning, ideally combining mathematically rigorous treatments of contemporary topics in machine learning with specific illustrations in relevant algorithm designs and demonstrations in real-world applications. The intended readership includes research students and researchers in computer science, computer engineering, electrical engineering, data science, and related areas seeking a convenient medium to track the progress made in the foundations, methodologies, and applications of machine learning.

Topics considered include all areas of machine learning, including but not limited to:

Decision trees

Artificial neural networks

Kernel learning

Bayesian learning

Ensemble methods

Dimension reduction and metric learning

Reinforcement learning

Meta learning and learning to learn

Imitation learning

Computational learning theory

Probabilistic graphical models

Transfer learning

Multi-view and multi-task learning

Graph neural networks

Generative adversarial networks

Federated learning

This series includes monographs, introductory and advanced textbooks, and state-of-the-art collections. Furthermore, it supports Open Access publication mode.

More information about this series at https://link.springer.com/bookseries/16715

Alexander Jung

Machine Learning

The Basics


Alexander Jung

Department of Computer Science, Aalto University, Espoo, Finland

ISSN 2730-9908 e-ISSN 2730-9916

Machine Learning: Foundations, Methodologies, and Applications

ISBN 978-981-16-8192-9 e-ISBN 978-981-16-8193-6

https://doi.org/10.1007/978-981-16-8193-6

The Editor(s) (if applicable) and The Author(s), under exclusive license to Springer Nature Singapore Pte Ltd. 2022

This work is subject to copyright. All rights are solely and exclusively licensed by the Publisher, whether the whole or part of the material is concerned, specifically the rights of translation, reprinting, reuse of illustrations, recitation, broadcasting, reproduction on microfilms or in any other physical way, and transmission or information storage and retrieval, electronic adaptation, computer software, or by similar or dissimilar methodology now known or hereafter developed.

The use of general descriptive names, registered names, trademarks, service marks, etc. in this publication does not imply, even in the absence of a specific statement, that such names are exempt from the relevant protective laws and regulations and therefore free for general use.

The publisher, the authors and the editors are safe to assume that the advice and information in this book are believed to be true and accurate at the date of publication. Neither the publisher nor the authors or the editors give a warranty, expressed or implied, with respect to the material contained herein or for any errors or omissions that may have been made. The publisher remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

This Springer imprint is published by the registered company Springer Nature Singapore Pte Ltd.

The registered company address is: 152 Beach Road, #21-01/04 Gateway East, Singapore 189721, Singapore

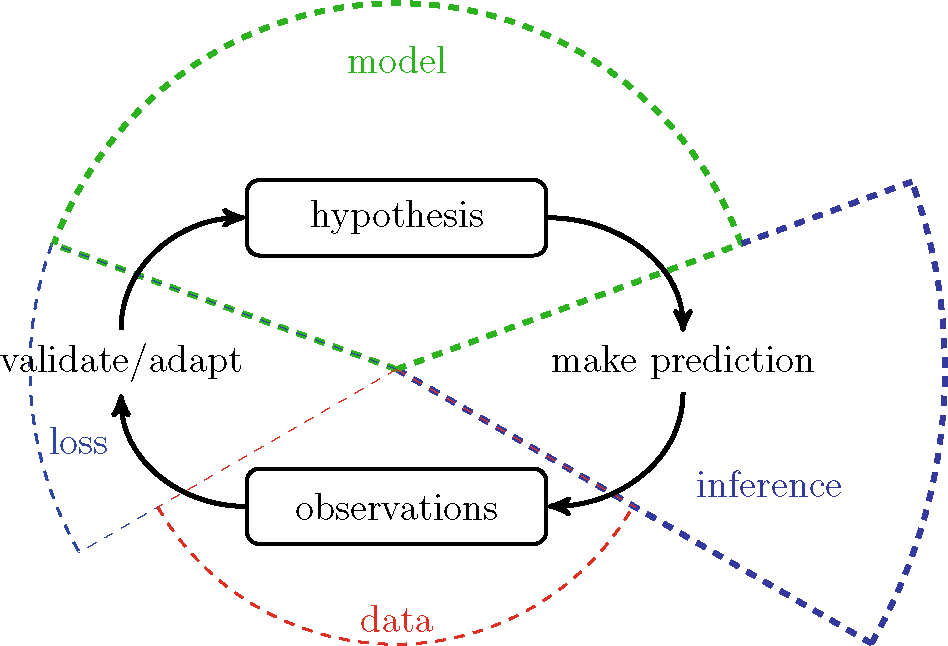

Fig. 1 Machine learning combines three main components: data, model and loss. Machine learning methods implement the scientific principle of trial and error. These methods continuously validate and refine a model based on the loss incurred by its predictions about a phenomenon that generates data.

Preface

Machine learning (ML) influences many aspects of our daily lives. We routinely ask ML-empowered smartphones to suggest lovely restaurants or to guide us through a strange place. ML methods have also become standard tools in many fields of science and engineering. ML applications transform human lives at an unprecedented pace and scale.

This book portrays ML as the combination of three basic components: data, model and loss. ML methods combine these three components within computationally efficient implementations of the basic scientific principle of trial and error. This principle consists of the continuous adaptation of a hypothesis about a phenomenon that generates data.

ML methods use a hypothesis map to compute predictions of a quantity of interest (or higher-level fact) that is referred to as the label of a data point. A hypothesis map reads in low-level properties (referred to as features) of a data point and delivers the prediction for the label of that data point. ML methods choose or learn a hypothesis map from a (typically very) large set of candidate maps. We refer to this set of candidate maps as the hypothesis space or model underlying an ML method.

The adaptation or improvement of the hypothesis is based on the discrepancy between predictions and observed data. ML methods use a loss function to quantify this discrepancy.
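The interplay of data, hypothesis map and loss can be illustrated with a minimal Python sketch. Everything in it is an assumption made for illustration only: the toy data points, the restriction to linear maps h(x) = w * x, the squared error loss, and the names `make_linear_hypothesis`, `squared_error_loss` and `average_loss` are hypothetical and not taken from the book.

```python
# Hypothetical toy dataset: each data point is a (feature, label) pair.
data = [(1.0, 2.1), (2.0, 3.9), (3.0, 6.2)]


def make_linear_hypothesis(weight):
    """Return the hypothesis map h(x) = weight * x."""
    def h(x):
        return weight * x
    return h


def squared_error_loss(prediction, label):
    """Quantify the discrepancy between a prediction and the observed label."""
    return (prediction - label) ** 2


def average_loss(h, data):
    """Average loss incurred by hypothesis h on the given data points."""
    return sum(squared_error_loss(h(x), y) for x, y in data) / len(data)


# A tiny hypothesis space (model): five candidate linear maps.
candidate_weights = [0.5, 1.0, 1.5, 2.0, 2.5]

# "Learning" here means picking the candidate with the smallest average loss.
losses = {w: average_loss(make_linear_hypothesis(w), data) for w in candidate_weights}
best_weight = min(losses, key=losses.get)
print(best_weight, losses[best_weight])
```

In this sketch the hypothesis space is a finite list that can be searched exhaustively. Practical ML methods typically work with much larger (often infinite) sets of candidate maps and therefore need more efficient search strategies.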

A plethora of different ML methods is obtained by combining different design choices for the data representation, model and loss. ML methods also differ vastly in their practical implementations, which might obscure their unifying basic principles.

Deep learning methods use cloud computing frameworks to train large models on large datasets. Operating on a much finer granularity for data and computation, linear (least squares) regression can be implemented on small embedded systems. Nevertheless, deep learning methods and linear regression use the same principle of iteratively updating a model based on the discrepancy between model predictions and actual observed data.
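The shared principle of iteratively updating a model based on the discrepancy between predictions and observed data can be sketched, in its simplest form, as gradient descent for a one-parameter linear least squares problem. The toy data, the learning rate, the number of steps and the function names `gradient_step` and `train` are assumptions chosen for illustration; the sketch uses plain Python so that it could, in principle, run on very modest hardware.

```python
# Hypothetical toy dataset of (feature, label) pairs.
data = [(1.0, 2.1), (2.0, 3.9), (3.0, 6.2)]


def gradient_step(weight, data, learning_rate):
    """One update of the weight of h(x) = weight * x.

    The gradient of the average squared error (1/m) * sum (weight*x - y)^2
    with respect to weight is (2/m) * sum (weight*x - y) * x.
    """
    grad = sum(2 * (weight * x - y) * x for x, y in data) / len(data)
    return weight - learning_rate * grad


def train(data, learning_rate=0.05, num_steps=200):
    """Repeat the update, starting from the all-zero hypothesis."""
    weight = 0.0
    for _ in range(num_steps):
        weight = gradient_step(weight, data, learning_rate)
    return weight


learned_weight = train(data)

# For this one-parameter model the least squares solution has a closed form,
# which serves as a sanity check for the iterative procedure.
closed_form_weight = sum(x * y for x, y in data) / sum(x * x for x, _ in data)
print(learned_weight, closed_form_weight)
```

Deep learning methods apply the same kind of update to models with many more parameters, usually with gradients computed on batches of data rather than on the full dataset.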

We believe that thinking about ML as combinations of three components given by data, model and loss helps to navigate the steadily growing offer of ready-to-use ML methods. Our three-component picture allows a unified treatment of ML techniques, such as early stopping, privacy-preserving ML and explainable ML, that seem quite unrelated at first sight. For example, the regularization effect of the early stopping technique in gradient-based methods is due to the shrinking of the effective hypothesis space. Privacy-preserving ML methods can be obtained by particular choices for the features used to characterize data points (see Sect. ).
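One way to get a feel for the early stopping remark is a toy experiment, sketched below under several assumptions: a one-parameter linear model h(x) = w * x, gradient descent started at w = 0 with a small learning rate, and hypothetical toy data. In this setting, the weights reachable after only a few steps stay close to zero, so stopping early effectively restricts the set of hypotheses the method can return. This is an illustration, not the book's own derivation.

```python
# Hypothetical toy dataset of (feature, label) pairs.
data = [(1.0, 2.1), (2.0, 3.9), (3.0, 6.2)]


def weights_along_training(data, learning_rate, num_steps):
    """Run gradient descent on the average squared error and record every weight."""
    weight = 0.0
    trajectory = [weight]
    for _ in range(num_steps):
        grad = sum(2 * (weight * x - y) * x for x, y in data) / len(data)
        weight -= learning_rate * grad
        trajectory.append(weight)
    return trajectory


trajectory = weights_along_training(data, learning_rate=0.01, num_steps=50)

# Stopping after 5 steps versus running all 50 steps.
early_stopped_weight = trajectory[5]
fully_trained_weight = trajectory[-1]

# The magnitude of the weight grows step by step, so a budget of k steps
# bounds the weights (and hence the linear maps) that can be reached.
print(abs(early_stopped_weight), abs(fully_trained_weight))
```

The number of steps thus plays a role similar to a constraint on the weight magnitude: a smaller budget corresponds to a smaller effective set of candidate maps.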

To make good use of ML tools it is instrumental to understand their underlying principles at the appropriate level of detail. It is typically not necessary to understand the mathematical details of advanced optimization methods to successfully apply deep learning methods. On a lower level, this tutorial helps ML engineers choose suitable methods for the application at hand. The book also offers a higher-level view on the implementation of ML methods, which is typically required to manage a team of ML engineers and data scientists.
