Part I

An Introduction to Parallelism

- Chapter 1: Parallelism Today

- Chapter 2: An Overview of Parallel Studio XE

- Chapter 3: Parallel Studio XE for the Impatient

Chapter 1

Parallelism Today

What's in This Chapter?

- How parallelism arrived and why parallel programming is feared

- Different parallel models that you can use, along with some potential pitfalls this new type of programming introduces

- How to predict the behavior of parallel programs

The introduction of multi-core processors brings a new set of challenges for the programmer. After a brief discussion of the power density race, this chapter looks at the top six parallel programming challenges. Finally, the chapter presents a number of different programming models that you can use to add parallelism to your code.

The Arrival of Parallelism

Parallelism is not new; indeed, parallel computer architectures were available in the 1950s. What is new is that parallelism is ubiquitous, available to everyone, and now in every computer.

The Power Density Race

Over recent decades, computer CPUs have become faster and more powerful; the clock speed of CPUs doubled almost every 18 months. This rise in speed led to a dramatic rise in power density. The following figure shows the power density of different generations of processors. Power density is the power dissipated per unit area of the chip; it indicates how much heat the CPU generates, heat that is usually removed by a heat sink and cooling system. If the trend of the 1990s were to continue into the twenty-first century, the heat needing to be dissipated would be comparable to that of the surface of the sun; we would be at meltdown! A tongue-in-cheek cartoon competition appeared on an x86 user-forum website in the early 1990s. The challenge was to design an alternative use for the Intel Pentium processor. The winner suggested a high-tech hot plate design using four CPUs side by side.

The power density race

Increasing CPU clock speed to get better software performance is a well-established practice. Computer game players use overclocking to make their games run faster. Overclocking means running the CPU at a clock speed above what the manufacturer specifies, so that instructions execute faster. One downside is that overclocking produces extra heat, which must be dissipated. Increasing the speed of a CPU by just a fraction can result in a chip that runs much hotter; for example, increasing a CPU clock speed by just over 20 percent almost doubles its power consumption.
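One common first-order model shows why power climbs so steeply with clock speed; it is offered here as an assumption, not as the authors' own derivation. The dynamic power of CMOS logic is roughly P ≈ C·V²·f (switched capacitance C, supply voltage V, clock frequency f), and a higher clock usually needs a proportionally higher supply voltage, so power grows roughly with the cube of frequency:

$$
P \approx C V^{2} f,\qquad V \propto f \;\Rightarrow\; P \propto f^{3}
$$

$$
\frac{P(1.20\,f)}{P(f)} \approx 1.20^{3} \approx 1.73,\qquad
\frac{P(1.26\,f)}{P(f)} \approx 1.26^{3} \approx 2.00
$$

Under this model, an overclock of about 26 percent is enough to double power consumption.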

Increasing clock speed was an important tool for silicon manufacturers. Many of the performance claims and marketing messages were based purely on clock speed. Intel and AMD leapfrogged each other to produce ever-faster chips, all of great benefit to the computer user. Eventually, as the physical limits of silicon were reached, further increases in CPU speed gave diminishing returns.

Even though CPU clock speed is no longer growing rapidly, the number of transistors in CPU designs is still growing, and the new transistors are used to supply added functionality and performance. Most recent CPU performance gains come from improved connections to external memory, improved transistor design, extra parallel execution units, wider data registers and buses, and multiple cores on one die. The 3D transistor announced in May 2011, which exhibits reduced current leakage and improved switching times while lowering power consumption, will contribute to future microarchitecture improvements.

The Emergence of Multi-Core and Many-Core Computing

Hidden in the power density race is the secret to why multi-core CPUs have become today's solution to the limits on performance.

If, instead of being overclocked, a CPU were underclocked by 20 percent, its power consumption would be almost half the original value. Putting two of these underclocked CPUs on the same die gives a total performance improvement of more than 70 percent, while consuming about the same power as the original single-core processor. The first multi-core devices consisted of two underclocked CPUs on the same chip. Reducing power consumption is one of the key ingredients in the successful design of multi-core devices.
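The arithmetic behind this trade-off can be sketched in Python. The sketch assumes the cube-law approximation P ∝ f³ (power proportional to the cube of clock frequency), which reproduces the "almost half" figure (0.8³ ≈ 0.51); the function names and the `perf_retained` input are hypothetical. If performance simply tracked the clock, two cores at 80 percent would give only a 60 percent gain, so a gain of more than 70 percent implies each underclocked core keeps more than 85 percent of its full-speed performance.

```python
# A sketch of the dual-core trade-off, ASSUMING dynamic power scales with the
# cube of clock frequency (P ∝ f^3). Real chips deviate from this model.


def relative_power(clock_scale: float) -> float:
    """Power of one core at clock_scale × nominal frequency, relative to nominal."""
    return clock_scale ** 3


def dual_core(clock_scale: float, perf_retained: float) -> tuple[float, float]:
    """Return (power, performance) of two cores relative to one full-speed core.

    perf_retained is an ASSUMED input: the fraction of full-speed performance
    each underclocked core keeps. It can exceed clock_scale because code that
    waits on memory does not slow down in proportion to the clock.
    """
    return 2 * relative_power(clock_scale), 2 * perf_retained


if __name__ == "__main__":
    print(f"one core at 80% clock: {relative_power(0.8):.3f}x power")
    # Pessimistic case: performance falls exactly in step with the clock.
    power, perf = dual_core(0.8, perf_retained=0.8)
    print(f"two cores, linear scaling: {power:.3f}x power, {perf:.2f}x performance")
    # A total speedup above 1.7x needs each core to keep more than 1.7 / 2 of its speed.
    print(f"per-core performance needed for 1.7x: {1.7 / 2:.2f}")
```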

Gordon E. Moore observed that the number of transistors that can be placed on an integrated circuit doubles about every two years, an observation famously known as Moore's Law. Today, those transistors are being used to add more cores. The current trend is for the number of cores in a CPU to double about every 18 months. Future devices, likely to have dozens of cores, are referred to as many-core.
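The 18-month doubling trend can be written as N(t) = N₀ · 2^(t/1.5), where N₀ is the starting core count and t is measured in years. The short Python sketch below applies it; the function name `projected_cores` and the starting point of two cores (the first dual-core devices) are illustrative assumptions, and the trend itself is an observation, not a guarantee.

```python
def projected_cores(initial_cores: int, years: float,
                    doubling_period_years: float = 1.5) -> float:
    """Project a core count under an ASSUMED constant doubling period (default 18 months)."""
    return initial_cores * 2 ** (years / doubling_period_years)


if __name__ == "__main__":
    # Starting from a dual-core CPU, project the core count after 3, 6 and 9 years.
    for years in (3, 6, 9):
        print(f"after {years} years: {projected_cores(2, years):.0f} cores")
```

Six years at this rate turns two cores into 32, in line with the expectation of dozens of cores per device.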

It is already possible to buy an ordinary PC that supports many hardware threads. For example, the workstation used to test some of the example programs in this book supports 24 parallel execution paths by having:

- A two-socket motherboard

- Six-core Xeon CPUs

- Hyper-Threading, in which some of the internal electronics of each core are duplicated to double the number of hardware threads that can be supported
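The figure of 24 comes from multiplying the three factors in the list: 2 sockets × 6 cores × 2 hardware threads per core. The Python sketch below shows that arithmetic (the function name `hardware_threads` is hypothetical) and uses the standard library's `os.cpu_count()`, which reports the number of logical processors, to check the count on whatever machine runs it.

```python
import os


def hardware_threads(sockets: int, cores_per_socket: int, threads_per_core: int) -> int:
    """Logical processors seen by the OS: sockets × cores per socket × threads per core."""
    return sockets * cores_per_socket * threads_per_core


if __name__ == "__main__":
    # The test workstation described above: 2 sockets, six-core Xeons, Hyper-Threading.
    print(hardware_threads(2, 6, 2))
    # Logical processors on this machine; os.cpu_count() returns None if unknown.
    print(os.cpu_count())
```

C and C++ programmers can get the same logical-processor count from `std::thread::hardware_concurrency()`, which may return 0 when the value is not available.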

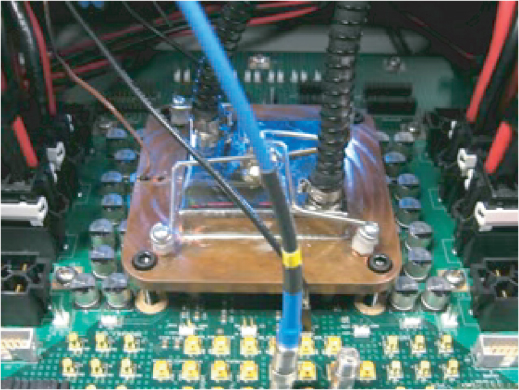

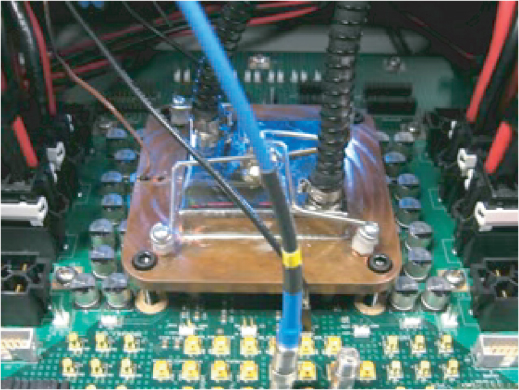

One of Intel's first many-core devices was the Intel Teraflop Research Chip. The processor, which came out of Intel's research facilities, had 80 cores and could deliver one teraflop, that is, one trillion floating-point calculations per second. The device was demonstrated to the public in 2007. As the following figure shows, the heat sink is quite small, an indication that despite its huge processing capability, the chip is energy efficient.

The 80-core Teraflop Research Chip

There is a huge difference in power consumption between the lower and higher clock speeds; the following table provides sample values. At one teraflop of performance (1 × 10¹² floating-point calculations per second), the chip uses 62 watts; to reach 1.81 teraflops, it uses 265 watts, more than four times as much.

Power-to-Performance Relationship of the Teraflop Research Chip

| Clock Speed (GHz) | Power (Watts) | Performance (Teraflops) |
|---|---|---|
| 3.16 | 62 | 1.01 |
| 5.1 | 175 | 1.63 |
| 5.7 | 265 | 1.81 |
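Dividing performance by power turns the table into an energy-efficiency figure. The Python sketch below uses only the three rows above; the names `ROWS` and `gflops_per_watt` are hypothetical, and GFLOPS per watt is a metric chosen here for illustration.

```python
# Rows from the Teraflop Research Chip table: (clock GHz, power W, teraflops).
ROWS = [
    (3.16, 62, 1.01),
    (5.1, 175, 1.63),
    (5.7, 265, 1.81),
]


def gflops_per_watt(power_w: float, teraflops: float) -> float:
    """Billions of floating-point operations per second delivered per watt."""
    return teraflops * 1000 / power_w


if __name__ == "__main__":
    _, base_w, base_tf = ROWS[0]  # lowest clock speed is the baseline
    for ghz, watts, tf in ROWS:
        print(f"{ghz:4.2f} GHz: {gflops_per_watt(watts, tf):5.1f} GFLOPS/W, "
              f"{watts / base_w:4.2f}x power for {tf / base_tf:4.2f}x performance")
```

The highest clock speed needs about 4.3 times the power for about 1.8 times the performance, so efficiency falls from roughly 16 to under 7 GFLOPS per watt.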

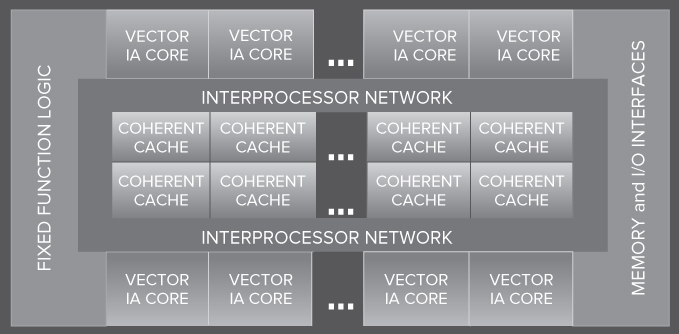

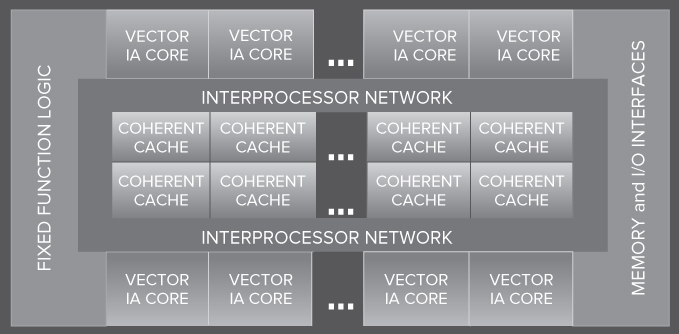

The Intel Many Integrated Core Architecture (MIC) captures the essentials of Intel's current many-core strategy (see the following figure). The cores are connected to one another by an internal network. A 32-core preproduction version of such devices is already available.

Intel's many-core architecture

Many programmers are still operating with a single-core computing mind-set and have not taken up the opportunities that multi-core programming brings.

For some programmers, the divide between what is available in hardware and what the software is doing is closing; for others, the gap is getting bigger.

Adding parallelism to programs requires new skills, knowledge, and the appropriate software development tools. This book introduces Intel Parallel Studio XE, a software suite that helps C/C++ and Fortran programmers make the transition from serial to parallel programming. Parallel Studio XE is designed to help the programmer in all phases of parallel code development.