
CHAPTER 6

Fundamental Quantitative Analysis for Systems

I have worked for many managers with a wide range of quantitative abilities. I have learned from them all. One of the managers took a moment to sit with me when he first arrived to describe features that would make his job easier. He first described how full his calendar is every day, and how utterly random the subject of each meeting is when looked at in total. He said he can sometimes spend up to the first 15 minutes of a meeting trying to shift mental gears, but it is easier when he has some graphs that serve as a focusing aid. He requested a few components be included in a presentation:

- How many items are there? Tens, hundreds, thousands?

- How long is the processing time? Hours, days, months, years?

- What direction is good? Is the goal to increase or decrease the count?

- How do these numbers compare to where we were last week, last month, and last year?

These requests were easy to accommodate, and I was effective in spreading the request to other analysts. It communicated that his approach to decision making was steady and disciplined. It also served as a good foundation for doing quantitative analysis.

Being a data scientist is like being an offensive lineman: no one notices you until you do something wrong! There are fundamental aspects that must always be followed such as being disciplined in approach and rigorous in execution. Many people play fast and loose with data. The world of data science needs to be more structured so that truthful and useful solutions are achieved. Sometimes extenuating situations can scuttle an endeavor, but if the analysis was fundamentally sound, the analysis can be extended to solve related issues.

This chapter is broken into three parts: a discussion of exploratory tools, an introduction to the basic tool kit for quality analysis, and three technical sections that highlight fundamentals for descriptive statistics, inferential statistics, and modern tools for the analysis of big data. There are many good textbooks that provide in-depth knowledge for how to apply these tools. There are also many software packages to aid with this analysis that include extensive training modules to support mastery. The intent herein is to demonstrate how and when quantitative analysis approaches can be used.

Exploratory data analysis

The most exciting phrase to hear in science, the one that heralds new discoveries, is not "Eureka!" (I found it!), but "That's funny..."

Isaac Asimov

As with all scientific endeavors, performing an analysis opens the door to the unknown. This is also true for data analysis. The writings of John Tukey are fascinating. His article with Martin Wilk is a must read to understand the fundamentals of data science circa 1965. Warning: we still have not mastered the key points that they described! It is truly fascinating to read what the great thinkers expected to come from the computer revolution. They would be shocked by how much time ends up being wasted on the web versus actually doing work. Tukey coined the term "Exploratory Data Analysis" in the title of his 1977 book, which provides the following gems of wisdom:

Exploratory data analysis can never be the whole story, but nothing else can serve as the foundation stone as the first step...Unless exploratory data analysis uncovers indications, usually quantitative ones, there is likely to be nothing for confirmatory data analysis to consider. (page 3)

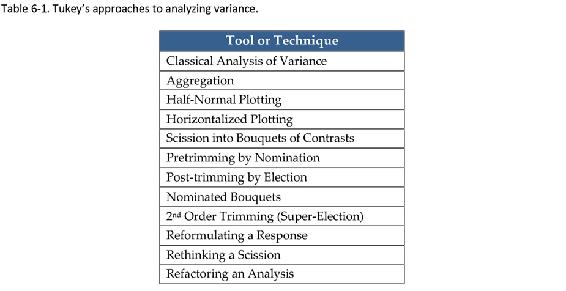

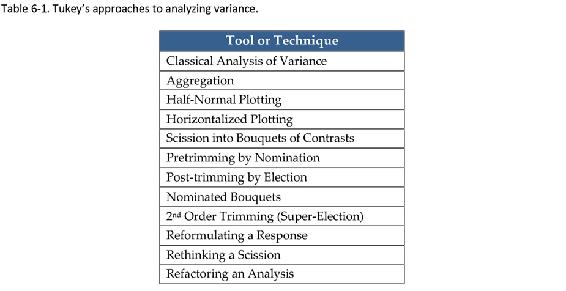

He was a world-class data scientist before the computer age. He figured out how to make powerful visualizations using typewriters and found patterns and trends using only an inquiring mind. One of his most powerful tools was the box-and-whisker plot, shown in Figure 4-5. Much has been written on the analysis of measures of central tendency (including in this book), but he was focused on analyzing variance in 1987, shown in Table 6-1. When analytics tools are rolled out, most of these techniques are not addressed. It is obvious that they are still rafts in the iceberg-filled sea. There is much yet to be learned. As big data are a new development, expect there to be many new tools unveiled each year for the foreseeable future.

The first big targets for exploration are granularity and decomposition. Data collected over time or space must be chunked to be processed, and the finer the divisions, the longer the processing takes. Data can be decomposed along many lines and hierarchies as dictated by the system itself. Software tools exist to help with these initial steps. It is an easy analysis because one simply follows the Voice of the Data and lets its natural course show which fields are the most important.

Table 6-1. Tukey's approaches to analyzing variance.

The strategy is also called "unpeeling the onion" because each layer is unfolded, straightened, and reviewed in order to learn what is unique about that layer. The process slows when additional layers are investigated, because those discoveries must be joined with the knowledge acquired from the previous layers. Once this decomposition is finished, the data scientist is buried in an avalanche of knowledge that must be prioritized and presented for action. The output of this exploration is frequently named a multi-vari study and is often presented as an appendix to the study. It usually works best to present this appendix to each stakeholder individually so that free and open discussions can occur.
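The layer-by-layer idea can be sketched in a few lines of Python. This is only a minimal illustration, not a full multi-vari study: the record layout (plant, line, processing hours), the values, and the helper name `summarize` are all hypothetical and were chosen just to show how each added layer of the hierarchy exposes something the previous layer hid.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical records: (plant, line, processing_hours).
# Field names and values are illustrative assumptions only.
records = [
    ("A", "line1", 10.0), ("A", "line1", 12.0),
    ("A", "line2", 20.0), ("A", "line2", 22.0),
    ("B", "line1", 11.0), ("B", "line1", 13.0),
    ("B", "line2", 12.0), ("B", "line2", 14.0),
]

def summarize(records, key_len):
    """Group by the first key_len fields; return {key: (count, mean)}."""
    groups = defaultdict(list)
    for rec in records:
        groups[rec[:key_len]].append(rec[-1])
    return {key: (len(vals), mean(vals)) for key, vals in groups.items()}

overall = summarize(records, 0)   # layer 0: all data together
by_plant = summarize(records, 1)  # layer 1: split by plant
by_line = summarize(records, 2)   # layer 2: split by plant and line

for layer in (overall, by_plant, by_line):
    for key, (count, avg) in sorted(layer.items()):
        print(key, count, avg)
```

In this made-up data the overall mean hides the fact that plant A runs slower than plant B, and the plant-level means in turn hide that the difference comes almost entirely from one line in plant A. Each layer has to be read together with the ones before it, which is why the process slows as it goes deeper.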

What about missing data points?

They are missing. There are approaches to accommodate them that border on magic and voodoo, but the bottom line is you do not know what you do not know. The only correct way to handle missing data points is to show wider error bars and a lower level of confidence, so that decisions are made with the added uncertainty acknowledged. If a data point falls below the detection limit, take a two-phased approach: first report the count of measurable data (an attribute), and then report that count as a percentage of completeness. Finally, descriptive statistics can be applied to the subset that is reported as having measured values. This is often seen on Election Night when the talking heads report that candidate X has a 10-point lead with 67% of the precincts reporting. Inferences can be made about the missing data, but a winner cannot be named until those votes are counted. However, if you are in the realm of large numbers, then a few missing data points will not be as influential. If there is a chance that data will be lost, then sampling frequency should be increased as a hedge. The tools of big data make this easier than it was in the old days when people used stubby yellow pencils and Big Chief tablets.
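The two-phased reporting described above might look like the following sketch. The readings are invented for illustration, and using `None` to mark a missing or below-detection-limit value is an assumption of this example. Nothing is imputed: the code reports how complete the data are and then summarizes only what was actually measured.

```python
import statistics

# Hypothetical readings; None marks a missing or below-detection-limit value.
readings = [4.1, None, 3.8, 4.4, None, 4.0, 3.9, None, 4.3]

measured = [r for r in readings if r is not None]

# Phase 1: count of measurable data, then percentage of completeness.
n_total = len(readings)
n_measured = len(measured)
completeness_pct = 100.0 * n_measured / n_total

# Phase 2: descriptive statistics on the measured subset only.
subset_mean = statistics.mean(measured)
subset_stdev = statistics.stdev(measured)

print(f"{n_measured} of {n_total} measured ({completeness_pct:.0f}% complete)")
print(f"mean of measured values: {subset_mean:.2f}")
print(f"sample standard deviation: {subset_stdev:.2f}")
```

Reporting the completeness figure next to the statistics plays the same role as "67% of the precincts reporting": the reader sees both the result and how much of the picture it rests on.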

Descriptive statistics

Descriptive statistics are used most often during the quantitative analysis of a system. They are like knives to a chef. There are many kinds of software to use depending on where the data resides and the intensity of the analysis to be performed. There are four parameters that are captured when the descriptive statistics are generated: central tendency, variation, distribution, and ranking. The technical term is univariate analysis.

Central tendency

There are three values that are used to describe the center of a data set. The mean, or average, is the sum of the values divided by the number of values. The median is the midpoint, with one half of the values above it and one half below. The mode is the most frequently occurring value. These three values can differ for the same data set. Sometimes the values that correspond to the 25th and 75th percentiles are also captured to bound the center half of the data.
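As a small worked example, the measures of center can be computed with Python's standard `statistics` module. The processing times below are hypothetical, and the "inclusive" quantile method is one of several common percentile conventions, so other software may report slightly different quartiles.

```python
import statistics

# Hypothetical processing times in days; the values are illustrative only.
processing_days = [2, 3, 3, 4, 5, 5, 5, 7, 9, 21]

mean = statistics.mean(processing_days)      # sum divided by count
median = statistics.median(processing_days)  # midpoint of the sorted values
mode = statistics.mode(processing_days)      # most frequently occurring value

# quantiles(n=4) returns the three quartile cut points; the first and last
# are the 25th and 75th percentiles that bound the center half of the data.
q1, _, q3 = statistics.quantiles(processing_days, n=4, method="inclusive")

print(f"mean={mean}, median={median}, mode={mode}")
print(f"25th percentile={q1}, 75th percentile={q3}")
```

Here the mean (6.4) sits above the median and mode (both 5) because the single 21-day item pulls the average upward, which is one way the three values can disagree for the same data set.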
