Architecting HBase Applications

by Jean-Marc Spaggiari and Kevin O'Dell

Copyright 2015 Jean-Marc Spaggiari and Kevin O'Dell. All rights reserved.

Printed in the United States of America.

Published by O'Reilly Media, Inc., 1005 Gravenstein Highway North, Sebastopol, CA 95472.

O'Reilly books may be purchased for educational, business, or sales promotional use. Online editions are also available for most titles (http://safaribooksonline.com). For more information, contact our corporate/institutional sales department: 800-998-9938 or corporate@oreilly.com.

- Editor: Marie Beaugureau

- Interior Designer: David Futato

- Cover Designer: Karen Montgomery

- Illustrator: Rebecca Demarest

- September 2015: First Edition

Revision History for the First Edition

- 2015-08-25: First Release

See http://oreilly.com/catalog/errata.csp?isbn=9781491915813 for release details.

While the publisher and the authors have used good faith efforts to ensure that the information and instructions contained in this work are accurate, the publisher and the authors disclaim all responsibility for errors or omissions, including without limitation responsibility for damages resulting from the use of or reliance on this work. Use of the information and instructions contained in this work is at your own risk. If any code samples or other technology this work contains or describes is subject to open source licenses or the intellectual property rights of others, it is your responsibility to ensure that your use thereof complies with such licenses and/or rights.

978-1-491-91581-3

[LSI]

Chapter 1. Underlying storage engine - Description

The first use case that will be examined is from Omneo, a division of Camstar, a Siemens company. Omneo is a big data analytics platform that assimilates data from disparate sources to provide a 360-degree view of product quality data across the supply chain. Manufacturers of all sizes are confronted with massive amounts of data, and manufacturing data sets exhibit the key attributes of Big Data: they are high volume, rapidly generated, and come in many varieties. When tracking products built through a complex, multi-tier supply chain, the challenges are exacerbated by the lack of a centralized data store and the absence of a unified data format. Omneo ingests data from all areas of the supply chain, such as manufacturing, test, assembly, repair, service, and field.

Omneo offers this system to their end customers under a Software as a Service (SaaS) model. The platform must give users the ability to investigate product quality issues, analyze contributing factors, and identify items for containment and control. Offering a rapid turnaround for early detection and correction of problems can result in greatly reduced costs and significantly improved consumer brand confidence. Omneo must start by building a unified data model that links the data sources so users can explore the factors that impact product quality throughout the product lifecycle. Furthermore, Omneo has to provide a centralized data store and applications that facilitate the analysis of all product quality data in a single, unified environment.

Omneo evaluated numerous NoSQL systems and other data platforms. Omneo's parent company, Camstar, has been in business for over 30 years, giving them a well-established IT operations system. When Omneo was created, they were given carte blanche to build their own system. Knowing the daunting task of handling all of the data at hand, they decided against building a traditional enterprise data warehouse (EDW). They also looked at other big data technologies such as Cassandra and MongoDB, but ended up selecting Hadoop as the foundation for the Omneo platform. The primary reason for the decision came down to the ecosystem, or the lack thereof in the other technologies. The fully integrated ecosystem that Hadoop offered with MapReduce, HBase, Solr, and Impala allowed Omneo to handle the data in a single platform without the need to migrate the data between disparate systems.

The solution must be able to handle data from numerous products and customers being ingested and processed on the same cluster. This can make handling data volumes and sizing quite precarious, as one customer could provide eighty to ninety percent of the total records. At the time of writing, Omneo hosts multiple customers on the same cluster, for a rough count of more than 6 billion records stored on approximately 50 nodes. The total combined set of data in HDFS is approximately 100 TB. These figures are important to keep in mind: as we get into the overall architecture of the system, we will note where duplicating data is mandatory and where savings can be introduced by using a unified data format.

Omneo has fully embraced the Hadoop ecosystem for their overall architecture, so it only makes sense for the architecture to also take advantage of Hadoop's Avro data serialization system. Avro is a popular file format for storing data in the Hadoop world. Avro allows a schema to be stored with the data, making it easier for different processing systems such as MapReduce, HBase, and Impala/Hive to access the data without serializing and deserializing it over and over again.
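To make the idea of a schema traveling with the data more concrete, here is a minimal sketch of what an Avro record schema looks like. Avro schemas are written in JSON, and an Avro data file carries the schema text in its header. The record name, namespace, and field names below are hypothetical and are not taken from Omneo's actual data model; the sketch uses only the Python standard library rather than an Avro library.

```python
import json

# Hypothetical Avro record schema (what an .avsc file would contain).
# All names here are illustrative assumptions, not Omneo's real model.
PRODUCT_EVENT_SCHEMA = {
    "type": "record",
    "name": "ProductEvent",
    "namespace": "example.quality",
    "fields": [
        {"name": "serial_number", "type": "string"},
        {"name": "stage", "type": "string"},
        {"name": "timestamp_ms", "type": "long"},
        # A union with "null" marks the field as optional.
        {"name": "result", "type": ["null", "string"], "default": None},
    ],
}


def schema_json(schema):
    """Render the schema as the JSON text that is stored alongside the data."""
    return json.dumps(schema, sort_keys=True)


def field_names(schema_text):
    """Recover the field names from the schema text alone."""
    return [field["name"] for field in json.loads(schema_text)["fields"]]


if __name__ == "__main__":
    print(field_names(schema_json(PRODUCT_EVENT_SCHEMA)))
```

Because the schema text is all a reader needs in order to interpret the records, any system that can read the file can discover the fields without outside documentation.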

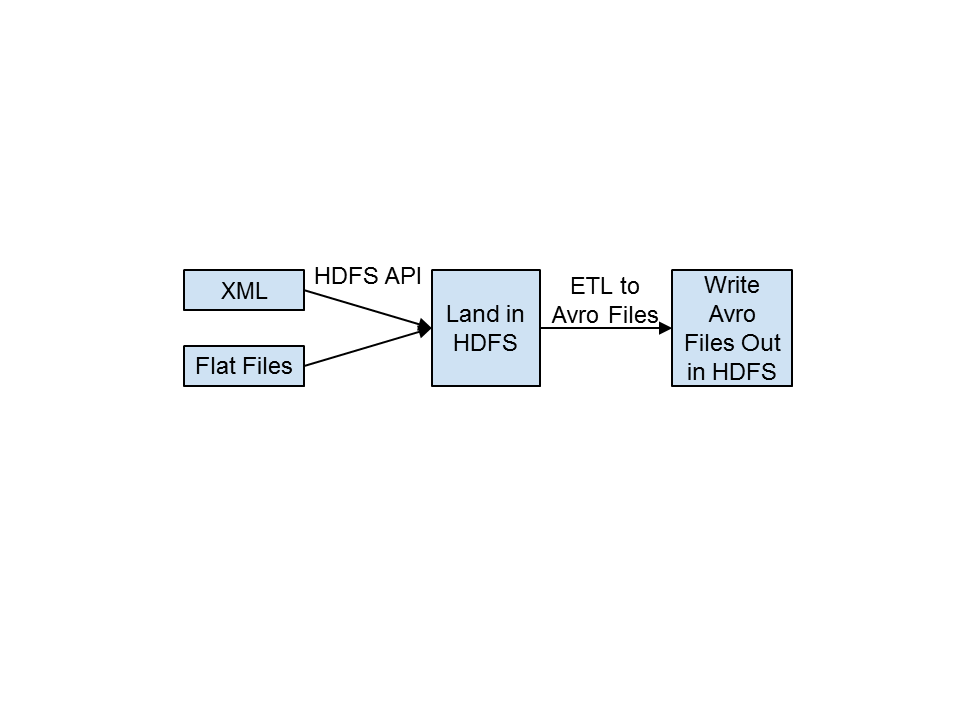

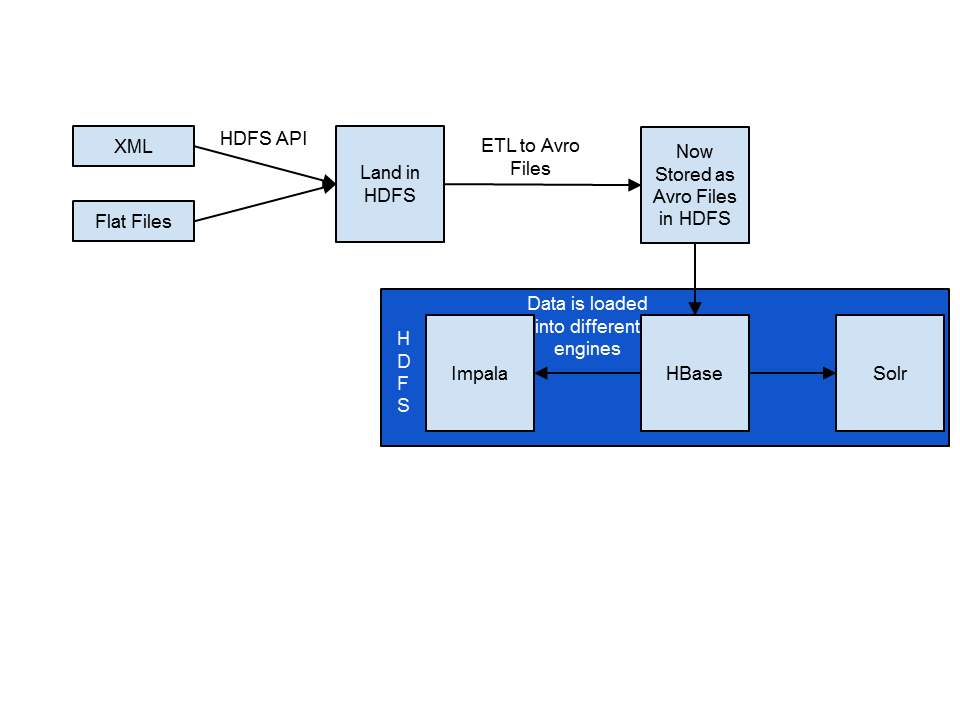

The high level Omneo architecture is shown below:

Ingest/pre-processing

Processing/Serving

User Experience

Ingest/pre-processing

Figure 1-1. Batch ingest using HDFS API

The ingest/pre-processing phase includes acquiring the flat files, landing them in HDFS, and converting the files into Avro. As noted in the above diagram, Omneo currently receives all files in a batch manner. The files arrive in either CSV or XML format and are loaded into HDFS through the HDFS API. Once the files are loaded into Hadoop, a series of transformations is performed to join the relevant data sets together. Most of these joins are done based on a primary key in the data. In the case of electronic manufacturing, this is normally a serial number that identifies the product throughout its lifecycle. These transformations are all handled through the MapReduce framework. Omneo wanted to provide a graphical interface for consultants to integrate the data rather than have them code custom MapReduce jobs. To accomplish this, they partnered with Pentaho to expedite time to production. Once the data has been transformed and joined together, it is serialized into the Avro format.
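The join on a serial number can be illustrated with a small, in-memory imitation of the map and reduce steps. This is only a sketch of the general technique, assuming CSV inputs with a `serial_number` column; the column names, source labels, and sample data are hypothetical, and the real pipeline runs as MapReduce jobs built with Pentaho rather than as code like this.

```python
import csv
import io
from collections import defaultdict


def parse_csv(text):
    """Parse CSV text with a header row into a list of dict rows."""
    return list(csv.DictReader(io.StringIO(text)))


def map_phase(source, rows):
    """Emit (serial_number, (source, row)) pairs, as a mapper keyed on the join key would."""
    for row in rows:
        yield row["serial_number"], (source, row)


def reduce_phase(pairs):
    """Group pairs by serial number and merge the rows from every source into one record."""
    grouped = defaultdict(lambda: defaultdict(list))
    for serial, (source, row) in pairs:
        fields = {k: v for k, v in row.items() if k != "serial_number"}
        grouped[serial][source].append(fields)
    return {
        serial: {"serial_number": serial, **sources}
        for serial, sources in sorted(grouped.items())
    }


if __name__ == "__main__":
    # Hypothetical sample inputs from two areas of the supply chain.
    test_csv = "serial_number,result\nSN1,PASS\n"
    repair_csv = "serial_number,action\nSN1,reflow\nSN2,replace\n"
    pairs = list(map_phase("test", parse_csv(test_csv)))
    pairs += list(map_phase("repair", parse_csv(repair_csv)))
    for record in reduce_phase(pairs).values():
        print(record)
```

Each joined record gathers everything known about one serial number, which is the shape that is then serialized into Avro.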

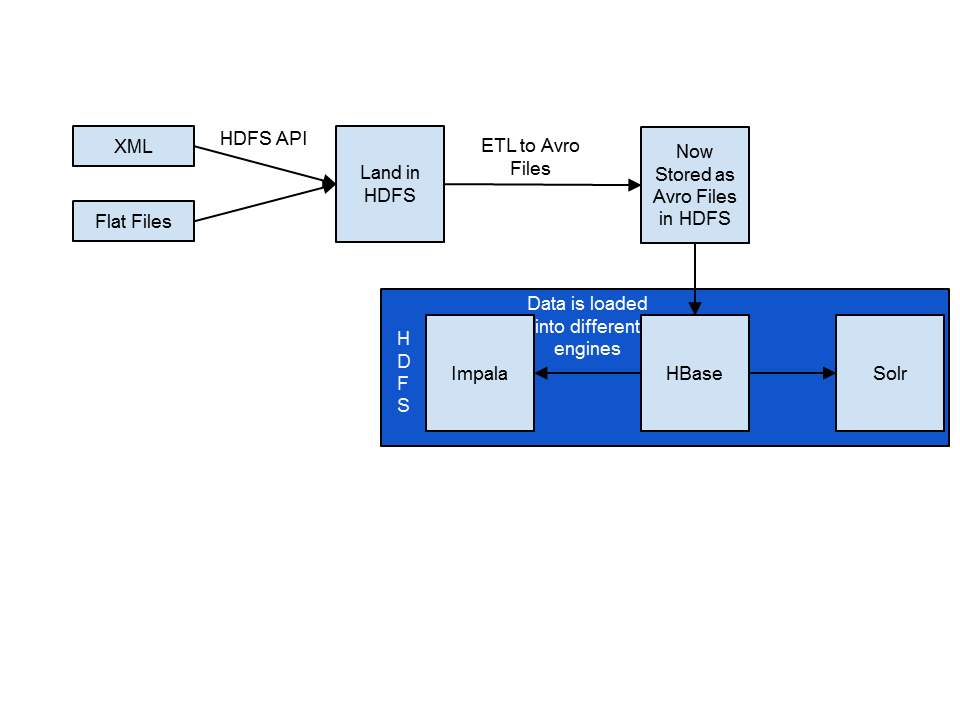

Processing/Serving

Figure 1-2. Using Avro for a unified storage format

Once the data has been converted into Avro, it is loaded into HBase. Since the data is already being presented to Omneo in batch, we take advantage of this and use bulk loads. The data is loaded into a temporary HBase table using the bulk loading tool. The previously mentioned MapReduce jobs output HFiles that are ready to be loaded into HBase, and these HFiles are loaded through the completebulkload tool. The completebulkload tool works by passing in a URL, which the tool uses to locate the files in HDFS. Next, the bulk load tool loads each file into the relevant region being served by each RegionServer. Occasionally a region has been split after the HFiles were created; in that case, the bulk load tool automatically splits the HFile according to the current region boundaries.
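The boundary handling described above can be sketched as a lookup of each row key against the sorted list of region start keys. This is a simplified illustration of the idea and not the bulk load tool's actual implementation; the function names and sample keys are hypothetical. It assumes each region covers the keys from its start key (inclusive) up to the next region's start key (exclusive), with the first region starting at the empty key.

```python
from bisect import bisect_right


def region_index(region_start_keys, row_key):
    """Return the index of the region whose [start, next_start) range holds row_key."""
    return bisect_right(region_start_keys, row_key) - 1


def split_by_region(region_start_keys, sorted_row_keys):
    """Group one file's sorted row keys by the region that currently serves them."""
    pieces = {}
    for key in sorted_row_keys:
        pieces.setdefault(region_index(region_start_keys, key), []).append(key)
    return pieces


if __name__ == "__main__":
    hfile_keys = [b"a", b"h", b"k"]

    # When the file was written, every key fell into the first region.
    print(split_by_region([b"", b"m"], hfile_keys))

    # After that region splits at b"g", the same file spans two regions
    # and has to be divided along the new boundary.
    print(split_by_region([b"", b"g", b"m"], hfile_keys))
```

A file whose keys all land in one region can be handed to that region as-is; only files that straddle a boundary need to be divided.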