Soumendra Mohanty, Madhu Jagadeesh and Harsha Srivatsa. Big Data Imperatives: Enterprise Big Data Warehouse, BI Implementations and Analytics. 10.1007/978-1-4302-4873-6_1. Soumendra Mohanty 2013

1. Big Data in the Enterprise

Humans have been generating data for thousands of years. More recently, the amount of data produced has grown remarkably, from the advent of mainframes to client-server systems to ERP, and now to everything digital. For years the overwhelming amount of data produced was deemed useless. But data has always been an integral part of every enterprise, big or small. As the importance and value of data to an enterprise became evident, data silos proliferated within the enterprise. This data was primarily structured, standardized, and heavily governed (through enterprise-wide programs, business functions, or IT). Typical volumes were in the range of a few terabytes, and in some cases compliance and regulatory requirements pushed the volumes several notches higher.

Big data is a combination of transactional data and interactive data. While technologies have mastered the art of managing volumes of transaction data, it is the interactive data that adds variety and velocity to the ever-growing data reservoir and consequently poses significant challenges to enterprises.

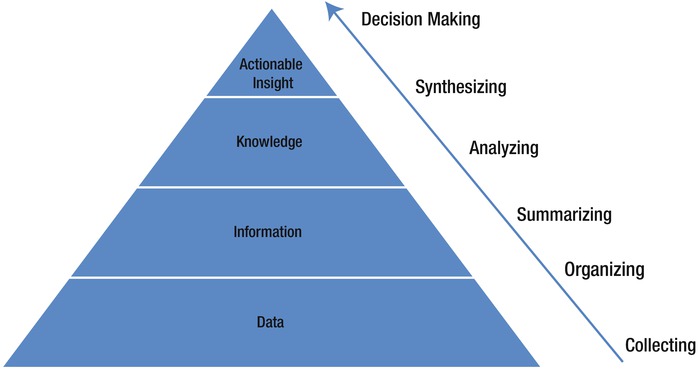

Irrespective of how data is managed within an enterprise, if it is leveraged properly, it can deliver immense business value. Figure 1-1 illustrates the value cycle of data, from raw data to decision making. In the early 2000s, the acceptance of concepts like Enterprise Data Warehouse (EDW), Business Intelligence (BI), and analytics helped enterprises transform raw data collections into actionable wisdom. Analytics applications such as customer analytics, financial analytics, risk analytics, product analytics, and health-care analytics became an integral part of the business applications architecture of any enterprise. But all of these applications dealt with only one type of data: structured data.

Figure 1-1. Transforming raw data into action-guiding wisdom

The ubiquity of the Internet has dramatically changed the way enterprises function. Essentially, almost every business became a digital business. The result was a data explosion. New application paradigms such as Web 2.0, social media applications, cloud computing, and software-as-a-service applications further contributed to the data explosion. These new application paradigms added several new dimensions to the very definition of data. Data sources for an enterprise were no longer confined to data stores within the corporate firewalls but extended to what is available outside the firewalls. Companies such as LinkedIn, Facebook, Twitter, and Netflix took advantage of these newer data sources to launch innovative product offerings to millions of end users; a new business paradigm of consumerism was born.

Data, regardless of type, location, and source, has increasingly become a core business asset for an enterprise and is now categorized as belonging to two camps: internal data (enterprise application data) and external data (e.g., web data). With that, a new term has emerged: big data. So, what is the definition of this all-encompassing arena called big data?

To start with, the definition of big data usually centers on the three Vs (exploding data volumes, data generated at high velocity, and data offering more variety); however, if you scan the Internet for a definition of big data, you will find many more interpretations. One interesting observation is that the three Vs are not the only consideration: when the scale of data poses real challenges to traditional data management principles, it can be considered a big data problem. The heterogeneous nature of big data across multiple platforms and business functions makes it difficult to manage with traditional data management principles, and no single platform or solution has answers to all the questions related to big data. On the other hand, there is still a vast trove of data within the enterprise firewalls that is unused (or underused) because it has historically been too voluminous or too raw (i.e., minimally structured) to be exploited by conventional information systems, or too costly or complex to integrate and exploit.

Big data is more a concept than a precise term. Some treat big data as a volume issue and apply the term only to petabyte-scale data collections (more than one million GB); others associate big data with the variety of data types, even if the volume is only in terabytes. These differing interpretations have made big data issues situational.

The pervasiveness of the Internet has pushed the generation and usage of data to unprecedented levels, and digitization has taken on a new meaning. The term data is now expanding to cover events captured and stored in the form of text, numbers, graphics, video, images, sound, and signals.

Table 1-1 illustrates the measures of scale of data.

Table 1-1. Measuring Big Data

1000 Gigabytes (GB) = 1 Terabyte (TB)

1000 Terabytes = 1 Petabyte (PB)

1000 Petabytes = 1 Exabyte (EB)

1000 Exabytes = 1 Zettabyte (ZB)

1000 Zettabytes = 1 Yottabyte (YB)
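The scale in Table 1-1 can be made concrete with a small conversion helper. The following is a minimal Python sketch, not part of the original text; the names `UNITS`, `STEP`, and `convert` are illustrative assumptions, and the factor of 1000 per step is taken directly from the table (decimal units, not the binary 1024-based variants).

```python
# Units in ascending order, as listed in Table 1-1.
UNITS = ["GB", "TB", "PB", "EB", "ZB", "YB"]

# Each unit is 1000 times the previous one, per Table 1-1.
STEP = 1000


def convert(value, from_unit, to_unit):
    """Convert a data size between two of the units in UNITS.

    Hypothetical helper for illustration only.
    """
    # Positive when converting to a smaller unit, negative to a larger one.
    steps = UNITS.index(from_unit) - UNITS.index(to_unit)
    if steps >= 0:
        return value * STEP ** steps
    return value / STEP ** (-steps)


# One petabyte expressed in gigabytes: two steps of 1000 each.
print(convert(1, "PB", "GB"))  # prints 1000000
```

Under these assumptions, one petabyte equals one million gigabytes, which is the threshold some practitioners use when they reserve the term big data for petabyte-scale collections.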

Is big data a new problem for enterprises? Not necessarily.

Big data has been of concern in a few select industries and scenarios for some time: physical sciences (meteorology, physics), life sciences (genomics, biomedical research), financial institutions (banking, insurance, and capital markets), and government (defense, treasury). For these industries, big data was primarily a data volume problem, and to solve these volume-related issues they relied heavily on a mash-up of custom-developed technologies and a set of complex programs to collect and manage the data. But in doing so, these industries, and the vendor products they used, generally made the total cost of ownership (TCO) of the IT infrastructure rise exponentially every year.

CIOs and CTOs have always grappled with dilemmas such as how to lower the IT costs of managing ever-increasing volumes of data, how to build systems that are scalable, how to address performance-related concerns to meet business requirements that are becoming increasingly global in scope and reach, and how to manage data security, privacy, and data quality. The poly-structured nature of big data has multiplied these concerns: how does an industry effectively utilize poly-structured data (structured data like database content, semi-structured data like log files or XML files, and unstructured content like text documents, web pages, or graphics) in a cost-effective manner?