Dr. Sunil Kumar Chinnamgari - R Machine Learning Projects


- Book: R Machine Learning Projects

- Author: Dr. Sunil Kumar Chinnamgari

- Publisher: Packt Publishing

- Year: 2019


Fraud management is a well-known and painful problem for banking and finance firms, and card-related fraud has proven especially difficult to combat. Technologies such as chip and PIN are available and are already used by most credit card system vendors, such as Visa and MasterCard. However, this technology cannot prevent all credit card fraud: scammers keep devising new phishing schemes to obtain passwords from credit card users, and devices such as skimmers make stealing credit card data a cakewalk!

Despite the technical countermeasures available, The Nilson Report, a leading publication covering payment systems worldwide, estimated that credit card fraud losses would soar to $32 billion in 2020 (https://nilsonreport.com/upload/content_promo/The_Nilson_Report_10-17-2017.pdf). To put this estimated loss in perspective, it exceeds the recent profits posted by companies such as Coca-Cola ($2 billion), Warren Buffett's Berkshire Hathaway ($24 billion), and JPMorgan Chase ($23.5 billion)!

While companies that provide credit card chip technology have invested heavily in advancing it to counter fraud, in this chapter we examine whether, and to what extent, machine learning can help address the credit card fraud problem. We will cover the following topics as we progress through this chapter:

- Machine learning in credit card fraud detection

- Autoencoders and their various types

- The credit card fraud dataset

- Building AEs with the H2O library in R

- Implementing an autoencoder for credit card fraud detection

In 1966, Professor Seymour Papert at MIT conceived an ambitious summer project titled The Summer Vision Project. The task for the graduate student was to plug a camera into a computer and enable it to understand what it sees! I am sure it would have been extremely difficult for the graduate student to finish this project, as even today the task remains only partly solved.

When human beings look around, they are able to recognize the objects that they see. Without thinking, they classify a cat as a cat, a dog as a dog, a plant as a plant, and an animal as an animal. This happens because the human brain draws on knowledge from its extensive prelearned database. After all, as human beings, we have millions of years' worth of evolutionary context that enables us to draw inferences from the things that we see. Computer vision aims to replicate these human visual processes in machines so that they can be automated.

This chapter is all about learning the theory and implementation of computer vision through machine learning (ML). We will build a feedforward deep learning network and LeNet to enable handwritten digit recognition. We will also build a project that uses a pretrained Inception-BatchNorm network to identify objects in an image. We will cover the following topics as we progress in this chapter:

- Understanding computer vision

- Achieving computer vision with deep learning

- Introduction to the MNIST dataset

- Implementing a deep learning network for handwritten digit recognition

- Implementing computer vision with pretrained models

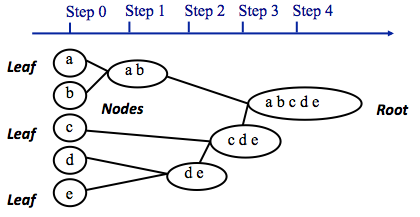

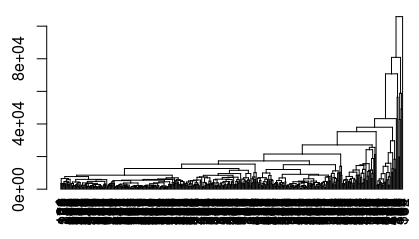

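AGNES (AGglomerative NESting) is the reverse of DIANA (DIvisive ANAlysis) in the sense that it follows a bottom-up approach to clustering the dataset: each observation starts in its own cluster, and at every step the two closest clusters are merged until a single cluster remains. The following diagram illustrates the working principle of the AGNES algorithm for clustering: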

Except for the bottom-up approach followed by AGNES, the implementation details behind the algorithm are the same as for DIANA; therefore, we won't repeat the discussion of the concepts here. The following code block clusters our wholesale dataset into three clusters with AGNES; it also creates a visualization of the clusters thus formed:

```r
# set the working directory to the folder where the dataset is located
setwd('/home/sunil/Desktop/chapter5/')

# read the dataset into the cust_data data frame
cust_data = read.csv(file = 'Wholesale_customers_ data.csv', header = TRUE)

# remove the non-required columns
cust_data <- cust_data[, c(-1, -2)]

# load the cluster library to use the agnes() function
library(cluster)

# compute agnes() with Euclidean distance on unstandardized data
cust_data_agnes <- agnes(cust_data, metric = "euclidean", stand = FALSE)

# plot the dendrogram from the agnes output
pltree(cust_data_agnes, cex = 0.6, hang = -1,
       main = "Dendrogram of agnes")

# agglomerative coefficient: the amount of clustering structure found
print(cust_data_agnes$ac)

plot(as.dendrogram(cust_data_agnes), cex = 0.6, horiz = TRUE)

# obtain the clusters by cutting the tree into 3 groups
grp <- cutree(cust_data_agnes, k = 3)

# number of members in each cluster
table(grp)

# row names of the observations in cluster 1
rownames(cust_data)[grp == 1]

# visualize the clusters
library(factoextra)
fviz_cluster(list(data = cust_data, cluster = grp))

# rect.hclust() expects an hclust object, so convert the agnes tree first
hc <- as.hclust(cust_data_agnes)
plot(hc)
rect.hclust(hc, k = 3, border = 2:4)
```

This is the output that you will obtain:

```
[1] 0.9602911
> plot(as.dendrogram(cust_data_agnes), cex = 0.6, horiz = FALSE)
```

Take a look at the following screenshot:

Take a look at the following code block:

```
> grp <- cutree(cust_data_agnes, k = 3)
> # Number of members in each cluster
> table(grp)
grp
  1   2   3
434   5   1
> rownames(cust_data)[grp == 1]
[1] "1" "2" "3" "4" "5" "6" "7" "8" "9" "10" "11" "12" "13" "14" "15" "16" "17" "18"
[19] "19" "20" "21" "22" "23" "24" "25" "26" "27" "28" "29" "30" "31" "32" "33" "34" "35" "36"
[37] "37" "38" "39" "40" "41" "42" "43" "44" "45" "46" "47" "49" "50" "51" "52" "53" "54" "55"
[55] "56" "57" "58" "59" "60" "61" "63" "64" "65" "66" "67" "68" "69" "70" "71" "72" "73" "74"
[73] "75" "76" "77" "78" "79" "80" "81" "82" "83" "84" "85" "88" "89" "90" "91" "92" "93" "94"
[91] "95" "96" "97" "98" "99" "100" "101" "102" "103" "104" "105" "106" "107" "108" "109" "110" "111" "112"
[109] "113" "114" "115" "116" "117" "118" "119" "120" "121" "122" "123" "124" "125" "126" "127" "128" "129" "130"
[127] "131" "132" "133" "134" "135" "136" "137" "138" "139" "140" "141" "142" "143" "144" "145" "146" "147" "148"
[145] "149" "150" "151" "152" "153" "154" "155" "156" "157" "158" "159" "160" "161" "162" "163" "164" "165" "166"
[163] "167" "168" "169" "170" "171" "172" "173" "174" "175" "176" "177" "178" "179" "180" "181" "183" "184" "185"
[181] "186" "187" "188" "189" "190" "191" "192" "193" "194" "195" "196" "197" "198" "199" "200" "201" "202" "203"
[199] "204" "205" "206" "207" "208" "209" "210" "211" "212" "213" "214" "215" "216" "217" "218" "219" "220" "221"
[217] "222" "223" "224" "225" "226" "227" "228" "229" "230" "231" "232" "233" "234" "235" "236" "237" "238" "239"
[235] "240" "241" "242" "243" "244" "245" "246" "247" "248" "249" "250" "251" "252" "253" "254" "255" "256" "257"
[253] "258" "259" "260" "261" "262" "263" "264" "265" "266" "267" "268" "269" "270" "271" "272" "273" "274" "275"
```
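To make the bottom-up working principle of AGNES concrete, here is a minimal, naive sketch in base R. It assumes average linkage (the default `method` of `cluster::agnes()`) and Euclidean distance; the function name `naive_agnes_heights` and the toy matrix `x` are hypothetical and are not part of the book's code. Every observation starts as its own cluster, and the closest pair of clusters is merged repeatedly until one cluster remains, recording the merge height at each step. It runs in roughly cubic time, so it is only meant for illustration, not as a replacement for `agnes()`.

```r
# Hypothetical helper (illustration only): naive bottom-up clustering with
# average linkage. Returns the merge heights in the order merges happen.
naive_agnes_heights <- function(x) {
  d <- as.matrix(dist(x, method = "euclidean"))  # pairwise distances
  clusters <- as.list(seq_len(nrow(d)))           # each observation alone
  heights <- numeric(0)
  while (length(clusters) > 1) {
    k <- length(clusters)
    best_pair <- c(NA_integer_, NA_integer_)
    best_dist <- Inf
    for (i in seq_len(k - 1)) {
      for (j in (i + 1):k) {
        # average linkage: mean distance over all cross-cluster pairs
        dij <- mean(d[clusters[[i]], clusters[[j]]])
        if (dij < best_dist) {
          best_dist <- dij
          best_pair <- c(i, j)
        }
      }
    }
    # merge the closest pair of clusters and record the merge height
    clusters[[best_pair[1]]] <- c(clusters[[best_pair[1]]],
                                  clusters[[best_pair[2]]])
    clusters[[best_pair[2]]] <- NULL
    heights <- c(heights, best_dist)
  }
  heights
}

# usage on a toy dataset (not the wholesale data)
x <- matrix(c(1, 2, 10, 11, 30,
              1, 1, 10, 12, 30), ncol = 2)
print(naive_agnes_heights(x))
# the optimized base R implementation should report the same heights
print(hclust(dist(x), method = "average")$height)
```

Comparing against `hclust(..., method = "average")` is a quick sanity check that the naive loop implements the same average-linkage merging rule.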
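The `print(cust_data_agnes$ac)` call in the code above reports the agglomerative coefficient (AC). The `cluster` package documentation defines it as follows: for each observation i, let m(i) be its dissimilarity to the first cluster it is merged with, divided by the dissimilarity of the final merge; AC is the average of 1 - m(i). The following sketch computes that definition from a `stats::hclust` tree built with average linkage. The helper name `agglomerative_coefficient` and the toy data are hypothetical, and we have not checked it against `agnes()$ac` on the wholesale data, so treat it as an illustration of the formula rather than a reference implementation.

```r
# Hypothetical helper: agglomerative coefficient from an hclust tree.
# m(i) = height at which observation i is first merged / final merge height
# AC   = mean(1 - m(i))
agglomerative_coefficient <- function(hc) {
  n_merges <- nrow(hc$merge)
  first_merge_height <- numeric(n_merges + 1)
  for (r in seq_len(n_merges)) {
    for (entry in hc$merge[r, ]) {
      # negative entries in the merge matrix denote single observations
      if (entry < 0) first_merge_height[-entry] <- hc$height[r]
    }
  }
  final_height <- hc$height[n_merges]
  mean(1 - first_merge_height / final_height)
}

# usage on a toy dataset (not the wholesale data), with average linkage
x <- matrix(c(1, 2, 10, 11, 30,
              1, 1, 10, 12, 30), ncol = 2)
hc <- hclust(dist(x), method = "average")
print(agglomerative_coefficient(hc))
```

Observations that join a cluster early (at a small height) push AC toward 1, while an isolated point merged only at the final step contributes 0. The `cluster` documentation also notes that AC tends to grow with the number of observations, so it is best not used to compare datasets of very different sizes.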
