Rajdeep Dua - Keras Deep Learning Cookbook: Over 80 Recipes for Implementing Deep Neural Networks in Python

- Book: Keras Deep Learning Cookbook: Over 80 Recipes for Implementing Deep Neural Networks in Python

- Author: Rajdeep Dua, Manpreet Singh Ghotra

- Publisher: Packt Publishing

- Genre: Computer

- Year: 2018

Leverage the power of deep learning and Keras to solve complex computational problems

Key Features

- Recipes on training and fine-tuning your neural network models efficiently using Keras

- A highly practical guide to simplify your understanding of neural networks and their implementation

- This book is a must-have on your shelf if you are planning to put your deep learning knowledge to practical use

Keras has quickly emerged as a popular deep learning library. Written in Python, it allows you to train convolutional as well as recurrent neural networks with speed and accuracy.

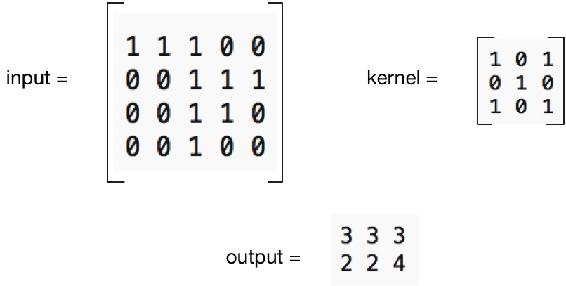

This book shows you how to tackle different problems in training efficient deep learning models using the popular Keras library. Starting with installing and setting up Keras, the book demonstrates how you can perform deep learning with Keras on top of the TensorFlow, Apache MXNet, and CNTK backends. From loading the data to fitting and evaluating your model for optimal performance, you will follow a step-by-step process for tackling the common problems in training deep models. With the help of this handy guide, you will implement efficient convolutional neural networks, recurrent neural networks, adversarial networks, and more. You will also see how to train these models for real-world image and language processing tasks.

By the end of this book, you will have a practical, hands-on understanding of how you can leverage the power of Python and Keras to perform effective deep learning.

What you will learn

- Install and configure Keras on top of TensorFlow, Apache MXNet, and CNTK

- Develop a strong background in neural network programming using the Keras library

- Understand the details of different Keras layers, such as Core, Embedding, and so on

- Use Keras to implement simple feed-forward neural networks as well as more complex CNNs and RNNs

- Work with various datasets and models used for image and text classification

- Develop text summarization and reinforcement learning models using Keras

Who this book is for

Data scientists and machine learning experts looking for practical solutions to the common problems encountered while training deep learning models will find this book a useful resource. A basic understanding of Python, as well as some experience with machine learning and neural networks, is required for this book.

About the Authors

Rajdeep Dua has over 18 years of experience in the cloud and big data space. He worked on the advocacy team for Google's big data tool BigQuery. He worked on the Greenplum big data platform at VMware in the developer evangelist team. He also worked closely with a team on porting Spark to run on VMware's public and private cloud as a feature set. He has taught Spark and big data at some of the most prestigious tech schools in India: IIIT Hyderabad, ISB, IIIT Delhi, and College of Engineering Pune.

Currently, he leads the developer relations team at Salesforce India. He also works with the data pipeline team at Salesforce, which uses Hadoop and Spark to expose big data processing tools for developers.

He has published Big Data and Spark tutorials. He has also presented BigQuery and Google App Engine at the W3C conference in Hyderabad. He led the developer relations teams at Google, VMware, and Microsoft, and he has spoken at hundreds of other conferences on the cloud. Other references to his work can be found on Your Story and in the ACM Digital Library.

His contributions to the open source community are related to Docker, Kubernetes, Android, OpenStack, and Cloud Foundry. You can connect with him on LinkedIn.

Manpreet Singh Ghotra has more than 15 years of experience in software development for both enterprise and big data software. He is currently working on developing a machine learning platform/APIs at Salesforce using open source libraries and frameworks such as Keras, Apache Spark, and TensorFlow. He has worked on various machine learning systems, such as sentiment analysis, spam detection, and anomaly detection. He was part of the machine learning group at one of the largest online retailers in the world, working on transit time calculations using Apache Mahout and the R Recommendation system using Apache Mahout. With a master's degree and a postgraduate degree in machine learning, he has contributed to and worked for the machine learning community.
