Matthew Hallett - Mastering Spark for Data Science

Mastering Spark for Data Science by Matthew Hallett. Year: 2017, publisher: Packt Publishing, genre: Computer.

- Book: Mastering Spark for Data Science

- Author: Matthew Hallett

- Publisher: Packt Publishing

- Genre: Computer

- Year: 2017

Mastering Spark for Data Science: summary, description and annotation

Master the techniques and sophisticated analytics used to construct Spark-based solutions that scale to deliver production-grade data science products.

About This Book

- Develop and apply advanced analytical techniques with Spark

- Learn how to tell a compelling story with data science using Spark's ecosystem

- Explore data at scale and work with cutting-edge data science methods

Who This Book Is For

This book is for those who have beginner-level familiarity with the Spark architecture and data science applications, especially those who are looking for a challenge and want to learn cutting-edge techniques. It assumes working knowledge of data science, common machine learning methods, and popular data science tools, and that you have previously run proof-of-concept studies and built prototypes.

What You Will Learn

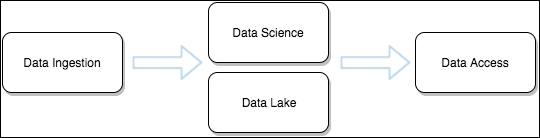

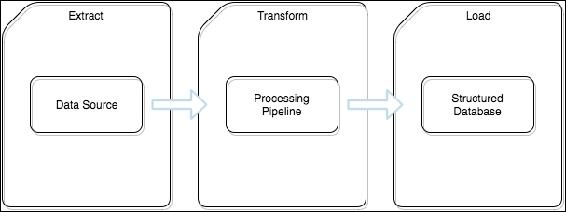

- Learn the design patterns that integrate Spark into industrialized data science pipelines

- See how commercial data scientists design scalable and reusable code for data science services

- Explore cutting-edge data science methods so that you can study trends and causality

- Discover advanced programming techniques using the RDD, DataFrame, and Dataset APIs

- Find out how Spark can be used as a universal ingestion engine and as a web scraper

- Practice the implementation of advanced topics in graph processing, such as community detection and contact chaining

- Get to know the best practices when performing Extended Exploratory Data Analysis, commonly used in commercial data science teams

- Study advanced Spark concepts, solution design patterns, and integration architectures

- Demonstrate powerful data science pipelines

In Detail

Data science seeks to transform the world using data, and this is typically achieved through disrupting and changing real processes in real industries. To operate at this level, you need to build data science solutions of substance: solutions that solve real problems. Spark has emerged as the big data platform of choice for data scientists due to its speed, scalability, and easy-to-use APIs.

This book takes a deep dive into using Spark to deliver production-grade data science solutions. This process is demonstrated by exploring the construction of a sophisticated global news analysis service that uses Spark to generate continuous geopolitical and current affairs insights. You will learn all about the core Spark APIs and take a comprehensive tour of advanced libraries, including Spark SQL, Spark Streaming, MLlib, and more.

You will be introduced to advanced techniques and methods that will help you to construct commercial-grade data products. Focusing on a sequence of tutorials that deliver a working news intelligence service, you will learn about advanced Spark architectures, how to work with geographic data in Spark, and how to tune Spark algorithms so they scale linearly.

Style and approach

This is an advanced guide for those with beginner-level familiarity with the Spark architecture and experience working with data science applications. Mastering Spark for Data Science is a practical tutorial that uses core Spark APIs and takes a deep dive into advanced libraries, including Spark SQL, Spark Streaming, and MLlib. This book expands on titles like Machine Learning with Spark and Learning Spark. It is the next step on the learning curve for those comfortable with Spark and looking to improve their skills.

Downloading the example code for this book: you can download the example code files for all Packt books you have purchased from your account at http://www.PacktPub.com. If you purchased this book elsewhere, you can visit http://www.PacktPub.com/support and register to obtain the code files.
