Akash Tandon - Advanced Analytics with PySpark: Patterns for Learning from Data at Scale Using Python and Spark

Published by O'Reilly Media in 2022; genre: Computer.

- Book: Advanced Analytics with PySpark: Patterns for Learning from Data at Scale Using Python and Spark

- Author: Akash Tandon, Sandy Ryza, Uri Laserson, Sean Owen, Josh Wills

- Publisher: O'Reilly Media

- Genre: Computer

- Year: 2022

Advanced Analytics with PySpark: Patterns for Learning from Data at Scale Using Python and Spark: summary, description and annotation

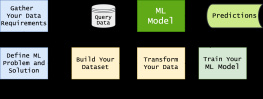

The amount of data being generated today is staggering and still growing. Apache Spark has emerged as the de facto tool for analyzing big data and is now a critical part of the data science toolbox. Updated for Spark 3.0, this practical guide brings together Spark, statistical methods, and real-world datasets to teach you how to approach analytics problems using PySpark, Spark's Python API, along with best practices in Spark programming.

Data scientists Akash Tandon, Sandy Ryza, Uri Laserson, Sean Owen, and Josh Wills offer an introduction to the Spark ecosystem, then dive into patterns that apply common techniques, including classification, clustering, collaborative filtering, and anomaly detection, to fields such as genomics, security, and finance. This updated edition also covers natural language processing (NLP) and image processing.

If you have a basic understanding of machine learning and statistics and you program in Python, this book will get you started with large-scale data analysis.

- Familiarize yourself with Spark's programming model and ecosystem

- Learn general approaches in data science

- Examine complete implementations that analyze large public datasets

- Discover which machine learning tools make sense for particular problems

- Explore code that can be adapted to many uses