Mahmoud Parsian - Data Algorithms with Spark: Recipes and Design Patterns for Scaling Up using PySpark

Year: 2022; publisher: O'Reilly Media.

- Book: Data Algorithms with Spark: Recipes and Design Patterns for Scaling Up using PySpark

- Author: Mahmoud Parsian

- Publisher: O'Reilly Media

- Year: 2022

- Rating: 4 / 5

Data Algorithms with Spark: Recipes and Design Patterns for Scaling Up using PySpark: summary, description and annotation

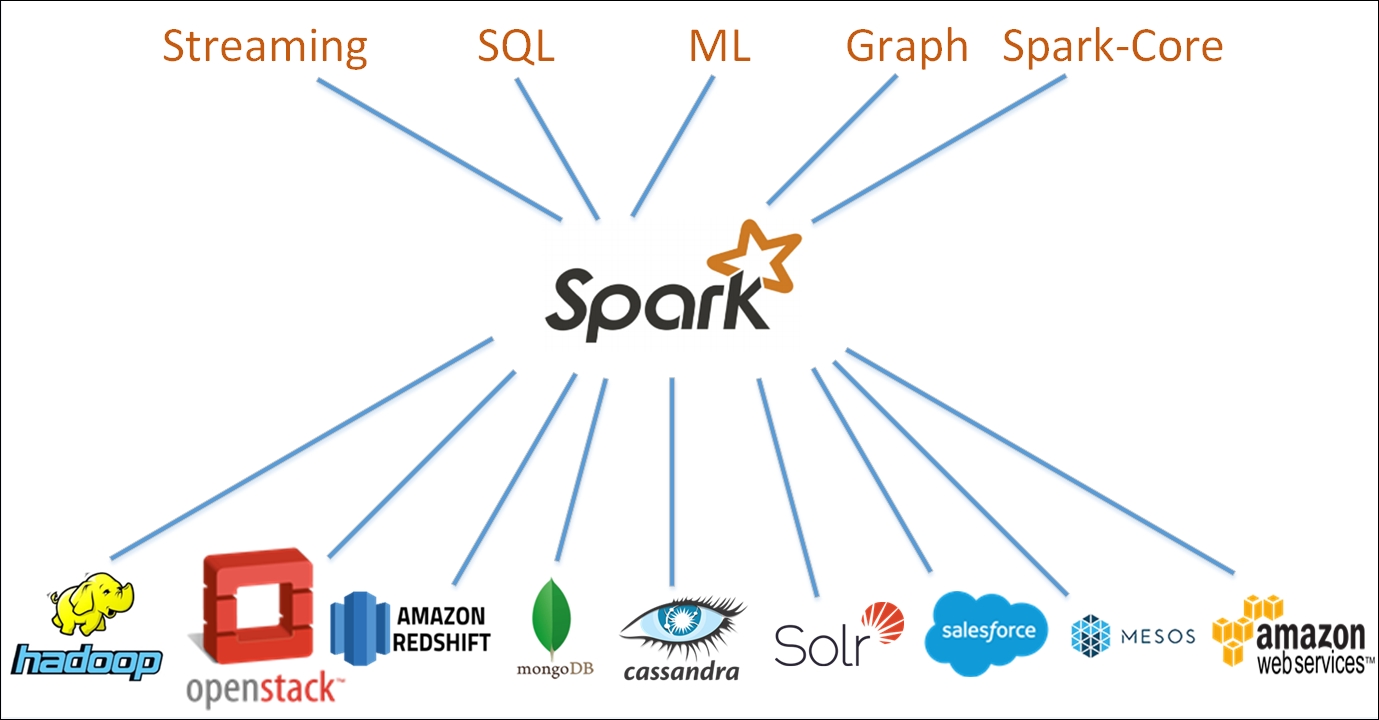

Apache Spark's speed, ease of use, sophisticated analytics, and multilanguage support make practical knowledge of this cluster-computing framework a required skill for data engineers and data scientists. With this hands-on guide, anyone looking for an introduction to Spark will learn practical algorithms and examples using PySpark.

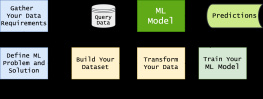

In each chapter, author Mahmoud Parsian shows you how to solve a data problem with a set of Spark transformations and algorithms. You'll learn how to tackle problems involving ETL, design patterns, machine learning algorithms, data partitioning, and genomics analysis. Each detailed recipe includes PySpark algorithms using the PySpark driver and shell script.

With this book, you will:

- Learn how to select Spark transformations for optimized solutions

- Explore powerful transformations and reductions including reduceByKey(), combineByKey(), and mapPartitions()

- Understand data partitioning for optimized queries

- Design machine learning algorithms including Naive Bayes, linear regression, and logistic regression

- Build and apply a model using PySpark design patterns

- Apply motif-finding algorithms to graph data

- Analyze graph data by using the GraphFrames API

- Apply PySpark algorithms to clinical and genomics data (such as DNA-Seq)
