HOW SMART MACHINES THINK

SEAN GERRISH

Foreword by Kevin Scott, CTO, Microsoft

The MIT Press

Cambridge, Massachusetts

London, England

© 2018 Massachusetts Institute of Technology

All rights reserved. No part of this book may be reproduced in any form by any electronic or mechanical means (including photocopying, recording, or information storage and retrieval) without permission in writing from the publisher.

This book was set in Bembo Std by Westchester Publishing Services. Printed and bound in the United States of America.

Library of Congress Cataloging-in-Publication Data is available.

Names: Gerrish, Sean, author.

Title: How smart machines think / Sean Gerrish.

Description: Cambridge, MA : MIT Press, [2018] | Includes bibliographical references and index.

Identifiers: LCCN 2017059862 | ISBN 9780262038409 (hardcover : alk. paper)

Subjects: LCSH: Neural networks (Computer science) | Machine learning. | Artificial intelligence.

Classification: LCC QA76.87 .G49 2018 | DDC 006.3--dc23

LC record available at https://lccn.loc.gov/2017059862

This book is for the engineers and researchers who conceived of and then built these smart machines.

CONTENTS

LIST OF FIGURES

A centrifugal governor, the precursor to electronic control systems. As the engine runs more quickly, the rotating axis with the flyballs spins more rapidly, and the flyballs are pulled outward by centrifugal force. Through a series of levers, this causes the valve to the engine to close. If the engine is running too slowly, the valve will allow more fuel through.

The control loop for a PID controller, the three-rule controller described in the text. The controller uses feedback from the speedometer to adjust inputs to the engine, such as power.

An example map. Darker shades indicate a higher travel cost.

The search frontier at different iterations of Dijkstra's algorithm.

An optimal path through this map.

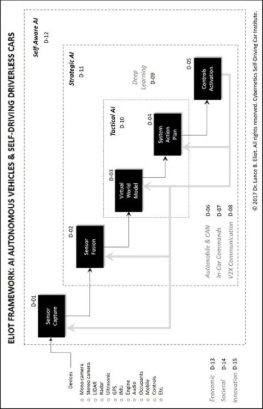

A simplified summary of the software and hardware organization of Stanley, the Stanford Racing Team's 2005 Grand Challenge winner.

Area A in the DARPA Urban Challenge. Professional human drivers circled in the outer loop as self-driving cars circulated on the right half. The primary challenge here for autonomous cars was to merge into a lane of moving traffic at the stop sign. Self-driving cars were allowed to circulate as many times as they could within their time limit.

Boss's architecture, simplified: the hardware, the perception (and world-modeling), and the reasoning (planning) modules, organized in increasing levels of reasoning abstraction from left to right. Its highest-level reasoning layer (planning, far right) was organized into its own three-layer architecture: the controller (route planner module), the sequencer (Monopoly board module), and the deliberator (motion planner module). The motion planner could arguably have been placed with the sequencer.

The handle intersection finite state machine. The Monopoly board module steps through the diagram, from start to done. The state machine waits for precedence and then attempts to enter the intersection. If the intersection is partially blocked, it is handled as a zone, which is a complex area like a parking lot, instead of being handled as a lane; otherwise the state machine creates a virtual lane through the intersection and drives in that lane. This is a simplified version of the state machine described in Urmson et al. (see note 7).

The motion planner of a self-driving car has been instructed by the Monopoly board to park in the designated parking spot.

The car has an internal map represented as a grid in which obstacles fill up cells in that grid. The motion planner also uses a cost function when picking its path (shaded gray). The cost function incorporates the distance to obstacles (in this case, other cars).

The motion planner searches for a path to its goal. The path will comprise many small path segments that encode velocity, position, and orientation. Unlike this picture, the search is performed from the end state to the start state.

A candidate path to the goal.

By applying a classifier to the recipe "Holiday Baked Alaska," we can see whether it would be good for kids. The weights stay fixed, and the details (and hence the recipe score) change for each recipe. The "Holiday Baked Alaska" details are from the Betty Crocker website (see notes).

Some examples of star ratings that Netflix customers might have made on various movies. Netflix provided some of the ratings in the matrix (shown as numbers). Competitors needed to predict some of the missing recommendations (shown as question marks).

A test to determine whether Steven Spielberg would like the movie Jurassic Park. Here, we can assume Jurassic Park falls into two genres: science fiction and adventure. Steven Spielberg tends to like science fiction movies, comedies, and adventures, and tends not to like horror movies, as indicated by his genre affinities. We combine these into a score by multiplying the genre scores, which are 0 or 1, by Spielberg's affinities for those genres, and adding the results together. The result is a fairly high affinity score describing how much Spielberg likes Jurassic Park.
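The scoring rule in this caption is a sum of products: each 0-or-1 genre indicator is multiplied by the viewer's affinity for that genre, and the products are added. A minimal sketch follows; the affinity numbers and the name `affinity_score` are hypothetical, chosen only to illustrate the arithmetic, since the caption gives no actual values.

```python
# Hypothetical illustration of the genre-affinity score described in the caption.
# All numeric affinities below are made up; only the rule (multiply, then add)
# comes from the text.
GENRES = ["science fiction", "comedy", "adventure", "horror"]


def affinity_score(movie_genres, affinities):
    """Add up (0/1 genre indicator) * (viewer's affinity) over all genres."""
    return sum(movie_genres[g] * affinities[g] for g in GENRES)


# Jurassic Park is assumed to fall into science fiction and adventure.
jurassic_park = {"science fiction": 1, "comedy": 0, "adventure": 1, "horror": 0}

# Positive values mean "tends to like"; negative means "tends not to like".
spielberg = {"science fiction": 1.5, "comedy": 0.5, "adventure": 1.0, "horror": -1.0}

score = affinity_score(jurassic_park, spielberg)
print(score)  # 1*1.5 + 0*0.5 + 1*1.0 + 0*(-1.0) = 2.5
```

Only the genres the movie belongs to contribute to the total, so a horror-averse viewer is not penalized for a movie that is not a horror movie.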

Popularity of the movie The Matrix over time.

The chart shows team progress toward the Netflix Prize. The final team to win the competition was BellKor's Pragmatic Chaos.

Two of the Atari games played by DeepMind's agent: Space Invaders (top) and Breakout (bottom).

DeepMind's Atari-playing agent ran continuously. At any given moment, it would receive the last four screenshots' worth of pixels as an input, and then it would run an algorithm to decide on its next action and output that action.

The golf course used in the reinforcement-learning example. Terrain types, ranging from light grey to dark black: the green (least difficult), fairway, rough, sand trap, and water hazard (most difficult). The starting point is on the left, and the goal is in the top-right corner.

Your goal is to hit the ball from the start position into the hole in as few swings as possible; the ball moves only one square (or zero squares) per swing.

The golf course also has explosive mines, each of which is marked with an x. You must avoid hitting these.

One way to train a reinforcement-learning agent is with simulation. First the agent plays through a game to generate a series of state-action pairs and rewards, as shown in the left panel. Next, as shown in the right panel, the agent's estimate of future rewards for taking different actions when it's in a given state is updated using the state-action pairs experienced by the agent. This particular method is sometimes called temporal difference, or TD, learning.

Trajectories (white paths) made by the golf-playing agent. (a): a trajectory made by the agent after playing 10 games. (b): a trajectory made by the agent after playing 3,070 games.

A simple neural network.

Propagation of values through a neural network. In neural networks, the value of a neuron is either specified by outside data (that is, it's an input neuron) or it is a function of other upstream neurons that act as inputs to it. When the value of the neuron is determined by other neurons, the values of the upstream neurons are weighted by the edges, summed, and passed through a nonlinear function such as max(x,0), tanh(x), or an S-shaped function, exp(x)/(exp(x) + 1).
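The propagation rule in this caption can be sketched in a few lines: weight the upstream values by their edges, sum them, and pass the sum through one of the three nonlinear functions named. The function names, input values, and edge weights below are hypothetical and chosen only to make the arithmetic easy to follow.

```python
import math


def relu(x):
    """max(x, 0), the first nonlinear function named in the caption."""
    return max(x, 0.0)


def s_shaped(x):
    """The S-shaped function exp(x) / (exp(x) + 1)."""
    return math.exp(x) / (math.exp(x) + 1.0)


def neuron_value(upstream_values, edge_weights, nonlinearity):
    """Weight the upstream values by their edges, sum, and apply the nonlinearity."""
    weighted_sum = sum(w * v for w, v in zip(edge_weights, upstream_values))
    return nonlinearity(weighted_sum)


# Hypothetical input-neuron values and edge weights.
inputs = [1.0, 2.0]
weights = [0.5, 1.0]  # weighted sum = 0.5*1.0 + 1.0*2.0 = 2.5

print(neuron_value(inputs, weights, relu))       # 2.5
print(neuron_value(inputs, weights, math.tanh))  # a value between -1 and 1
print(neuron_value(inputs, weights, s_shaped))   # a value between 0 and 1
```

The three functions differ mainly in their output range: max(x,0) is unbounded above, tanh(x) stays between -1 and 1, and the S-shaped function stays between 0 and 1.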

The performance of several neural networks (b)–(d) trained to represent a target image (a). The networks take as their inputs the