V Kishore Ayyadevara - Pro Machine Learning Algorithms: A Hands-On Approach to Implementing Algorithms in Python and R

- Book: Pro Machine Learning Algorithms: A Hands-On Approach to Implementing Algorithms in Python and R

- Author: V Kishore Ayyadevara

- Publisher: Apress

- Genre: Computer

- Year: 2018

Pro Machine Learning Algorithms: A Hands-On Approach to Implementing Algorithms in Python and R: summary and description

We offer to read an annotation, description, summary or preface (depends on what the author of the book "Pro Machine Learning Algorithms: A Hands-On Approach to Implementing Algorithms in Python and R" wrote himself). If you haven't found the necessary information about the book — write in the comments, we will try to find it.

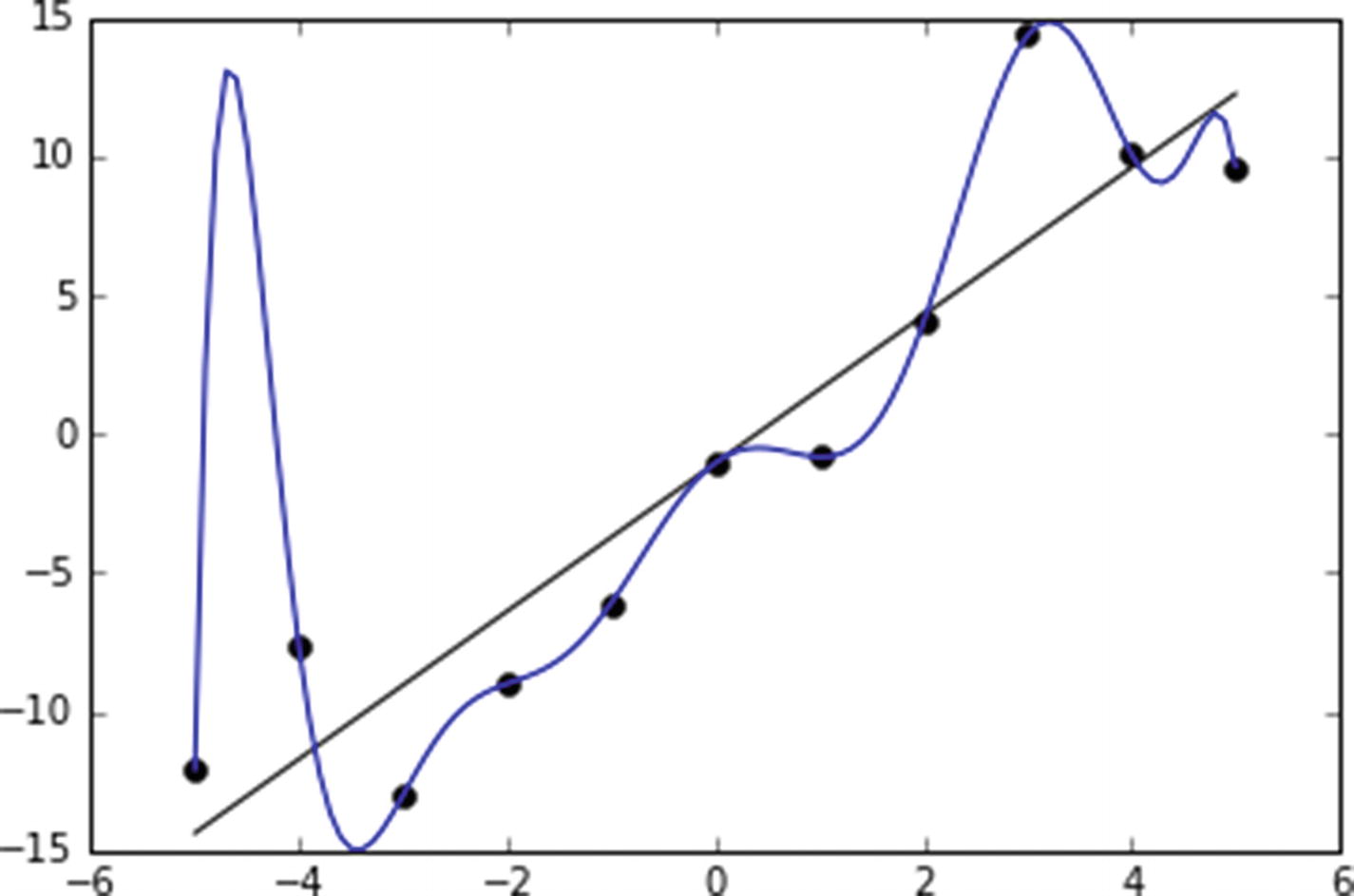

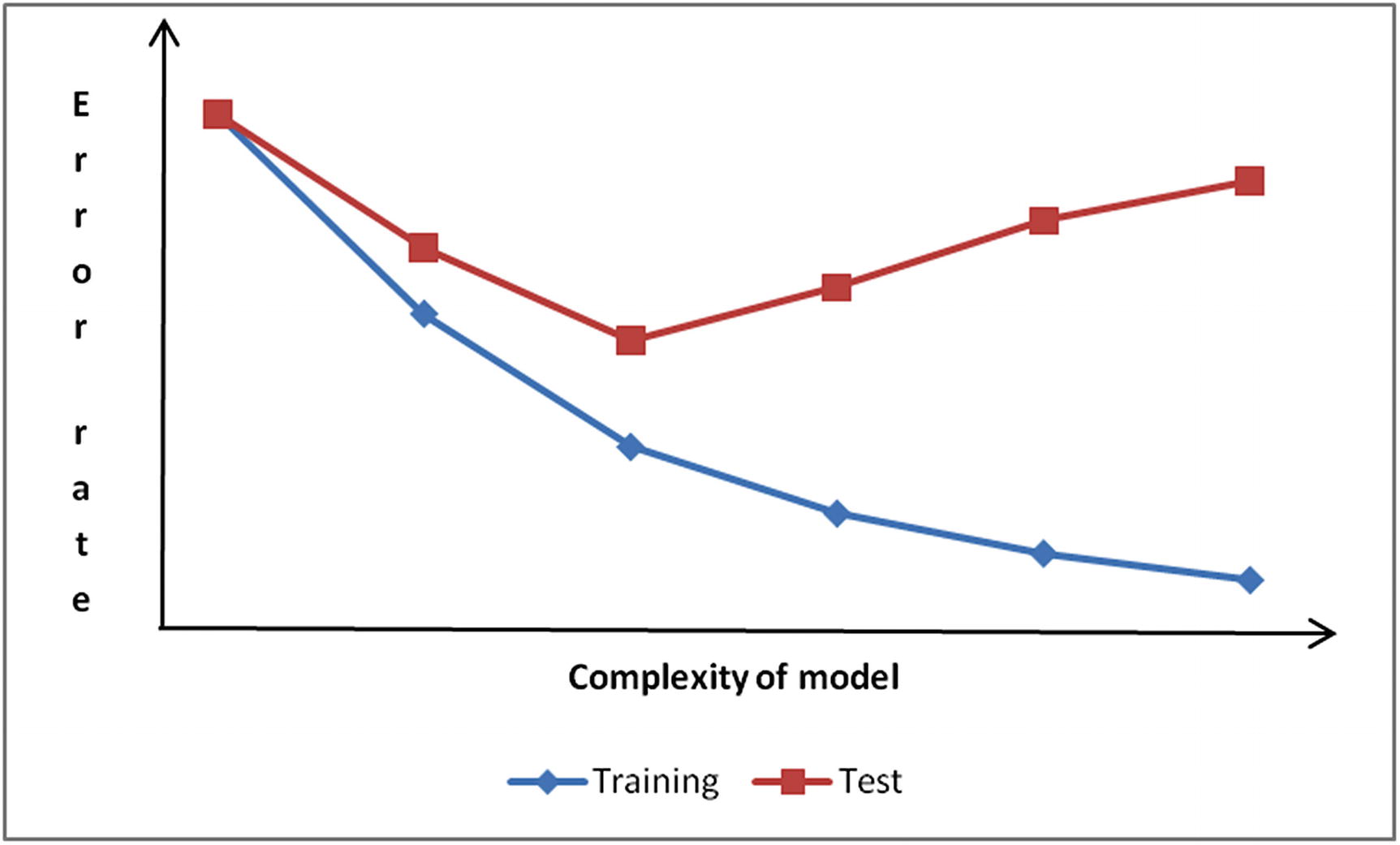

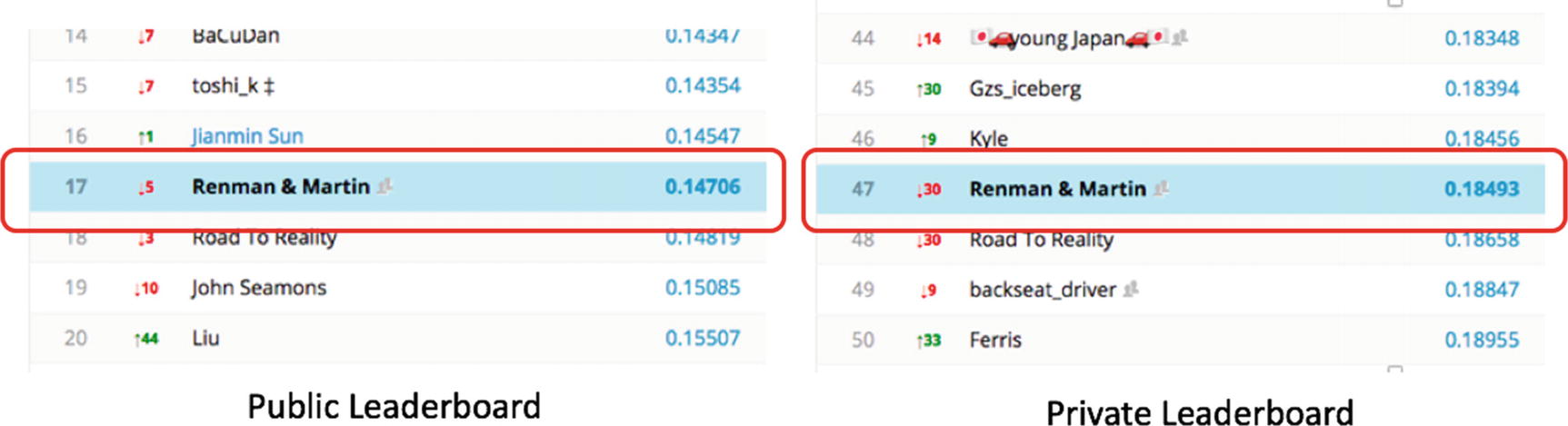

Bridge the gap between a high-level understanding of how an algorithm works and knowing the nuts and bolts needed to tune your models better. This book will give you the confidence and skills to develop all the major machine learning models. In Pro Machine Learning Algorithms, you will first develop each algorithm in Excel so that you get a practical understanding of all the levers that can be tuned in a model, before implementing the models in Python/R.

You will cover all the major supervised and unsupervised learning algorithms, including linear/logistic regression, k-means clustering, PCA, recommender systems, decision trees, random forests, gradient boosting machines (GBM), and neural networks. You will also be exposed to the latest in deep learning through CNNs, RNNs, and word2vec for text mining. You will learn not only the algorithms but also the feature engineering concepts needed to maximize a model's performance. You will see the theory alongside case studies, such as sentiment classification, fraud detection, recommender systems, and image recognition, so that you get the best of both theory and practice for the vast majority of the machine learning algorithms used in industry. Along with learning the algorithms, you will also be exposed to running machine learning models on all the major cloud service providers.

You are expected to have only minimal knowledge of statistics and software programming; by the end of this book you should be able to work on a machine learning project with confidence.
What You Will Learn

- Get an in-depth understanding of all the major machine learning and deep learning algorithms
- Fully appreciate the pitfalls to avoid while building models
- Implement machine learning algorithms in the cloud
- Follow a hands-on approach through case studies for each algorithm
- Learn the tricks of ensemble learning to build more accurate models
- Discover the basics of programming in R/Python and the Keras framework for deep learning

Who This Book Is For

Business analysts and IT professionals who want to transition into data science roles, and data scientists who want to solidify their knowledge of machine learning.