Machine learning can be broadly classified into supervised and unsupervised learning. By definition, the term supervised means that the machine (the system) learns with the help of something, typically labeled training data.

Training data (or a dataset) is the basis on which the system learns to make inferences. An example of this process is showing the system a set of images of cats and dogs along with the corresponding labels (each label says whether the image is of a cat or a dog) and letting the system decipher the features that distinguish cats from dogs.
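To make learning from labels concrete, here is a minimal sketch of a supervised learner, a nearest-centroid classifier. It assumes each image has already been reduced to two numeric features; the feature values and the helper names `fit_centroids` and `predict` are invented for illustration, and real image classifiers learn far richer features.

```python
from statistics import mean

# Hypothetical labeled training data: (feature vector, label).
# The two features are invented stand-ins for whatever a real system
# would extract from an image.
training_data = [
    ((2.0, 8.0), "cat"),
    ((2.5, 7.5), "cat"),
    ((1.8, 9.0), "cat"),
    ((6.0, 3.0), "dog"),
    ((7.0, 2.5), "dog"),
    ((6.5, 4.0), "dog"),
]


def fit_centroids(data):
    """Learn one centroid (mean feature vector) per label."""
    by_label = {}
    for features, label in data:
        by_label.setdefault(label, []).append(features)
    return {
        label: tuple(mean(dim) for dim in zip(*vectors))
        for label, vectors in by_label.items()
    }


def predict(centroids, features):
    """Assign the label whose centroid is closest (squared Euclidean distance)."""
    def sq_dist(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))

    return min(centroids, key=lambda label: sq_dist(centroids[label], features))


centroids = fit_centroids(training_data)
print(predict(centroids, (2.2, 8.5)))  # cat
print(predict(centroids, (6.8, 3.1)))  # dog
```

The labels are what make this supervised: the centroids can only be computed because every training example says which class it belongs to.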

Unsupervised learning, in contrast, is the process of grouping data into similar categories without the help of labels. An example is feeding the system a set of images of dogs and cats without indicating which image belongs to which category and letting the system group the two types of images into different buckets based on their similarity.
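By contrast, an unsupervised learner receives only the features, no labels. Below is a minimal sketch of k-means clustering with k = 2, one common way of grouping similar items. The points reuse the same invented two-feature representation, and the deterministic initialization (first and last point) is a simplification to keep the example reproducible, not how k-means is usually seeded in practice.

```python
# Hypothetical unlabeled feature vectors: no "cat"/"dog" labels are given.
points = [(2.0, 8.0), (2.5, 7.5), (1.8, 9.0), (6.0, 3.0), (7.0, 2.5), (6.5, 4.0)]


def sq_dist(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans(points, k, n_iter=10):
    """Plain k-means (Lloyd's algorithm) with a deterministic start."""
    # Simplified initialization: spread the starting centers across the list.
    centers = [points[i * (len(points) - 1) // max(k - 1, 1)] for i in range(k)]
    for _ in range(n_iter):
        # Assignment step: each point joins its nearest center's bucket.
        buckets = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda c: sq_dist(p, centers[c]))
            buckets[nearest].append(p)
        # Update step: move each center to the mean of its bucket.
        centers = [
            tuple(sum(dim) / len(b) for dim in zip(*b)) if b else centers[i]
            for i, b in enumerate(buckets)
        ]
    return buckets


for bucket in kmeans(points, k=2):
    print(bucket)
```

The algorithm never learns the names "cat" and "dog"; it only discovers that the points fall into two groups.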

Regression and Classification

Let's assume that we are forecasting the number of units of Coke that would be sold in summer in a certain region. The value ranges between certain values, let's say 1 million to 1.2 million units per week. Regression is the typical way of forecasting such continuous variables.
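A minimal sketch of regression: fit a straight line by ordinary least squares and use it to forecast a continuous value. The weekly temperatures and sales figures below are invented for illustration; they are not real Coke sales data, and temperature is only an assumed predictor.

```python
from statistics import mean

# Hypothetical weekly data: average temperature (deg C) vs. units sold (millions).
temperature = [24.0, 26.0, 28.0, 30.0, 32.0]
units_sold = [1.00, 1.05, 1.10, 1.15, 1.20]


def fit_line(xs, ys):
    """Ordinary least squares for y = intercept + slope * x."""
    x_bar, y_bar = mean(xs), mean(ys)
    slope = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys)) / sum(
        (x - x_bar) ** 2 for x in xs
    )
    intercept = y_bar - slope * x_bar
    return intercept, slope


intercept, slope = fit_line(temperature, units_sold)
forecast = intercept + slope * 29.0  # a continuous output, not a category
print(f"Forecast for a 29 deg C week: {forecast:.3f} million units")
```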

Classification (or prediction), on the other hand, predicts events that have a few distinct outcomes, for example, whether a day will be sunny or rainy.

Linear regression is a typical technique for forecasting continuous variables, whereas logistic regression is a typical technique for predicting discrete variables. A host of other techniques, including decision trees, random forests, gradient boosting machines (GBM), neural networks, and more, can help predict both continuous and discrete outcomes.
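For a discrete outcome, logistic regression models the probability of one class and then thresholds it. The sketch below fits it by plain gradient descent on invented humidity readings labeled sunny (0) or rainy (1); the data, the single feature, the learning rate, and the iteration count are all illustrative assumptions.

```python
import math
from statistics import mean, pstdev

# Hypothetical data: humidity (%) and the observed outcome (0 = sunny, 1 = rainy).
humidity = [20.0, 30.0, 40.0, 70.0, 80.0, 90.0]
rainy = [0, 0, 0, 1, 1, 1]

# Standardize the feature so gradient descent behaves well.
mu, sigma = mean(humidity), pstdev(humidity)
xs = [(h - mu) / sigma for h in humidity]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def fit_logistic(xs, ys, lr=0.5, n_iter=2000):
    """Minimize mean log-loss for p = sigmoid(w * x + b) by gradient descent."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(n_iter):
        errors = [sigmoid(w * x + b) - y for x, y in zip(xs, ys)]
        w -= lr * sum(e * x for e, x in zip(errors, xs)) / n
        b -= lr * sum(errors) / n
    return w, b


w, b = fit_logistic(xs, rainy)


def predict_rainy(h):
    """Return 1 (rainy) if the modeled probability exceeds 0.5, else 0 (sunny)."""
    return int(sigmoid(w * (h - mu) / sigma + b) > 0.5)


print([predict_rainy(h) for h in humidity])  # [0, 0, 0, 1, 1, 1]
```

Unlike the linear-regression sketch, the output here is one of two distinct outcomes.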

Training and Testing Data

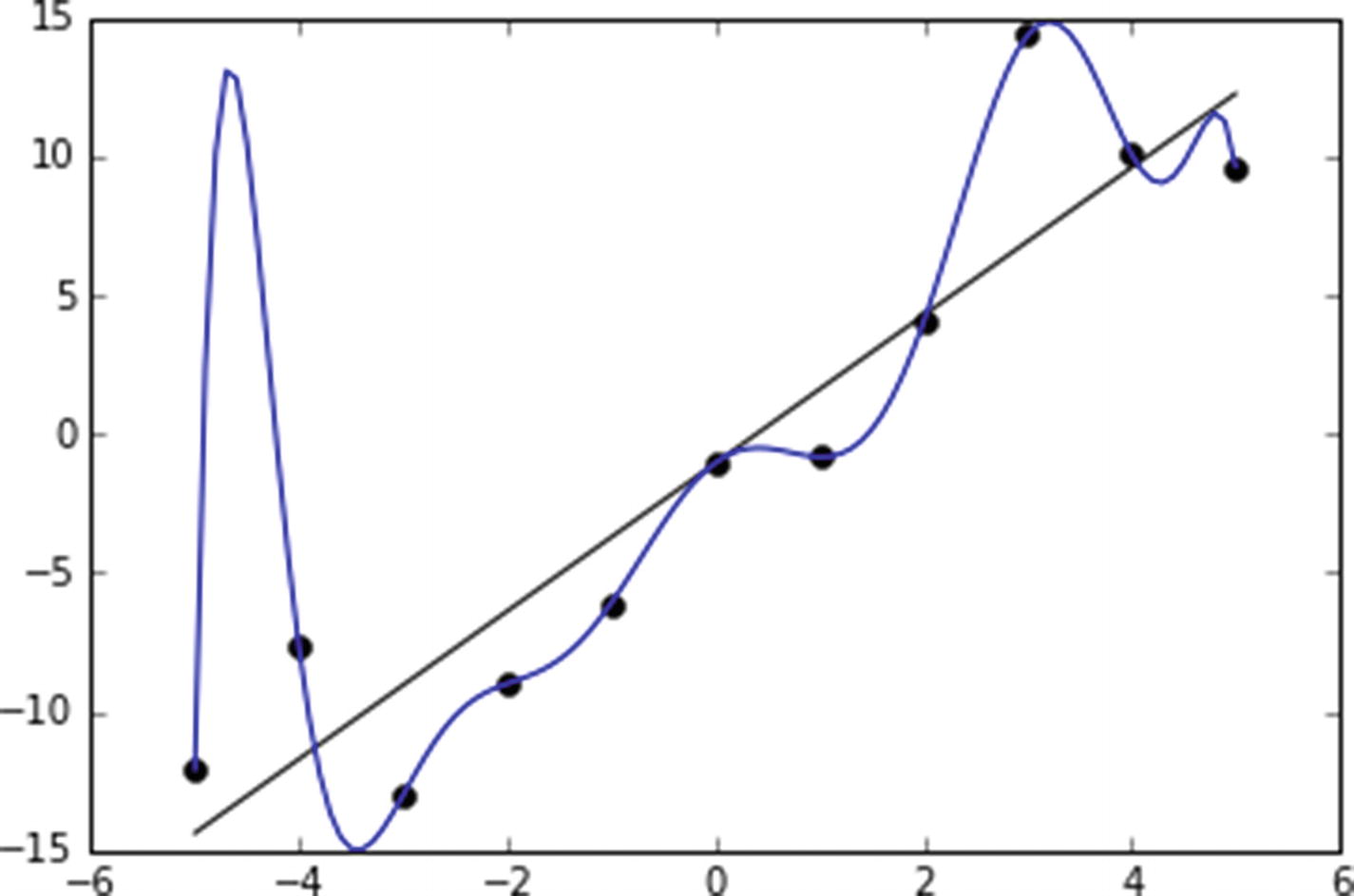

Typically, in regression, we deal with the problem of generalization versus overfitting. Overfitting arises when the model is so complex that it fits all the training data points perfectly, resulting in the minimal possible error rate on those points. A typical example of an overfitted model looks like Figure 1-1.

Figure 1-1

An overfitted dataset

From the dataset in the figure, you can see that the straight line does not fit all the data points perfectly, whereas the curved line does; hence the curve has minimal error on the data points on which it is trained.

However, the straight line is more likely than the curve to generalize to a new dataset. So, in practice, regression/classification is a trade-off between the generalizability of the model and its complexity.

The lower the generalizability of the model, the higher the error rate will be on unseen data points.
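The line-versus-curve trade-off can be reproduced numerically. The sketch below uses invented points that follow y = 2x plus noise alternating between +1 and -1, fits a least-squares straight line and a degree-6 polynomial (Lagrange interpolation) that passes through all seven training points, and then scores both on two new points from the same y = 2x trend. The data and helper names are hypothetical.

```python
from statistics import mean

# Hypothetical training data: y = 2x + noise, where the noise alternates +1, -1.
train_x = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
train_y = [2 * x + (1 if i % 2 == 0 else -1) for i, x in enumerate(train_x)]

# Hypothetical unseen points drawn from the same underlying y = 2x trend.
test_x = [-1.0, 7.0]
test_y = [2 * x for x in test_x]


def fit_line(xs, ys):
    """Least-squares straight line; returns a prediction function."""
    x_bar, y_bar = mean(xs), mean(ys)
    slope = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys)) / sum(
        (x - x_bar) ** 2 for x in xs
    )
    return lambda x: y_bar + slope * (x - x_bar)


def fit_curve(xs, ys):
    """Lagrange interpolating polynomial: passes exactly through every point."""
    def predict(x):
        total = 0.0
        for i, (xi, yi) in enumerate(zip(xs, ys)):
            basis = 1.0
            for j, xj in enumerate(xs):
                if j != i:
                    basis *= (x - xj) / (xi - xj)
            total += yi * basis
        return total
    return predict


def mse(model, xs, ys):
    return mean((model(x) - y) ** 2 for x, y in zip(xs, ys))


line, curve = fit_line(train_x, train_y), fit_curve(train_x, train_y)
print(f"train MSE: line={mse(line, train_x, train_y):.3f} curve={mse(curve, train_x, train_y):.3f}")
print(f"test MSE:  line={mse(line, test_x, test_y):.3f} curve={mse(curve, test_x, test_y):.3f}")
```

The curve has zero training error but a far larger error on the new points, while the line accepts some training error and generalizes much better.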

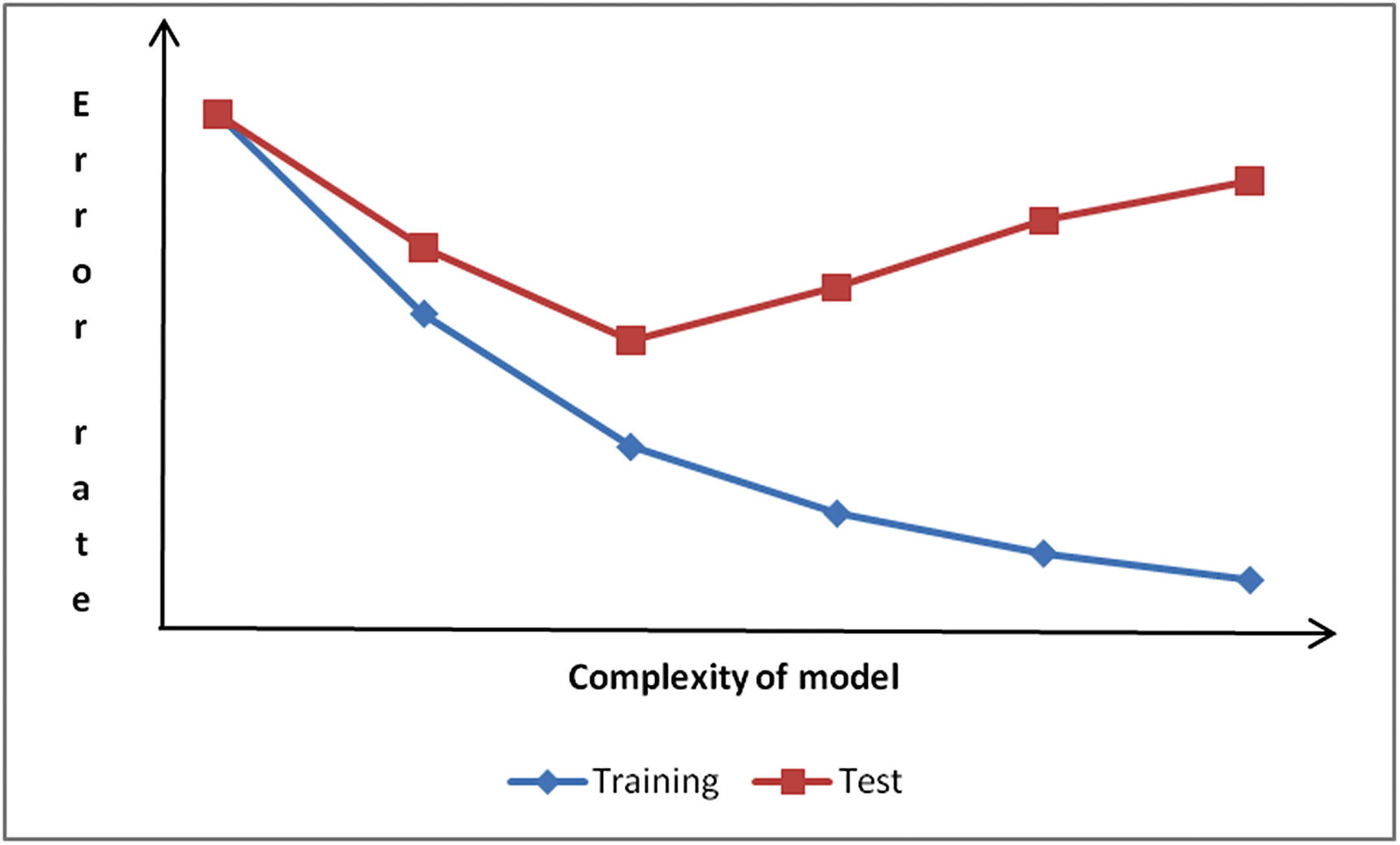

This phenomenon can be observed in Figure 1-2. As the complexity of the model increases, the error rate on unseen data points keeps decreasing up to a point, after which it starts increasing again. However, the error rate on the training dataset keeps decreasing as the complexity of the model increases, which eventually leads to overfitting.

Figure 1-2

Error rate in unseen data points

The unseen data points are the points that are not used in training the model but are used in testing its accuracy; they are called testing data or test data.

The Need for Validation Dataset

The major problem with having a fixed training and testing dataset is that the test dataset might be very similar to the training dataset, whereas a new (future) dataset might not be. If a future dataset is not similar to the training dataset, the model's accuracy on it may be very low.

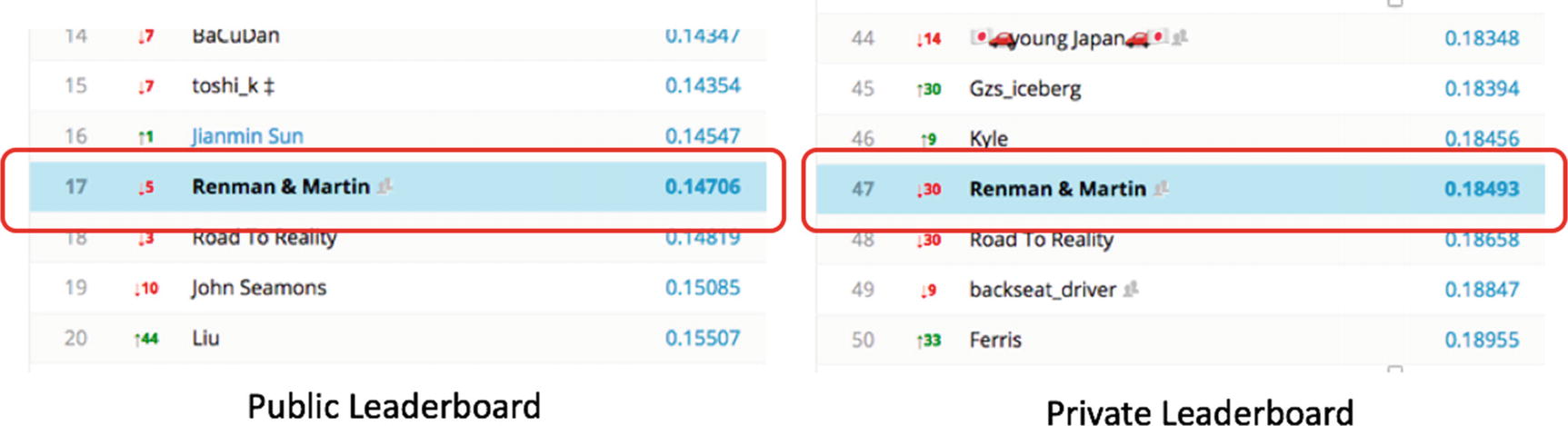

An intuition for the problem can typically be seen in data science competitions and hackathons, such as those on Kaggle (www.kaggle.com). The public leaderboard is not always the same as the private leaderboard. Typically, the competition organizer does not tell users which rows of the test dataset belong to the public leaderboard and which belong to the private leaderboard. Essentially, a randomly selected subset of the test dataset goes to the public leaderboard and the rest goes to the private leaderboard.

One can think of the private leaderboard as a test dataset whose accuracy is not known to the user, whereas with the public leaderboard the user is told the accuracy of the model.
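The mechanics of the public/private split can be sketched as follows. Everything here is hypothetical: 1,000 test rows, a submission that happens to be right on each row with probability 0.8, and a 30/70 public/private split (competitions choose their own proportions). It only illustrates that one set of predictions is scored on two disjoint random subsets of the same test set, so the two scores can differ.

```python
import random

rng = random.Random(0)  # fixed seed so the sketch is reproducible

# Hypothetical per-row correctness of one submission on 1,000 test rows.
n_rows = 1000
correct = [rng.random() < 0.8 for _ in range(n_rows)]

# The organizer randomly assigns rows to the public board (30% is an assumption);
# the remaining rows form the private board, whose score stays hidden until the end.
rows = list(range(n_rows))
rng.shuffle(rows)
public_rows, private_rows = rows[:300], rows[300:]


def accuracy(row_ids):
    return sum(correct[r] for r in row_ids) / len(row_ids)


print(f"public accuracy:  {accuracy(public_rows):.3f}")
print(f"private accuracy: {accuracy(private_rows):.3f}")
```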

Potentially, people overfit to the public leaderboard, and the private leaderboard might be a slightly different dataset that is not highly representative of the public leaderboard's dataset.

The problem can be seen in Figure 1-3.

Figure 1-3

The problem illustrated

In this case, notice that a user moved down from rank 17 on the public leaderboard to rank 47 on the private leaderboard. Cross-validation is a technique that helps avoid this problem. Let's go through the workings in detail.
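Cross-validation comes in several variants; one common variant, k-fold cross-validation, can be sketched as a way of generating index splits. The helper name `k_fold_indices`, the shuffle-then-slice approach, and k = 5 are illustrative assumptions. Each row serves as validation data in exactly one fold and as training data in the other k - 1 folds, so the model is never judged on a single, possibly unrepresentative, holdout.

```python
import random


def k_fold_indices(n_rows, k, seed=0):
    """Yield (train_idx, valid_idx) pairs for k-fold cross-validation."""
    idx = list(range(n_rows))
    random.Random(seed).shuffle(idx)  # shuffle once so folds are random
    # Spread any remainder over the first folds so sizes differ by at most 1.
    fold_sizes = [n_rows // k + (1 if f < n_rows % k else 0) for f in range(k)]
    start = 0
    for size in fold_sizes:
        valid = idx[start:start + size]
        train = idx[:start] + idx[start + size:]
        yield train, valid
        start += size


for fold, (train, valid) in enumerate(k_fold_indices(n_rows=10, k=5)):
    print(f"fold {fold}: validate on {sorted(valid)}, train on {len(train)} rows")
```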

If we only have a training and a testing dataset, and the testing dataset must remain unseen by the model, we cannot find the combination of hyper-parameters that maximizes the model's accuracy on unseen data unless we have a third dataset. (A hyper-parameter can be thought of as a knob that we turn to improve our model's accuracy.) The validation dataset is this third dataset: it is used to see how accurate the model is as the hyper-parameters are changed. Typically, of all the data points in a dataset, 60% are used for training, 20% for validation, and the remaining 20% for testing.
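The 60/20/20 split described above can be sketched as a random partition of row indices. The proportions come from the text; the helper name `train_valid_test_split` and the seed are illustrative.

```python
import random


def train_valid_test_split(rows, train_frac=0.6, valid_frac=0.2, seed=42):
    """Randomly partition rows into training, validation, and test subsets."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    n_train = round(len(shuffled) * train_frac)
    n_valid = round(len(shuffled) * valid_frac)
    train = shuffled[:n_train]
    valid = shuffled[n_train:n_train + n_valid]
    test = shuffled[n_train + n_valid:]  # whatever remains (about 20%)
    return train, valid, test


train, valid, test = train_valid_test_split(range(100))
print(len(train), len(valid), len(test))  # 60 20 20
```

Shuffling before slicing matters: if the rows are ordered (for example, by date or customer ID), slicing without shuffling would give subsets that are systematically different from one another.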

Another way to see the need for a validation dataset: assume that you are building a model to predict whether a customer is likely to churn in the next two months. Most of the dataset will be used to train the model, and the rest can be used to test it. But most of the techniques we will deal with in subsequent chapters involve hyper-parameters.

As we change the hyper-parameters, the model's accuracy can vary quite a bit, but unless there is another dataset, we cannot ascertain whether accuracy is improving. Here's why:

We cannot test a model's accuracy on the dataset on which it is trained.

We cannot use the test dataset accuracy to finalize the ideal hyper-parameters, because the test dataset is supposed to remain unseen by the model.

Hence the need for a third dataset: the validation dataset.
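To see how the three datasets divide the work, here is a minimal sketch that tunes one hyper-parameter, the number of neighbors k in a k-nearest-neighbors regressor (a technique chosen purely for illustration), on invented one-dimensional data. Candidate values of k are compared on the validation set only; the test set is used once, after k has been chosen.

```python
import random
from statistics import mean

rng = random.Random(1)

# Hypothetical data: y = x^2 plus Gaussian noise, for x in [0, 10).
xs = [rng.uniform(0, 10) for _ in range(100)]
data = [(x, x * x + rng.gauss(0, 5)) for x in xs]
train, valid, test = data[:60], data[60:80], data[80:]  # 60/20/20 split


def knn_predict(train_rows, x, k):
    """Average the targets of the k training points nearest to x."""
    nearest = sorted(train_rows, key=lambda row: abs(row[0] - x))[:k]
    return mean(y for _, y in nearest)


def mse(rows, k):
    """Mean squared error on `rows` of a k-NN model built from the training set."""
    return mean((knn_predict(train, x, k) - y) ** 2 for x, y in rows)


# Hyper-parameter search: each candidate k is scored on the validation set.
candidates = [1, 3, 5, 10, 30]
valid_errors = {k: mse(valid, k) for k in candidates}
best_k = min(valid_errors, key=valid_errors.get)

# Only the chosen model is evaluated on the held-out test set.
print(f"best k on validation: {best_k}")
print(f"test MSE with k={best_k}: {mse(test, best_k):.2f}")
```

Because the test set played no role in picking k, its error remains an honest estimate of performance on future data.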

Measures of Accuracy

In a typical linear regression (where continuous values are predicted), there are a couple of ways of measuring the error of a model. Error is typically measured on the testing dataset, because measuring error on the training dataset (the dataset a model is built on) is misleading: the model has already seen those data points, so testing accuracy on the training dataset alone tells us nothing about accuracy on a future dataset. That's why error is always measured on a dataset that is not used to build the model.
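As a sketch of measuring error on held-out data, the snippet below computes three widely used regression error measures: mean squared error (MSE), root mean squared error (RMSE), and mean absolute error (MAE). These are common choices, not necessarily the exact set discussed next; the actual and predicted values are invented test-set numbers.

```python
import math

# Hypothetical test-set targets and a model's predictions for them.
actual = [3.0, 5.0, 7.0]
predicted = [2.0, 5.0, 9.0]

errors = [p - a for p, a in zip(predicted, actual)]
mse = sum(e * e for e in errors) / len(errors)   # mean squared error
rmse = math.sqrt(mse)                            # same units as the target
mae = sum(abs(e) for e in errors) / len(errors)  # mean absolute error

print(f"MSE={mse:.3f}  RMSE={rmse:.3f}  MAE={mae:.3f}")
```

Squaring makes MSE and RMSE penalize large misses more heavily than MAE does: the single error of 2 contributes 4 of the 5 units of squared error here.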