1. Introduction

This book describes algorithms for image processing such as noise reduction (including the reduction of impulse noise), contrast enhancement, shading correction, edge detection, and many others. The source code of the projects implementing these algorithms in the C# programming language is available on the book's companion website. The projects are Windows Forms projects rather than Microsoft Foundation Class Library (MFC) projects. The controls and graphics in these projects are implemented by simple, easily understandable methods. I chose this way of implementing controls and graphics rather than one based on MFC because the integrated development environment (IDE) supporting MFC is expensive. Besides that, software using MFC is rather complicated: an MFC project contains many different files, and the user is largely unable to understand the purpose of these files. In contrast, Windows Forms and its tools are free and easier to understand, and they provide controls and graphics similar to those of MFC.

To process images quickly, we convert objects of the class Bitmap, which is standard in Windows Forms, into objects of our class CImage, whose methods are fast because they access the array of pixels directly. The standard way of working with Bitmap uses either the relatively slow methods GetPixel and SetPixel, or methods based on LockBits, which are fast but not usable for indexed images.

The class CImage is rather simple: it contains the pixel array Grid, the fields width, height, and nBits (the number of bits per pixel), and the methods used in the current project. Here is the definition of our class CImage.

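```csharp
using System;

class CImage
{
  public byte[] Grid;              // pixel data: nBits/8 bytes per pixel
  public int width, height, nBits; // size in pixels; bits per pixel

  public CImage() { } // default constructor

  // Creates an empty image of nx x ny pixels with nbits bits per pixel
  // (nbits is expected to be a multiple of 8, e.g., 8 or 24).
  public CImage(int nx, int ny, int nbits)
  {
    width = nx;
    height = ny;
    nBits = nbits;
    Grid = new byte[width * height * (nBits / 8)];
  }

  // Creates an image of nx x ny pixels and copies its pixel data from img.
  public CImage(int nx, int ny, int nbits, byte[] img)
  {
    width = nx;
    height = ny;
    nBits = nbits;
    Grid = new byte[width * height * (nBits / 8)];
    Array.Copy(img, Grid, Grid.Length); // copies exactly Grid.Length bytes
  }
} //*********************** end of class CImage *****************
```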

The other methods of the class CImage are described together with the projects that use them.
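Because Grid is a flat byte array, a pixel can be read or written by computing its index directly, which is what makes this approach faster than calling Bitmap.GetPixel and SetPixel. The sketch below assumes a row-major layout without row padding and with nBits/8 consecutive bytes per pixel; this is consistent with the array size allocated by the constructors, but the class PixelAccess and its methods are hypothetical illustrations, not part of the author's CImage.

```csharp
using System;

// Hypothetical helpers illustrating direct pixel access in a Grid-like array.
// Assumed layout: row-major, no row padding, bytesPerPixel bytes per pixel.
static class PixelAccess
{
    // Index of the first byte of pixel (x, y).
    public static int Index(int x, int y, int width, int bytesPerPixel)
    {
        return bytesPerPixel * (x + width * y);
    }

    // Reads channel c (use c = 0 for gray images) of pixel (x, y).
    public static byte Get(byte[] grid, int x, int y, int c, int width, int bytesPerPixel)
    {
        return grid[Index(x, y, width, bytesPerPixel) + c];
    }

    // Writes channel c of pixel (x, y).
    public static void Set(byte[] grid, int x, int y, int c, int width, int bytesPerPixel, byte value)
    {
        grid[Index(x, y, width, bytesPerPixel) + c] = value;
    }
}
```

For example, in a 24-bit image (3 bytes per pixel) that is 4 pixels wide, pixel (1, 2) starts at index 3 · (1 + 4 · 2) = 27, so its third channel is stored at index 29.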

2. Noise Reduction

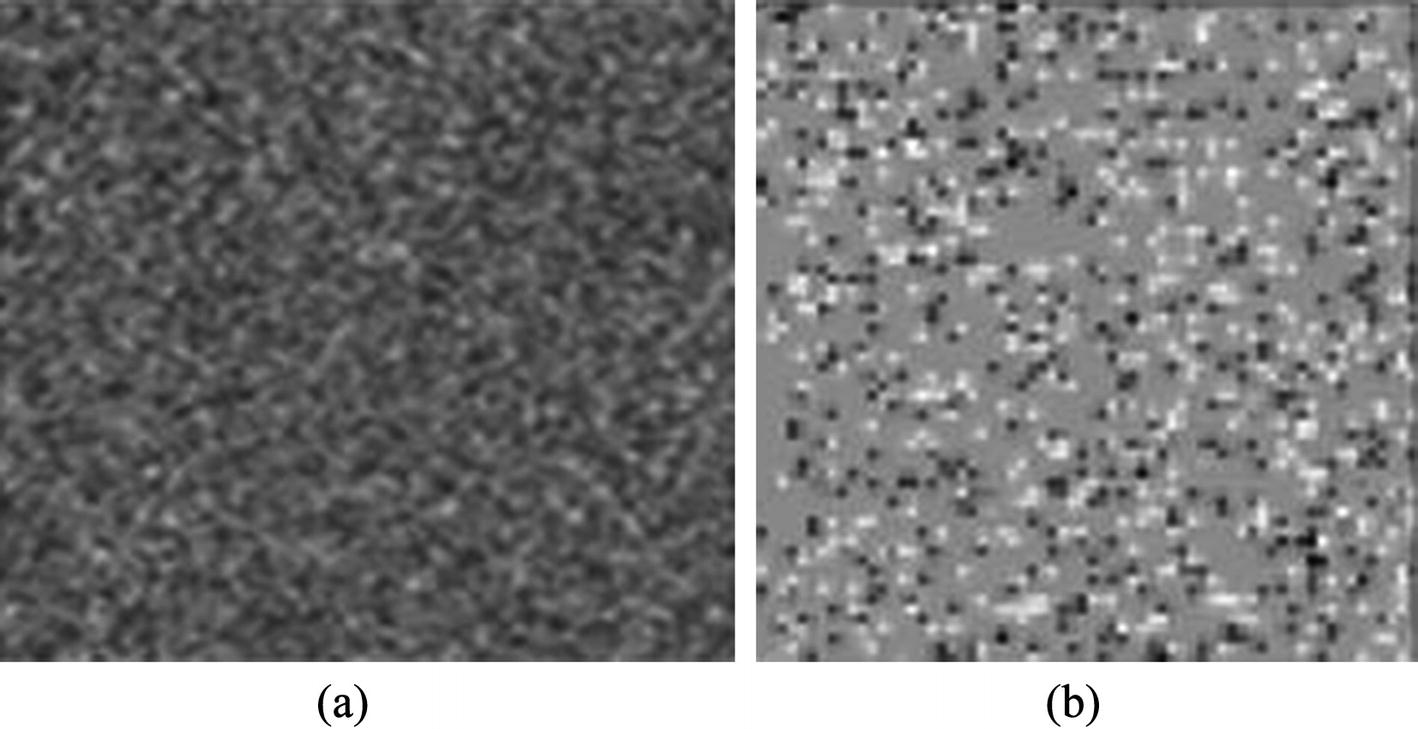

Digital images are often distorted by random errors, usually referred to as noise. There are two primary kinds of noise: Gaussian noise and impulse noise (see Figure 2-1). Gaussian noise is statistical noise whose values approximately follow a Gaussian (normal) probability distribution. It arises mainly during image acquisition (e.g., in the sensor) and can be caused by poor illumination or by a high sensor temperature. It comes from many natural sources, such as the thermal vibrations of atoms in conductors, referred to as thermal noise. Gaussian noise affects all pixels of the image.
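To experiment with the filters of this chapter, it helps to be able to produce Gaussian noise on purpose. The following sketch, which is not taken from the book's projects, adds zero-mean Gaussian noise with standard deviation sigma to every pixel of an 8-bit gray image stored in a byte array. The normal samples are generated with the Box-Muller transform; the class GaussianNoise and its method names are hypothetical.

```csharp
using System;

// Hypothetical helper: adds zero-mean Gaussian noise to an 8-bit gray image
// stored as a byte array. Not part of the book's projects.
static class GaussianNoise
{
    // Returns one sample of a standard normal variable (Box-Muller transform).
    static double StandardNormal(Random rng)
    {
        double u1 = 1.0 - rng.NextDouble(); // in (0, 1], so Log(u1) is finite
        double u2 = rng.NextDouble();
        return Math.Sqrt(-2.0 * Math.Log(u1)) * Math.Cos(2.0 * Math.PI * u2);
    }

    // Every pixel receives its own noise sample: Gaussian noise affects all pixels.
    public static byte[] AddGaussianNoise(byte[] gray, double sigma, int seed)
    {
        var rng = new Random(seed);
        var result = new byte[gray.Length];
        for (int i = 0; i < gray.Length; i++)
        {
            double v = gray[i] + sigma * StandardNormal(rng);
            result[i] = (byte)Math.Clamp(Math.Round(v), 0.0, 255.0); // keep within 0..255
        }
        return result;
    }
}
```

Clamping to the range 0..255 slightly distorts the distribution for pixels close to black or white; for mid-gray images and moderate sigma the effect is negligible.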

Impulse noise, also called salt-and-pepper noise, appears as sparsely scattered light and dark pixels. It is caused by pulse distortions, such as those produced by electric welding near the device recording the image, or by improper storage of old photographs. It affects only a small fraction of the pixels.
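Impulse noise can be simulated in a similar way. In this sketch (a hypothetical helper, not from the book's projects) each pixel of an 8-bit gray image is hit with probability fraction, and a hit pixel becomes either black (0) or white (255) with equal probability; all other pixels keep their values.

```csharp
using System;

// Hypothetical helper: corrupts a fraction of the pixels of an 8-bit gray
// image with impulse (salt-and-pepper) noise. Not part of the book's projects.
static class ImpulseNoise
{
    public static byte[] AddSaltAndPepper(byte[] gray, double fraction, int seed)
    {
        var rng = new Random(seed);
        var result = (byte[])gray.Clone(); // the input image is left unchanged
        for (int i = 0; i < result.Length; i++)
        {
            if (rng.NextDouble() < fraction) // only a small part of the pixels is hit
                result[i] = rng.Next(2) == 0 ? (byte)0 : (byte)255; // dark or light impulse
        }
        return result;
    }
}
```

With fraction = 0.05, about 5% of the pixels are replaced, which matches the description of impulse noise as affecting only a small part of the image.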

Figure 2-1. Examples of noise: (a) Gaussian noise; (b) impulse noise

The Simplest Filter

We first consider ways of reducing the intensity of Gaussian noise. The algorithm for reducing the intensity of impulse noise is considered later in this chapter.

The most efficient method of reducing the intensity of Gaussian noise is to replace the lightness of a pixel P by the average lightness of a small set of pixels in the neighborhood of P. This method is based on a fact from the theory of random variables: the standard deviation of the average of $N$ independent, identically distributed random values is $\sqrt{N}$ times smaller than the standard deviation of a single value. The method performs a two-dimensional convolution of the image with a mask, which is an array of weights arranged in a square of $W \times W$ pixels with the current pixel P in the middle of the square. Source code for this filter is presented later in this chapter. The method has two drawbacks: it is rather slow, because it uses $W \times W$ additions for each pixel of the image, and it blurs the image. It transforms sharp edges at the boundaries of approximately homogeneous regions into ramps, where the lightness changes linearly within a stripe about $W$ pixels wide. The first drawback is overcome by the fast averaging filter. The second drawback, however, is so serious that it rules out the averaging filter for the purpose of noise removal. Averaging filters are nevertheless important for improving images with shading (i.e., images of nonuniformly illuminated objects), as we will see later. For noise removal I propose a different filter.
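The blurring of edges can already be seen in one dimension. This sketch (a hypothetical helper, 1-D for brevity) averages a row of gray values with a window of $W = 2h + 1$ pixels, skipping positions outside the row; applied to an ideal step, it produces a linear ramp. A straight vertical edge in a 2-D image is blurred in the same way by the square window, because every row of such an image is the same step.

```csharp
using System;

// Illustration (1-D for brevity): averaging a row of gray values with a
// window of W = 2h + 1 pixels turns a sharp step into a linear ramp.
static class EdgeBlur
{
    public static int[] MovingAverage(int[] row, int h)
    {
        var result = new int[row.Length];
        for (int x = 0; x < row.Length; x++)
        {
            int sum = 0, n = 0;
            for (int xx = -h; xx <= h; xx++)
            {
                if (x + xx >= 0 && x + xx < row.Length) // skip positions outside the row
                {
                    sum += row[x + xx];
                    n++;
                }
            }
            result[x] = (sum + n / 2) / n; // rounded mean of the pixels inside the window
        }
        return result;
    }
}
```

For a row of six 0s followed by six 100s and h = 2 (W = 5), the result is 0 0 0 0 20 40 60 80 100 100 100 100: the W − 1 = 4 intermediate values form a ramp in place of the sharp edge.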

First, let us describe the simplest averaging filter. I present the source code of this simple method, which readers can use in their own programs. In this code, as well as in many other code examples, we use certain classes, which are defined in the next section.

The Simplest Averaging Filter

The nonweighted averaging filter calculates the mean gray value in a square window of $W \times W$ pixels that glides over the image. The greater the window size $W$, the stronger the suppression of Gaussian noise: the filter decreases the noise by the factor $W$, because the window contains $N = W^2$ pixels and $\sqrt{N} = W$. For the sake of symmetry, $W$ is usually an odd integer, $W = 2h + 1$. The coordinates $(x + xx, y + yy)$ of the pixels inside the window vary symmetrically around the current center pixel $(x, y)$ in the intervals $-h \le xx \le +h$ and $-h \le yy \le +h$, with $h = 1, 2, 3, 4, \dots$
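The claim that a $W \times W$ window reduces the noise by the factor $W$ can be checked numerically. The sketch below, an illustration that is not part of the book's code, draws groups of $N = W^2$ independent zero-mean noise values, averages each group, and returns the ratio of the standard deviation of single values to that of the averages. The noise is uniformly distributed here, since the $\sqrt{N}$ rule holds for any independent, identically distributed values with finite variance; the class and method names are hypothetical.

```csharp
using System;

// Empirical check (not from the book): the mean of N = W*W independent noise
// values has a standard deviation about sqrt(N) = W times smaller.
static class NoiseAveraging
{
    static double StdDev(double[] v)
    {
        double mean = 0;
        foreach (double a in v) mean += a;
        mean /= v.Length;
        double s = 0;
        foreach (double a in v) s += (a - mean) * (a - mean);
        return Math.Sqrt(s / v.Length);
    }

    // Returns (std of single values) / (std of averages over W*W values).
    public static double ReductionFactor(int W, int trials, int seed)
    {
        var rng = new Random(seed);
        int N = W * W;
        var single = new double[trials * N];
        var averages = new double[trials];
        for (int t = 0; t < trials; t++)
        {
            double sum = 0;
            for (int k = 0; k < N; k++)
            {
                double noise = rng.NextDouble() - 0.5; // independent, zero-mean noise value
                single[t * N + k] = noise;
                sum += noise;
            }
            averages[t] = sum / N;
        }
        return StdDev(single) / StdDev(averages);
    }
}
```

With W = 3 the ratio comes out close to 3, and with W = 5 close to 5; the exact value varies slightly with the random seed and the number of trials.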

Near the border of the image, the window lies partially outside the image. In this case the computation loses its natural symmetry, because only pixels inside the image can be averaged. A reasonable solution to this border problem is to check whether the coordinates $(x + xx, y + yy)$ point outside the image. If they do, the gray value at these coordinates is not added to the sum, and the divisor nS (the number of summed pixels) is not incremented.
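To see how strongly the border affects the divisor nS, the hypothetical helper below counts how many pixels of a $(2h + 1) \times (2h + 1)$ window centered at $(x, y)$ lie inside a width × height image, skipping the other positions in the way just described. It is an illustration only, not part of the book's code.

```csharp
using System;

// Hypothetical helper (illustration only): the value the divisor nS reaches
// when window positions outside the image are skipped.
static class BorderWindow
{
    public static int CountInside(int x, int y, int h, int width, int height)
    {
        int nS = 0;
        for (int yy = -h; yy <= h; yy++)
        {
            if (y + yy < 0 || y + yy >= height) continue; // row outside the image
            for (int xx = -h; xx <= h; xx++)
            {
                if (x + xx < 0 || x + xx >= width) continue; // column outside the image
                nS++;
            }
        }
        return nS;
    }
}
```

For h = 1 the count is 9 in the interior, 6 at a border pixel that is not a corner, and 4 at a corner, so a corner pixel is averaged over fewer than half as many values as an interior pixel.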

An example implementation of the averaging algorithm is presented here. In our code we often use comments consisting of lines of certain symbols: lines of equals signs (=) mark the start and the end of a loop, lines of minus signs (-) mark if statements, and so on. This makes the structure of the code more visible.

The simplest slow version of the algorithm has four nested for loops.

public void Averaging(CImage input, int HalfWidth) // a method of the class CImage

{ int nS, sum;

for (int y=0; y<height; y++) //==================

{ for (int x=0; x<width; x++) //===============

{ nS=sum=0;

for (int yy=-HalfWidth; yy<=HalfWidth; yy++) //=======