ACKNOWLEDGMENTS

You road I enter upon and look around! I believe you are not all that is here;

I believe that much unseen is also here.

WALT WHITMAN, Song of the Open Road

Many friends, colleagues, and family members read some or all of the manuscript. Among them are Alex Bates, Wilson Bell, Mike Burrows, Walt Chromiak, Michael Isard, Alastair MacCormick, Raewyn MacCormick, Nico-letta Marini-Maio, Frank McSherry, Kristine Mitchell, Ilya Mironov, Wendy Pollack, Judith Potter, Cotten Seiler, Helen Takacs, Kunal Talwar, Tim Wahls, Jonathan Waller, Udi Wieder, and Ollie Williams. Suggestions from these readers resulted in a large number of substantial improvements to the manuscript. The comments of two anonymous reviewers also resulted in significant improvements.

Chris Bishop provided encouragement and advice. Tom Mitchell gave permission to use his pictures and source code in .

Vickie Kearn (the book's editor) and her colleagues at Princeton University Press did a wonderful job of incubating the project and bringing it to fruition.

My colleagues in the Department of Mathematics and Computer Science at Dickinson College were a constant source of support and camaraderie.

Michael Isard and Mike Burrows showed me the joy and beauty of computing. Andrew Blake taught me how to be a better scientist.

My wife Kristine was always there and is here still; much unseen is also here.

To all these people I express my deepest gratitude. The book is dedicated, with love, to Kristine.

Introduction: What Are the Extraordinary Ideas Computers Use Every Day?

This is a gift that I havea foolish extravagant spirit, full of forms, figures, shapes, objects, ideas, apprehensions, motions, revolutions.

WILLIAM SHAKESPEARE, Love's Labour's Lost

How were the great ideas of computer science born? Here's a selection:

In the 1930s, before the first digital computer has even been built, a British genius founds the field of computer science, then goes on to prove that certain problems cannot be solved by any computer to be built in the future, no matter how fast, powerful, or cleverly designed.

In 1948, a scientist working at a telephone company publishes a paper that founds the field of information theory. His work will allow computers to transmit a message with perfect accuracy even when most of the data is corrupted by interference.

In 1956, a group of academics attend a conference at Dartmouth with the explicit and audacious goal of founding the field of artificial intelligence. After many spectacular successes and numerous great disappointments, we are still waiting for a truly intelligent computer program to emerge.

In 1969, a researcher at IBM discovers an elegant new way to structure the information in a database. The technique is now used to store and retrieve the information underlying most online transactions.

In 1974, researchers in the British government's lab for secret communications discover a way for computers to communicate securely even when another computer can observe everything that passes between them. The researchers are bound by government secrecybut fortunately, three American professors independently discover and extend this astonishing invention that underlies all secure communication on the internet.

In 1996, two Ph.D. students at Stanford University decide to collaborate on building a web search engine. A few years later, they have created Google, the first digital giant of the internet era.

As we enjoy the astonishing growth of technology in the 21st century, it has become impossible to use a computing devicewhether it be a cluster of the most powerful machines available or the latest, most fashionable handheld devicewithout relying on the fundamental ideas of computer science, all born in the 20th century. Think about it: have you done anything impressive today? Well, the answer depends on your point of view. Have you, perhaps, searched a corpus of billions of documents, picking out the two or three that are most relevant to your needs? Have you stored or transmitted many millions of pieces of information, without making a single mistakedespite the electromagnetic interference that affects all electronic devices? Did you successfully complete an online transaction, even though many thousands of other customers were simultaneously hammering the same server? Did you communicate some confidential information (for example, your credit card number) securely over wires that can be snooped by dozens of other computers? Did you use the magic of compression to reduce a multimegabyte photo down to a more manageable size for sending in an e-mail? Or did you, without even thinking about it, exploit the artificial intelligence in a hand-held device that self-corrects your typing on its tiny keyboard?

Each of these impressive feats relies on the profound discoveries listed earlier. Thus, most computer users employ these ingenious ideas many times every day, often without even realizing it! It is the objective of this book to explain these conceptsthe great ideas of computer science that we use every dayto the widest possible audience. Each concept is explained without assuming any knowledge of computer science.

ALGORITHMS: THE BUILDING BLOCKS OF THE GENIUS AT YOUR FINGERTIPS

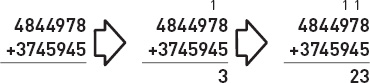

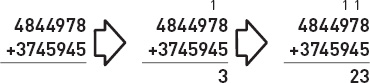

So far, I've been talking about great ideas of computer science, but computer scientists describe many of their important ideas as algorithms. So what's the difference between an idea and an algorithm? What, indeed, is an algorithm? The simplest answer to this question is to say that an algorithm is a precise recipe that specifies the exact sequence of steps required to solve a problem. A great example of this is an algorithm we all learn as children in school: the algorithm for adding two large numbers together. An example is shown above. The algorithm involves a sequence of steps that starts off something like this: First, add the final digits of the two numbers together, write down the final digit of the result, and carry any other digits into the next column on the left; second, add the digits in the next column together, add on any carried digits from the previous columnand so on.

The first two steps in the algorithm for adding two numbers.

Note the almost mechanical feel of the algorithm's steps. This is, in fact, one of the key features of an algorithm: each of the steps must be absolutely precise, requiring no human intuition or guesswork. That way, each of the purely mechanical steps can be programmed into a computer. Another important feature of an algorithm is that it always works, no matter what the inputs. The addition algorithm we learned in school does indeed have this property: no matter what two numbers you try to add together, the algorithm will eventually yield the correct answer. For example, although it would take a rather long time, you could certainly use this algorithm to add two 1000-digit numbers together.

You may be a little curious about this definition of an algorithm as a precise, mechanical recipe. Exactly how precise does the recipe need to be? What fundamental operations are permitted? For example, in the addition algorithm above, is it okay to simply say add the two digits together, or do we have to somehow specify the entire set of addition tables for single-digit numbers? These details might seem innocuous or perhaps even pedantic, but it turns out that nothing could be further from the truth: the real answers to these questions lie right at the heart of computer science and also have connections to philosophy, physics, neuroscience, and genetics. The deep questions about what an algorithm really is all boil down to a proposition known as the

Next page