K. P. Arjun, N. M. Sreenarayanan, K. Sampath Kumar, and R. Viswanathan

Computing is a step-by-step, process-oriented activity carried out to achieve a goal. A computation rarely has a single, simple goal; there may be more than one, and a goal is normally a complex operation processed by a computer. A typical computer consists of hardware and software, and computing can span more than one environment: hardware such as workstations, servers, clients and other intermediate nodes, and software such as workstation and server operating systems and other computing software. Everyday computing includes sending emails, playing games and making phone calls; these are examples of computing in different contexts. Depending on their processing speed and size, computers are categorized into types such as supercomputers, mainframes, minicomputers and microcomputers. Computers in the higher categories generally offer both greater computing power and larger data-storage capacity.

Traditionally, software is developed and executed sequentially: before developing software to solve a large problem, we split the problem into smaller subproblems. The step-by-step procedures for solving these subproblems, often expressed as flowcharts, are called algorithms, and they are executed by the central processing unit (CPU). This is called serial computing, because the main task is divided into a number of small instructions that are executed one by one. Serial computing, however, wastes much of the hardware other than the CPU. The CPU continuously fetches and processes instructions, but each other hardware component involved is busy only while a particular instruction uses it and stays idle the rest of the time.
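As a minimal illustration of serial computing, the Python sketch below splits one large task (summing the squares of 1 to n) into smaller subproblems and has a single worker process them strictly one after another. The function names and the chunking scheme are illustrative assumptions, not part of the original text.

```python
def split_into_subproblems(n, chunk_size):
    """Hypothetical helper: split 1..n into consecutive [start, stop) chunks."""
    return [(start, min(start + chunk_size, n + 1))
            for start in range(1, n + 1, chunk_size)]


def solve_subproblem(bounds):
    """Sum the squares of the integers in [start, stop)."""
    start, stop = bounds
    return sum(i * i for i in range(start, stop))


def serial_sum_of_squares(n, chunk_size=1000):
    """Solve every subproblem one by one on a single processor."""
    total = 0
    for bounds in split_into_subproblems(n, chunk_size):
        # Only one subproblem is being worked on at any moment.
        total += solve_subproblem(bounds)
    return total


print(serial_sum_of_squares(10_000))  # 333383335000
```

While one chunk is being summed, nothing else happens; this idle capacity is exactly what parallel and distributed computing try to exploit.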

To overcome these deficiencies in resource utilization and to improve computing power, computing moved into a new era: parallel and distributed computing. The key idea of distributed computing is to solve larger and more complex computational problems with the help of more than one computational system. The problem is divided into many tasks, each of which is executed on a different computational system, and these systems may be located in different regions.

Distributed computing [] on graphs has also been studied.

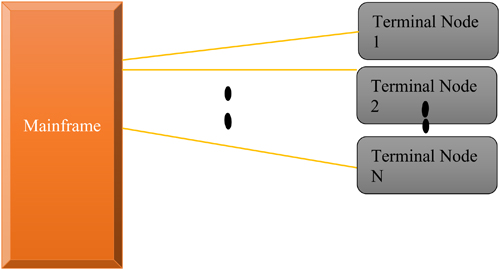

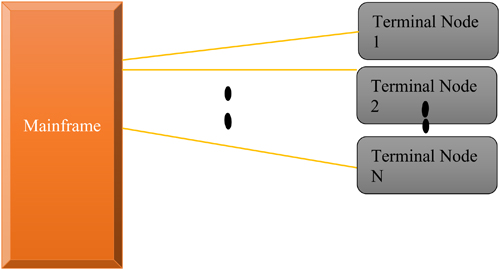

Centralized computing refers to computing performed on a single, centrally located machine. This central server typically has high computing capability and sophisticated software. All other computers are attached to the central machine and communicate with it through terminals. The central machine [] itself controls and manages the peripherals, some of which are physically connected to it and some of which are attached via terminals.

The main advantage of a centralized system is greater security than other types of computing, because processing is done only at the central machine. Every connected machine can access the central machine and process its own tasks through a terminal. If one terminal goes down, the user can log in again from another terminal; all of the user's files are still available under that login, so the user can resume the session and complete the task.

The main disadvantage of a centralized computing system is that all computing and storage take place on the central machine. If that machine fails or crashes, the entire system goes down. This single point of failure makes the service unavailable to every user, which weighs heavily in any evaluation of the system's performance.
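The terminal-and-session behavior and the single point of failure described above can be modelled with a small Python sketch. It assumes that one in-memory `CentralServer` object stands in for the central machine and that `Terminal` objects hold no user data of their own; both class names are hypothetical. Because all files live on the server, a user can switch terminals and resume, but once the server crashes every terminal loses service.

```python
class CentralServerDown(Exception):
    """Raised when the central machine is unavailable."""


class CentralServer:
    """Hypothetical central machine: all processing and storage happen here."""

    def __init__(self):
        self.files = {}       # user -> list of saved lines
        self.running = True

    def save(self, user, line):
        if not self.running:
            raise CentralServerDown("central machine is down")
        self.files.setdefault(user, []).append(line)

    def load(self, user):
        if not self.running:
            raise CentralServerDown("central machine is down")
        return list(self.files.get(user, []))

    def crash(self):
        self.running = False


class Terminal:
    """A terminal only forwards requests; it keeps no user files itself."""

    def __init__(self, server):
        self.server = server
        self.user = None

    def login(self, user):
        self.user = user
        return self.server.load(user)   # resume the user's session

    def write(self, line):
        self.server.save(self.user, line)


server = CentralServer()
t1 = Terminal(server)
t1.login("alice")
t1.write("draft report")

# Terminal t1 fails; alice logs in from another terminal and resumes.
t2 = Terminal(server)
print(t2.login("alice"))  # ['draft report']

# If the central machine crashes, every terminal loses service.
server.crash()
try:
    t2.write("more text")
except CentralServerDown as err:
    print("unavailable:", err)  # unavailable: central machine is down
```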

In the client-server model, the client has minimal computing power; for advanced, high-level computing, the client sends a request to the server. The server processes the request received from the client and sends the response back to the client.
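A minimal request/response exchange of this kind can be sketched with Python's standard `socketserver` and `socket` modules. The assumptions are ours: the server runs on the local machine, a request is a single integer sent as one line of text, and the "high-level computing" is simply squaring that integer. The names `SquareHandler`, `start_server` and `request_square` are hypothetical.

```python
import socket
import socketserver
import threading


class SquareHandler(socketserver.StreamRequestHandler):
    """Server side: read one request line, compute, send the response."""

    def handle(self):
        n = int(self.rfile.readline().strip())
        self.wfile.write(f"{n * n}\n".encode())


def start_server():
    """Start the server on a free local port in a background thread."""
    server = socketserver.ThreadingTCPServer(("127.0.0.1", 0), SquareHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def request_square(address, n):
    """Client side: send the request and wait for the server's response."""
    with socket.create_connection(address) as conn:
        conn.sendall(f"{n}\n".encode())
        with conn.makefile("r") as reply:
            return int(reply.readline())


server = start_server()
print(request_square(server.server_address, 12))  # 144
server.shutdown()
server.server_close()
```

The client does almost no work itself; it only formats the request and reads the reply, which mirrors the division of labor described above.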

Figure 1.1 Centralized computing.

In centralized computing, a powerful, centrally located system provides computing services to all connected nodes. The disadvantage is that all processing power is concentrated in one entity. Alternatively, the load on the central system can be shared by the nodes connected to the network. In decentralized computing [], no single server is responsible for the whole task; instead, the workload is distributed across the computing nodes so that each node carries an equal share of the processing.
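One simple way to share a workload evenly, sketched here in Python under our own assumptions, is to deal tasks out to the nodes round-robin so that every node receives an equal share (to within one task). The function name, node names and task list are hypothetical; real decentralized systems may use other assignment policies.

```python
from collections import defaultdict


def distribute_round_robin(tasks, nodes):
    """Assign tasks to nodes in turn, so no single server does all the work."""
    assignment = defaultdict(list)
    for index, task in enumerate(tasks):
        node = nodes[index % len(nodes)]
        assignment[node].append(task)
    return dict(assignment)


tasks = [f"task-{i}" for i in range(7)]
nodes = ["node-A", "node-B", "node-C"]
for node, assigned in distribute_round_robin(tasks, nodes).items():
    print(node, assigned)
```

With seven tasks and three nodes, node-A receives three tasks (task-0, task-3, task-6) and the other two nodes receive two each.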

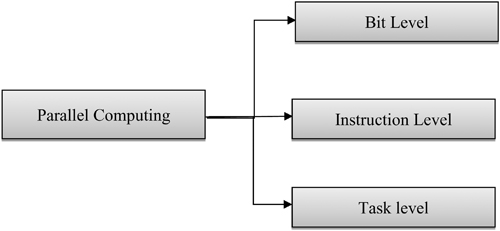

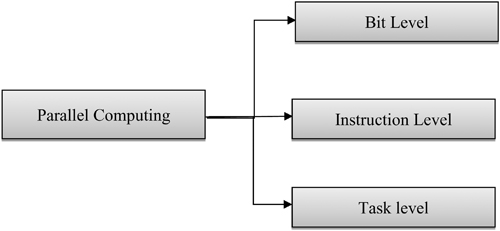

To overcome the deficiencies in resource utilization and to improve computing power, computing moved into another era called parallel computing. The term parallel means that more than one instruction can be executed simultaneously. Parallel computing requires configuring a number of computing engines (normally called processors) together with the related hardware and software.
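The core idea of parallel decomposition, splitting one computation into independent chunks and handing them to several workers at once, can be sketched with Python's `concurrent.futures` module. This is an illustration under our assumptions, not a benchmark. It uses a `ThreadPoolExecutor` so the example runs anywhere; in the standard CPython build, threads do not execute pure-Python bytecode simultaneously, so for CPU-bound work on several processors a `ProcessPoolExecutor` (same `map` interface, module-level worker function, caller under an `if __name__ == "__main__":` guard) is the usual substitute.

```python
from concurrent.futures import ThreadPoolExecutor


def sum_of_squares(bounds):
    """Work unit: sum the squares of the integers in [start, stop)."""
    start, stop = bounds
    return sum(i * i for i in range(start, stop))


def parallel_sum_of_squares(n, workers=4):
    """Split 1..n into one chunk per worker and run the chunks concurrently."""
    if n < 1:
        return 0
    step = -(-n // workers)  # ceiling division, so the chunks cover 1..n
    chunks = [(start, min(start + step, n + 1)) for start in range(1, n + 1, step)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() hands the chunks to the pool's workers; results come back in order
        return sum(pool.map(sum_of_squares, chunks))


print(parallel_sum_of_squares(10_000))  # 333383335000
```

The result must equal that of the serial computation; only the way the work is scheduled changes.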

In serial computing, a main task is divided into a number of small instructions, which the CPU then executes one by one. The main problem with serial processing is that it wastes a large amount of hardware and software resources. The CPU continuously receives and processes instructions, while the other hardware involved remains idle whenever it has no instruction to process.

Parallel computing [] overcomes these deficiencies in resource utilization and improves computing power. Figure 1.2 shows the different levels of parallel computing.

Figure 1.2 Different levels of parallel computing.

[…] is one of the multifaceted problems. Parallel computing can be used to recast real-world scenarios in more convenient forms.

Parallel computing is especially useful for real-world problems in which many complex, independent and unrelated events occur at the same time, for example, galaxy formation, planetary movements, climate change, road traffic and weather.

Fast computing benefits various high-end applications, for example, faster networks, high-speed data transfer, distributed systems and multiprocessor computing [].

The key idea of distributed computing is to solve larger and more complex computational problems with the help of more than one computational system. The problem is divided into many tasks, each of which is executed on a different computational system, and these systems may be located in different regions. The systems, located at different places, communicate with one another over a robust underlying network. Many mechanisms have been adopted for reliable and secure communication, such as message passing, remote procedure calls (RPC) and HTTP.
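Of the mechanisms listed, RPC is easy to demonstrate with Python's standard `xmlrpc` modules, which carry each call over HTTP. The sketch below assumes, for convenience, that both "systems" run on the same machine; in a real deployment they would be separate hosts. One system exposes a hypothetical `count_words` task, and the other invokes it as if it were a local function call.

```python
import threading
from xmlrpc.client import ServerProxy
from xmlrpc.server import SimpleXMLRPCServer


def count_words(text):
    """Hypothetical task executed on the remote computational system."""
    return len(text.split())


def start_rpc_node():
    """Expose count_words over XML-RPC on a free local port."""
    server = SimpleXMLRPCServer(("127.0.0.1", 0), logRequests=False)
    server.register_function(count_words, "count_words")
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


node = start_rpc_node()
host, port = node.server_address
with ServerProxy(f"http://{host}:{port}/") as remote:
    # Looks like a local call, but the work runs inside the RPC server.
    print(remote.count_words("divide the problem into many tasks"))  # 6
node.shutdown()
node.server_close()
```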

Distributed computing can also be described as a set of autonomous computational engines, physically located in different geographical areas, that communicate over a computer network. Each computational engine is called an autonomous system and has its own hardware and software. These systems do not share their hardware or software with systems in other regions, but they communicate continuously by passing messages.

The main idea behind distributed computing is to overcome the limitations of a single computer, such as low processing power, low speed and limited memory. All the computers are connected through a common network. Each computing engine carries out its assigned jobs and communicates with the peer computers connected to the network.
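The message-passing arrangement described above can be sketched in Python with threads standing in for autonomous systems and `queue.Queue` objects standing in for network links. This is an assumption made to keep the example self-contained: each hypothetical node keeps its own private counter and interacts with the coordinator only by sending and receiving messages, never by reading another node's memory. The names `worker_node` and `run_cluster` are illustrative.

```python
import queue
import threading


def worker_node(name, inbox, outbox):
    """Hypothetical autonomous node: private state, communicates only by messages."""
    jobs_done = 0                        # local state, never read by other nodes
    while True:
        job = inbox.get()                # blocking receive
        if job is None:                  # shutdown message
            outbox.put((name, "done", jobs_done))
            return
        jobs_done += 1
        outbox.put((name, "result", job * job))  # send the result back


def run_cluster(jobs, node_count=2):
    """Assign jobs to nodes, then collect the messages they send back."""
    results = queue.Queue()              # stands in for the link back to us
    inboxes = [queue.Queue() for _ in range(node_count)]
    nodes = [
        threading.Thread(target=worker_node, args=(f"node-{i}", inbox, results))
        for i, inbox in enumerate(inboxes)
    ]
    for node in nodes:
        node.start()
    for i, job in enumerate(jobs):
        inboxes[i % node_count].put(job)  # each node receives its assigned jobs
    for inbox in inboxes:
        inbox.put(None)
    for node in nodes:
        node.join()

    squares, jobs_done = [], {}
    while not results.empty():           # safe: every sender has finished
        name, kind, value = results.get()
        if kind == "result":
            squares.append(value)
        else:
            jobs_done[name] = value
    return sorted(squares), jobs_done


squares, jobs_done = run_cluster([1, 2, 3, 4, 5])
print(squares)                    # [1, 4, 9, 16, 25]
print(sorted(jobs_done.items()))  # [('node-0', 3), ('node-1', 2)]
```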
