Short Introduction

Nowadays, more and more companies are switching to a microservices architecture, and this trend leads to an increased I/O load on the entire system and a growing number of connections. Asynchronous code is well suited to developing microservices and helps solve exactly these problems. But not everyone understands how the concept works internally. This small book was written for just one purpose: to explain the concept of asynchrony from beginning to end.

This book is intended primarily for developers who already have some experience writing programs but want to structure and systematize their knowledge of asynchronous programming and of how it is designed. We will go from low-level socket operations to higher levels of abstraction; the last chapter is entirely devoted to asynchronous frameworks in Python, in order to map the theory onto practical implementations.

The code samples in this book are mainly in Python and target the Linux platform.

Chapter 1

Blocking I/O and non-blocking I/O

One way or another, when you have a question about blocking or non-blocking calls, it most commonly means dealing with I/O. The most frequent case in our age of information, microservices, and FaaS is processing requests. Imagine that you, dear reader, are a user of a website, while your browser (or the application in which you're reading these lines) is a client. Somewhere in the depths of amazon.com, there is a server that handles your requests to generate the very lines that you're reading.

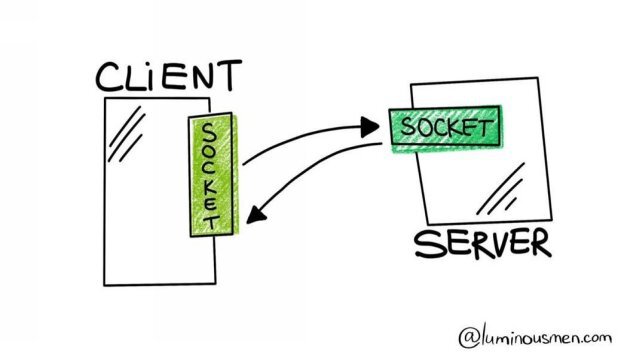

To start an interaction in such client-server communication, the client and the server must first establish a connection with each other. We will not go into the depths of the seven-layer OSI model and the protocol stack involved in this interaction, as all of that can easily be found on the Internet. What we need to understand is that on both sides (client and server) there are special connection points known as sockets. The server binds its socket to an address and port and listens on it for incoming connections; the client creates its own socket and connects it to that address. Once the connection is established, each side reads from and writes to its socket to understand what the other side of the wire is saying.
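To make these roles concrete, here is a minimal sketch using Python's standard `socket` module on the loopback interface. The helper name `open_connection_pair` is hypothetical, and binding to port 0 (letting the operating system pick any free port) is an assumption made so that the example runs anywhere.

```python
import socket


def open_connection_pair():
    """Return (server_side, client_side, listener) connected over loopback TCP."""
    # Server: create a socket, bind it to an address and port, and listen on it.
    listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    listener.bind(("127.0.0.1", 0))  # port 0: let the OS choose a free port
    listener.listen()
    address = listener.getsockname()

    # Client: create its own socket and connect it to the server's address.
    client_side = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    client_side.connect(address)

    # Server: accept() returns a new socket dedicated to this one client.
    server_side, _client_address = listener.accept()
    return server_side, client_side, listener


if __name__ == "__main__":
    server_side, client_side, listener = open_connection_pair()
    client_side.sendall(b"hello")
    print(server_side.recv(1024))
    for sock in (server_side, client_side, listener):
        sock.close()
```

Note that the listening socket only accepts connections; the actual conversation with the client happens over the new socket returned by `accept()`.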

During this exchange, the server does some kind of processing: it handles the request, converts Markdown to HTML, or looks up where the images are.

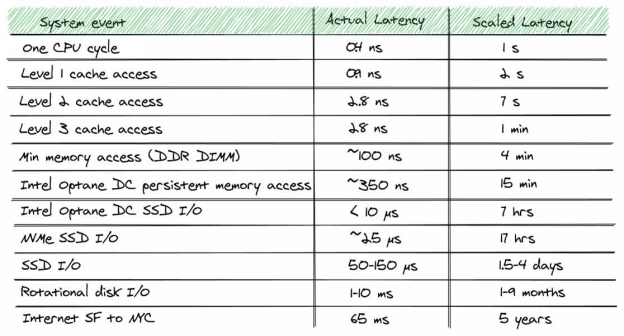

If you compare CPU speed with network speed, the difference is a couple of orders of magnitude. So if an application spends most of its time on I/O, the processor is idle most of the time and I/O becomes the bottleneck. This type of application is called I/O-bound.

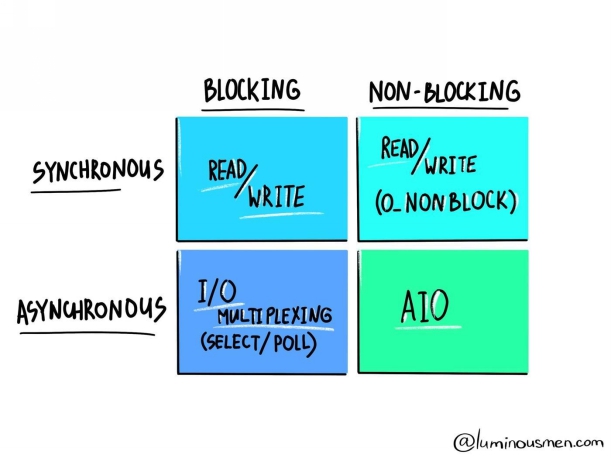
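A quick way to see this on your own machine is to compare wall-clock time with CPU time for a task that mostly waits. In this sketch `time.sleep()` stands in for waiting on the network, which is a simplifying assumption; `simulate_io_wait` is a hypothetical helper name.

```python
import time


def simulate_io_wait(wait_seconds=0.2):
    """Return (wall_time, cpu_time) for a task that only waits."""
    wall_start = time.perf_counter()
    cpu_start = time.process_time()
    time.sleep(wait_seconds)  # stand-in for waiting on I/O: the CPU stays idle
    wall_time = time.perf_counter() - wall_start
    cpu_time = time.process_time() - cpu_start
    return wall_time, cpu_time


if __name__ == "__main__":
    wall, cpu = simulate_io_wait()
    print(f"wall clock: {wall:.3f} s, CPU: {cpu:.3f} s")
```

The wall-clock time ends up close to the requested wait, while the CPU time stays near zero: the process spent the interval waiting, not computing.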

There are two ways to organize I/O: blocking and non-blocking.

There are also two types of I/O operations: synchronous and asynchronous.

Together, they make up the possible I/O models.

Each of these I/O models has usage patterns that are advantageous for particular applications. Here I will demonstrate the difference between the two ways of organizing I/O.

Blocking I/O

With blocking I/O, when a client makes a connection request to the server, the socket handling that connection and the thread that reads from it are blocked until some data appears. Incoming data is placed in the network buffer until it has all been read and is ready for processing. Until the operation completes, the server can do nothing but wait.

The immediate conclusion is that a single thread cannot serve more than one connection at a time. By default, TCP sockets work in blocking mode.

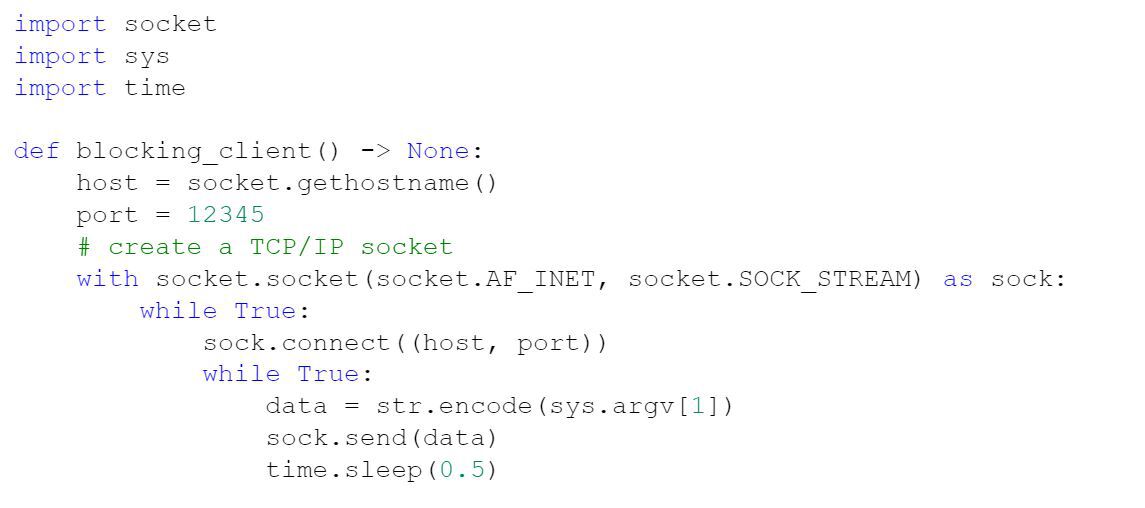
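Both points can be checked with the standard library. The sketch below uses `socket.socketpair()`, which on Linux returns two connected Unix-domain stream sockets rather than TCP sockets; blocking works the same way for them. The helper names and the 0.2-second delay are illustrative assumptions.

```python
import socket
import threading
import time


def default_tcp_is_blocking():
    """Report whether a freshly created TCP socket is in blocking mode."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        return sock.getblocking()


def measure_blocking_recv(delay=0.2):
    """Return (data, seconds_waited) for a recv() whose data arrives late."""
    reader, writer = socket.socketpair()

    def send_later():
        time.sleep(delay)  # the peer is slow to send anything
        writer.sendall(b"data")

    sender = threading.Thread(target=send_later)
    sender.start()
    start = time.perf_counter()
    data = reader.recv(1024)  # this thread is stuck here until data appears
    waited = time.perf_counter() - start
    sender.join()
    reader.close()
    writer.close()
    return data, waited


if __name__ == "__main__":
    print(default_tcp_is_blocking())
    print(measure_blocking_recv())
```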

A simple example in Python, starting with the client:

Here we send a message to the server at 50 ms intervals in an endless loop. Imagine that this client-server communication consists of downloading data and takes some time to finish.
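A minimal sketch of a client along these lines, assuming the server listens on `127.0.0.1` port `8000` (both assumed values) and that the message is passed on the command line. `run_client` and its `count` parameter are hypothetical: `count=None` keeps the endless loop described above, while a number makes the loop stop.

```python
import socket
import sys
import time

HOST, PORT = "127.0.0.1", 8000  # assumed server address


def run_client(message, host=HOST, port=PORT, interval=0.05, count=None):
    """Send `message` to the server every `interval` seconds (50 ms by default).

    count=None loops forever; otherwise stop after `count` messages.
    """
    sent = 0
    with socket.create_connection((host, port)) as sock:
        while count is None or sent < count:
            sock.sendall(message.encode())  # blocks until the kernel accepts all the data
            sent += 1
            time.sleep(interval)
    return sent


if __name__ == "__main__":
    run_client(sys.argv[1] if len(sys.argv) > 1 else "hello")
```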

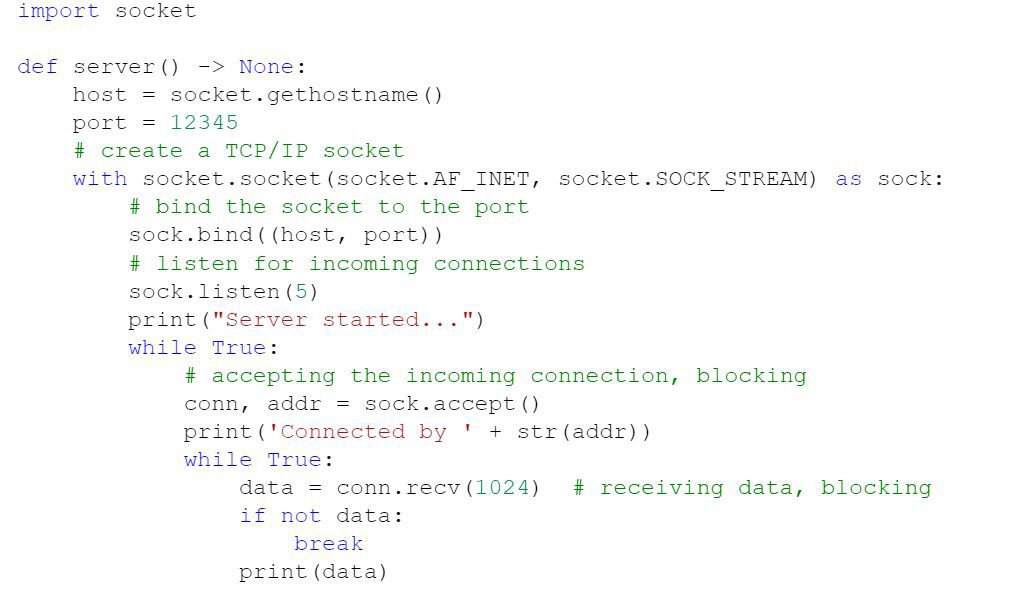

And the server:

Here the server simply listens on the socket and accepts incoming connections. Then it tries to receive data from each connection.
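A blocking server of this kind could be sketched as follows. The address is an assumption, `make_server` and `run_blocking_server` are hypothetical names, and `max_connections` exists only so the loop can be stopped; with the default `None` it runs forever. Received messages are passed to `handle`, which prints them by default.

```python
import socket

HOST, PORT = "127.0.0.1", 8000  # assumed listening address


def make_server(host=HOST, port=PORT):
    """Create a TCP socket that listens on (host, port)."""
    server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    server.bind((host, port))
    server.listen()
    return server


def run_blocking_server(server, max_connections=None, handle=print):
    """Serve clients strictly one after another in a single thread."""
    served = 0
    while max_connections is None or served < max_connections:
        conn, _addr = server.accept()  # blocks until a client connects
        with conn:
            while True:
                data = conn.recv(1024)  # blocks until this client sends data
                if not data:  # b"" means the client closed the connection
                    break
                handle(data.decode())
        served += 1  # only now can the next client be accepted


if __name__ == "__main__":
    with make_server() as server:
        run_blocking_server(server)
```

While one client stays connected, the inner loop never returns to `accept()`, so a second client can connect (the kernel queues the connection) but its messages are not read until the first client disconnects.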

I will run these applications in separate terminal windows, starting several clients like this:

And the server like this:

With the code above, a single client connection effectively blocks the server! If you start another client with a different message, you will not see that message. I highly recommend that you play with this example to understand what is happening.

What is going on here?

The client's send() call copies its data into the kernel's socket buffer, and the data keeps arriving in the receive buffer on the server side. When the server makes the syscall for reading, the application blocks and control switches to the kernel. Once data is available, the kernel copies it into the user-space buffer and wakes the process up so it can handle that portion of data. When the buffer is empty again, the next read blocks until the next portion of data arrives.
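The buffering part of this description can be observed directly. In this sketch (the helper name is hypothetical, and a `socketpair()` stands in for a real client and server), `send()` returns as soon as the kernel has taken the bytes, the first `recv()` copies them into user space, and a second `recv()` on the now-empty buffer blocks; a 0.1-second timeout is set only so the example terminates.

```python
import socket


def show_kernel_buffering():
    """Return (bytes_sent, first_read, second_read)."""
    client, server = socket.socketpair()
    try:
        sent = client.send(b"portion")  # returns once the kernel has buffered the bytes
        first = server.recv(1024)  # the kernel copies buffered data to user space
        server.settimeout(0.1)  # bound the wait so the demo ends
        try:
            second = server.recv(1024)  # buffer is empty: the call blocks
        except socket.timeout:
            second = None  # nothing arrived within 0.1 s
        return sent, first, second
    finally:
        client.close()
        server.close()


if __name__ == "__main__":
    print(show_kernel_buffering())  # (7, b'portion', None)
```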

To handle two clients with this approach, we need several threads, that is, a new thread for each client connection. We will get back to that soon.
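A thread-per-connection version of the server might look like the sketch below. The names `make_server`, `handle_client` and `run_threaded_server`, the assumed address, and the `max_connections` stop condition are all hypothetical. Each accepted client gets its own thread, so a `recv()` that blocks only stalls that client's thread.

```python
import socket
import threading

HOST, PORT = "127.0.0.1", 8000  # assumed listening address


def make_server(host=HOST, port=PORT):
    """Create a TCP socket that listens on (host, port)."""
    server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    server.bind((host, port))
    server.listen()
    return server


def handle_client(conn, handle):
    """Read from one client until it disconnects."""
    with conn:
        while True:
            data = conn.recv(1024)  # blocks only this client's thread
            if not data:
                break
            handle(data.decode())


def run_threaded_server(server, max_connections=None, handle=print):
    """Accept clients and start a new thread for each connection."""
    accepted = 0
    while max_connections is None or accepted < max_connections:
        conn, _addr = server.accept()
        worker = threading.Thread(target=handle_client, args=(conn, handle), daemon=True)
        worker.start()
        accepted += 1


if __name__ == "__main__":
    with make_server() as server:
        run_threaded_server(server)
```

The cost is one operating-system thread, with its own stack and scheduling overhead, per connection.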

Non-blocking I/O

However, there is also a second option: non-blocking I/O. The difference is obvious from the name: instead of blocking, every operation returns immediately. Non-blocking I/O means that the call completes right away, whether or not the requested I/O could be performed; if it could not, the operation has to be retried at some later point.

By setting a socket to non-blocking mode, you can effectively poll it. If you try to read from a non-blocking socket that has no data, the call fails immediately with the error code EAGAIN or EWOULDBLOCK.
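In Python this error code surfaces as a `BlockingIOError` exception whose `errno` is `EAGAIN` (on Linux, `EWOULDBLOCK` has the same value). A minimal sketch, with a hypothetical helper name and a `socketpair()` standing in for a network connection:

```python
import errno
import socket


def try_nonblocking_read():
    """Return (got_eagain, data_after_send)."""
    reader, writer = socket.socketpair()
    reader.setblocking(False)
    try:
        try:
            reader.recv(1024)  # no data yet: returns immediately with an error
            got_eagain = False
        except BlockingIOError as exc:
            got_eagain = exc.errno in (errno.EAGAIN, errno.EWOULDBLOCK)
        writer.sendall(b"ready")
        data = reader.recv(1024)  # data is buffered now, so the read succeeds
        return got_eagain, data
    finally:
        reader.close()
        writer.close()


if __name__ == "__main__":
    print(try_nonblocking_read())  # (True, b'ready')
```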

However, if your program keeps polling the socket for data in a tight loop, it will consume expensive CPU time. This kind of busy polling is a bad idea and can be extremely inefficient: in most cases the application simply spins until data becomes available instead of doing useful work. A more elegant way to check whether data is available for reading is to use select().

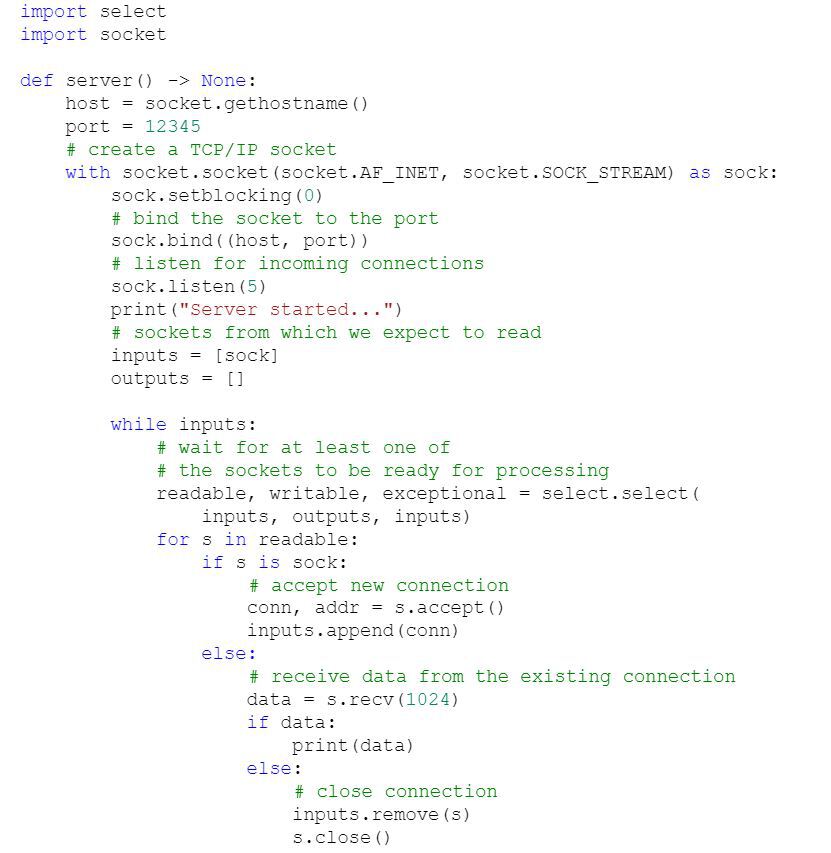
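`select.select()` takes lists of sockets to watch plus an optional timeout, and puts the process to sleep until one of the sockets is ready or the timeout expires, so no CPU time is burned while waiting. A minimal sketch with a hypothetical helper `wait_readable`:

```python
import select
import socket


def wait_readable(sock, timeout):
    """Sleep until `sock` has data to read or `timeout` seconds pass."""
    readable, _writable, _errors = select.select([sock], [], [], timeout)
    return sock in readable


if __name__ == "__main__":
    reader, writer = socket.socketpair()
    print(wait_readable(reader, 0.1))  # False: nothing to read, select() timed out
    writer.sendall(b"ping")
    print(wait_readable(reader, 0.1))  # True: recv() would now return immediately
    reader.close()
    writer.close()
```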

Let's go back to our example and make some changes to the server:
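One plausible shape for such a server combines non-blocking sockets with `select()` in a single thread. This is a sketch under assumptions: `make_server`, `run_select_server`, the address, and the `max_closed` stop condition are hypothetical, and with the default `max_closed=None` the loop runs forever.

```python
import select
import socket

HOST, PORT = "127.0.0.1", 8000  # assumed listening address


def make_server(host=HOST, port=PORT):
    """Create a TCP socket that listens on (host, port)."""
    server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    server.bind((host, port))
    server.listen()
    return server


def run_select_server(server, handle=print, max_closed=None):
    """Serve many clients in one thread, stopping after `max_closed` disconnects."""
    server.setblocking(False)
    inputs = [server]  # sockets we want select() to watch for reading
    closed = 0
    while max_closed is None or closed < max_closed:
        readable, _, _ = select.select(inputs, [], [])  # sleep until something is ready
        for sock in readable:
            if sock is server:
                conn, _addr = server.accept()  # a client is waiting, so this returns at once
                conn.setblocking(False)
                inputs.append(conn)
            else:
                data = sock.recv(1024)  # select() reported data (or EOF) is ready
                if data:
                    handle(data.decode())
                else:  # empty read: the client disconnected
                    inputs.remove(sock)
                    sock.close()
                    closed += 1
    for sock in inputs[1:]:  # close any clients still connected
        sock.close()


if __name__ == "__main__":
    with make_server() as server:
        run_select_server(server)
```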

Now, if you run this code with more than one client, you will see from the printed messages that a single client no longer blocks the server and that messages from every client are handled. Again, I suggest that you try this example yourself.

What's going on here?

Here the server does not wait for all the data to be written to the buffer. Once a socket is made non-blocking by calling setblocking(0), it never waits for an operation to complete, so a call to recv returns to the main thread immediately. The main mechanical difference is that send, recv, connect and accept can return without doing anything at all.
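Two of these cases are easy to reproduce: `accept()` on a non-blocking listening socket with no pending client, and `send()` into a socket whose buffer is already full because the peer never reads. Both raise `BlockingIOError` instead of waiting. The helper names below are hypothetical, and the 4096-byte chunk size is an arbitrary choice.

```python
import socket


def accept_without_client():
    """Return True if accept() gave up immediately instead of waiting."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as server:
        server.bind(("127.0.0.1", 0))
        server.listen()
        server.setblocking(0)  # same as setblocking(False)
        try:
            server.accept()
        except BlockingIOError:
            return True  # no client was waiting: nothing was accepted
        return False


def fill_send_buffer(chunk_size=4096):
    """Send until the kernel buffer is full; return how many bytes fit."""
    writer, reader = socket.socketpair()  # `reader` never reads
    writer.setblocking(False)
    total = 0
    try:
        while True:
            try:
                total += writer.send(b"x" * chunk_size)
            except BlockingIOError:
                return total  # buffer full: send() did nothing and returned at once
    finally:
        writer.close()
        reader.close()


if __name__ == "__main__":
    print(accept_without_client())
    print(fill_send_buffer())
```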