Dask simplifies scaling analytics and ML code written in Python, allowing you to handle larger and more complex data and problems. Dask aims to fill the space where your existing tools, like pandas DataFrames or your scikit-learn machine learning pipelines, start to become too slow (or do not succeed). While the term big data is perhaps less in vogue now than a few years ago, the data size of the problems has not gotten smaller, and the complexity of the computation and models has not gotten simpler. Dask allows you to primarily use the existing interfaces that you are used to (such as pandas and multiprocessing) while going beyond the scale of a single core or even a single machine.

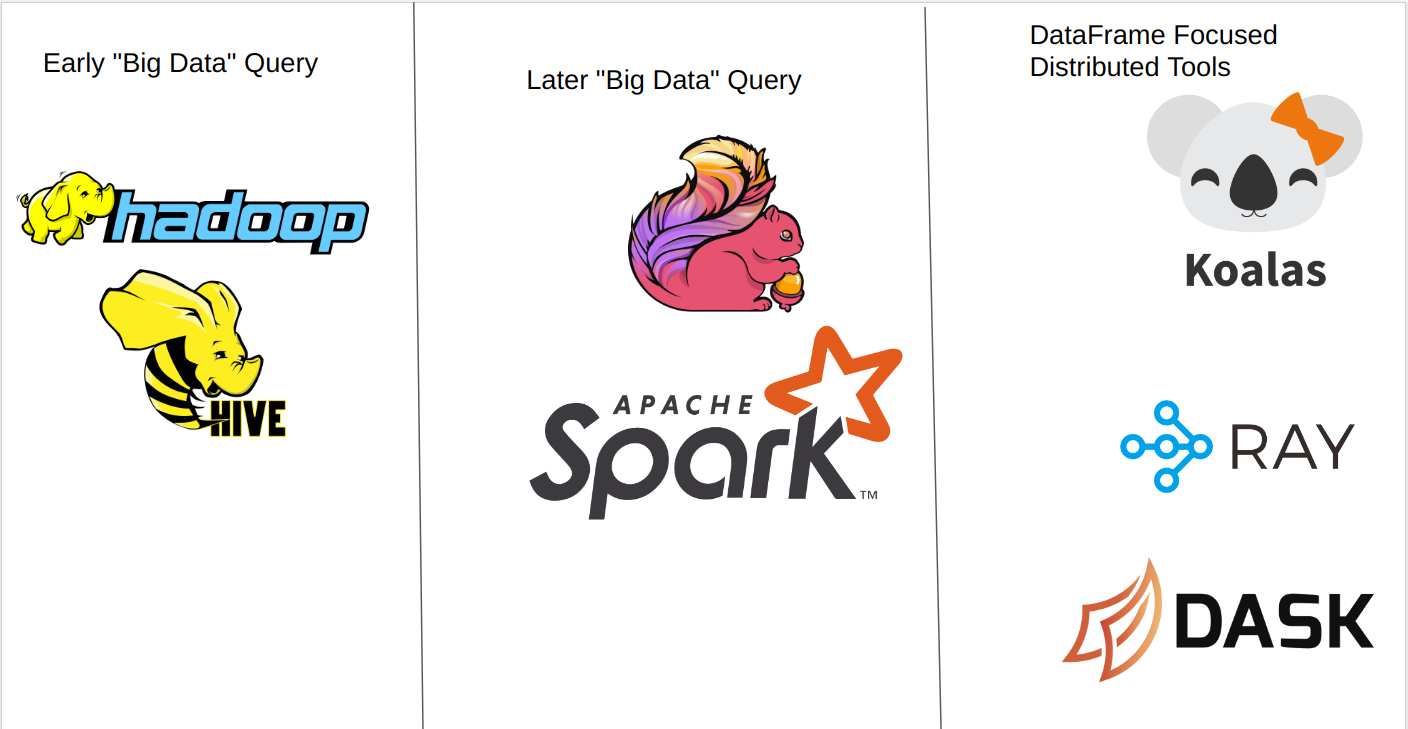

Where Does Dask Fit in the Ecosystem?

Dask provides scalability to multiple, traditionally distinct tools. It is most often used to scale Python data libraries like pandas and NumPy. Dask also extends existing tools for scaling, such as multiprocessing, allowing them to go beyond their current single-machine limits to both multi-core and multi-machine execution.

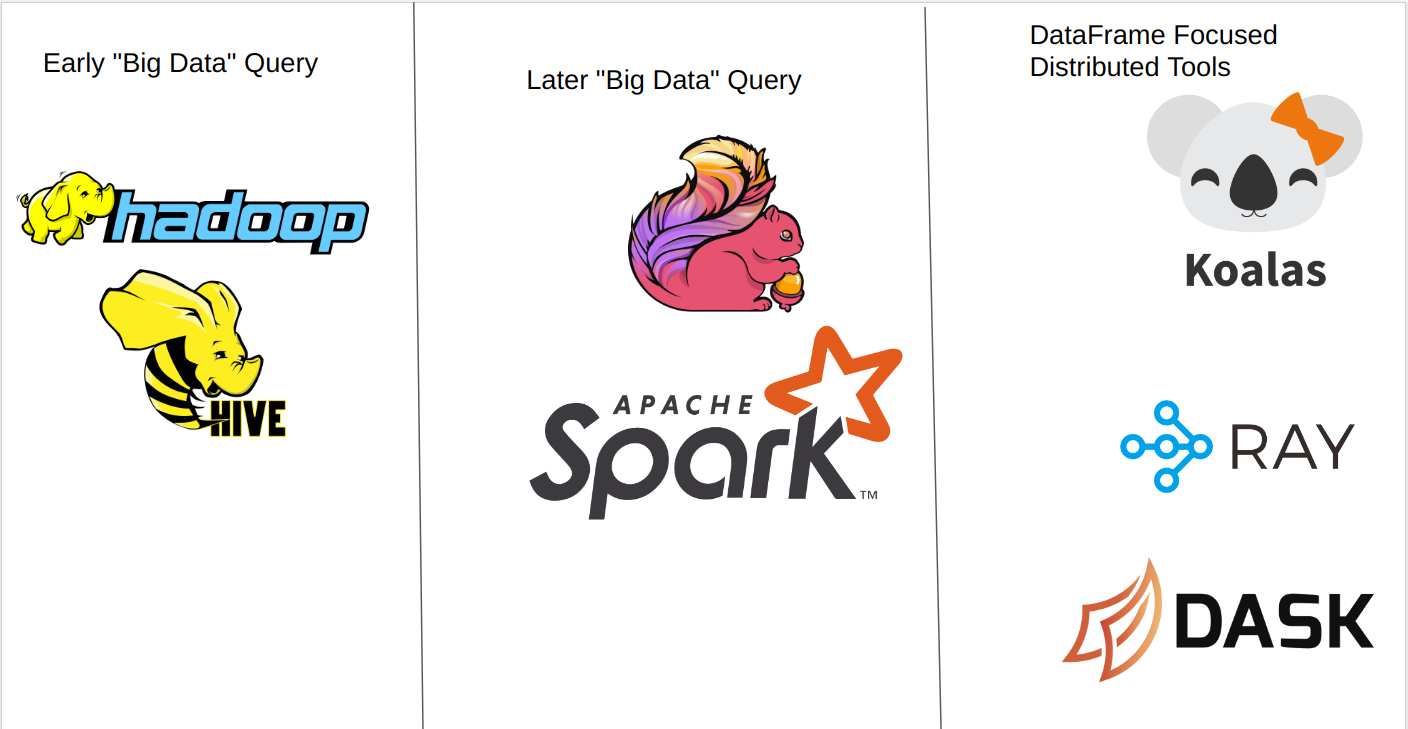

Figure 1-1. A quick look at the ecosystem evolution

From an abstraction point of view, Dask sits above the machines and cluster management tools, allowing you to focus on Python code instead of the intricacies of machine-to-machine communication.

Figure 1-2. An alternate look at the ecosystem

We say a problem is compute bound if the limiting factor is not the amount of data, but rather the work we are doing on the data. Memory bound problems are those where the computation is not the limiting factor; rather, the ability to store all of the data in memory is. Some problems are both compute bound and memory bound, as is often the case for large deep learning problems.
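As a rough illustration of the memory bound case, the back-of-the-envelope check below estimates whether a dense numeric table fits in memory. It is a minimal sketch, not part of Dask: the function names, the 8 bytes per value (a float64), and the example dataset and machine sizes are all assumptions made for illustration, and the estimate ignores index and object overhead.

```python
GIB = 1024 ** 3  # bytes in one gibibyte


def estimated_bytes(n_rows: int, n_cols: int, bytes_per_value: int = 8) -> int:
    """Rough in-memory size of a dense numeric table.

    Assumes every value takes `bytes_per_value` bytes (8 for float64) and
    ignores index, string, and container overhead.
    """
    return n_rows * n_cols * bytes_per_value


def is_memory_bound(n_rows: int, n_cols: int, available_bytes: int,
                    bytes_per_value: int = 8) -> bool:
    """True if the estimated table size exceeds the memory available."""
    return estimated_bytes(n_rows, n_cols, bytes_per_value) > available_bytes


# Hypothetical dataset: 500 million rows of 20 float64 columns,
# on a hypothetical machine with 16 GiB of RAM.
size = estimated_bytes(500_000_000, 20)
print(f"{size / GIB:.1f} GiB")                          # 74.5 GiB
print(is_memory_bound(500_000_000, 20, 16 * GIB))       # True
```

In practice, intermediate copies made during a computation often need several times the raw data size, so a dataset can be memory bound well before this simple estimate says so.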

Multi-core (think multi-threading) processing can help with compute bound problems (up to the limit of the number of cores in a machine). Generally, multi-core processing is unable to help with memory bound problems, as all CPUs have similar access to the memory, with the exception of non-uniform memory access (NUMA) systems.

Accelerated processing, such as specialized instruction sets or specialized hardware like tensor processing units or graphics processing units, is generally only useful for compute bound problems. Sometimes using accelerated processing can introduce memory bound problems, as the amount of memory available to the accelerated computation can be smaller than the main system memory.

Multi-machine processing is important for both of these classes of problems, namely compute bound and memory bound problems. Since the number of cores you can (affordably) get in a machine is limited, even if a problem is only compute bound, at certain scales you will need to consider multi-machine processing. More commonly, memory bound problems are a good fit for multi-machine scaling, as Dask can often split up the data between the different machines.
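The idea of splitting data between machines can be sketched in plain Python. The example below is only a conceptual illustration under stated assumptions, not how Dask itself is implemented: `split_into_partitions` and `partial_sum` are hypothetical helper names, and everything runs in one process, whereas on a real cluster each partition would live on a different worker.

```python
def split_into_partitions(data, n_partitions):
    """Split a list into n contiguous chunks of near-equal size."""
    size, extra = divmod(len(data), n_partitions)
    partitions = []
    start = 0
    for i in range(n_partitions):
        # The first `extra` partitions each take one additional element.
        end = start + size + (1 if i < extra else 0)
        partitions.append(data[start:end])
        start = end
    return partitions


def partial_sum(partition):
    """Work done independently on each partition (on each machine)."""
    return sum(partition)


data = list(range(1, 11))                         # 1..10
partitions = split_into_partitions(data, 3)       # [[1, 2, 3, 4], [5, 6, 7], [8, 9, 10]]
partials = [partial_sum(p) for p in partitions]   # [10, 18, 27]
total = sum(partials)                             # combine the small results
print(total)                                      # 55
```

Each partition only needs to fit in the memory of the machine that holds it, and only the small partial results have to be brought together at the end.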

Dask has both multi-core and multi-machine scaling, allowing you to scale your Python code as you best see fit.

Much of Dask's power comes from the tools and libraries built on top of it, which fit into their parts of the data processing ecosystem (such as BlazingSQL). Your background and interest will naturally shape how you first view Dask, so in the following subsections, I'll briefly discuss how you can use Dask for different types of problems, as well as how it compares to some existing tools.