LazyProgrammer - Unsupervised Deep Learning in Python: Master Data Science and Machine Learning with Modern Neural Networks written in Python and Theano

- Book: Unsupervised Deep Learning in Python: Master Data Science and Machine Learning with Modern Neural Networks written in Python and Theano

- Author: LazyProgrammer

- Publisher: LazyProgrammer

- Genre: Computer

- Year: 2016

- Rating: 5 / 5


Unsupervised Deep Learning in Python: Master Data Science and Machine Learning with Modern Neural Networks written in Python and Theano

By: The LazyProgrammer (http://lazyprogrammer.me)

We are going to do a lot of that in this book. PCA and t-SNE both help you visualize data from high-dimensional spaces on a flat plane. Autoencoders and Restricted Boltzmann Machines help you visualize what each hidden node in a neural network has learned.

One interesting feature researchers have discovered is that neural networks learn hierarchically. Take images of faces, for example. The first layer of a neural network will learn some basic strokes. The next layer will combine the strokes into combinations of strokes. The next layer might form the pieces of a face, like the eyes, nose, ears, and mouth. It truly is amazing! Perhaps this might provide insight into how our own brains take simple electrical signals and combine them to perform complex reactions.

We will also see in this book how you can trick a neural network after training it! You may think it has learned to recognize all the images in your dataset, but add some intelligently designed noise, and the neural network will think it's seeing something else, even when the picture looks exactly the same to you! So if the machines ever end up taking over the world, you'll at least have some tools to combat them.

Finally, in this book I will show you exactly how to train a deep neural network with a method called greedy layer-wise pretraining, so that you avoid the vanishing gradient problem.

What's deep learning and all this other crazy stuff you're talking about?

If you are completely new to deep learning, you might want to check out my earlier books and courses on the subject: Deep Learning in Python, Deep Learning in Python Prerequisites.

Much like how IBM's Deep Blue beat world champion chess player Garry Kasparov in 1997, Google's AlphaGo recently made headlines when it beat world champion Lee Sedol in March 2016.

What was amazing about this win was that experts in the field didn't think it would happen for another 10 years. The search space of Go is much larger than that of chess, meaning that existing techniques for playing games with artificial intelligence were infeasible. Deep learning was the technique that enabled AlphaGo to correctly predict the outcome of its moves and defeat the world champion. Deep learning progress has accelerated in recent years due to more processing power (see: Tensor Processing Unit or TPU), larger datasets, and new algorithms like the ones discussed in this book.

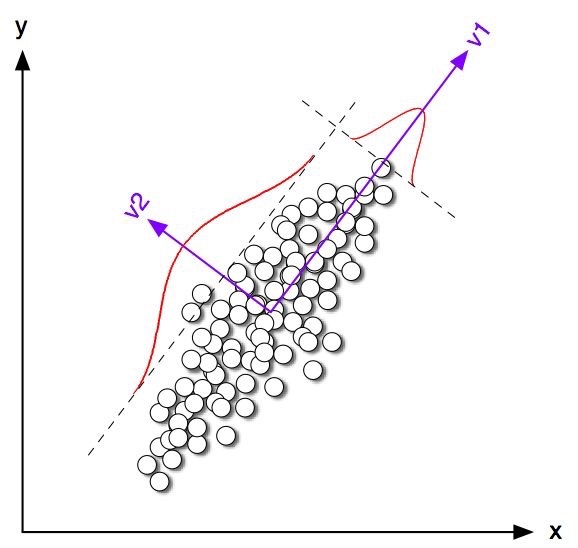

There are 2 components to their description: 1) describing what PCA does and how it is used, and 2) the math behind PCA.

So what does PCA do? Firstly, it is a linear transformation. If you think about what happens when you multiply a vector by a scalar, you'll see that it never changes direction. It only becomes a vector of different length. Of course, you could multiply it by -1 and it would face the opposite direction, but it can't be rotated arbitrarily.

$$2 \, (1, 2) = (2, 4)$$

If you multiply a vector by a matrix, it CAN change direction and be rotated arbitrarily. Ex.

$$\begin{bmatrix} 1 & 1 \\ 1 & 0 \end{bmatrix} \begin{bmatrix} 1 & 2 \end{bmatrix}^T = \begin{bmatrix} 3 & 1 \end{bmatrix}^T$$

So what does PCA do? Simply put, it applies a linear transformation to your data matrix:

$$Z = XQ$$

It takes an input data matrix X, which is NxD, multiplies it by a transformation matrix Q, which is DxD, and outputs the transformed data Z, which is also NxD.
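The two worked examples and the shapes in Z = XQ can be checked numerically. The following is a minimal NumPy sketch; the helper `direction` and the random `Q` are illustrative assumptions only (PCA would choose Q in a specific way that this snippet does not attempt).

```python
import numpy as np

def direction(v):
    """Return the unit vector pointing the same way as v (hypothetical helper)."""
    return v / np.linalg.norm(v)

v = np.array([1.0, 2.0])

# Scalar multiplication: the length changes, the direction does not.
scaled = 2 * v
same_direction = np.allclose(direction(scaled), direction(v))

# Matrix multiplication: the result can point in a different direction.
A = np.array([[1.0, 1.0],
              [1.0, 0.0]])
transformed = A @ v
changed_direction = not np.allclose(direction(transformed), direction(v))

# A linear transformation of a whole data matrix: Z = XQ.
N, D = 5, 3
rng = np.random.default_rng(0)
X = rng.normal(size=(N, D))   # N samples, D features
Q = rng.normal(size=(D, D))   # placeholder only, not a real PCA matrix
Z = X @ Q                     # transformed data, still N x D

print(scaled, transformed, Z.shape)
```

Any DxD matrix keeps the output at NxD; what distinguishes PCA is the particular Q it picks.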

If you want to transform an individual vector x to a corresponding individual vector z, that would be $z = Q^T x$. It's in a different order because when x is in a data matrix it's a 1xD row vector, but when we talk about individual vectors they are Dx1 column vectors: a row of $Z = XQ$ reads $z^T = x^T Q$, and transposing both sides gives $z = Q^T x$. It's just convention.

What makes PCA an interesting algorithm is how it chooses the Q matrix. Notice that because this is unsupervised learning, we have an X but no Y, or no targets.

On Rotation

Another view of what happens when you multiply by a matrix is not that you are rotating the vectors, but that you are instead rotating the coordinate system in which the vectors live.
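Both points above can be sanity-checked with a short NumPy sketch. It assumes an arbitrary random `Q` (not a real PCA matrix) and a hypothetical 2-D rotation by 30 degrees; the names `R`, `new_axes`, and `theta` are illustrative only.

```python
import numpy as np

rng = np.random.default_rng(1)
N, D = 4, 3
X = rng.normal(size=(N, D))
Q = rng.normal(size=(D, D))   # arbitrary stand-in for the transformation matrix

# Data-matrix form: every row of Z is x^T Q.
Z = X @ Q

# Single-vector form: treat one sample as a column vector and apply Q^T.
x = X[0]
z = Q.T @ x
rows_match = np.allclose(z, Z[0])

# Two views of a rotation in 2-D.
theta = np.pi / 6
R = np.array([[np.cos(theta), -np.sin(theta)],
              [np.sin(theta),  np.cos(theta)]])
v = np.array([1.0, 2.0])

# View 1: rotate the vector counter-clockwise by theta.
moved = R @ v

# View 2: keep v fixed and rotate the axes clockwise by theta instead.
# The columns of new_axes are the rotated basis vectors; the coordinates
# of v in that basis are its projections onto those columns.
new_axes = R.T
coords_in_new_axes = new_axes.T @ v

views_agree = np.allclose(moved, coords_in_new_axes)
print(rows_match, views_agree)
```

Rotating the vector one way gives the same numbers as rotating the axes the opposite way, which is why either description can be used.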

Which view we take will depend on which problem we are trying to solve.

Dimensionality Reduction

One use of PCA is dimensionality reduction. When you're looking at the MNIST dataset, which is 28x28 images, or vectors of size 784, that's a lot of dimensions, and it's definitely not something you can visualize. 28x28 is a very tiny image, and most images these days are much larger than that, so we either need to have the resources to handle data that can have millions of dimensions, or we could reduce the data dimensionality using techniques like PCA.

We of course can't just take arbitrary dimensions from X. We want to reduce the data size but at the same time capture as much information as possible. Ex.

info(1st col of Z) > info(2nd col of Z) > ...

How do we measure this information? In traditional PCA and many other traditional statistical methods we use variance. If something varies more, it carries more information. You can imagine the opposite situation, where a variable is completely deterministic, i.e. it has no variance. Then measuring this variable would not give us any new information, because we already knew what it was going to be. So when we get our transformed data Z, what we want is for the first column to have the most information, the second column to have the second most information, and so on.
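To make the variance-as-information idea concrete, here is a hedged NumPy sketch on made-up toy data. The excerpt has not yet said how Q is chosen, so the snippet assumes the standard PCA construction (columns of Q are eigenvectors of the covariance of X, sorted by decreasing eigenvalue); treat that as an assumption rather than the author's exact procedure.

```python
import numpy as np

rng = np.random.default_rng(2)
N = 1000

# Hypothetical toy data: a high-variance feature, a constant feature,
# and a low-variance feature.
X = np.column_stack([
    rng.normal(scale=3.0, size=N),
    np.full(N, 7.0),                 # deterministic: zero variance
    rng.normal(scale=0.5, size=N),
])
print("variance per input column:", X.var(axis=0))

# Assumed standard PCA: eigendecomposition of the covariance matrix.
Xc = X - X.mean(axis=0)                  # center each column
cov = np.cov(Xc, rowvar=False)           # D x D covariance matrix
eigvals, eigvecs = np.linalg.eigh(cov)   # eigh returns ascending eigenvalues
order = np.argsort(eigvals)[::-1]        # reorder to descending
Q = eigvecs[:, order]

Z = Xc @ Q
col_var = Z.var(axis=0)                  # variance of each column of Z
print("variance per column of Z:", col_var)

# Keeping the most informative 2 dimensions means keeping the first 2 columns.
Z2 = Z[:, :2]
```

The constant column contributes nothing, so the last column of Z ends up with (numerically) zero variance and can be dropped without losing information.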

Thus, when we take the 2 columns with the most information, that would mean taking the first 2 columns.

De-correlation

Another thing PCA does is de-correlation. If we find correlations, that means some of the data is redundant, because we can predict one column from another column.
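De-correlation can be illustrated with a small NumPy sketch on synthetic data. As before, this assumes the standard PCA choice of Q (eigenvectors of the covariance matrix), which the excerpt has not derived; the two-column dataset is invented for the example.

```python
import numpy as np

rng = np.random.default_rng(3)
N = 2000

# Hypothetical redundant data: column b is almost predictable from column a.
a = rng.normal(size=N)
b = 0.9 * a + 0.1 * rng.normal(size=N)
X = np.column_stack([a, b])

corr_before = np.corrcoef(X, rowvar=False)[0, 1]

# Assumed standard PCA: Q holds the covariance eigenvectors, largest first.
Xc = X - X.mean(axis=0)
eigvals, eigvecs = np.linalg.eigh(np.cov(Xc, rowvar=False))
Q = eigvecs[:, ::-1]          # eigh is ascending, so reverse the columns
Z = Xc @ Q

# After the transformation the covariance matrix is diagonal:
# the off-diagonal entry (the covariance between the two columns) vanishes.
cov_after = np.cov(Z, rowvar=False)
off_diagonal = cov_after[0, 1]

print("correlation before:", corr_before)
print("covariance between columns of Z:", off_diagonal)
```

The input columns are strongly correlated, while the columns of Z have essentially zero covariance, so no column of Z can be linearly predicted from another.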
