Deep Learning in Python

Master Data Science and Machine Learning with Modern Neural Networks written in Python, Theano, and TensorFlow

By: The LazyProgrammer ( http://lazyprogrammer.me )

Introduction

Deep learning is making waves. At the time of this writing (March 2016), Google's AlphaGo program has just beaten 9-dan professional Go player Lee Sedol at Go, a Chinese board game.

Experts in the field of Artificial Intelligence thought we were 10 years away from achieving a victory against a top professional Go player, but progress seems to have accelerated!

While deep learning is a complex subject, it is not any more difficult to learn than any other machine learning algorithm. I wrote this book to introduce you to the basics of neural networks. You will get along fine with undergraduate-level math and programming skill.

All the materials in this book can be downloaded and installed for free. We will use the Python programming language, along with the numerical computing library Numpy. I will also show you in the later chapters how to build a deep network using Theano and TensorFlow, which are libraries built specifically for deep learning and can accelerate computation by taking advantage of the GPU.

Unlike other machine learning algorithms, deep learning is particularly powerful because it automatically learns features. That means you don't need to spend your time trying to come up with and test kernels or interaction effects - something only statisticians love to do. Instead, we will let the neural network learn these things for us. Each layer of the neural network learns a different abstraction than the previous layers. For example, in image classification, the first layer might learn different strokes, the next layer might put the strokes together to learn shapes, the layer after that might put the shapes together to form facial features, and the final layer might hold a high-level representation of faces.

Do you want a gentle introduction to this dark art, with practical code examples that you can try right away and apply to your own data? Then this book is for you.

Chapter 1: What is a neural network?

A neural network is called such because at some point in history, computer scientists were trying to model the brain in computer code.

The eventual goal is to create an artificial general intelligence, which to me means a program that can learn anything you or I can learn. We are not there yet, so no need to get scared about the machines taking over humanity. Currently neural networks are very good at performing singular tasks, like classifying images and speech.

Unlike the brain, these artificial neural networks have a very strict predefined structure.

The brain is made up of neurons that talk to each other via electrical and chemical signals (hence the term, neural network). We do not differentiate between these 2 types of signals in artificial neural networks, so from now on we will just say a signal is being passed from one neuron to another.

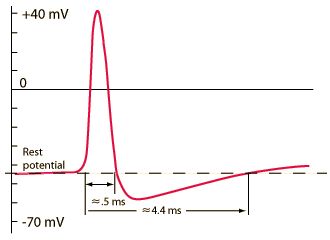

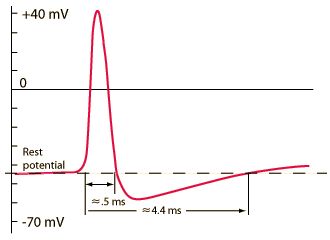

Signals are passed from one neuron to another via what is called an action potential. It is a spike in electricity along the cell membrane of a neuron. The interesting thing about action potentials is that either they happen, or they don't. There is no in between. This is called the all-or-nothing principle. Below is a plot of the action potential vs. time, with real, physical units.

These connections between neurons have strengths. You may have heard the phrase "neurons that fire together, wire together," which is attributed to the Canadian neuropsychologist Donald Hebb.

Neurons with strong connections will be turned on by each other. So if one neuron sends a signal (action potential) to another neuron, and their connection is strong, then the next neuron will also have an action potential, which could then be passed on to other neurons, etc.

If the connection between 2 neurons is weak, then one neuron sending a signal to another neuron might cause a small increase in electrical potential at the 2nd neuron, but not enough to cause another action potential.

Thus we can think of a neuron as being on or off (i.e., it has an action potential, or it doesn't).

What does this remind you of?

If you said digital computers, then you would be right!

Specifically, neurons are the perfect model for a yes / no, true / false, 0 / 1 type of problem. We call this binary classification and the machine learning analogy would be the logistic regression algorithm.

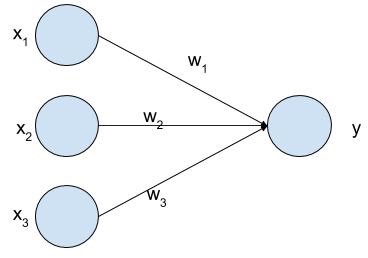

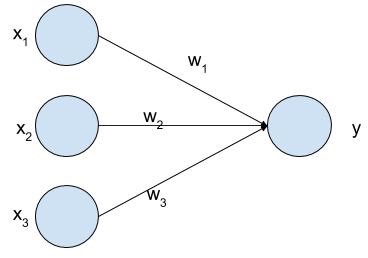

The above image is a pictorial representation of the logistic regression model. It takes as inputs x1, x2, and x3, which you can imagine as the outputs of other neurons or some other input signal (e.g. the visual receptors in your eyes or the mechanical receptors in your fingertips), and outputs another signal which is a combination of these inputs, weighted by the strength of the connections from those input neurons to this output neuron.

Because we're going to have to eventually deal with actual numbers and formulas, let's look at how we can calculate y from x.

y = sigmoid(w1*x1 + w2*x2 + w3*x3)

Note that in this book, we will ignore the bias term, since it can easily be included in the given formula by adding an extra dimension x0 which is always equal to 1.
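To see why the bias term can be folded into the weights, here is a minimal sketch. The input values, weights, and the bias b are made up purely for illustration, and the names x_aug and w_aug are hypothetical. It compares the weighted sum with an explicit bias against the same sum computed with an extra input fixed at 1.

```python
import numpy as np

# Hypothetical values, chosen only for illustration.
x = np.array([0.5, -1.2, 3.0])   # inputs x1, x2, x3
w = np.array([0.8, 0.1, -0.4])   # weights w1, w2, w3
b = 0.25                         # bias term

# Explicit bias: weighted sum of the inputs, plus b.
a_explicit = w.dot(x) + b

# Bias folded in: prepend a constant 1 to the inputs
# and prepend the bias to the weights.
x_aug = np.concatenate(([1.0], x))
w_aug = np.concatenate(([b], w))
a_folded = w_aug.dot(x_aug)

print(a_explicit, a_folded)
print(np.isclose(a_explicit, a_folded))
```

Both sums agree, so nothing is lost by writing the formula without a separate bias.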

So each input neuron gets multiplied by its corresponding weight (synaptic strength) and added to all the others. We then apply a sigmoid function on top of that to get the output y. The sigmoid is defined as:

sigmoid(x) = 1 / (1 + exp(-x))
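As a rough sketch of how this looks in Numpy, the snippet below computes the output of a single logistic unit. The function names sigmoid and logistic_unit, and the example inputs and weights, are assumptions made for illustration only.

```python
import numpy as np

def sigmoid(a):
    # 1 / (1 + exp(-a)), applied elementwise if a is an array.
    return 1.0 / (1.0 + np.exp(-a))

def logistic_unit(x, w):
    # Multiply each input by its weight, add them up, then apply the sigmoid.
    return sigmoid(np.dot(w, x))

# Hypothetical inputs and weights (synaptic strengths).
x = np.array([1.0, 2.0, 3.0])
w = np.array([0.5, -0.25, 0.1])

y = logistic_unit(x, w)
print(y)  # a single number between 0 and 1
```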

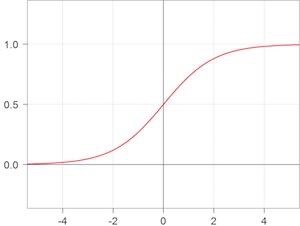

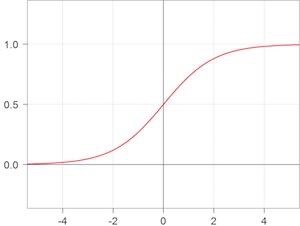

If you were to plot the sigmoid, you would get this:

You can see that the output of a sigmoid is always between 0 and 1. It has 2 asymptotes: the output approaches 1 as the input goes to + infinity, and approaches 0 as the input goes to - infinity. For any finite input, the output never actually reaches 0 or 1.

The output is 0.5 when the input is 0.
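You can check these properties numerically. The following is a small sketch, with arbitrarily chosen input values, that evaluates the sigmoid at a few points.

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

# Arbitrary sample inputs, from very negative to very positive.
inputs = np.array([-20.0, -5.0, 0.0, 5.0, 20.0])
outputs = sigmoid(inputs)

for a, s in zip(inputs, outputs):
    print(a, s)
```

The printed values increase with the input, stay strictly between 0 and 1, and the value at 0 is 0.5.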

You can interpret the output as a probability. In particular, we interpret it as the probability:

P(Y=1 | X)

This can be read as "the probability that Y is equal to 1 given X." We usually use this and y interchangeably, since both are the output of the neuron.

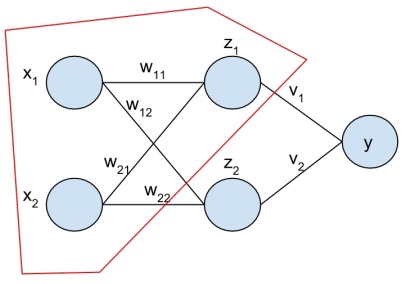

To get a neural network, we simply combine neurons together. The way we do this with artificial neural networks is very specific. We connect them in a feedforward fashion.

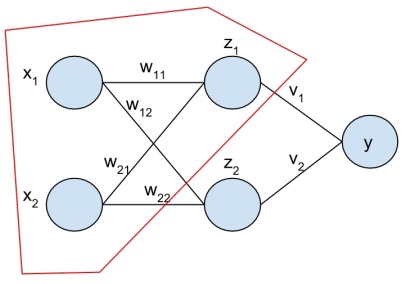

I have highlighted in red one logistic unit. Its inputs are (x1, x2) and its output is z. See if you can find the other 2 logistic units in this picture.

We call the layer of z's the hidden layer. Neural networks have one or more hidden layers. A neural network with more hidden layers would be called "deeper".
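As a hedged sketch of what "a layer of logistic units" means in code, the snippet below computes only the hidden layer values from the inputs, leaving the final output for the exercise that follows. The layer sizes (2 inputs, 2 hidden units), the weight matrix W, and all the numbers are assumptions for illustration and are not taken from the picture.

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

# Hypothetical inputs x1, x2.
x = np.array([1.0, -1.0])

# Hypothetical weights: W[i, j] connects input i to hidden unit j.
W = np.array([[0.5, -0.3],
              [0.2,  0.7]])

# Each column of W defines one logistic unit, so one matrix product
# followed by the sigmoid computes every hidden unit at once.
z = sigmoid(x.dot(W))
print(z)
```

Each entry of z is the output of one logistic unit, so each lies between 0 and 1.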

Deep learning is somewhat of a buzzword. I have googled around about this topic, and it seems that the general consensus is that any neural network with one or more hidden layers is considered deep.

Exercise

Using the logistic unit as a building block, how would you calculate the output of a neural network Y? If you can't get it now, don't worry, we'll cover it in Chapter 3.

Chapter 2: Biological analogies

I described in the previous chapter how an artificial neural network is analogous to a brain physically, but what about with respect to learning and other high level attributes?
