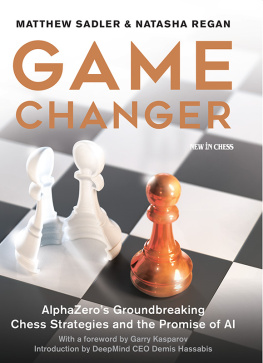

Game Changer

Matthew Sadler and Natasha Regan

AlphaZero's Groundbreaking Chess Strategies and the Promise of AI

New In Chess 2019

© 2019 New In Chess

Published by New In Chess, Alkmaar, The Netherlands

www.newinchess.com

All rights reserved. No part of this book may be reproduced, stored in a retrieval system or transmitted in any form or by any means, electronic, mechanical, photocopying, recording or otherwise, without the prior written permission from the publisher.

Cover design: Buro Blikgoed

Editing and typesetting, supervision: Peter Boel

Proofreading: Maaike Keetman

Production: Joop de Groot, Anton Schermer

Have you found any errors in this book?

Please send your remarks to and implement them in a possible next edition.

ISBN: 978-90-5691-818-7

Explanation of symbols

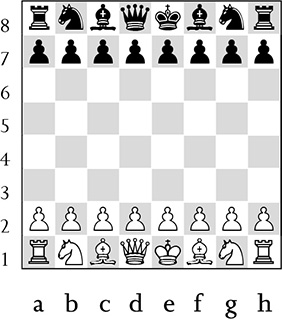

The chessboard with its coordinates:

| Symbol | Meaning |
| --- | --- |
| □ | White to move |
| ■ | Black to move |
| ♔ | King |
| ♕ | Queen |
| ♖ | Rook |
| ♗ | Bishop |
| ♘ | Knight |
| ⩲ | White stands slightly better |
| ⩱ | Black stands slightly better |
| ± | White stands better |
| ∓ | Black stands better |
| +- | White has a decisive advantage |
| -+ | Black has a decisive advantage |
| = | balanced position |
| ! | good move |
| !! | excellent move |
| ? | bad move |
| ?? | blunder |
| !? | interesting move |
| ?! | dubious move |

FOREWORD BY GARRY KASPAROV

AlphaZero and the Knowledge Revolution

The ancient board game of chess has played a significant role in the history of artificial intelligence, if mostly as a chimera. Founding fathers of computation like Alan Turing and Claude Shannon understood that a simple algorithm could play a competent game, famously demonstrated by Turing and David Champernowne's "paper machine" program in 1948.

Because early computers were so slow, they also imagined that to ever challenge human chess masters a machine would have to approach chess like a human, using selective, knowledge-based algorithms. The brute-force examination of every possible move, well into the millions of possibilities after just a few moves, was obviously too slow.

Of course, these luminaries had no way of knowing that computer processing speed would soon begin to increase geometrically with the advent of integrated circuits. Gordon Moore would not postulate his eponymous Law until 1965, twelve years after Turing's tragic and tragically premature death.

Much as there can be great beauty within the tight constraints of a sonnet or haiku, the limitations of early computers forced programmers to be creative and experimental. They were addressing AI questions that were far larger than the humble game they were attempting to conquer. Can a program learn from its mistakes instead of repeating the same errors every time? Can a form of intuition be instilled into a machine? Is output what matters when determining intelligence, or method? If the human brain is just a very fast computer, what happens when computers become faster than the brain?

As an aside, chess's role as a symbol in spreading the computational theory of mind (CTM) is an interesting subject. By 1997, the computer-savvy general public wasn't amazed that Deep Blue could play world champion-level chess. They were amazed that a human could possibly compete with a machine in a pursuit believed by most to be an exercise in calculation. I was credited with super-human, computer-like abilities when, in fact, human mastery in chess is believed to be based more on pattern recognition and spatial visualization than on calculation or other computer strengths.

Unfortunately, it turned out that the answers to all these profound questions weren't required to create a machine that would defeat the world chess champion. As early as the late 1970s, the top programs were all built on the same model: Shannon's "Type A" machine that used brute-force search, the minimax algorithm, and all the speed the day's CPUs could provide.

The programming community was happy with the rapid progress in strength, but disillusioned by the straightforwardness with which it was obtained. It was as if the goal to build a robot mountain-climber to scale Mount Everest was achieved by a giant tank plowing a straight line to the top. Once Ken Thompson's hardware-based machine Belle reached master level in 1983, the writing was on the wall, even if many of us would stay in denial for another decade.

This isn't to denigrate the achievement of the Deep Blue team or the generations of brilliant chess programmers and inventors that came before them, only to put it into perspective with the benefit of hindsight. It's an important lesson that our original visions are often far off the mark when faced with the pragmatic results machines produce. Our intelligent machines don't have to imitate us to surpass our performance. They don't have to be perfect to be useful, only better than a human at a particular task, whether it's playing chess or interpreting cancer scans.

With the human versus machine era closing, in 1998 I created Advanced Chess to investigate the potential of human plus machine. Once again, chess proved to be a handy laboratory for experiments that had much wider applications. The revelation was that a superior coordination process between them was more important than the strength of the human or the machine. This formula obviously has an expiration date in a small, closed system like chess, where machines grind ever onward toward perfection, but an emphasis on improving interfaces and collaborative processes has become conventional wisdom in open, real-world areas like security analysis, investing, and business software.

By 2017, the ratings of the top chess programs were to Magnus Carlsen what Carlsen is to a strong club player. They use giant opening books, terabytes of endgame tablebases, and multi-core CPUs that make your iPhone faster than Deep Blue. It was hard to imagine that my beloved chess had any more to offer in its old role as a cognition laboratory, and AI game programmers had moved on to video games and Go, a more mathematically difficult game for machines to crack.

In fact, it was a Go program that led to chess's return to the AI spotlight. DeepMind's AlphaGo moved beyond pure brute force to compete with, and beat, the world's top Go players. Then came the bigger surprise: a generic version called AlphaGo Zero easily surpassed its great predecessor by eschewing embedded human knowledge and teaching itself to play better by playing against itself.

After seeing this important result, I of course had to ask DeepMind's Demis Hassabis when he was going to turn his machine's sights on our favorite game. Humans and machines are relatively very bad at Go due to its complexity. Was there much room for improvement in chess? After decades of stuffing as much human chess knowledge as possible into code, would a self-taught algorithm be able to compete with the top traditional programs?
