This book made available by the Internet Archive.

To our Mother and the memory of our Father

Preface

Wishful thinking has probably always complicated our relations with technology, but it is safe to assert that before the computer, and before the bomb, the complications weren't quite as dangerous as they are today. Nor was the wishful thinking as fantastic. Consider a typical military wish list:

As a result of a series of advances in artificial intelligence, computer science, and microelectronics, we stand at the threshold of a new generation of computing technology having unprecedented capabilities.... For example, instead of fielding simple guided missiles or remotely piloted vehicles, we might launch completely autonomous land, sea, and air vehicles capable of complex, far-ranging reconnaissance and attack missions.

Military managers, like their civilian brethren, have long dreamed of autonomous machines, freed of their reliance on fallible, contentious humanity, able finally to go it alone. Now the speed of computerized warfare has added new urgency to the pursuit of machine intelligence. After all, nuclear attack times are down to seven or eight minutes, and computers, more alert, faster, more constant than we human beings, seem the obvious solution. Thus, faith in the perfectibility of computer intelligence has become a means of avoiding the truth of our circumstances. With it comes a temptation to avoid confronting the perils of our existence by handing over our fate to electronic machines.

That dream may become a reality in the Strategic Defense Initiative ("Star Wars"). Describing the system, Time writes in its March 11, 1985, issue:

Humans would make the key strategic decisions in advance, determining under what conditions the missile defense would start firing, and devise a computer system that could translate those decisions into a program. In the end the defensive response would be out of human hands: it would be activated by computer before U.S. commanders even knew that a battle had begun.

Today, after twenty years of support for artificial intelligence (AI) research, the military is moving to call in its chips. But does the military hold the cards to realize its hopes? It does not. The human mind has the upper hand over any machine. The "series of advances" upon which hopes for "autonomous computer systems" depend have been greatly exaggerated, with serious negative consequences.

At present, large-scale efforts are under way to develop artificial intelligence systems capable of conversing fluidly in natural language, of providing expert medical advice, of exhibiting common sense, of functioning autonomously in critical military situations. Debates about the political, moral, ethical, and social consequences of such systems are each year more prominent than the last, yet they still take place within a haze of misinformation about the genuine potential of machine intelligence. Marvin Minsky, for example, recently said:

Today our robots are like boys. They do only the simple things they're programmed to. But clearly they're about to cross the edgeless line past which they'll do the things we are programmed to.... What will happen when we face new options in our work and home, where more intelligent machines can better do the things we like to do? What kind of minds and personalities should we dispense to them? What kind of rights and privileges should we withhold from them? Are we ready to face such questions?

Even Joseph Weizenbaum, whose cri de coeur is still the best-known attack on the computerization of human affairs, assumes the eventual success of the artificial intelligence enterprise: "It is technically feasible to build a computer system that will interview patients applying for help at a psychiatric outpatient clinic and will produce their psychological profiles complete with charts, graphs, and natural-language commentary."

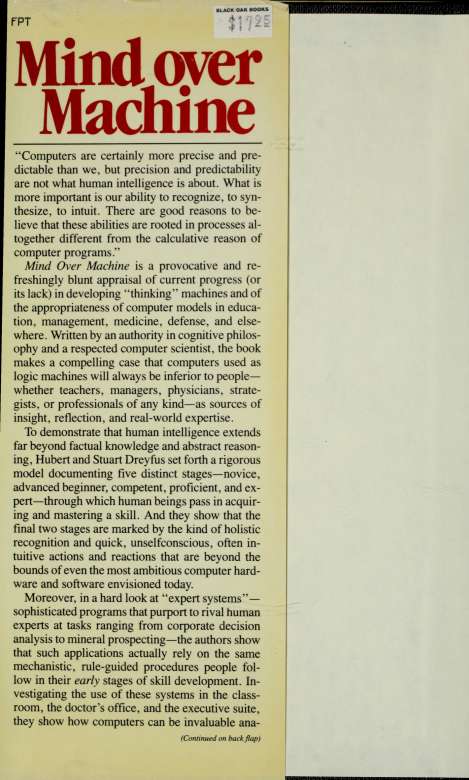

Our goal here is not to take up the rationality or irrationality of the arms race or the social problems posed by automation. Those are concerns for society as a whole, and we claim no special expertise in evaluating them. Our intention is more modest but more basic. Despite what you may have read in magazines and newspapers, regardless of what your congressman was told when he voted on the Strategic Computing Plan, twenty-five years of artificial intelligence research has lived up to very few of its promises and has failed to yield any evidence that it ever will. The time has come to ask what has gone wrong and what we can reasonably expect from computer intelligence. How closely can computers processing facts and making inferences approach human intelligence? How can we profitably use the intelligence that can be given to them? What are the risks of enthusiastic and ambitious attempts to redefine our intelligence in their terms, of delegating to computers key decisionmaking powers, of adapting ourselves to the educational and business practices attuned to mechanized reason?

In short, we want to put the debate about the computer in perspective by clearing the air of false optimism and unrealistic expectations. The debate about what computers should do is properly about social values and institutional forms. But before we can profitably discuss what computers should do we have to be clear about what they can do. Our bottom line is that computers as reasoning machines can't match human intuition and expertise, so in determining what computers should do we have to contrast their capacities with the more generous gifts possessed by the human mind.

Too often, computer enthusiasm leads to a simplistic view of human skill and expertise. To maintain the momentum of automation, to carry its thrust from the production line and office to the board room and classroom, computer boosters lean on theories that emphasize those aspects of expertise that most lend themselves to computerization. To the "knowledge engineers," skill and expertise are equivalent to the "rules of thumb" that human experts articulate when asked how they solve problems.

We contend that those rules, though they lead to a view of expertise that supports optimism for the future of machine intelligence, do fundamental violence to the real nature of human intelligence and expertise. To safeguard both, we shall propose a nonmechanistic model of human skill, one that we claim:

Explains the failure of existing AI systems to capture expert human judgment

Predicts that failure will continue until intelligence ceases to be understood as abstract reason and computers cease to be used as reasoning machines

Warns against attempts at too zealous computerization in fields such as education and management, which, while not AI proper, fall prey to similar misconceptions