Geometric Structures of Statistical Physics, Information Geometry, and Learning

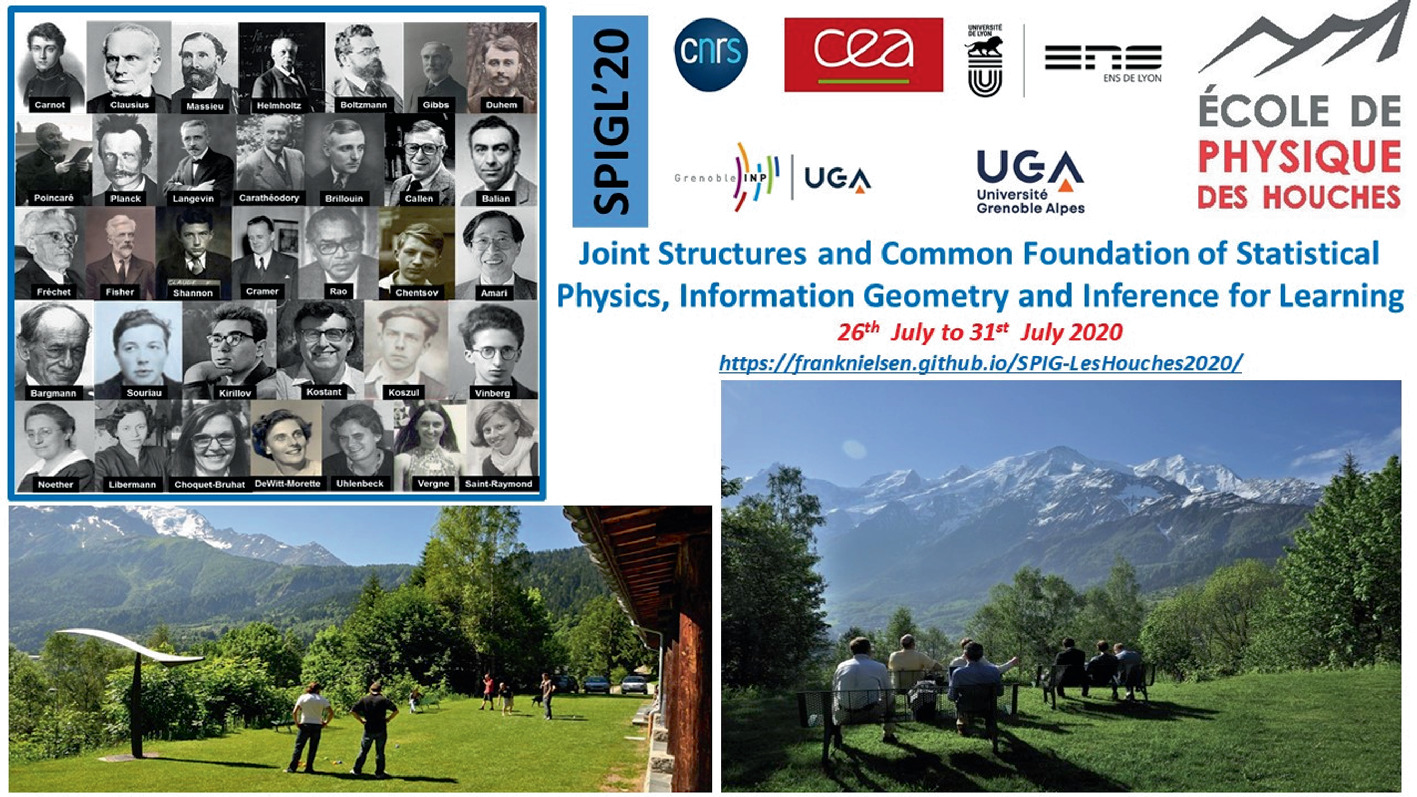

Ecole de Physique des Houches SPIGL'20 Summer Week

SPRINGER Proceedings in Mathematics & Statistics, 2021

Subject

This book is the proceedings of the Les Houches Summer Week SPIGL'20 (Joint Structures and Common Foundation of Statistical Physics, Information Geometry and Inference for Learning), held July 27-31, 2020, at L'Ecole de Physique des Houches:

Website: https://franknielsen.github.io/SPIG-LesHouches2020/

Videos: https://www.youtube.com/playlist?list=PLo9ufcrEqwWExTBPgQPJwAJhoUChMbROr

The SPIGL'20 conference covered the following topics:

Geometric Structures of Statistical Physics and Information

Statistical mechanics and geometric mechanics

Thermodynamics, symplectic and contact geometries

Lie groups thermodynamics

Relativistic and continuous media thermodynamics

Symplectic integrators

Physical Structures of Inference and Learning

Stochastic gradient of Langevin's dynamics

Information geometry, Fisher metric, and natural gradient

Monte Carlo Hamiltonian methods

Variational inference and Hamiltonian controls

Boltzmann machine

Organizers

Frédéric Barbaresco

THALES, KTD PCC, Palaiseau, France

Silvère Bonnabel

Mines ParisTech, CAOR, Paris, France

Géry de Saxcé

Université de Lille, LaMcube, Lille, France

François Gay-Balmaz

Ecole Normale Supérieure Ulm, CNRS & LMD, Paris, France

Bernhard Maschke

Université Claude Bernard, LAGEPP, Lyon, France

Eric Moulines

Ecole Polytechnique, CMAP, Palaiseau, France

Frank Nielsen

Sony Computer Science Laboratories, Tokyo, Japan

Scientific Rationale

In the middle of the last century, Léon Brillouin, in The Science and The Theory of Information, and André Blanc-Lapierre, in Statistical Mechanics, were precursors who forged the first links between information theory and statistical physics.

In the context of artificial intelligence, machine learning algorithms increasingly use methodological tools from physics and statistical mechanics. The laws and principles that underpin this physics can shed new light on the conceptual basis of artificial intelligence. Thus, the principles of maximum entropy and minimum free energy, Gibbs-Duhem's thermodynamic potentials, and the generalization of François Massieu's notion of characteristic functions enrich the variational formalism of machine learning. Conversely, the pitfalls that artificial intelligence encounters in extending its application domains question the foundations of statistical physics, for example the construction of stochastic gradients in high dimension and the generalization of Gibbs densities to richer representation spaces, such as data on homogeneous differential or symplectic manifolds, Lie groups, graphs, and tensors.

Sophisticated statistical models were introduced very early to deal with unsupervised learning tasks, drawing on the Ising-Potts models of statistical physics (an Ising-Potts model defines the interaction of spins arranged on a graph) and, more generally, on Markov fields. Ising models are associated with mean-field theory, which studies systems with complex interactions through simplified models in which the action of the complete network on one actor is summarized by a single mean interaction.
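As an illustration of the mean-field idea, here is a minimal sketch, not taken from the proceedings. It assumes an Ising model in which each spin has the same number of neighbors. The effect of the whole network on one spin is replaced by a single average magnetization m, which must satisfy the textbook self-consistency condition m = tanh(beta (J z m + h)). The function name and the parameters `coupling`, `neighbors`, and `field` are hypothetical, chosen for this example only.

```python
import math


def mean_field_magnetization(beta, coupling=1.0, neighbors=4, field=0.0,
                             m0=0.5, tol=1e-12, max_iter=100_000):
    """Solve m = tanh(beta * (coupling * neighbors * m + field)).

    Illustrative assumptions: every spin has `neighbors` neighbors, and the
    same average magnetization m acts on each spin. The equation is solved
    by plain fixed-point iteration starting from m0.
    """
    m = m0
    for _ in range(max_iter):
        m_new = math.tanh(beta * (coupling * neighbors * m + field))
        if abs(m_new - m) < tol:
            return m_new
        m = m_new
    return m  # not converged within max_iter; return the last iterate


# High temperature (beta * coupling * neighbors < 1): magnetization vanishes.
m_hot = mean_field_magnetization(beta=0.1)
# Low temperature (beta * coupling * neighbors > 1): spontaneous magnetization.
m_cold = mean_field_magnetization(beta=1.0)
```

In this sketch, the transition between the two regimes occurs where beta * coupling * neighbors = 1, the usual mean-field estimate of the critical temperature.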

The porosity between the two disciplines dates from the birth of artificial intelligence, with the use of Boltzmann machines and the problem of finding robust methods for calculating the partition function. More recently, gradient algorithms for neural network learning use large-scale robust extensions of the natural gradient of Fisher-based information geometry (to ensure reparameterization invariance), stochastic gradients based on the Langevin equation (to ensure regularization), or their coupling, called natural Langevin dynamics.
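To make the Langevin idea concrete, here is a minimal sketch of a discretized Langevin update, under stated assumptions: it uses the exact gradient of a one-dimensional Gaussian log-density rather than a minibatch stochastic gradient, and it omits the Fisher-metric preconditioning that natural Langevin dynamics would add. The function names, the step size, and the target distribution are illustrative choices, not taken from the proceedings.

```python
import math
import random


def langevin_samples(grad_log_density, theta0, step, n_steps, seed=0):
    """Discretized Langevin iteration:

        theta <- theta + (step / 2) * grad_log_density(theta) + sqrt(step) * xi

    where xi is standard Gaussian noise. The injected noise is what turns
    gradient ascent on the log-density into an approximate sampler.
    """
    rng = random.Random(seed)
    theta = theta0
    samples = []
    for _ in range(n_steps):
        noise = rng.gauss(0.0, 1.0)
        theta = (theta
                 + 0.5 * step * grad_log_density(theta)
                 + math.sqrt(step) * noise)
        samples.append(theta)
    return samples


def gaussian_grad_log_density(theta, mean=2.0, std=1.0):
    """Gradient of log N(theta; mean, std^2); an assumed toy target."""
    return -(theta - mean) / std ** 2


samples = langevin_samples(gaussian_grad_log_density, theta0=0.0,
                           step=0.1, n_steps=50_000)
kept = samples[1_000:]  # discard an initial burn-in segment
sample_mean = sum(kept) / len(kept)
```

With a small step size, the retained samples are approximately distributed according to the target, so `sample_mean` should be close to the assumed target mean of 2.0, up to discretization bias and Monte Carlo error.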