Charu C. Aggarwal - Neural Networks and Deep Learning

Here you can read online Charu C. Aggarwal - Neural Networks and Deep Learning full text of the book (entire story) in english for free. Download pdf and epub, get meaning, cover and reviews about this ebook. year: 0, publisher: Springer International Publishing, genre: Computer. Description of the work, (preface) as well as reviews are available. Best literature library LitArk.com created for fans of good reading and offers a wide selection of genres:

Romance novel

Science fiction

Adventure

Detective

Science

History

Home and family

Prose

Art

Politics

Computer

Non-fiction

Religion

Business

Children

Humor

Choose a favorite category and find really read worthwhile books. Enjoy immersion in the world of imagination, feel the emotions of the characters or learn something new for yourself, make an fascinating discovery.

- Book:Neural Networks and Deep Learning

- Author:

- Publisher:Springer International Publishing

- Genre:

- Year:0

- Rating:5 / 5

- Favourites:Add to favourites

- Your mark:

- 100

- 1

- 2

- 3

- 4

- 5

Neural Networks and Deep Learning: summary, description and annotation

We offer to read an annotation, description, summary or preface (depends on what the author of the book "Neural Networks and Deep Learning" wrote himself). If you haven't found the necessary information about the book — write in the comments, we will try to find it.

Neural Networks and Deep Learning — read online for free the complete book (whole text) full work

Below is the text of the book, divided by pages. System saving the place of the last page read, allows you to conveniently read the book "Neural Networks and Deep Learning" online for free, without having to search again every time where you left off. Put a bookmark, and you can go to the page where you finished reading at any time.

Font size:

Interval:

Bookmark:

This Springer imprint is published by the registered company Springer Nature Switzerland AG

The registered company address is: Gewerbestrasse 11, 6330 Cham, Switzerland

To my wife Lata, my daughter Sayani, and my late parents Dr. Prem Sarup and Mrs. Pushplata Aggarwal.

Any A.I. smart enough to pass a Turing test is smart enough to know to fail it.Ian McDonald

Neural networks were developed to simulate the human nervous system for machine learning tasks by treating the computational units in a learning model in a manner similar to human neurons. The grand vision of neural networks is to create artificial intelligence by building machines whose architecture simulates the computations in the human nervous system. This is obviously not a simple task because the computational power of the fastest computer today is a minuscule fraction of the computational power of a human brain. Neural networks were developed soon after the advent of computers in the fifties and sixties. Rosenblatts perceptron algorithm was seen as a fundamental cornerstone of neural networks, which caused an initial excitement about the prospects of artificial intelligence. However, after the initial euphoria, there was a period of disappointment in which the data hungry and computationally intensive nature of neural networks was seen as an impediment to their usability. Eventually, at the turn of the century, greater data availability and increasing computational power lead to increased successes of neural networks, and this area was reborn under the new label of deep learning. Although we are still far from the day that artificial intelligence (AI) is close to human performance, there are specific domains like image recognition, self-driving cars, and game playing, where AI has matched or exceeded human performance. It is also hard to predict what AI might be able to do in the future. For example, few computer vision experts would have thought two decades ago that any automated system could ever perform an intuitive task like categorizing an image more accurately than a human.

Neural networks are theoretically capable of learning any mathematical function with sufficient training data, and some variants like recurrent neural networks are known to be Turing complete . Turing completeness refers to the fact that a neural network can simulate any learning algorithm, given sufficient training data . The sticking point is that the amount of data required to learn even simple tasks is often extraordinarily large, which causes a corresponding increase in training time (if we assume that enough training data is available in the first place). For example, the training time for image recognition, which is a simple task for a human, can be on the order of weeks even on high-performance systems. Furthermore, there are practical issues associated with the stability of neural network training, which are being resolved even today. Nevertheless, given that the speed of computers is expected to increase rapidly over time, and fundamentally more powerful paradigms like quantum computing are on the horizon, the computational issue might not eventually turn out to be quite as critical as imagined.

Although the biological analogy of neural networks is an exciting one and evokes comparisons with science fiction, the mathematical understanding of neural networks is a more mundane one. The neural network abstraction can be viewed as a modular approach of enabling learning algorithms that are based on continuous optimization on a computational graph of dependencies between the input and output. To be fair, this is not very different from traditional work in control theory; indeed, some of the methods used for optimization in control theory are strikingly similar to (and historically preceded) the most fundamental algorithms in neural networks. However, the large amounts of data available in recent years together with increased computational power have enabled experimentation with deeper architectures of these computational graphs than was previously possible. The resulting success has changed the broader perception of the potential of deep learning.

The basics of neural networks: Chapter This will give the analyst a feel of how neural networks push the envelope of traditional machine learning algorithms.

Fundamentals of neural networks: Although Chapters present radial-basis function (RBF) networks and restricted Boltzmann machines.

Advanced topics in neural networks: A lot of the recent success of deep learning is a result of the specialized architectures for various domains, such as recurrent neural networks and convolutional neural networks. Chapters

We have taken care to include some of the forgotten architectures like RBF networks and Kohonen self-organizing maps because of their potential in many applications. The book is written for graduate students, researchers, and practitioners. Numerous exercises are available along with a solution manual to aid in classroom teaching. Where possible, an application-centric view is highlighted in order to give the reader a feel for the technology.

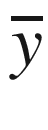

Throughout this book, a vector or a multidimensional data point is annotated with a bar, such as  or

or

Font size:

Interval:

Bookmark:

Similar books «Neural Networks and Deep Learning»

Look at similar books to Neural Networks and Deep Learning. We have selected literature similar in name and meaning in the hope of providing readers with more options to find new, interesting, not yet read works.

Discussion, reviews of the book Neural Networks and Deep Learning and just readers' own opinions. Leave your comments, write what you think about the work, its meaning or the main characters. Specify what exactly you liked and what you didn't like, and why you think so.