Chapter 1. Case Your Own Joint: A Paradigm Shift from Traditional Software Testing

Every complex software program will have costly security vulnerabilities unless considerable effort is made to eliminate them. These security vulnerabilities allow viruses and worms to thrive and also allow criminals to grab personal financial data from already-jittery users who reluctantly put their personal data on the Internet.

Ideally, security should be addressed from the beginning, during system inception, with defined security policies, use cases, and so on. Security should be built into a program during the design stage and coded by developers who are trained in secure programming. For more details, see .

If you don't adhere to this philosophy of implementing a secure software development lifecycle and mitigating security risks, security flaws will keep creeping in as mistakes are made and complex software interactions go unnoticed. Methods for ensuring software security quality, including testing for security, are needed.

The traditional software development and testing process has been far from perfect, as reflected in various failure rates. For example, according to Standish Group statistics, out of a $300 billion base, American companies spend $84 billion annually on failed software projects, and another $138 billion on projects that significantly exceed time and budget estimates or that have reduced functionality.

Designers, developers, and testers make mistakes. Limited budgets and schedule pressures are often cited as the source of this problem. Yet business analysts and users are often unclear about security requirements to begin with. These problems compound to allow defects to slip into production, and the end result is insecure systems.

Awareness of software security needs is growing, and so is the demand for secure systems. A company can lose revenue or eventually go out of business if its products are considered insecure.

In June 2005, more than 40 million debit and credit cards were exposed to criminals when CardSystems was the victim of a cyber break-in. Forensic analysis after the theft revealed that CardSystems was not handling sensitive data properly. Its software was making extra copies of the credit card data and storing it unencrypted, making it easy pickings for cyber criminals. This prompted big customers to reevaluate their relationship with CardSystems, and four months later the company was sold.

This book discusses how this type of insecure handling of sensitive data can be prevented. Specifically,

Additionally, the CardSystems security disaster might have been prevented if security testing had been part of the process and if CardSystems had learned how to detect this type of situation. Then, when the problem was detected, CardSystems could have taken the mitigation steps just mentioned. Storing secrets is against Visa's Payment Card Industry (PCI) regulations, a credit card security standard and security policy requirement that should have been implemented, as discussed in .

Traditional development and testing environments that focus on requirements development and testing (discussed in more detail next) find only some of the deviations from requirements. This is often because security requirements are rarely documented or are faulty. Traditionally, testing hasn't focused on finding the majority of security vulnerabilities: the "shouldn'ts" and "don't allows" that are part of all software. We call these attack patterns (see for more details).

defines a vulnerability as "A flaw or weakness in a system's design, implementation, or operation and management that could be exploited to violate the system's security policy."

Black hats and vulnerability researchers have developed software security testing techniques that the software development community doesn't know much about. Programmers and testers can use these techniques to find security flaws in their programs during the development process. Some of these techniques are discussed in . Instead of breaking into a network, application penetration testing breaks into a software application to uncover its security flaws.

A benefit of software security testing over other software security processes, such as code or design reviews, is that security testing can mirror actual attack scenarios to yield objective, quantifiable results and demonstrate real exploitability. Late in the software process, it is risky or expensive to change software to eliminate vulnerabilities. Evaluating the damage a vulnerability could cause if it is exploited can help you decide whether to fix a security problem immediately or in the next scheduled software release. This topic is discussed in detail in .

This chapter discusses security testing versus traditional software testing and shows you why security testing requires a paradigm shift in the approach to testing. Additionally, this chapter covers various high-level security testing strategies, including the discovery process, thinking like an attacker, and prioritizing security testing.

Security Testing Versus Traditional Software Testing

Traditional software testing focuses mainly on verifying functional requirements. It generally answers the question "Does the application meet all the requirements outlined in the use cases and requirements documentation?" On a secondary level, traditional testing also focuses on specified operational requirements, such as performance, stress, backup, and recoverability. Operational requirements, however, are often narrowly understood or specified, making them difficult to verify. Security requirements in traditional testing environments are often sparsely stated or omitted entirely, so such environments have few or no security test scenarios.

In a traditional testing environment, software testers build test cases and scenarios based on the application's requirements. Various testing techniques and strategies are used to methodically exercise each function of the application to ensure that it is working properly.

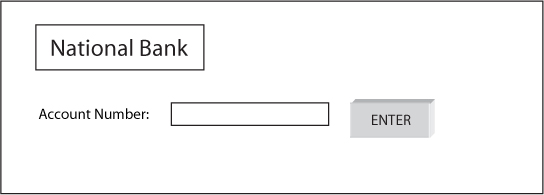

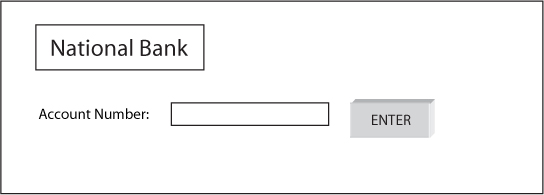

A seemingly straightforward, simplified requirement such as "A financial application should accept a bank account number and display an account balance" (see ) can require numerous test scenarios if tested exhaustively. For example, it would require scenarios that exercise the function the way a regular user would, plus scenarios that focus on the variations and permutations of input boundaries of the bank account number field that could break the system.

Figure 1-1 Account entry form

This functional type of testing verifies that valid inputs produce the expected outputs (positive testing) and that the system gracefully handles invalid inputs, such as by displaying useful error messages, without unexpected behavior such as a server crash (negative testing).
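To make the distinction concrete, here is a minimal Python sketch of one positive and one negative test against the account-balance requirement. The `get_balance` function, the `AccountError` exception, the `ACCOUNTS` data, and the error messages are all hypothetical stand-ins for a real application; the sketch only shows that a positive test compares a valid input with its expected output, while a negative test checks that invalid input yields a handled error rather than a crash.

```python
# Hypothetical account data standing in for the application's back end.
ACCOUNTS = {"123456789012": 250.00}


class AccountError(ValueError):
    """Hypothetical exception carrying a user-facing error message."""


def get_balance(account_number: str) -> float:
    """Hypothetical lookup: returns the balance or raises AccountError."""
    if (len(account_number) != 12
            or not account_number.isascii()
            or not account_number.isdigit()):
        raise AccountError("Account number must be exactly 12 digits.")
    if account_number not in ACCOUNTS:
        raise AccountError("Account not found.")
    return ACCOUNTS[account_number]


def positive_test() -> None:
    # Positive testing: a valid input must produce the expected output.
    assert get_balance("123456789012") == 250.00


def negative_test(bad_input: str) -> str:
    # Negative testing: invalid input must be rejected with a useful message.
    # Any exception other than AccountError propagates, which flags a "crash".
    try:
        get_balance(bad_input)
    except AccountError as err:
        return str(err)
    raise AssertionError(f"invalid input {bad_input!r} was accepted")


if __name__ == "__main__":
    positive_test()
    for bad in ["", "12345", "abcdefghijkl", "999999999999"]:
        print(f"{bad!r}: {negative_test(bad)}")
```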

In the case of testing for the bank account number boundaries, the requirement might state that the account number must contain exactly 12 numeric characters.

A simplified testing example would look like this:

- The positive boundary is as follows:

Enter exactly 12 numeric characters into the Account Number field, press Enter, and evaluate the system behavior.

- The negative boundary is as follows:

Enter 11 (max - 1) numeric characters into the Account Number field, press Enter, and evaluate the system behavior. (The system should display an error message.)

Try to enter 13 (max + 1) numeric characters into the Account Number field. The system shouldn't allow the user to enter more than 12 numeric characters.
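The boundary cases above lend themselves to automation. The following is a minimal sketch, assuming a hypothetical server-side validator `is_valid_account_number` that implements the "exactly 12 numeric characters" rule with a regular expression; the names `MAX_LEN` and `boundary_cases` are likewise illustrative, not part of any real application.

```python
import re

# Maximum (and required) length of an account number, per the requirement.
MAX_LEN = 12

# [0-9] is used instead of \d because, for str patterns, Python's \d also
# matches non-ASCII Unicode digits, which the requirement does not intend.
ACCOUNT_PATTERN = re.compile(r"[0-9]{%d}" % MAX_LEN)


def is_valid_account_number(value: str) -> bool:
    """Hypothetical validator: True only for exactly 12 ASCII digits."""
    # fullmatch() anchors both ends, so extra characters are rejected.
    return ACCOUNT_PATTERN.fullmatch(value) is not None


def boundary_cases():
    """Return (input, expected_validity) pairs for the length boundaries."""
    return [
        ("1" * MAX_LEN, True),         # positive boundary: exactly 12 digits
        ("1" * (MAX_LEN - 1), False),  # negative boundary: max - 1
        ("1" * (MAX_LEN + 1), False),  # negative boundary: max + 1
    ]


if __name__ == "__main__":
    for value, expected in boundary_cases():
        result = is_valid_account_number(value)
        status = "PASS" if result == expected else "FAIL"
        print(f"{status}: {len(value)} characters -> valid={result}")
```

The 13-character case is checked in the validator as well as in the form because a length limit enforced only by the input field can be bypassed by sending the request directly. That kind of "shouldn't" is where security testing picks up from functional testing.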