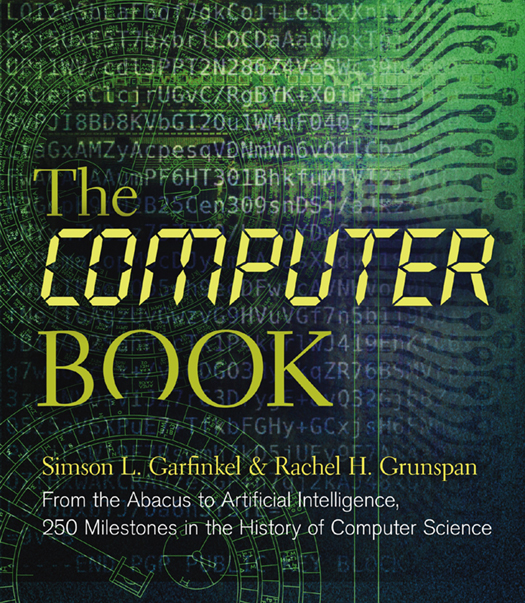

FROM THE ABACUS TO ARTIFICIAL INTELLIGENCE, 250 MILESTONES IN THE HISTORY OF COMPUTER SCIENCE

Simson L. Garfinkel and Rachel H. Grunspan

STERLING and the distinctive Sterling logo are registered trademarks of Sterling Publishing Co., Inc.

All rights reserved. No part of this publication may be reproduced, stored in a retrieval system, or transmitted in any form or by any means (including electronic, mechanical, photocopying, recording, or otherwise) without prior written permission from the publisher.

All trademarks are the property of their respective owners, are used for editorial purposes only, and the publisher makes no claim of ownership and shall acquire no right, title or interest in such trademarks by virtue of this publication.

For information about custom editions, special sales, and premium and corporate purchases, please contact Sterling Special Sales at 800-805-5489 or .

Introduction

The evolution of the computer likely began with the human desire to comprehend and manipulate the environment. The earliest humans recognized the phenomenon of quantity and used their fingers to count and act upon material items in their world. Simple methods such as these eventually gave way to the creation of proxy devices such as the abacus, which enabled action on larger quantities of items, and wax tablets, on which pressed symbols enabled information storage. Continued progress depended on harnessing and controlling the power of the natural world: steam, electricity, light, and finally the amazing potential of the quantum world. Over time, our new devices increased our ability to save and find what we now call data, to communicate over distances, and to create information products assembled from countless billions of elements, all transformed into a uniform digital format.

These functions are the essence of computation: the ability to augment and amplify what we can do with our minds, extending our impact to levels of superhuman reach and capacity.

These superhuman capabilities that most of us now take for granted were a long time coming, and it is only in recent years that access to them has been democratized and scaled globally. A hundred years ago, the instantaneous communication afforded by telegraph and long-distance telephony was available only to governments, large corporations, and wealthy individuals. Today, the ability to send international, instantaneous messages such as email is essentially free to the majority of the world's population.

In this book, we recount a series of connected stories of how this change happened, selecting what we see as the seminal events in the history of computing. The development of computing is in large part the story of technology, both because no invention happens in isolation, and because technology and computing are inextricably linked; fundamental technologies have allowed people to create complex computing devices, which in turn have driven the creation of increasingly sophisticated technologies.

The same sort of feedback loop has accelerated other related areas, such as the mathematics of cryptography and the development of high-speed communications systems. For example, the development of public key cryptography in the 1970s provided the mathematical basis for sending credit card numbers securely over the internet in the 1990s. This incentivized many companies to invest money to build websites and e-commerce systems, which in turn provided the financial capital for laying high-speed fiber optic networks and researching the technology necessary to build ever-faster microprocessors.
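To give a rough sense of the mathematics involved, here is a toy sketch of RSA, one well-known public key scheme from the 1970s. It uses tiny textbook primes and a small number standing in for a piece of a card number; these values are assumptions chosen for illustration, and the sketch is in no way secure. The point it shows is the asymmetry: anyone can encrypt with the public pair (n, e), but only the holder of the private exponent d can decrypt.

```python
# Toy RSA with tiny textbook primes: illustrative only, NOT secure.
# All values below are hypothetical choices for the example.
p, q = 61, 53              # two small primes (real keys use primes hundreds of digits long)
n = p * q                  # public modulus
phi = (p - 1) * (q - 1)    # used to derive the private exponent
e = 17                     # public exponent, chosen coprime with phi
d = pow(e, -1, phi)        # private exponent: modular inverse of e (Python 3.8+)


def encrypt(m: int) -> int:
    """Anyone holding the public key (n, e) can encrypt a number m < n."""
    return pow(m, e, n)


def decrypt(c: int) -> int:
    """Only the holder of the private exponent d can recover m."""
    return pow(c, d, n)


message = 65               # hypothetical stand-in for a small piece of sensitive data
ciphertext = encrypt(message)
print(ciphertext, decrypt(ciphertext))
```

Real systems add padding schemes and far larger keys; the sketch keeps only the core modular arithmetic.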

In this collection of essays, we see the history of computing as a series of overlapping technology waves, including:

Human computation. More than people who were simply facile at math, the earliest computers were humans who performed repeated calculations for days, weeks, or months at a time. The first human computers successfully plotted the trajectory of Halley's Comet. After this demonstration, teams were put to work producing tables for navigation and the computation of logarithms, with the goal of improving the accuracy of warships and artillery.

Mechanical calculation. Starting in the 17th century with the invention of the slide rule, computation was increasingly realized with the help of mechanical aids. This era is characterized by mechanisms such as Oughtred's slide rule and mechanical adding machines such as Charles Babbage's difference engine and the arithmometer.

Connected with mechanical computation is mechanical data storage. In the 18th century, engineers working on a variety of systems hit upon the idea of using holes in cards and tape to represent repeating patterns of information that could be stored and automatically acted upon. The Jacquard loom used holes on stiff cards to enable automated looms to weave complex, repeating patterns. Herman Hollerith used smaller punch cards to manage the scale and complexity of processing population information for the 1890 US Census, and Émile Baudot created a device that let human operators punch holes in a roll of paper to represent characters as a way of making more efficient use of long-distance telegraph lines. Boole's algebra lets us interpret these representations of information (holes and spaces) as binary 1s and 0s, fundamentally altering how information is processed and stored.
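The idea of reading holes and spaces as 1s and 0s can be made concrete with a short sketch. It assumes a hypothetical notation in which "o" marks a hole and "." marks an unpunched position, and uses five positions per row, as in Baudot's five-unit telegraph code; the mapping from patterns to actual letters is deliberately left out, since the point is only that each row becomes a binary number.

```python
# Interpret rows of punched tape as binary numbers.
# Hypothetical notation: 'o' marks a hole (1), '.' marks no hole (0).


def row_to_bits(row: str) -> list[int]:
    """Turn one row of tape into a list of bits: hole -> 1, blank -> 0."""
    return [1 if mark == "o" else 0 for mark in row]


def bits_to_int(bits: list[int]) -> int:
    """Read the bits most-significant first as an unsigned binary number."""
    value = 0
    for bit in bits:
        value = value * 2 + bit
    return value


tape = ["o.o..", "...oo", "ooooo"]  # hypothetical five-position rows
for row in tape:
    bits = row_to_bits(row)
    print(row, bits, bits_to_int(bits))
```

With five positions per row there are 2^5 = 32 distinct patterns, which is why a five-unit code needs extra tricks (such as shift characters) to cover both letters and figures.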

With the capture and control of electricity came electric communication and computation. Charles Wheatstone in England and Samuel Morse in the US both built systems that could send digital information down a wire for many miles. By the end of the 19th century, engineers had joined together millions of miles of wires with relays, switches, and sounders, as well as the newly invented speakers and microphones, to create vast international telegraph and telephone communications networks. In the 1930s, scientists in England, Germany, and the US realized that the same electrical relays that powered the telegraph and telephone networks could also be used to calculate mathematical quantities. Meanwhile, magnetic recording technology was developed for storing and playing back sound, technology that would soon be repurposed for storing additional types of information.
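How can relays calculate? A simplified model helps: treat a closed contact as True, so two contacts wired in series conduct only when both are closed (AND), two in parallel conduct when either is closed (OR), and a normally-closed contact opens when its relay is energized (NOT). The sketch below builds a one-bit full adder from those three rules and chains it into a ripple-carry adder. It is an abstraction under these assumptions, not a model of any particular 1930s machine; the function names are hypothetical.

```python
# Simplified model of relay switching logic: a closed contact is True.


def series(a: bool, b: bool) -> bool:
    """Two contacts in series conduct only if both are closed (AND)."""
    return a and b


def parallel(a: bool, b: bool) -> bool:
    """Two contacts in parallel conduct if either is closed (OR)."""
    return a or b


def normally_closed(a: bool) -> bool:
    """A normally-closed contact opens when its relay is energized (NOT)."""
    return not a


def xor(a: bool, b: bool) -> bool:
    """Exclusive OR built only from series, parallel, and NOT contacts."""
    return parallel(series(a, normally_closed(b)), series(normally_closed(a), b))


def full_adder(a: bool, b: bool, carry_in: bool) -> tuple[bool, bool]:
    """Add three one-bit inputs; return (sum_bit, carry_out)."""
    partial = xor(a, b)
    sum_bit = xor(partial, carry_in)
    carry_out = parallel(series(a, b), series(partial, carry_in))
    return sum_bit, carry_out


def add(x: int, y: int, width: int = 8) -> int:
    """Ripple-carry addition of two unsigned integers, one bit at a time."""
    carry = False
    result = 0
    for i in range(width):
        a = bool((x >> i) & 1)
        b = bool((y >> i) & 1)
        s, carry = full_adder(a, b, carry)
        result |= int(s) << i
    return result  # any carry out of the top bit is discarded


print(add(19, 23))
```

The 8-bit width is an arbitrary choice for the example; a wider adder is just more copies of the same circuit.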

Electronic computation. In 1906, scientists discovered that a beam of electrons traveling through a vacuum could be switched by applying a slight voltage to a metal mesh, and the vacuum tube was born. In the 1940s, scientists tried using tubes in their calculators and discovered that tubes switched roughly a thousand times faster than relays. Replacing relays with tubes allowed the creation of computers that were correspondingly faster than the previous generation.

Solid state computing. Semiconductors, materials that can change their electrical properties, were discovered in the 19th century, but it wasn't until the middle of the 20th century that scientists at Bell Laboratories discovered and then perfected a semiconductor electronic switch: the transistor. Faster still than tubes, semiconductors use dramatically less power than tubes and can be made smaller than the eye can see. They are also incredibly rugged. The first transistorized computers appeared in 1953; within a decade, transistors had replaced tubes everywhere, except for the computer's screen. That wouldn't happen until the widespread deployment of flat-panel screens in the 2000s.