Joel Grus - K-means and hierarchical clustering with Python

Published in 2016 by O'Reilly Media, Inc.

- Book: K-means and hierarchical clustering with Python

- Author: Joel Grus

- Publisher: O'Reilly Media, Inc.

- Year: 2016

K-means and hierarchical clustering with Python: summary, description and annotation

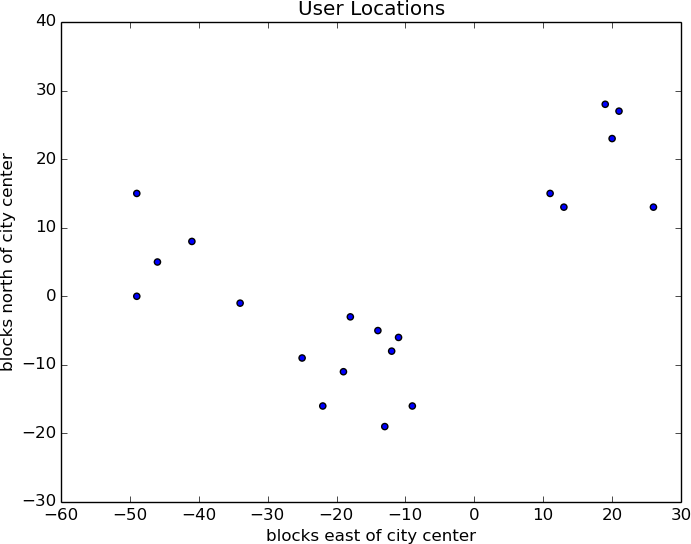

Abstract: Clustering is the usual starting point for unsupervised machine learning. This lesson introduces the k-means and hierarchical clustering algorithms, implemented in Python code.

Why is it important?

Whenever you look at a data source, it's likely that the data will somehow form clusters. Datasets with higher dimensions become increasingly difficult to eyeball based on human perception and intuition. These clustering algorithms allow you to discover similarities within data at scale, without first having to label a large training dataset.

What you'll learn and how you can apply it

- Understand how the k-means and hierarchical clustering algorithms work.
- Create classes in Python to implement these algorithms, and learn how to apply them in example applications.
- Identify clusters of similar inputs, and find a representative value for each cluster.
- Prepare to use your own implementations or reuse algorithms implemented in scikit-learn.

This lesson is for you because

- People interested in data science need to learn how to implement k-means and bottom-up hierarchical clustering algorithms

Prerequisites

- Some experience writing code in Python
- Experience working with data in vector or matrix format

Materials or downloads needed in advance

- Download this code, where you'll find this lesson's code in Chapter 19, plus you'll need the linear_algebra functions from Chapter 4.

This lesson is taken from Data Science from Scratch by Joel Grus.

K-means partitions the inputs into k clusters in a way that minimizes the total sum of squared distances from each point to the mean of its assigned cluster.
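The objective described here can be sketched in plain Python. This is a minimal illustration of the standard k-means iteration (assign each point to its nearest mean, then recompute the means), not necessarily the implementation used in the lesson. The function names `squared_distance`, `vector_mean`, `k_means`, and `total_squared_error`, and the `max_iterations` and `seed` parameters, are assumptions introduced for this example.

```python
import random
from typing import List, Sequence, Tuple

Vector = Sequence[float]


def squared_distance(v: Vector, w: Vector) -> float:
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((vi - wi) ** 2 for vi, wi in zip(v, w))


def vector_mean(vectors: List[Vector]) -> List[float]:
    """Component-wise mean of a non-empty list of vectors."""
    n = len(vectors)
    return [sum(component) / n for component in zip(*vectors)]


def closest_index(point: Vector, means: List[Vector]) -> int:
    """Index of the mean nearest to the point (ties go to the lowest index)."""
    return min(range(len(means)), key=lambda i: squared_distance(point, means[i]))


def k_means(points: List[Vector], k: int, max_iterations: int = 100,
            seed: int = 0) -> Tuple[List[List[float]], List[int]]:
    """Return (means, assignments) after iterating until assignments stop changing."""
    rng = random.Random(seed)  # hypothetical seed parameter, for repeatable runs
    means = [list(p) for p in rng.sample(points, k)]  # start from k distinct inputs
    assignments: List[int] = []
    for _ in range(max_iterations):
        new_assignments = [closest_index(p, means) for p in points]
        if new_assignments == assignments:
            break  # converged: no point changed cluster
        assignments = new_assignments
        for i in range(k):
            members = [p for p, a in zip(points, assignments) if a == i]
            if members:  # leave the mean unchanged if a cluster is empty
                means[i] = vector_mean(members)
    return means, assignments


def total_squared_error(points: List[Vector], means: List[Vector],
                        assignments: List[int]) -> float:
    """The quantity k-means tries to minimize."""
    return sum(squared_distance(p, means[a]) for p, a in zip(points, assignments))


# Usage: two visibly separated groups of 2-D points.
points = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0),
          (10.0, 10.0), (10.0, 11.0), (11.0, 10.0)]
means, assignments = k_means(points, k=2)
print(means, assignments, total_squared_error(points, means, assignments))
```

Each iteration can only lower or preserve the total squared error, so the loop terminates, but the result is a local minimum that depends on the starting means. Running the sketch from several seeds and keeping the lowest `total_squared_error` is a common way to reduce that sensitivity.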
