MEAP VERSION 6

About this MEAP

You can download the most up-to-date version of your electronic books from your Manning Account at .

Manning Publications Co. We welcome reader comments about anything in the manuscript - other than typos and other simple mistakes. These will be cleaned up during production of the book by copyeditors and proofreaders.

https://livebook.manning.com/#!/book/pyspark-in-action/discussion

Welcome

Thank you for purchasing the MEAP for PySpark in Action: Python data analysis at scale. It is a lot of fun (and work!) and I hope youll enjoy reading it as much as I am enjoying writing the book.

My journey with PySpark is pretty typical: the company I used to work for migrated their data infrastructure to a data lake and realized along the way that their usual warehouse-type jobs didnt work so well anymore. I spent most of my first months there figuring out how to make PySpark work for my colleagues and myself, starting from zero. This book is very influenced by the questions I got from my colleagues and students (and sometimes myself). Ive found that combining practical experience through real examples with a little bit of theory brings not only proficiency in using PySpark, but also how to build better data programs. This book walks the line between the two by explaining important theoretical concepts without being too laborious.

This book covers a wide range of subjects, since PySpark is itself a very versatile platform. I divided the book into three parts.

- Part 1: Walk teaches how PySpark works and how to get started and perform basic data manipulation.

- Part 2: Jog builds on the material contained in Part 1 and goes through more advanced subjects. It covers more exotic operations and data formats and explains more what goes on under the hood.

- Part 3: Run tackles the cooler stuff: building machine learning models at scale, squeezing more performance out of your cluster, and adding functionality to PySpark.

To have the best time possible with the book, you should be at least comfortable using Python. It isnt enough to have learned another language and transfer your knowledge into Python. I cover more niche corners of the language when appropriate, but youll need to do some research on your own if you are new to Python.

Furthermore, this book covers how PySpark can interact with other data manipulation frameworks (such as Pandas), and those specific sections assume basic knowledge of Pandas.

Finally, for some subjects in Part 3, such as machine learning, having prior exposure will help you breeze through. Its hard to strike a balance between not enough explanation and too much explanation; I do my best to make the right choices.

Your feedback is key in making this book its best version possible. I welcome your comments and thoughts in the liveBook discussion forum.

Thank you again for your interest and in purchasing the MEAP!

Jonathan Rioux

Brief Table of Contents

Part 1:Walk

Part 2:

Faster big data processing: a primer

Part 3:

A foray into machine learning: logistic regression with PySpark

Simplifying your experiments with machine learning pipelines

Machine learning for unstructured data

PySpark for graphs: GraphFrames

Testing PySpark code

Going full circle: structuring end-to-end PySpark code

Appendixes:

Using PySpark with a cloud provider

Python essentials

PySpark data types

Efficiently using PySparks API documentation

Introduction

In this chapter, you will learn:

What is PySpark

Why PySpark is a useful tool for analytics

The versatility of the Spark platform and its limitations

PySparks way of processing data

According to pretty much every news outlet, data is everything, everywhere. Its the new oil, the new electricity, the new gold, plutonium, even bacon! We call it powerful, intangible, precious, dangerous. I prefer calling it useful in capable hands . After all, for a computer, any piece of data is a collection of zeroes and ones, and it is our responsibility, as users, to make sense of how it translates to something useful.

Just like oil, electricity, gold, plutonium and bacon (especially bacon!), our appetite for data is growing. So much, in fact, that computers arent following. Data is growing in size and complexity, yet consumer hardware has been stalling a little. RAM is hovering for most laptops at around 8 to 16 GB, and SSD are getting prohibitively expensive past a few terabytes. Is the solution for the burgeoning data analyst to triple-mortgage his life to afford top of the line hardware to tackle Big Data problems?

Introducing Spark, and its companion PySpark, the unsung heroes of large-scale analytical workloads. They take a few pages of the supercomputer playbook powerful, but manageable, compute units meshed in a network of machines and bring it to the masses. Add on top a powerful set of data structures ready for any work youre willing to throw at them, and you have a tool that will grow (pun intended) with you.

This book is great introduction to data manipulation and analysis using PySpark. It tries to cover just enough theory to get you comfortable, while giving enough opportunities to practice. Each chapter except this one contains a few exercices to anchor what you just learned. The exercises are all solved and explained in Appendix A.

1.1 What is PySpark?

Whats in a name? Actually, quite a lot. Just by separating PySpark in two, one can already deduce that this will be related to Spark and Python. And it would be right!

At the core, PySpark can be summarized as being the Python API to Spark. While this is an accurate definition, it doesnt give much unless you know the meaning of Python and Spark. If we were in a video game, I certainly wouldnt win any prize for being the most useful NPC. Lets continue our quest to understand what is PySpark by first answering What is Spark? .

1.1.1 You saw it coming: What is Spark?

Spark, according to their authors, is a unified analytics engine for large-scale data processing . This is a very accurate definition, if a little dry.

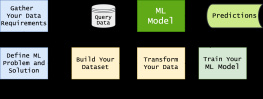

Digging a little deeper, we can compare Spark to an analytics factory . The raw material here, data comes in, and data, insights, visualizations, models, you name it! comes out.

Just like a factory will often gain more capacity by increasing its footprint, Spark can process an increasingly vast amount of data by scaling out instead of scaling up . This means that, instead of buying thousand of dollars of RAM to accommodate your data set, youll rely instead of multiple computers, splitting the job between them. In a world where two modest computers are less costly than one large one, it means that scaling out is less expensive than up, keeping more money in your pockets.

The problem with computers is that they crash or behave unpredictably once in a while. If instead of one, you have a hundred, the chance that at least one of them go down is now much higher. Spark goes therefore through a lot of hoops to manage, scale, and babysit those poor little sometimes unstable computers so you can focus on what you want, which is to work with data.