1. An Overview of the Salesforce Analytics Cloud

Electronic supplementary material

The online version of this chapter (doi: 10.1007/978-1-4842-1203-5_1) contains supplementary material, which is available to authorized users.

Know where to find information and how to use it; that is the secret of success.

Albert Einstein

Einstein's sentiment, expressed in this concise quotation, captures our innate need to acquire and comprehend information. This is because information enables us to make decisions that are not impaired by bias or poor judgment. One could argue that this thought gave rise to computers, which were first developed to perform mathematical calculations faster. As computers have evolved, innovative strategies and technologies have also evolved to allow information in those computers to be more readily used in decision making. These strategies range from statistical methods to technologies that facilitate the storage of data for rapid retrieval, as well as visualization technologies.

In this book, we cover the Salesforce Analytics Cloud, one of the latest technologies to enable the productive use of information. The Salesforce Analytics Cloud belongs to a class of products known as data discovery products, the term research firms usually use for this technology. The Salesforce Analytics Cloud features a high-performance storage infrastructure that facilitates rapid data retrieval and advanced visualizations allowing information to be quickly understood, all delivered from the cloud infrastructure that underpins the success of Salesforce products. Before we delve into the Salesforce Analytics Cloud, though, let's set the context for this product and survey the data discovery products with a discussion of computers and technology in reporting and analytics.

Evolution of Business Intelligence and Reporting Systems

As computers evolved into systems to manage information, they were increasingly utilized in business and commerce. These early computers stored data in simple flat files. With increasing volumes of data, a more efficient and organized means of storing the data became necessary, which led to the creation of relational databases. Relational databases were capable of storing transactional data efficiently, but not in a manner that is easily queried or mined. The need to analyze information in transactional databases easily and quickly for reporting and analysis summoned into existence data warehouses and business intelligence (BI) tools. For over 20 years, these warehouses and tools have delivered reliable answers to typical questions, and they will continue to be used for the foreseeable future.

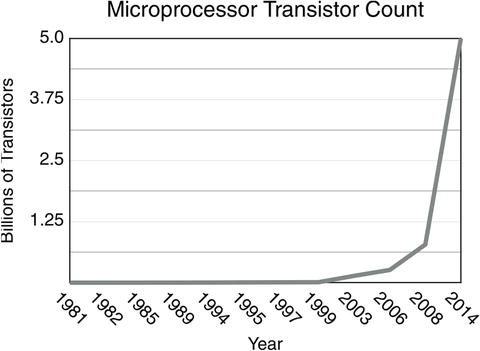

During this time, computer technology progressed at an astonishing rate, owing to increases in the power of microprocessors, the central brain of all computers. The 1980s marked the beginning of personal computer usage in business, with the introduction of the IBM personal computer in 1981, utilizing an Intel 8088 microprocessor with a total of 29,000 transistors (transistors are the electronic switches used in microprocessors). By the end of the 1980s, Intel Corporation had introduced the 80486, its first microprocessor having more than 1 million transistors. By 2014, the transistor count in Intel microprocessors exceeded 5.5 billion. Figure 1-1 illustrates the incredible rate of increase in transistor count since the first personal computer.

Figure 1-1. Transistor count of Intel microprocessors from 1981 to 2014
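To put the growth rate in perspective, the two endpoints quoted in the text (29,000 transistors in 1981 and roughly 5.5 billion in 2014) can be turned into an average doubling time. The following is a minimal sketch that assumes smooth exponential growth between those two points, which real product generations only approximate; the function and variable names are illustrative.

```python
import math

# Endpoints quoted in the text for the period covered by Figure 1-1.
START_YEAR, START_COUNT = 1981, 29_000         # Intel 8088 in the IBM PC
END_YEAR, END_COUNT = 2014, 5_500_000_000      # "exceeded 5.5 billion"


def doubling_time_years(start_count, end_count, years):
    """Average doubling time, assuming smooth exponential growth."""
    doublings = math.log2(end_count / start_count)
    return years / doublings


growth_factor = END_COUNT / START_COUNT
doubling = doubling_time_years(START_COUNT, END_COUNT, END_YEAR - START_YEAR)

print(f"Growth factor: about {growth_factor:,.0f}x")
print(f"Average doubling time: about {doubling:.1f} years")
```

Under that assumption, the transistor count doubled on average a little less than every two years across the 33-year span.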

Intel has shipped over 200 million microprocessors whose collective transistor count is over 50 quadrillion or, put another way, more than 7 million CPU transistors for every person on earth. Microprocessors have long since left the confines of the personal computer, however, and have invaded nearly every aspect of our lives: in personal devices like smartphones and wearable health-monitoring devices, automotive control features, and smart meters monitoring the electricity usage of our homes. It comes as no surprise, then, that this staggering amount of computing power produces an equally staggering amount of data.

Global computer networking has led to information sharing on an unprecedented scale, primarily through the Internet. The Internet has enabled communication media that command considerable attention in our daily lives: email, social media, and informative or sales websites. One commonality of all of this data is that it lies outside the transactional databases we mentioned earlier, and it is not easily accessible to BI tools for reporting and analysis. The growth of this data in the last decade has meant that 80 to 90 percent of the data relevant to individuals and organizations lies outside the transactional databases and, to a large extent, out of our reach.

Most of this data is classified as unstructured or semi-structured data because it lacks the strongly defined data types and well-defined data models found in structured data sources, like relational databases. Unstructured and semi-structured data does not have the static fields or organizational features of structured data sources. Table 1-1 lists examples of data sources that fall into each classification.

Table 1-1. Data Types, Characteristics, and Examples

| Data Type | Characteristics | Example(s) |
|---|---|---|
| Structured data | Data model that has defined rectangular tables containing rows and columns, and referential integrity between tables | Relational databases (RDBMS) |
| Semi-structured data | Loosely defined data model, internal structure | XML, CSV, spreadsheet files (Excel), JSON |
| Unstructured data | No data model | Documents, email, social media, blogs, customer reviews, media files including image files and video |
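The three classifications in Table 1-1 can be illustrated with a small sketch that represents the same kind of purchase information in each form. The customer name, amounts, table names, and review text below are invented for illustration, and the sketch uses only Python's standard `sqlite3` and `json` modules.

```python
import json
import sqlite3

# Structured: a fixed schema with typed columns and a foreign key
# that enforces referential integrity between the two tables.
conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")
conn.execute("CREATE TABLE customer (id INTEGER PRIMARY KEY, name TEXT NOT NULL)")
conn.execute(
    "CREATE TABLE purchase ("
    " id INTEGER PRIMARY KEY,"
    " customer_id INTEGER NOT NULL REFERENCES customer(id),"
    " amount REAL NOT NULL)"
)
conn.execute("INSERT INTO customer (id, name) VALUES (1, 'Ada')")
conn.execute("INSERT INTO purchase (customer_id, amount) VALUES (1, 42.50)")
structured_total = conn.execute(
    "SELECT SUM(amount) FROM purchase WHERE customer_id = 1"
).fetchone()[0]

# A purchase that points at a non-existent customer is rejected.
try:
    conn.execute("INSERT INTO purchase (customer_id, amount) VALUES (99, 1.0)")
    orphan_rejected = False
except sqlite3.IntegrityError:
    orphan_rejected = True

# Semi-structured: records carry their own internal structure, but
# nothing forces every record to have the same fields.
semi_structured = json.loads(
    '[{"customer": "Ada", "amount": 42.50},'
    ' {"customer": "Ada", "amount": 10.0, "coupon": "SPRING"}]'
)
semi_total = sum(record["amount"] for record in semi_structured)

# Unstructured: free text with no data model; any meaning has to be
# extracted by searching or parsing the text itself.
unstructured = "Ada wrote: loved the product, though the 42.50 price felt steep."
mentions_price = "42.50" in unstructured

print(structured_total, orphan_rejected, semi_total, mentions_price)
```

The structured case lets the database answer the question and guard consistency; the semi-structured case requires the reader of the data to cope with optional fields; the unstructured case offers no fields at all, which is why such data is hard to reach with traditional BI tools.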

The Challenge of Data Variety

This unstructured and semi-structured data from many disparate sources has created a data management problem because the quantity of data and the rate at which it is produced are beyond the processing capabilities of relational databases. Data having these attributes is known as Big Data, and its most important attributes are commonly referred to as the three Vs: the unusually high volume of the data, the velocity at which the data is produced, and the wide variety of sources of this data. To meet the challenges presented by Big Data, there has been strong interest in a class of clustered file-system technologies designed to store it. By far the best known of these technologies is the Hadoop Distributed File System, or HDFS, which is available through the Apache Software Foundation as part of Apache Hadoop; it is capable of coping with the volume and velocity problems of Big Data.

HDFS can be deployed on low-cost hardware, and the low upfront cost has created an incentive for HDFS to be used to aggregate all data: not just Big Data but also data originating in transactional systems like ERP and CRM systems, and even data from data warehouses. These large collections of data are sometimes referred to as data lakes, since the data is simply thrown into them to be used later.