My thanks to Scott Moyers of Penguin Press for his editorial exuberance and my agent, Max Brockman, for his continued encouragement. A special thanks, once again, to Sara Lippincott for her thoughtful attention to the manuscript.

CONTENTS

It is exactly in the extension of the cybernetic idea to human beings that Wiener's conceptions missed their target.

Deep learning has its own dynamics, it does its own repair and its own optimization, and it gives you the right results most of the time. But when it doesn't, you don't have a clue about what went wrong and what should be fixed.

We may face the prospect of superintelligent machines, their actions by definition unpredictable by us and their imperfectly specified objectives conflicting with our own, whose motivations to preserve their existence in order to achieve those objectives may be insuperable.

Any system simple enough to be understandable will not be complicated enough to behave intelligently, while any system complicated enough to behave intelligently will be too complicated to understand.

We don't need artificial conscious agents. We need intelligent tools.

We are in a much more complex situation today than Wiener foresaw, and I am worried that it is much more pernicious than even his worst imagined fears.

The advantages of artificial over natural intelligence appear permanent, while the advantages of natural over artificial intelligence, though substantial at present, appear transient.

We should analyze what could go wrong with AI to ensure that it goes right.

Continued progress in AI can precipitate a change of cosmic proportions, a runaway process that will likely kill everyone.

There is no law of complex systems that says that intelligent agents must turn into ruthless megalomaniacs.

Misconceptions about human thinking and human origins are causing corresponding misconceptions about AGI and how it might be created.

Automated intelligent systems that will make good inferences about what people want must have good generative models for human behavior.

In the real world, an AI must interact with people and reason about them. People will have to formally enter the AI problem definition somewhere.

Just because AI systems sometimes end up in local minima, don't conclude that this makes them any less like life. Humans (indeed, probably all life-forms) are often stuck in local minima.

Many of the central arguments in The Human Use of Human Beings seem closer to the 19th century than the 21st. Wiener seems not to have fully embraced Shannon's notion of information as consisting of irreducible, meaning-free bits.

Although machine making and machine thinking might appear to be unrelated trends, they lie in each other's futures.

Hybrid superintelligences such as nation-states and corporations have their own emergent goals and their actions are not always aligned to the interests of the people who created them.

Our fears about AI reflect the belief that our intelligence is what makes us special.

How can we make a good human-artificial ecosystem, something that's not a machine society but a cyberculture in which we can all live as humans, a culture with a human feel to it?

Many contemporary artists are articulating various doubts about the promises of AI and reminding us not to associate the term "artificial intelligence" solely with positive outcomes.

Looking at what children do may give programmers useful hints about directions for computer learning.

By now, the legal, ethical, formal, and economic dimensions of algorithms are all quasi-infinite.

Probably we should be less concerned about us-versus-them and more concerned about the rights of all sentients in the face of an emerging unprecedented diversity of minds.

The work of cybernetically inclined artists concerns the emergent behaviors of life that elude AI in its current condition.

The most dramatic discontinuity will surely be when we achieve effective human immortality. Whether this will be achieved biologically or digitally isn't clear, but inevitably it will be achieved.

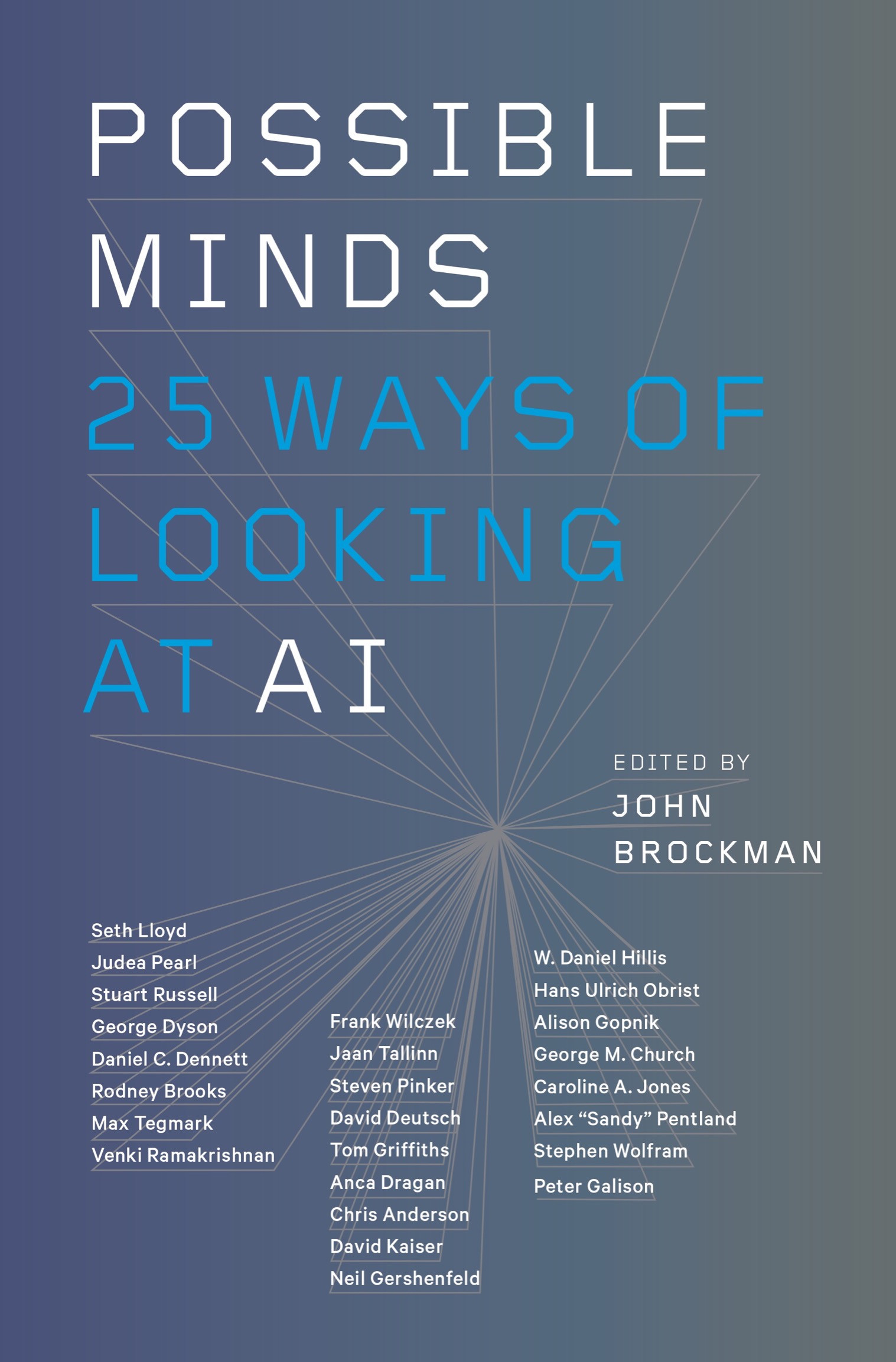

INTRODUCTION: ON THE PROMISE AND PERIL OF AI

Artificial intelligence is today's story, the story behind all other stories. It is the Second Coming and the Apocalypse at the same time: good AI versus evil AI. This book comes out of an ongoing conversation with a number of important thinkers, both in the world of AI and beyond it, about what AI is and what it means. Called the Possible Minds Project, this conversation began in earnest in September 2016, in a meeting at the Grace Mayflower Inn & Spa in Washington, Connecticut, with some of the book's contributors.

What quickly emerged from that first meeting is that the excitement and fear in the wider culture surrounding AI now has an analog in the way Norbert Wiener's ideas regarding cybernetics worked their way through the culture, particularly in the 1960s, as artists began to incorporate thinking about new technologies into their work. I witnessed the impact of those ideas at close hand; indeed, it's not too much to say they set me off on my life's path. With the advent of the digital era beginning in the early 1970s, people stopped talking about Wiener, but today, his Cybernetic Idea has been so widely adopted that it's internalized to the point where it no longer needs a name. It's everywhere, it's in the air, and it's a fitting place to begin.