The Many Uses of

Orthogonal

Functions

by

D. James Benton

Copyright 2018 by D. James Benton, all rights reserved.

Foreword

Orthogonal functions are clever tools that unlock many mathematical puzzles. Once you've seen this done and understand how they work, you will find many more useful applications. This remarkable area of applied mathematics supports a wide range of technologies, ranging from CAT scans to satellite pictures from space to unraveling the sounds of the deep. Join me on a tour of this fascinating topic in which we will explore data sampling and approximation in both temporal and spatial dimensions.

All of the examples contained in this book,

(as well as a lot of free programs) are available at

http://www.dudleybenton.altervista.org/software/index.html

Programming

Most of the examples in this book are implemented in the C programming language. A few are implemented in VBA (Visual BASIC for Applications, or what Microsoft calls the language of Excel macros). BASIC stands for Beginner's All-Purpose Symbolic Instruction Code. If you're still using some form of BASIC and haven't yet graduated to a professional programming language, now is the time to do so and there is nothing better than C. It's in a class by itself.

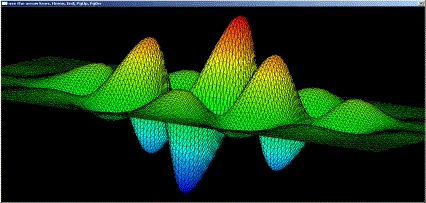

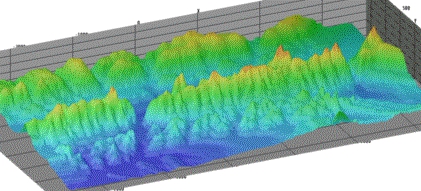

examples\surface\surface.exe Hermite.tb2

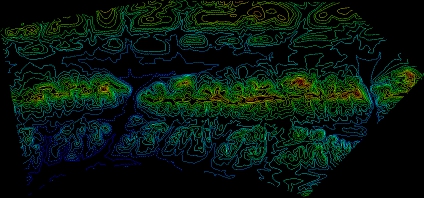

examples\topography\transformed.p3d

examples\topography\inverse_distance.tb2

Table of Contents | page |

Foreword | i |

Programming | i |

Chapter 1. Orthogonality: What Does it Mean? | |

Chapter 2. Magic of the Fast Fourier Transform | |

Chapter 3. Orthogonal Polynomials | |

Chapter 4. Infinite Domains | |

Chapter 5. 2D Data Applications | |

Chapter 6. Image Decomposition | |

Chapter 7. 3D Data Applications | |

Chapter 8: Spherical Data | |

Appendix A: Discrete Radon Transform | |

Appendix B. Image Rotation in Windows | |

Appendix C. Generation of Sinogram Images | |

Appendix D. Play It Anyway! | |

Appendix E: Triangle Gridder | |

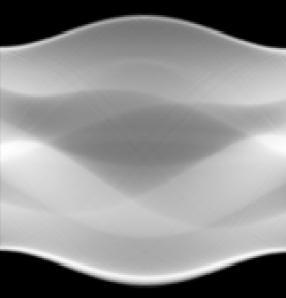

Shepp-Logan Phantom

Corresponding Sinogram

Chapter 1. Orthogonality: What Does it Mean?

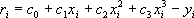

You must see this to appreciate it. While this may seem like a strange place to start, trust me, it will be eye opening Let's say you want to approximate some data using a simple polynomial of the form y=c+Cx+cx+cx+ The residual (i.e., error at each point) is given by:

(1.1)

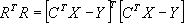

In matrix form, R contains the r i , C contains the c i , X contains the x i , and Y contains the y i . We can write:

(1.2)

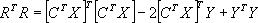

The sum of the squares of r i is equal to R T R , where R T is the transpose of R . Equation 1.2 can be expanded to obtain:

(1.3)

Equation 1.3 can be further expanded:

(1.4)

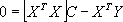

To find the coefficients resulting in the smallest residual, we take the derivative of Equation 1.4 with respect to C and set this equal to zero.

(1.5)

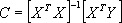

Solving Equation 1.5 for C yields:

(1.6)

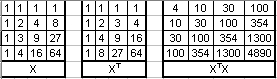

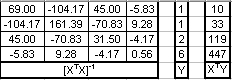

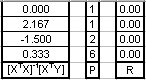

This is the fundamental equation of linear regression seeking the least squares residual. Let's see how this works for a typical problem with four coefficients and a polynomial order of three. The spreadsheet (discrete.xls) can be found in the online archive in folder examples\discrete. The matrices are:

Because the number of constants equals the number of points in this case, the residual is zero. This seems straightforward enough and is easily implemented in Excel with functions TRANSPOSE(), MMULT(), and MINVERSE(), as illustrated in the spreadsheet. You will get the same result using LINEST(). Note that Excel's LINEST function returns the coefficients in reverse order. There's even a shorthand way of specifying the powers of X:

=TRANSPOSE(LINEST(U1:U4,B1:B4^{1,2,3},TRUE,FALSE))

We can set up a program (condition.c) to step through a range of orders to obtain the following table. There are several definitions of the condition number of a matrix, including the ratio of the largest to smallest singular value. We can estimate this important measure of stability by accumulating the product of the pivots. The larger the condition number, the more unstable the matrix, the more susceptible it is to round-off error, and the more meaningless the inverse.

n | condition |

| |

| |

| |

| 82944 |

| 1.19E+09 |

| 6.19E+14 |

| 1.57E+22 |

| 2.56E+31 |

| 3.36E+42 |

| 2.35E+55 |

| 1.26E+72 |

| 4.45E+91 |

| 7.78E+114 |

| 3.95E+140 |