Throughout this book, we will explore Cascading and related open source projects in the context of brief programming examples. Familiarity with Java programming is required. We'll show additional code in Clojure, Scala, SQL, and R. The sample apps are all available in source code repositories on GitHub. These sample apps are intended to run on a laptop (Linux, Unix, and Mac OS X, but not Windows) using Apache Hadoop in standalone mode. Each example is built so that it will run efficiently with a large data set on a large cluster, but setting new world records on Hadoop isn't our agenda. Our intent here is to introduce a new way of thinking about how Enterprise apps get designed. We will show how to get started with Cascading and discuss best practices for Enterprise data workflows.

Enterprise Data Workflows

Cascading provides an open source API for writing Enterprise-scale apps on top of Apache Hadoop and other Big Data frameworks. In production use now for five years (as of 2013Q1), Cascading apps run at hundreds of different companies and in several verticals, which include finance, retail, health care, and transportation. Case studies have been published about large deployments at Williams-Sonoma, Twitter, Etsy, Airbnb, Square, The Climate Corporation, Nokia, Factual, uSwitch, Trulia, Yieldbot, and the Harvard School of Public Health. Typical use cases for Cascading include large extract/transform/load (ETL) jobs, reporting, web crawlers, anti-fraud classifiers, social recommender systems, retail pricing, climate analysis, geolocation, genomics, plus a variety of other kinds of machine learning and optimization problems.

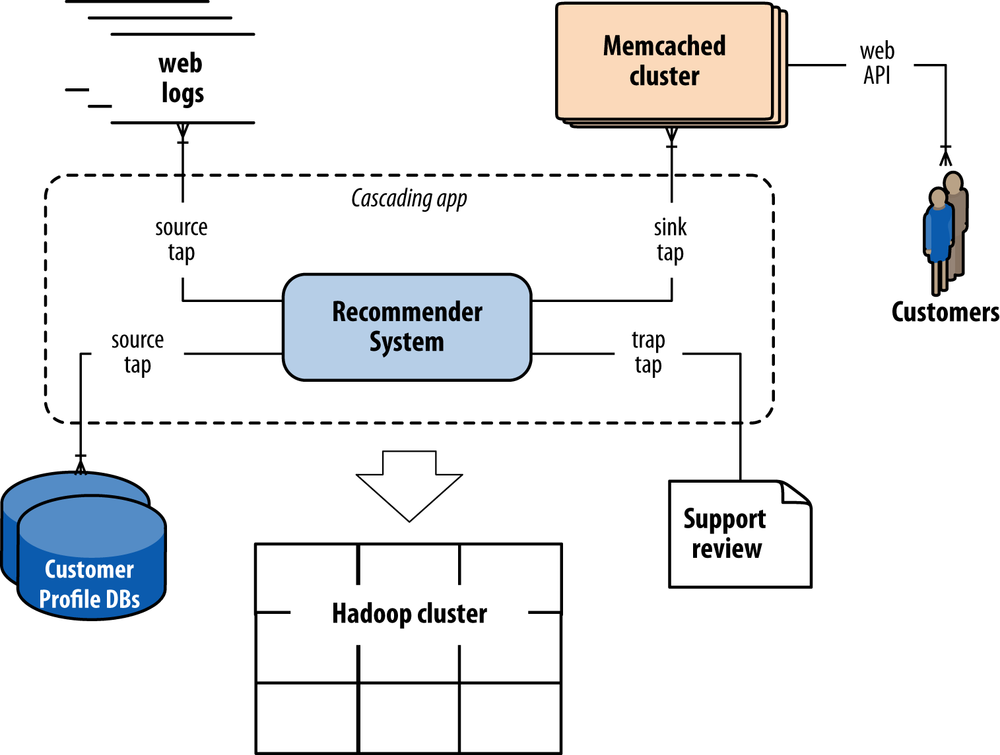

Keep in mind that Apache Hadoop rarely if ever gets used in isolation. Generally speaking, apps that run on Hadoop must consume data from a variety of sources, and in turn they produce data that must be used in other frameworks. For example, consider the hypothetical social recommender shown in Figure 1, whose results are to be served through an API. Cascading encompasses the schema and dependencies for each of those components in a workflow: data sources for input, business logic in the application, the flows that define parallelism, rules for handling exceptions, data sinks for end uses, etc. The problem at hand is much more complex than simply a sequence of Hadoop job steps.
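To make the idea of a workflow as a single unit (input sources, business logic, exception handling, and sinks) more concrete, here is a minimal sketch in plain Java. It deliberately does not use the Cascading API. The class `WorkflowSketch`, its `run` and `doubleScore` methods, the `Result` type, and the tab-separated sample records are all hypothetical names invented for this illustration. The "trap" list stands in for a rule that diverts bad records instead of failing the whole job.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.function.Function;

// Hypothetical, dependency-free model of a workflow; this is NOT the Cascading API.
class WorkflowSketch {

    /** Outcome of one run: records delivered to the sink, plus records diverted to the trap. */
    static final class Result {
        final List<String> sink = new ArrayList<>();
        final List<String> trap = new ArrayList<>();
    }

    /**
     * Runs one unit of work: reads every record from the source, applies the
     * business logic, and routes each record to the sink or, if the logic
     * throws, to the trap (the original record is kept for later inspection).
     */
    static Result run(List<String> source, Function<String, String> logic) {
        Result result = new Result();
        for (String record : source) {
            try {
                result.sink.add(logic.apply(record));
            } catch (RuntimeException e) {
                result.trap.add(record);
            }
        }
        return result;
    }

    /** Example business logic (an assumption): parse "user<TAB>score" and double the score. */
    static String doubleScore(String line) {
        String[] fields = line.split("\t");
        int score = Integer.parseInt(fields[1]);
        return fields[0] + "\t" + (score * 2);
    }

    public static void main(String[] args) {
        // The middle record is malformed on purpose, so it lands in the trap.
        List<String> source = Arrays.asList("alice\t3", "bob\tnot-a-number", "carol\t5");
        Result result = run(source, WorkflowSketch::doubleScore);
        System.out.println("sink: " + result.sink);
        System.out.println("trap: " + result.trap);
    }
}
```

The point of the sketch is only that the source, the logic, the failure rule, and the sink are declared together and run as one unit. A real workflow framework would add schemas, planning, and parallel execution on top of that.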

Figure 1. Example social recommender

Moreover, while Cascading has been closely associated with Hadoop, it is not tightly coupled to it. Flow planners exist for other topologies beyond Hadoop, such as in-memory data grids for real-time workloads. That way a given app could compute some parts of a workflow in batch and some in real time, while representing a consistent unit of work for scheduling, accounting, monitoring, etc. The system integration of many different frameworks means that Cascading apps define comprehensive workflows.

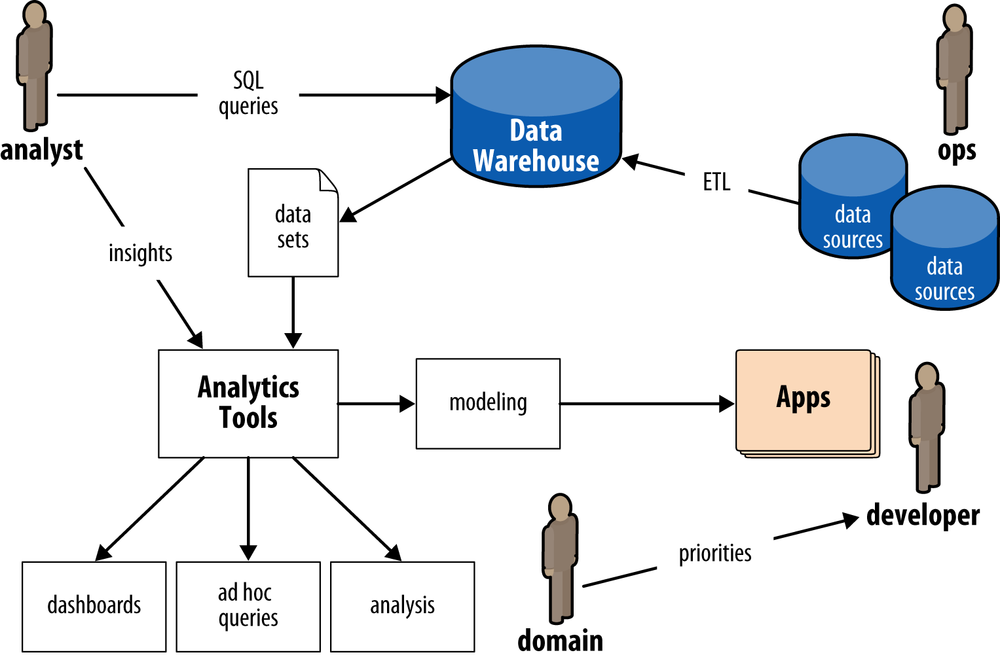

Circa early 2013, many Enterprise organizations are building out their Hadoop practices. There are several reasons, but for large firms the compelling reasons are mostly economic. Let's consider a typical scenario for Enterprise data workflows prior to Hadoop, shown in Figure 2.

An analyst typically would make a SQL query in a data warehouse such as Oracle or Teradata to pull a data set. That data set might be used directly for pivot tables in Excel for ad hoc queries, or as a data cube going into a business intelligence (BI) server such as MicroStrategy for reporting. In turn, a stakeholder such as a product owner would consume that analysis via dashboards, spreadsheets, or presentations. Alternatively, an analyst might use the data in an analytics platform such as SAS for predictive modeling, which gets handed off to a developer for building an application. Ops runs the apps, manages the data warehouse (among other things), and oversees ETL jobs that load data from other sources. Note that in this diagram there are multiple components (data warehouse, BI server, analytics platform, ETL) which have relatively expensive licensing and require relatively expensive hardware. Generally these apps scale up by purchasing larger and more expensive licenses and hardware.

Figure 2. Enterprise data workflows, pre-Hadoop

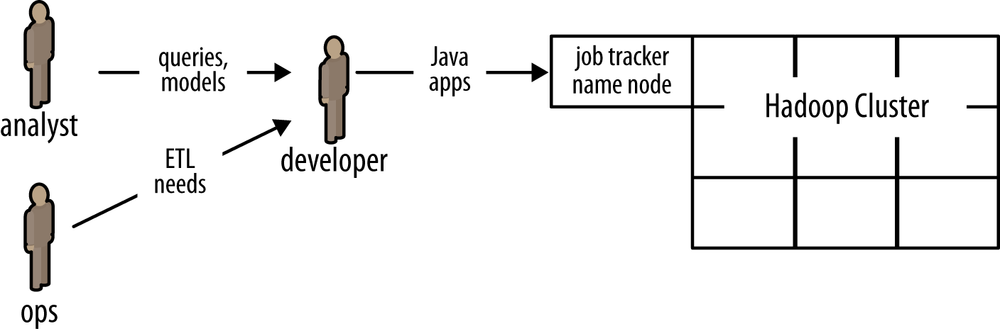

Circa late 1997 there was an inflection point, after which a handful of pioneering Internet companies such as Amazon [...] the developers with Hadoop expertise become a new kind of bottleneck for analysts and operations.

Enterprise adoption of Apache Hadoop, driven by huge savings and opportunities for new kinds of large-scale data apps, has disproportionately increased the need for experienced Hadoop programmers. There's been a big push to train current engineers and analysts and to recruit skilled talent. However, the skills required to write large Hadoop apps directly in Java are difficult to learn for most developers and far outside the norm of expectations for analysts. Consequently the approach of attempting to retrain current staff does not scale very well. Meanwhile, companies are finding that the process of hiring expert Hadoop programmers is somewhere in the range of difficult to impossible. That creates a dilemma for staffing, as Enterprise rushes to embrace Big Data and Apache Hadoop: SQL analysts are available and relatively less expensive than Hadoop experts.

Figure 3. Enterprise data workflows, with Hadoop

An alternative approach is to use an abstraction layer on top of Hadoop, one that fits well with existing Java practices. Several leading IT publications have described Cascading in those terms, for example:

Management can really go out and build a team around folks that are already very experienced with Java. Switching over to this is really a very short exercise.

Thor Olavsrud, CIO magazine (2012)

Cascading recently added support for ANSI SQL through a library called Lingual. Another library called Pattern supports the Predictive Model Markup Language (PMML), which is used by most major analytics and BI platforms to export data mining models. Through these extensions, Cascading provides greater access to Hadoop resources for the more traditional analysts as well as Java developers. Meanwhile, other projects atop Cascading, such as Scalding (based on Scala) and Cascalog (based on Clojure), are extending highly sophisticated software engineering practices to Big Data. For example, Cascalog provides features for test-driven development (TDD) of Enterprise data workflows.