The Dark Secret of Search Engines

Find out what search engines are hiding from you

FERNANDO UILHERME BARBOSA DE AZEVEDO

Table of Contents

About the Author

Introduction

Chapter 1 - Understanding Search Engines

Chapter 2 - A Basic History of Search Engines

Chapter 3 - Search Engines in Numbers

Chapter 4 - A Peek into Search Engine Manipulation

Chapter 5 - Underhanded Tactics for On-Page SEO

Chapter 6 - Off-Page SEO and the Value of Backlinks

Chapter 7 - The Problem with Backlinks

Chapter 8 - Search Engines using Artificial Intelligence

Chapter 9 - The Dark World of Internet Marketing

Final Words

About the Author

Fernando Uilherme Barbosa de Azevedo is an electronic, electrical and industrial engineer who graduated from Pontifícia Universidade Católica of Rio de Janeiro. He holds an MBA from Fundação Getúlio Vargas. He has been a programming instructor at Pontifícia Universidade Católica of Rio de Janeiro for 7 years.

He published his first book, Macros for Excel hands on, with the publisher Campus/Elsevier at age 27. The book is still sold in Brazil and Portugal.

His first startup won a prize from FINEP, a Brazilian federal institution.

After moving to the United States in 2014, Fernando studied Web Development and Internet Technologies at the University of California, Santa Cruz, Silicon Valley Extension and also completed the Innovation and Entrepreneurship Certification from Stanford University.

Fernando has been featured many times in the news media and on TV. He has been interviewed as a specialist in his field by Forbes, The Entrepreneur, El Nuevo Herald and many major Brazilian media companies.

Today, Fernando runs two internet marketing companies in the United States, with clients in many countries. The companies offer services such as internet marketing, SEO, online reputation management, penetration testing, systems audits, e-commerce, apps and other internet-related services.

He is also a web development instructor at IronHack and a weekly speaker on Radio Gazeta.

Fernando considers himself an ethical hacker and believes that the internet should be a safer place. By speaking out against the unethical online practices that are still common today, he hopes that our leaders and lawmakers will become aware of these threats and help create laws for a safer world.

This book is dedicated to Fernando's sister, Christiane Monnerat.

This book is part of a series of five books about Internet technologies and how the internet works. If you like this book, please check out the author's other books.

Introduction

Search engines are the gateway to the internet. Google, Yahoo, Bing, DuckDuckGo and others help millions of people every minute.

These companies have grown so big, and their indexing algorithms so complex, that they have created millions of new jobs in SEO (Search Engine Optimization) agencies, digital marketing and e-commerce.

Search engines are no longer the fast innovators they used to be. They have become slow, huge enterprises, following the path of IBM and Microsoft.

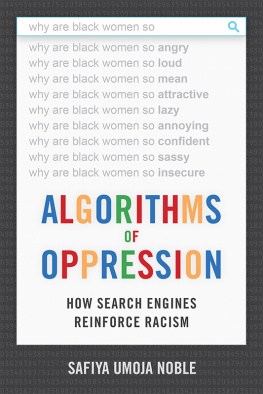

Search engines want to stay relevant so that you keep using them and they keep making money from ads. But is the most relevant article for the vast majority really the most important article for you? Are you really interested in the over-the-top, tabloid-style link-bait headlines that most people click? If everything revolves around keeping the ads running, is everything we search for trying to sell us something? Is everything we search for trying to understand our values so it can better influence us to buy?

Meanwhile, outside the search engines, SEO specialists work to manipulate where websites rank in search results.

Digital marketers, too, carefully craft each piece of content for you, sometimes presenting evidence for both sides of an argument.

Privacy was not a concern until 2018, and companies sought to increase their sales by collecting our information and categorizing our social and economic profiles based on our search patterns and the social media information we give out.

Search engines are evolving into something bizarre. There are arguments for everything we search for. There are ads for everything we search for. SEO specialists manipulate search engines in ways that search engines can't punish. And even reputable websites are not free from bias.

Chapter 1 - Understanding Search Engines

To understand just how sophisticated a modern search engine really is, imagine that you need to do a book report on 644 million different books. They vary in size and in the number of pages they have. They also differ in formatting and in the language in which they're written. Some are written for entertainment, while others contain functional information. Each one has a unique topic and each author has a unique take. Also imagine that the contents of these books change from day to day: when a new topic arises, a page is added to a book, and you need to add that new page to your report. This is similar to what search engines do with all the content on the internet.

It would be impossible for one person to read that many books. Before the digital age, to find a book in a library, a person first had to go to the card catalogue, look up the book's card, note its location, and go to that part of the library. Even if all the books were arranged and organized properly, it would still take at least 10 minutes to locate the book and bring it to the reading area.

A search engine helps us with this problem. It saves us thousands of hours when it comes to searching for information. It does this by indexing all the information into a database. To present users with information that's most relevant to their needs, the search engine must search far and wide to collect data from all searchable websites.
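To make the idea of indexing more concrete, here is a minimal sketch of an inverted index, a classic data structure for this job that maps each word to the pages containing it, so a query can be answered without rereading every page. It is an illustration under simplifying assumptions (a handful of in-memory pages, naive word splitting, made-up example.com URLs), not how Google or any other search engine actually stores its index; the function names are hypothetical.

```python
import re
from collections import defaultdict


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into simple alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_inverted_index(pages: dict[str, str]) -> dict[str, set[str]]:
    """Map every word to the set of page URLs that contain it."""
    index = defaultdict(set)
    for url, text in pages.items():
        for word in tokenize(text):
            index[word].add(url)
    return dict(index)


def lookup(index: dict[str, set[str]], query: str) -> set[str]:
    """Return the pages that contain every word of the query."""
    words = tokenize(query)
    if not words:
        return set()
    # Copy the first word's page set so the index itself is never modified.
    results = set(index.get(words[0], set()))
    for word in words[1:]:
        results &= index.get(word, set())
    return results


if __name__ == "__main__":
    # Hypothetical pages standing in for crawled content.
    pages = {
        "https://example.com/excel": "Macros for Excel, hands on",
        "https://example.com/seo": "What is SEO? Search engine optimization basics",
        "https://example.com/engines": "How a search engine builds its index",
    }
    index = build_inverted_index(pages)
    print(sorted(lookup(index, "search engine")))
    # ['https://example.com/engines', 'https://example.com/seo']
```

The index is built once, after crawling; answering a query is then just a few set intersections instead of a scan of every page, which is the same saving the library card catalogue offered.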

While it may seem simple, it isn't. A search engine like Google, which relies on an indexing algorithm, uses computer programs to explore the internet. These programs are called crawler bots, Googlebot (in Google's case), or simply crawlers. They do their job by visiting all the websites they can reach, hopping from one website to another through links. Along the way, they collect relevant data about each website, its content, its important features and more. The search engine then stores this data in its index, a database from which it can pull up specific webpages in the future when someone needs them.
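The link-hopping behaviour described above can be sketched as a breadth-first crawl: visit a page, record some data about it, then queue every page it links to that has not been seen yet. To keep the example self-contained, it assumes a hypothetical `fetch` function that returns a page's HTML (or `None`), backed here by a small dictionary instead of real network requests, and it records only each page's title and number of links. Real crawlers such as Googlebot also respect robots.txt, schedule revisits and handle vastly more data; none of that is modelled here, and the class and function names are hypothetical.

```python
from collections import deque
from html.parser import HTMLParser
from typing import Callable, Optional
from urllib.parse import urljoin


class PageParser(HTMLParser):
    """Collect the href of every <a> tag and the text inside <title>."""

    def __init__(self) -> None:
        super().__init__()
        self.links: list[str] = []
        self.title = ""
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self.links.extend(value for name, value in attrs if name == "href" and value)
        elif tag == "title":
            self._in_title = True

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def crawl(start_url: str,
          fetch: Callable[[str], Optional[str]],
          max_pages: int = 100) -> dict[str, dict]:
    """Breadth-first crawl: visit a page, store its data, then queue its links."""
    database: dict[str, dict] = {}  # stands in for the search engine's index
    queue = deque([start_url])
    seen = {start_url}  # never queue the same URL twice
    while queue and len(database) < max_pages:
        url = queue.popleft()
        html = fetch(url)
        if html is None:  # unreachable page: skip it
            continue
        parser = PageParser()
        parser.feed(html)
        parser.close()
        database[url] = {"title": parser.title.strip(), "links": len(parser.links)}
        for href in parser.links:
            absolute = urljoin(url, href)  # resolve relative links like "/about"
            if absolute not in seen:
                seen.add(absolute)
                queue.append(absolute)
    return database


if __name__ == "__main__":
    # A hypothetical three-page "web" kept in memory instead of real HTTP requests.
    toy_web = {
        "https://a.example/": '<title>Home</title><a href="/about">About</a>'
                              '<a href="https://b.example/">Site B</a>',
        "https://a.example/about": '<title>About us</title><a href="/">Home</a>',
        "https://b.example/": "<title>Site B</title>",
    }
    for url, data in crawl("https://a.example/", toy_web.get).items():
        print(url, data)
```

Starting from the home page, the crawler reaches the "about" page and the second site through links alone; the link back to the home page is skipped because it was already seen, which is what keeps a crawler from looping forever.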

In a nutshell, these crawlers read billions of webpages on a regular basis and keep the index updated. As of the writing of this book, there are around 644 million active websites on the internet, and some of them have thousands of pages. Search engines organize all of this in their indexes and serve relevant content according to our search terms. With this in mind, we can break down the tasks of a search engine into three important steps:

Crawling

Crawling is the process of sending out bots to explore the internet and collect data from each website they encounter. In the analogy from earlier in this chapter, it is like having thousands of robots read thousands of books at a time, collecting information from every page of every book.

Google, for example, encourages webmasters to optimize their websites according to Google's specifications. It provides webmasters with a free tool called Search Console, where they can submit a sitemap. The sitemap is like a blueprint of the website's structure: it indicates which webpages Google's robots should crawl. Ideally, all the pages in the sitemap should also be interconnected by links.
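A sitemap is usually an XML file following the sitemaps.org protocol: a `<urlset>` element containing one `<url>` entry per page, each with a `<loc>` (the page address) and an optional `<lastmod>` date. The sketch below, using only Python's standard library, builds such a file and then reads the URLs back the way a crawler might. The helper names and example.com URLs are hypothetical, and submitting the file through Search Console is done in its web interface, which is not shown here.

```python
import xml.etree.ElementTree as ET
from typing import Optional

# Namespace required by the sitemaps.org protocol (version 0.9).
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: list[tuple[str, Optional[str]]]) -> str:
    """Build sitemap XML from (url, lastmod) pairs; lastmod may be None."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = loc
        if lastmod:  # optional date such as "2019-01-15"
            ET.SubElement(entry, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


def read_sitemap(xml_text: str) -> list[str]:
    """Return the page URLs listed in a sitemap, in document order."""
    root = ET.fromstring(xml_text)
    # The default namespace makes each tag "{namespace}loc" after parsing.
    return [loc.text for loc in root.iter(f"{{{SITEMAP_NS}}}loc")]


if __name__ == "__main__":
    sitemap = build_sitemap([
        ("https://example.com/", "2019-01-15"),
        ("https://example.com/about", None),
    ])
    print(sitemap)
    print(read_sitemap(sitemap))
```

Building the XML with a library rather than by string concatenation means characters such as `&` in a URL are escaped correctly, which a hand-written sitemap can easily get wrong.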

By registering their website with Search Console, webmasters are basically telling Google that they have a new online property and that they want its webpages to appear on Google's search result pages. Google will eventually crawl the website and add its content to its index.
