Shaders as Light Simulations

To explain the first definition, look at the three images in Figure 1-1. They show three surfaces made of different materials. Your brain can instantly recognize what material an object is made of just by looking at it. That happens because every material's pattern of interaction with light is characteristic and recognizable to the human brain. Lighting shaders simulate that interaction with light, either by taking advantage of what we know about the physics of light or through a lot of trial, error, and effort from artists.

Figure 1-1.

Skin, metal, wood

In the physical world, surfaces are made of atoms, and light is both a wave and a particle. The interaction between light, the surface, and our eyes determines what a surface will look like. When light coming from a certain direction hits a surface, it can be absorbed, reflected (in another direction), refracted (in a slightly different direction), or scattered (in many different directions). The behavior of light rays when they come in contact with a surface is what creates the specific look of a material. See Figure 1-2.

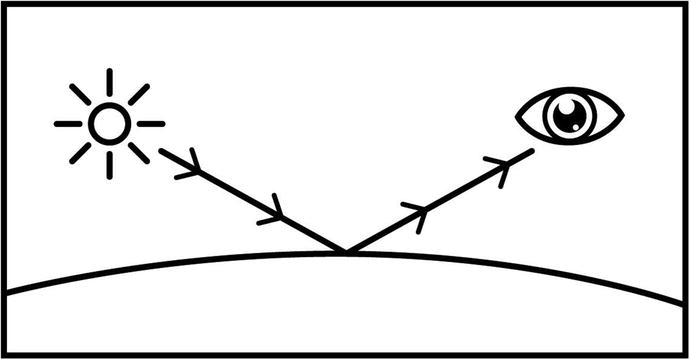

Figure 1-2.

A ray of light, hitting a surface and bouncing off in the direction of our eyes

Even if a surface looks smooth at the macroscopic level, like skin does, at the microscopic level, it can have micro-facets that scatter light in different directions.
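Of the behaviors listed above, ideal mirror reflection is the easiest to write down, so here is a minimal sketch of it in Python. It assumes the standard reflection formula r = d - 2(d·n)n with a unit-length surface normal; the function names `dot` and `reflect` are illustrative choices, not part of any particular engine. Calling it with differently tilted normals gives a rough feel for why micro-facets send light in different directions.

```python
def dot(a, b):
    """Dot product of two 3D vectors given as tuples."""
    return sum(x * y for x, y in zip(a, b))


def reflect(incoming, normal):
    """Mirror-reflect an incoming direction about a unit-length normal.

    Uses r = d - 2 (d . n) n. The normal is assumed to be normalized.
    """
    d_dot_n = dot(incoming, normal)
    return tuple(d - 2.0 * d_dot_n * n for d, n in zip(incoming, normal))


# A ray travelling down and to the right hits a floor whose normal points up.
flat = reflect((1.0, -1.0, 0.0), (0.0, 1.0, 0.0))  # (1.0, 1.0, 0.0)

# The same kind of ray hitting a tilted micro-facet leaves in another direction.
tilted = reflect((0.0, -1.0, 0.0), (0.6, 0.8, 0.0))
```

Real materials combine reflection with absorption, refraction, and scattering, so this covers only one of the four cases.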

Computers don't have the computational power needed to simulate reality to that level of detail. If we had to simulate the whole thing, atoms and all, it would take years to render anything. In most renderers, surfaces are represented as 3D models, which are basically points in 3D space (vertices) that are grouped into triangles, which are in turn grouped to form a 3D shape. Even a simple model can have thousands of vertices.
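As a rough illustration of that representation, the sketch below stores a flat square as four vertices and two triangles that index into them. The layout (a vertex list plus index triples) is a common convention, but the names `vertices`, `triangles`, and `triangle_positions` are assumptions made for this example.

```python
# Each vertex is an (x, y, z) position in 3D space.
vertices = [
    (0.0, 0.0, 0.0),  # 0: bottom-left
    (1.0, 0.0, 0.0),  # 1: bottom-right
    (1.0, 1.0, 0.0),  # 2: top-right
    (0.0, 1.0, 0.0),  # 3: top-left
]

# Each triangle is three indices into the vertex list.
# Two triangles sharing an edge form the square.
triangles = [
    (0, 1, 2),
    (0, 2, 3),
]


def triangle_positions(mesh_vertices, mesh_triangles):
    """Expand index triples into triples of actual 3D positions."""
    return [tuple(mesh_vertices[i] for i in tri) for tri in mesh_triangles]


corners = triangle_positions(vertices, triangles)
```

Sharing vertices through indices means a position used by several triangles is stored only once, which matters when a model has thousands of vertices.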

Our 3D scene, composed of models, textures, and shaders, is rendered to a 2D image, composed of pixels. This is done by projecting the vertex positions to the correct 2D positions in the final image, applying any textures to their respective surfaces, and executing the shaders for each vertex of the 3D models and each potential pixel of the final image. See Figure 1-3.

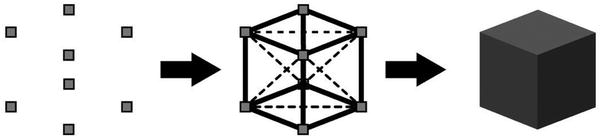

Figure 1-3.

From points, to triangles, to the rendered, shaded model
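The projection step can be sketched with a simple pinhole-camera model. This is a simplified illustration, not how any specific engine does it: it assumes the camera sits at the origin looking down the positive z axis, ignores aspect ratio and clipping, and uses a hypothetical `project_vertex` function with made-up default image dimensions.

```python
def project_vertex(vertex, focal_length=1.0, width=640, height=480):
    """Project a camera-space point (x, y, z) to 2D pixel coordinates.

    Assumes the camera is at the origin looking down +z, so z must be > 0.
    """
    x, y, z = vertex
    if z <= 0:
        raise ValueError("vertex must be in front of the camera (z > 0)")
    # Perspective divide: points farther away move toward the image center.
    ndc_x = focal_length * x / z
    ndc_y = focal_length * y / z
    # Map the [-1, 1] range to pixels; flip y because image rows grow downward.
    pixel_x = (ndc_x + 1.0) * 0.5 * width
    pixel_y = (1.0 - ndc_y) * 0.5 * height
    return pixel_x, pixel_y


center = project_vertex((0.0, 0.0, 5.0))  # (320.0, 240.0)
near = project_vertex((1.0, 1.0, 2.0))    # (480.0, 120.0)
far = project_vertex((1.0, 1.0, 4.0))     # (400.0, 180.0)
```

The same point at twice the distance lands closer to the image center, which is the foreshortening effect that perspective drawing relies on.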

Regardless of how far you are willing to go in detail when modeling, it's impossible to match the level of detail in the real world. We can use our mathematical knowledge of how lighting works, within a shader, to make our 3D models look as realistic as possible, compensating for not being able to simulate surfaces at a higher level of detail. We can also use it to render our scenes fast enough that our game can draw a respectable number of frames per second. Frame rates appropriate for games range from 30 fps to 60 fps, with more than 60 fps being needed for virtual reality games.
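Those frame rates translate directly into a time budget for each frame, which is simple arithmetic worth seeing once. The helper name `frame_budget_ms` is made up for this sketch, and the 90 fps entry is only an illustrative value for "more than 60 fps", not a figure taken from the text.

```python
def frame_budget_ms(frames_per_second):
    """Return how many milliseconds are available to render one frame."""
    return 1000.0 / frames_per_second


# 30 and 60 fps come from the text; 90 fps is an assumed VR example.
budgets = {fps: frame_budget_ms(fps) for fps in (30, 60, 90)}
# Roughly 33.3 ms at 30 fps, 16.7 ms at 60 fps, and 11.1 ms at 90 fps.
```

Everything the renderer does for a frame, shaders included, has to fit within that budget.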

This is what physically based rendering is all about. It's basically a catalog of various types of lighting behaviors in surfaces and the mathematical models we use to approximate them.

Rendering as Perspective Drawing

Rendering is conceptually (and mathematically) very similar to the painter's process of drawing from life onto a canvas, using perspective.

The techniques of perspective drawing originated in the Italian Renaissance, more than 500 years ago, even if the mathematical foundations for them were laid much earlier, back in Euclid's time. In our case, the canvas is our final image, the scene and 3D models are reality, and the painter is our renderer.

In computer graphics, there are many ways to render a scene, some more computationally expensive than others. The fast type (rasterizer-based) is what real-time rendering, games included, has been using. The slow type (raytracing, etc.) is what 3D animated movies generally use, where rendering times can reach hours per frame.

The rendering process for the fast type of renderer can be simplified like so: first, the shapes of the models in the scene are projected into the final 2D image; let's call it the "sketching the outline" phase, from our metaphorical painter's point of view. Then the pixels contained within each outline are filled, using the lighting calculations implemented in the shaders; let's call that the "painting" phase.
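The "painting" phase can be sketched as a toy software rasterizer: given a triangle that has already been projected to 2D, it visits every pixel, tests whether the pixel center falls inside the outline, and calls a shading function for the pixels that do. This is a deliberately naive illustration using the common edge-function test; GPUs do this work in dedicated hardware, and the names `edge`, `rasterize`, and `flat_red` are assumptions for this example.

```python
def edge(a, b, p):
    """Signed area test: tells which side of the edge a->b the point p is on."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])


def rasterize(triangle, width, height, shade):
    """Fill the pixels covered by a 2D triangle, calling shade(x, y) for each."""
    a, b, c = triangle
    image = [[None] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            p = (x + 0.5, y + 0.5)  # sample at the pixel center
            w0, w1, w2 = edge(a, b, p), edge(b, c, p), edge(c, a, p)
            # Inside if all three tests agree in sign (either winding order).
            inside = (w0 >= 0 and w1 >= 0 and w2 >= 0) or (
                w0 <= 0 and w1 <= 0 and w2 <= 0
            )
            if inside:
                image[y][x] = shade(x, y)
    return image


def flat_red(x, y):
    """Stand-in for a shader: returns the same RGB color for every pixel."""
    return (255, 0, 0)


triangle_2d = ((1.0, 1.0), (7.0, 1.0), (1.0, 7.0))
image = rasterize(triangle_2d, 8, 8, flat_red)
```

Swapping `flat_red` for a function that computes lighting changes how the surface looks without touching the rasterizer itself.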

You could render an image without using shaders, and we used to do so. Before the programmable graphics pipeline, rendering was carried out with API calls (to APIs such as OpenGL and Direct3D). To achieve better speed, the APIs would give you pre-made functions, to which you would pass arguments. They were implemented in hardware, so there was no way to modify them. They were called fixed-function rendering pipelines.

To make renderers more flexible, the programmable graphics pipeline was introduced. With it, you could write small programs, called shaders, that would execute on the GPU in place of much of the fixed-function functionality.