Session Summary

The Cognitive Robotics and Learning mini symposium was held on the third day of the conference and consisted of eight contributions. Three were presented as 15-minute talks, and five as 5-minute spotlight talks followed by a poster session. The papers address learning from human observation, novel representations linking perception and action, and machine learning methods for statistical generalization, clustering, and classification. In keeping with the spirit of ISRR, the papers focused on open research questions, challenges, and lessons learned in their respective areas.

In Chapter , Bohren and Hager presented a method to jointly recognize, segment, and estimate the 3D position of objects. The goal was to integrate popular computer vision methods for object segmentation with the constrained rigid object registration required for robotic manipulation, thereby connecting mid-level vision with perception in 3D space. This was achieved by combining efficient convolution operations, computed with integral images, with RANSAC-based pose estimation. Comprehensive tests were performed on RGB-D data of several industrial objects.
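Integral images make such convolutions cheap because any axis-aligned rectangular sum costs four table lookups, regardless of the rectangle's size. The following pure-Python sketch illustrates the idea with a box (averaging) filter. It is not the chapter's implementation, and the function names are illustrative.

```python
def integral_image(img):
    """Summed-area table S with S[r][c] = sum of img[0..r-1][0..c-1].

    S carries one extra row and column of zeros, so box sums need no bounds checks.
    """
    rows, cols = len(img), len(img[0])
    s = [[0] * (cols + 1) for _ in range(rows + 1)]
    for r in range(rows):
        row_sum = 0  # running sum of img[r][0..c]
        for c in range(cols):
            row_sum += img[r][c]
            s[r + 1][c + 1] = s[r][c + 1] + row_sum
    return s


def box_sum(s, r0, c0, r1, c1):
    """Sum over rows r0..r1-1 and columns c0..c1-1 in O(1) via four lookups."""
    return s[r1][c1] - s[r0][c1] - s[r1][c0] + s[r0][c0]


def box_filter(img, k):
    """Convolve img with a k x k averaging kernel over the 'valid' region."""
    s = integral_image(img)
    rows, cols = len(img), len(img[0])
    return [[box_sum(s, r, c, r + k, c + k) / (k * k)
             for c in range(cols - k + 1)]
            for r in range(rows - k + 1)]
```

For example, `box_filter([[1, 2, 3], [4, 5, 6], [7, 8, 9]], 2)` averages each 2 x 2 window. The cost per output pixel stays constant as `k` grows, which is what makes integral images attractive for dense scanning.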

Valada, Spinello, and Burgard introduced a new method for terrain classification in Chapter . Rather than relying on popular vision-based classification methods, they formulated the problem using acoustic signals obtained from inexpensive microphones. They then constructed a deep classifier based on convolutional networks, which learns features automatically from spectrograms to classify the terrain. In experiments in which the robot navigated outdoors, the method achieved accuracy exceeding 99% in recognizing grass, paving, and carpet, among other surfaces. This demonstrated the potential of the method to deal with highly noisy data.
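The classifier described above takes spectrograms as input. The following sketch illustrates only that input representation, not the authors' preprocessing. The frame length, hop size, and Hann window are assumptions. It computes a magnitude spectrogram with the standard library alone.

```python
import cmath
import math


def hann(n):
    """Periodic Hann window of length n."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / n) for i in range(n)]


def spectrogram(signal, frame_len, hop):
    """Return one list of DFT magnitudes (bins 0..frame_len // 2) per frame.

    A naive O(N^2) DFT keeps the sketch dependency-free; a real pipeline
    would use an FFT and usually apply log or mel scaling on top.
    """
    window = hann(frame_len)
    frames = []
    for start in range(0, len(signal) - frame_len + 1, hop):
        # Window the current frame to reduce spectral leakage.
        x = [signal[start + i] * window[i] for i in range(frame_len)]
        mags = []
        for k in range(frame_len // 2 + 1):
            acc = sum(x[n] * cmath.exp(-2j * math.pi * k * n / frame_len)
                      for n in range(frame_len))
            mags.append(abs(acc))
        frames.append(mags)
    return frames


# A pure tone completing 4 cycles per 32-sample frame peaks in bin 4 of every frame.
tone = [math.sin(2 * math.pi * 4 * n / 32) for n in range(128)]
spec = spectrogram(tone, frame_len=32, hop=16)
```

Each row of `spec` is one time slice. Stacking the rows yields the 2D time-frequency image that a convolutional network can treat much like a grayscale picture.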

Learning from demonstration was an important topic in our mini symposium, with several papers directly addressing this problem. In Chapter , Kim, Kim, Dai, Kaelbling, and Lozano-Perez described a novel trajectory generator to address problems with variable and uncertain dynamics. The key insight was to devise an active learning method based on anomaly detection to better search the state space and guide the acquisition of more training data. Experiments were performed for an aircraft control problem and a manipulation task.

In Chapter , Englert and Toussaint proposed a framework based on constrained optimization, specifically quadratic programming, to execute manipulation tasks that involve contacts. The authors formulated the problem of learning parametrized cost functions from a series of demonstrations as a convex optimization problem. Once the cost function is determined, trajectories can be obtained by solving a nonlinear constrained problem. The authors successfully tested the approach on challenging tasks such as sliding objects and opening doors.

Oberlin and Tellex presented a system that automates the collection of data for novel objects, with the aim of making object detection, pose estimation, and grasping easier. The paper entitled shows how to identify the best grasp point by making multiple attempts to pick up an object and tracking successes and failures. This was formulated as an N-armed bandit problem and demonstrated on a stock Baxter robot with no additional sensors, achieving excellent results in detecting, classifying, and manipulating objects.
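In the N-armed bandit formulation, each candidate grasp is an arm, and a successful pick is the reward. The sketch below uses the standard UCB1 rule as a stand-in, because the chapter's exact bandit algorithm is not described here. The per-grasp success rates are purely hypothetical and only drive a simulation.

```python
import math
import random


def ucb1_select(successes, attempts, t):
    """Pick the grasp with the highest UCB1 score; untried grasps are tried first."""
    for i, n in enumerate(attempts):
        if n == 0:
            return i
    # Empirical success rate plus an exploration bonus that shrinks with more attempts.
    return max(range(len(attempts)),
               key=lambda i: successes[i] / attempts[i]
               + math.sqrt(2 * math.log(t) / attempts[i]))


def run_grasp_bandit(success_probs, n_trials, seed=0):
    """Simulate n_trials pick attempts against hypothetical per-grasp success rates."""
    rng = random.Random(seed)
    k = len(success_probs)
    successes, attempts = [0] * k, [0] * k
    for t in range(1, n_trials + 1):
        i = ucb1_select(successes, attempts, t)
        attempts[i] += 1
        if rng.random() < success_probs[i]:  # simulated pick-up outcome
            successes[i] += 1
    return successes, attempts


successes, attempts = run_grasp_bandit([0.2, 0.5, 0.9], n_trials=2000)
```

With these made-up rates, most attempts concentrate on the third grasp once its estimated success rate separates from the others. The exploration bonus keeps occasional attempts on the weaker grasps, so an early unlucky failure does not rule out a good grasp permanently.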

In Chapter , Krishnan and colleagues addressed the problem of segmenting a set of surgical trajectories by clustering transitions between linear dynamic regimes. The new method, named transition state clustering (TSC), applies hierarchical Dirichlet process Gaussian mixture models to the transition states from several demonstrations. The method automatically determines the number of segments through a series of merging and pruning steps. It achieves performance approaching that of human experts in segmenting needle-passing and suturing demonstrations.

In Chapter , Calinon discussed the generalization capability of task-parameterized Gaussian mixture models in robot learning from demonstration and their application to movement generation in robots with a high number of degrees of freedom. The paper shows that task parameters can be represented as affine transformations, which can be used with different statistical encoding strategies, including standard mixture models and subspace clustering. It also shows that the approach can handle task constraints in both task space and joint space, as well as constraint priorities. The approach was demonstrated on a series of problems in configuration and operational spaces, both in simulation and on a Baxter robot.
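The role of the affine task parameters can be sketched in a few lines. The sketch assumes a single Gaussian per task frame and 2D data, whereas the chapter handles full mixtures and higher dimensions. A frame j with parameters A_j, b_j maps a locally encoded Gaussian (mu_j, Sigma_j) to global coordinates as mu_j' = A_j mu_j + b_j and Sigma_j' = A_j Sigma_j A_j^T. The per-frame Gaussians are then fused by a product of Gaussians, the usual TP-GMM combination step. The helper names are illustrative.

```python
def matmul(a, b):
    """Product of two 2x2 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]


def matvec(a, v):
    """2x2 matrix times 2-vector."""
    return [a[0][0] * v[0] + a[0][1] * v[1], a[1][0] * v[0] + a[1][1] * v[1]]


def transpose(a):
    return [[a[0][0], a[1][0]], [a[0][1], a[1][1]]]


def inv(a):
    """Inverse of an invertible 2x2 matrix."""
    det = a[0][0] * a[1][1] - a[0][1] * a[1][0]
    return [[a[1][1] / det, -a[0][1] / det], [-a[1][0] / det, a[0][0] / det]]


def to_global(mu, sigma, A, b):
    """Map a Gaussian expressed in task frame (A, b) into the global frame."""
    mu_g = [m + off for m, off in zip(matvec(A, mu), b)]
    sigma_g = matmul(matmul(A, sigma), transpose(A))
    return mu_g, sigma_g


def product_of_gaussians(gaussians):
    """Precision-weighted fusion of (mu, sigma) pairs into a single Gaussian."""
    lam_sum = [[0.0, 0.0], [0.0, 0.0]]
    eta_sum = [0.0, 0.0]
    for mu, sigma in gaussians:
        lam = inv(sigma)  # precision matrix of this frame's prediction
        lam_sum = [[lam_sum[i][j] + lam[i][j] for j in range(2)] for i in range(2)]
        eta = matvec(lam, mu)
        eta_sum = [eta_sum[0] + eta[0], eta_sum[1] + eta[1]]
    sigma = inv(lam_sum)
    return matvec(sigma, eta_sum), sigma


# Two hypothetical frames: the identity, and a 90-degree rotation offset by (2, 0).
mu1, s1 = to_global([1.0, 0.0], [[1.0, 0.0], [0.0, 1.0]],
                    [[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0])
mu2, s2 = to_global([0.0, -1.0], [[1.0, 0.0], [0.0, 1.0]],
                    [[0.0, -1.0], [1.0, 0.0]], [2.0, 0.0])
mu, sigma = product_of_gaussians([(mu1, s1), (mu2, s2)])
```

Moving a frame only changes its (A, b), so the same local model adapts to new task situations. Frames with tighter local covariances pull the fused result more strongly toward their own prediction.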

In their paper on modeling objects as aspect transition graphs to support manipulation, Ku, Learned-Miller, and Grupen introduced an image-based object model that categorizes an object into a subset of aspects based on interactions rather than on visual appearance alone. The resulting aspect transition graph combines distinctive object views with information about how actions change the viewpoint or the state of the object. The authors also presented an image-based visual servoing algorithm that works with this object model. The object model and visual servoing algorithm are demonstrated on a tool-grasping task in the Robonaut 2 simulator.
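An aspect transition graph can be stored as a mapping from each aspect to the actions available there and the aspect that each action leads to. The sketch below uses made-up aspects and actions and is not the chapter's model, which grounds each aspect in image features. It shows one plausible use of the structure: searching for an action sequence that brings the object into a target aspect.

```python
from collections import deque

# Hypothetical aspect transition graph: aspect -> {action: resulting aspect}.
ATG = {
    "handle_left": {"rotate_cw": "handle_front"},
    "handle_front": {"rotate_ccw": "handle_left", "rotate_cw": "handle_right",
                     "grasp": "grasped"},
    "handle_right": {"rotate_ccw": "handle_front"},
    "grasped": {},
}


def plan_actions(graph, start, goal):
    """Breadth-first search for the shortest action sequence from start to goal.

    Returns the list of actions, or None if the goal aspect is unreachable.
    """
    frontier = deque([start])
    parent = {start: None}  # aspect -> (previous aspect, action taken)
    while frontier:
        aspect = frontier.popleft()
        if aspect == goal:
            actions = []
            while parent[aspect] is not None:
                prev, action = parent[aspect]
                actions.append(action)
                aspect = prev
            return actions[::-1]
        for action, nxt in graph[aspect].items():
            if nxt not in parent:
                parent[nxt] = (aspect, action)
                frontier.append(nxt)
    return None


plan = plan_actions(ATG, "handle_left", "grasped")  # ["rotate_cw", "grasp"]
```

Storing actions on the edges, rather than only view similarity, is what lets the graph answer "what should I do next" questions, not only "what am I looking at".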

Introduction

Despite the substantial progress made in computer vision for object recognition over the past few years, practical turn-key use of object recognition and localization for robotic manipulation in unstructured environments remains elusive. Traditional recognition methods have been optimized for large-scale object classification []. Unfortunately, such modifications are often impractical and sometimes even infeasible, for example, in manufacturing and assembly applications, robotic search-and-rescue, and any operation in hazardous or extreme environments.

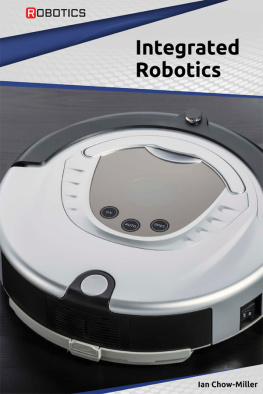

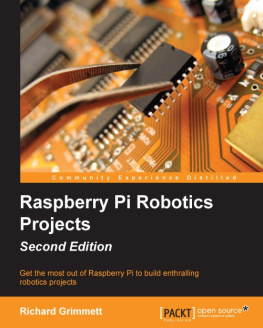

Fig. 1

In a robotic assembly scenario (a), for objects (b) that have little or no distinguishing texture, most object recognition systems would rely on the objects being well separated (c) and would fail when the objects are densely packed (d).

As with many industrial automation domains, we face an assembly task in which a robot is required to construct structures from rigid components which have no discriminative texture, as seen in Fig. ] manipulation in assembly.

Unfortunately, many object registration algorithms [d. Even if the application allowed for it, augmenting the parts with 2D planar markers is still insufficient for precise pose estimation due to the small size of the parts and the range at which they need to be observed.

While object recognition for these dense, textureless scenes is a challenging problem, we can take advantage of the inherent constraints imposed by physical environments to find a solution. In particular, many tasks only involve manipulation of a small set of known objects, the poses of these objects evolve continuously over time [], and often multiple views of the scene are available.