1. Basics of TensorFlow

This chapter covers the basics of TensorFlow, the deep learning framework. Deep learning does a wonderful job in pattern recognition, especially in the context of images, sound, speech, language, and time-series data. With the help of deep learning, you can classify, predict, cluster, and extract features. Fortunately, in November 2015, Google released TensorFlow, which has been used in most of Google's products, such as Google Search, spam detection, speech recognition, Google Assistant, Google Now, and Google Photos. The aim of this chapter is to explain the basic components of TensorFlow.

TensorFlow has the ability to perform partial subgraph computation, which allows distributed training by partitioning the neural network. In other words, TensorFlow allows both model parallelism and data parallelism. TensorFlow provides multiple APIs. The lowest-level API, TensorFlow Core, provides you with complete programming control.

Note the following important points regarding TensorFlow:

Its graph is a description of computations.

Its graph has nodes that are operations.

It executes computations in a given context of a session.

A graph must be launched in a session for any computation.

A session places the graph operations onto devices such as the CPU and GPU.

A session provides methods to execute the graph operations.

For installation, please go to https://www.tensorflow.org/install/.

I will begin with the following topics: tensors; the computational graph and session; and constants, placeholders, and variables.

Tensors

Before you jump into the TensorFlow library, let's get comfortable with the basic unit of data in TensorFlow. A tensor is a mathematical object and a generalization of scalars, vectors, and matrices. A tensor can be represented as a multidimensional array. A tensor of rank (order) 0 is nothing but a scalar. A vector/array is a tensor of rank 1, whereas a matrix is a tensor of rank 2. In short, a tensor can be considered to be an n-dimensional array.

Here are some examples of tensors:

A single number such as 5: This is a rank 0 tensor; it is a scalar with shape [].

[2., 5., 3.]: This is a rank 1 tensor; it is a vector with shape [3].

[[1., 2., 7.], [3., 5., 4.]]: This is a rank 2 tensor; it is a matrix with shape [2, 3].

[[[1., 2., 3.]], [[7., 8., 9.]]]: This is a rank 3 tensor with shape [2, 1, 3].
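The ranks and shapes listed above can be checked quickly. The following is a minimal sketch that uses NumPy arrays as stand-ins for tensors (an assumption made for brevity; TensorFlow tensors report rank and shape in the same way). The scalar value 5.0 is an arbitrary choice, and the other three literals are taken from the list above.

```python
import numpy as np

# The scalar 5.0 is an arbitrary example; the remaining literals
# come from the list of example tensors in the text.
examples = [
    5.0,
    [2., 5., 3.],
    [[1., 2., 7.], [3., 5., 4.]],
    [[[1., 2., 3.]], [[7., 8., 9.]]],
]

for value in examples:
    array = np.array(value)
    # ndim is the rank (number of axes); shape gives the size of each axis.
    print("rank:", array.ndim, "shape:", array.shape)
```

The rank is simply the length of the shape, so a scalar has an empty shape and each extra level of nesting adds one axis.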

Computational Graph and Session

TensorFlow Core programs consist of two main actions: building the computational graph and running the computational graph.

Let's understand how TensorFlow works.

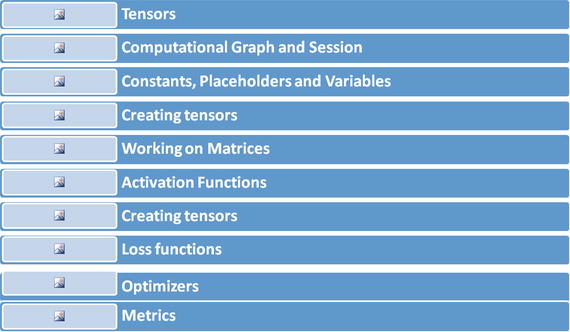

Its programs are usually structured into a construction phase and an execution phase.

The construction phase assembles a graph that has nodes (ops/operations) and edges (tensors).

The execution phase uses a session to execute ops (operations) in the graph.

The simplest operation is a constant that takes no inputs but passes outputs to other operations that do computation.

An example of an operation is multiplication (or addition or subtraction), which takes two matrices as input and passes a matrix as output.

The TensorFlow library has a default graph to which ops constructors add nodes.
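To make the construction phase concrete, here is a minimal sketch of op constructors adding nodes to the default graph. It assumes a TensorFlow 2.x installation, where the 1.x graph API used in this chapter is reached through `tensorflow.compat.v1`; with TensorFlow 1.x, a plain `import tensorflow as tf` would play the same role. The names `a`, `b`, and `product` are illustrative.

```python
import tensorflow.compat.v1 as tf

# Assumption: the 1.x graph API is accessed through compat.v1.
# A fresh graph is made the default inside the "with" block so that
# the example does not depend on any previously created nodes.
with tf.Graph().as_default():
    a = tf.constant([[1., 2.], [3., 4.]], name="a")   # constant op, no inputs
    b = tf.constant([[5., 6.], [7., 8.]], name="b")   # constant op, no inputs
    product = tf.matmul(a, b, name="product")         # op with two matrix inputs

    # The constructor calls above added nodes to the default graph.
    # Nothing has been computed yet; the graph only describes the work.
    op_types = [op.type for op in tf.get_default_graph().get_operations()]
    print(op_types)
```

Listing the operations shows that building the graph and evaluating it are separate steps: at this point `product` is only a symbolic handle to a node.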

So, the structure of TensorFlow programs has two phases: a construction phase that builds the graph and an execution phase that runs it in a session.

A computational graph is a series of TensorFlow operations arranged into a graph of nodes.

Let's look at TensorFlow versus NumPy. In NumPy, if you plan to multiply two matrices, you create the matrices and multiply them. But in TensorFlow, you first set up a graph (the default graph unless you create another one). Next, you create variables, placeholders, and constant values, and then you create the session and initialize the variables. Finally, you feed data to the placeholders and run the graph to invoke any action.
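The contrast can be sketched side by side. This example assumes the 1.x graph API accessed through `tensorflow.compat.v1`; the matrices `left` and `right` and the placeholder names `x` and `y` are illustrative choices, not taken from the text.

```python
import numpy as np
import tensorflow.compat.v1 as tf

left = np.array([[1., 2.], [3., 4.]], dtype=np.float32)
right = np.array([[5., 6.], [7., 8.]], dtype=np.float32)

# NumPy: the product is computed as soon as the expression is evaluated.
numpy_product = left @ right

# TensorFlow (graph mode): first describe the computation...
with tf.Graph().as_default():
    x = tf.placeholder(tf.float32, shape=[2, 2], name="x")
    y = tf.placeholder(tf.float32, shape=[2, 2], name="y")
    product = tf.matmul(x, y)

    # ...then create a session and feed data to the placeholders to run it.
    with tf.Session() as sess:
        tf_product = sess.run(product, feed_dict={x: left, y: right})

print(numpy_product)
print(tf_product)
```

Both approaches give the same matrix; the difference is that TensorFlow separates describing the multiplication from executing it.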

To actually evaluate the nodes, you must run the computational graph within a session.

A session encapsulates the control and state of the TensorFlow runtime.

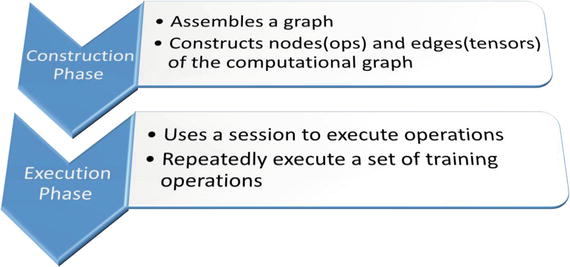

The following code creates a Session object:

sess = tf.Session()

The code then invokes the session's run method, which runs enough of the computational graph to evaluate node1 and node2.
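A minimal sketch of this step follows. It assumes that node1 and node2 are simple constant nodes (their definitions are not shown in the text, so the values 3.0 and 4.0 are arbitrary) and that the 1.x graph API is accessed through `tensorflow.compat.v1`.

```python
import tensorflow.compat.v1 as tf

with tf.Graph().as_default():
    # Construction phase: two constant nodes with arbitrarily chosen values.
    node1 = tf.constant(3.0, dtype=tf.float32)
    node2 = tf.constant(4.0)  # a Python float becomes a float32 tensor

    # Printing a node shows a symbolic tensor, not its numeric value.
    print(node1)

    # Execution phase: the session evaluates the requested nodes.
    sess = tf.Session()
    values = sess.run([node1, node2])
    print(values)
    sess.close()
```

Passing a list to run returns the evaluated values in the same order, so `values[0]` corresponds to node1 and `values[1]` to node2.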

The computation graph defines the computation. It neither computes anything nor holds any value. It is meant to define the operations mentioned in the code. A default graph is created for you, so you don't need to create one unless you want separate graphs for multiple purposes.

A session allows you to execute graphs or parts of graphs. It allocates resources (on one or more CPUs or GPUs) for the execution. It holds the actual values of intermediate results and variables.

The value of a variable, created in TensorFlow, is valid only within one session. If you try to query the value afterward in a second session, TensorFlow will raise an error because the variable is not initialized there.

To run any operation, you need to create a session for that graph. The session will also allocate memory to store the current value of the variable.

Here is the code to demonstrate:
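The following is a minimal sketch of this behavior rather than a definitive listing. It assumes the 1.x graph API accessed through `tensorflow.compat.v1`; the variable name `counter` and its initial value are hypothetical.

```python
import tensorflow.compat.v1 as tf

with tf.Graph().as_default():
    counter = tf.Variable(10, name="counter")  # hypothetical variable
    init = tf.global_variables_initializer()

    # First session: initialize the variable, then read its value.
    with tf.Session() as first:
        first.run(init)
        first_value = first.run(counter)
        print("first session:", first_value)

    # Second session: the variable was never initialized here,
    # so reading it raises an error (typically FailedPreconditionError).
    with tf.Session() as second:
        try:
            second.run(counter)
            failed = False
        except tf.errors.OpError:
            failed = True
        print("second session raised an error:", failed)
```

The graph is shared by both sessions, but each session holds its own variable values, which is why the initializer must be run again in the second one.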

Constants, Placeholders, and Variables

TensorFlow programs use a tensor data structure to represent all data; only tensors are passed between operations in the computation graph. You can think of a TensorFlow tensor as an n-dimensional array or list. A tensor has a static type, a rank, and a shape. A graph built only from constants always produces the same constant result, whereas variables maintain state across executions of the graph.

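Generally, you have to deal with many images in deep learning, so you need a way to supply the pixel values of each image while iterating over all the images. Placeholders serve this purpose: they are inputs whose values you feed in when the graph is run.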

To train the model, you need to be able to modify the graph to tune some objects, such as weights and biases. In short, variables enable you to add trainable parameters to a graph. They are constructed with a type and an initial value.
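The three kinds of nodes can be seen together in a small linear expression. This is a minimal sketch under stated assumptions: the 1.x graph API is accessed through `tensorflow.compat.v1`, and the names `scale`, `x`, `weight`, `bias`, and `output`, as well as all numeric values, are illustrative.

```python
import tensorflow.compat.v1 as tf

with tf.Graph().as_default():
    # Constant: its value is fixed when the graph is built.
    scale = tf.constant(2.0)
    # Placeholder: its value is supplied at run time through feed_dict.
    x = tf.placeholder(tf.float32, shape=[None], name="x")
    # Variables: constructed with a type and an initial value; they keep state.
    weight = tf.Variable(0.5, dtype=tf.float32, name="weight")
    bias = tf.Variable(-0.5, dtype=tf.float32, name="bias")

    output = scale * (weight * x + bias)

    with tf.Session() as sess:
        sess.run(tf.global_variables_initializer())
        before = sess.run(output, feed_dict={x: [1.0, 2.0, 3.0]})

        # Changing a variable persists across later run calls in this session.
        sess.run(tf.assign(bias, 0.5))
        after = sess.run(output, feed_dict={x: [1.0, 2.0, 3.0]})

print(before)
print(after)
```

The same fed data gives a different result after the assignment, which is the sense in which variables maintain state across executions of the graph; training adjusts variables in the same way, only automatically.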

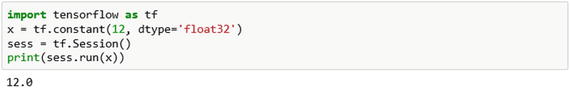

Let's create a constant in TensorFlow and print it.
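A minimal sketch consistent with the four steps explained next is shown here. It assumes the 1.x graph API accessed through `tensorflow.compat.v1` (with TensorFlow 1.x, a plain `import tensorflow as tf` would be used instead); the float32 dtype and the helper name `value` are assumptions.

```python
import tensorflow.compat.v1 as tf  # import the module and call it tf

with tf.Graph().as_default():
    # Construction phase: create a constant x with the numerical value 12.
    x = tf.constant(12, dtype=tf.float32)

    # Execution phase: create a session and run just x to get its value.
    sess = tf.Session()
    value = sess.run(x)
    print(value)
    sess.close()
```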

Here is the explanation of the previous code in simple terms:

Import the tensorflow module and call it tf.

Create a constant value (x) and assign it the numerical value 12.

Create a session for computing the values.

Run just the constant x and print out its current value.

The first two steps belong to the construction phase, and the last two steps belong to the execution phase. I will discuss the construction and execution phases of TensorFlow now.