Nobuoki Eshima - Statistical Data Analysis and Entropy

Statistical Data Analysis and Entropy by Nobuoki Eshima. Year: 2020. Publisher: Springer Nature.

- Book:Statistical Data Analysis and Entropy

- Author:Nobuoki Eshima

- Publisher:Springer Nature

- Year:2020

be a sample space;

be a sample space;  be an event; and let

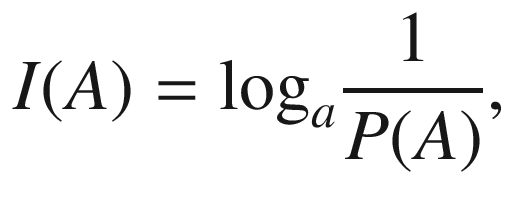

be an event; and let  the probability of event A. The information of event A is defined mathematically according to the probability, not the content itself. Smaller the probability of an event is, greater we feel its value. Based on our intuition, the mathematical definition of information [] is given by

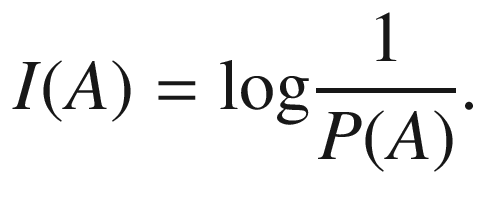

the probability of event A. The information of event A is defined mathematically according to the probability, not the content itself. Smaller the probability of an event is, greater we feel its value. Based on our intuition, the mathematical definition of information [] is given by , the information of A is defined by

, the information of A is defined by

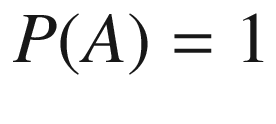

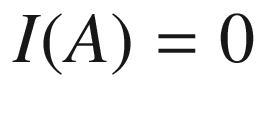
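As a minimal sketch of this definition, assuming it takes the standard form $I(A) = \log(1/P(A))$ with the natural logarithm, the information of an event can be computed from its probability. The function name `information` and the sample probabilities are illustrative choices, not taken from the book.

```python
import math


def information(p: float) -> float:
    """Information of an event with probability p, computed as log(1 / p).

    Assumption: natural logarithm; another base only rescales the values.
    """
    if not 0.0 < p <= 1.0:
        raise ValueError("probability must satisfy 0 < p <= 1")
    return math.log(1.0 / p)


# A certain event carries no information; rarer events carry more.
for p in (1.0, 0.5, 0.01):  # hypothetical probabilities
    print(p, information(p))
```

The guard rejects `p = 0` because the logarithm of `1 / p` is undefined there, which matches the restriction to events of positive probability.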

, i.e., event A always occurs, then

, i.e., event A always occurs, then  and it implies that event A has no information. The information measure

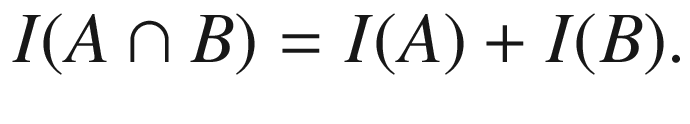

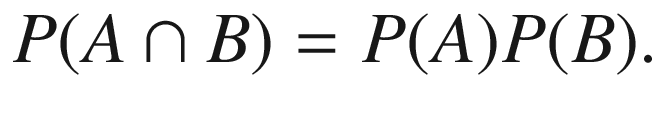

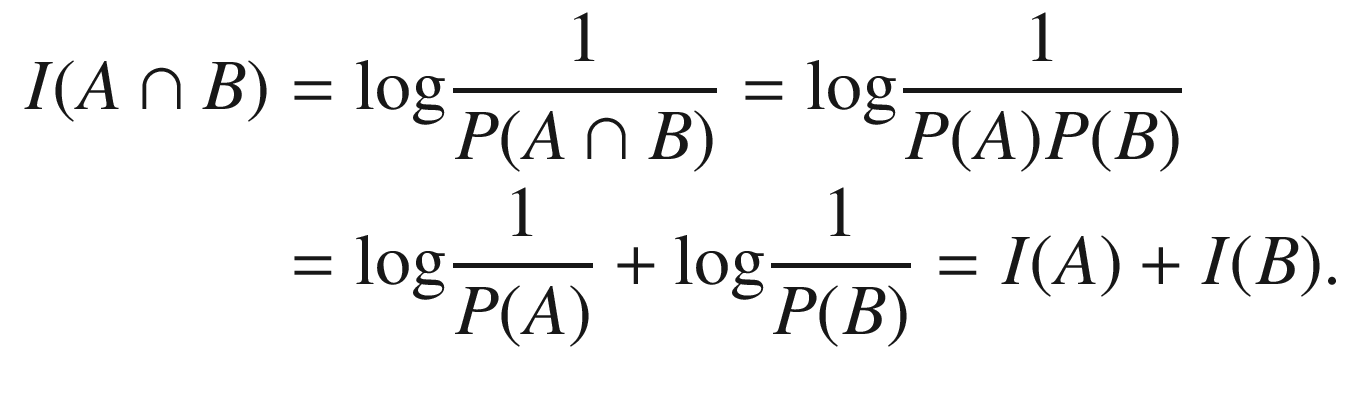

and it implies that event A has no information. The information measure  has the following properties:

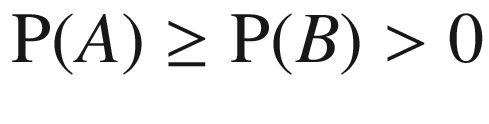

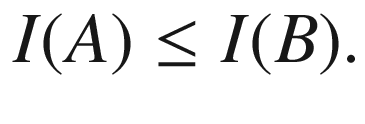

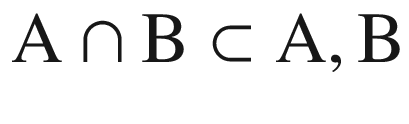

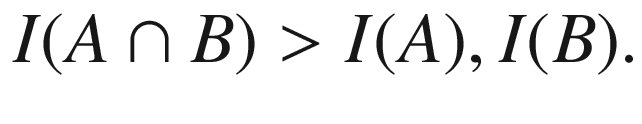

has the following properties:  , the following inequality holds:

, the following inequality holds:
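The inequality can be checked numerically. The sketch below assumes the information measure is $\log(1/p)$ with the natural logarithm and uses a hypothetical list of increasing probabilities; it verifies that the information never increases as the probability grows.

```python
import math


def information(p: float) -> float:
    """Information log(1 / p) of an event with probability p (natural log assumed)."""
    return math.log(1.0 / p)


# Hypothetical probabilities, sorted in increasing order.
probs = [0.01, 0.1, 0.25, 0.5, 1.0]
infos = [information(p) for p in probs]

# A larger probability should never yield more information.
is_monotone = all(a >= b for a, b in zip(infos, infos[1:]))
print(is_monotone)
```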

, corresponding to (), we have

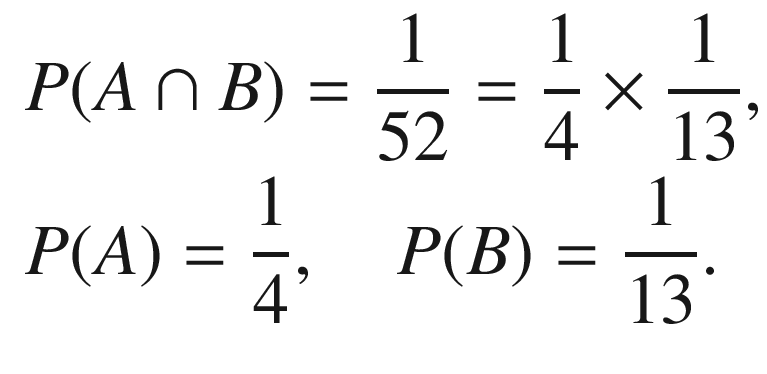

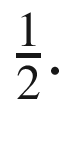

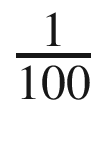

, if there is no information about the number. In this case, the loss of the information about the right phone number is

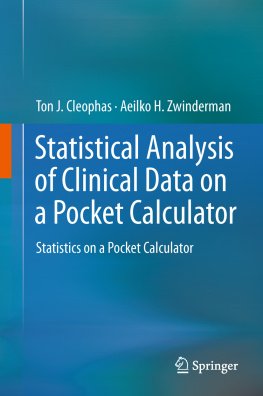

, if there is no information about the number. In this case, the loss of the information about the right phone number is  In general, the loss of the information is defined as follows:

In general, the loss of the information is defined as follows: Then, the loss of information concerning event A by using or knowing event B for A is defined by

Then, the loss of information concerning event A by using or knowing event B for A is defined by