PYTORCH BASICS FOR ABSOLUTE BEGINNERS

BY TAM SEL

PYTORCH INTRO

The PyTorch Tutorial is intended for both beginners and experts.

To follow this tutorial, you should have a clear understanding of Python.

This tutorial discusses the fundamentals of PyTorch, as well as how deep neural networks are implemented and used in deep learning projects.

Obstacles

This tutorial is written so that you can follow it step by step without running into difficulties.

PyTorch is an open-source machine learning library for Python, based on the Torch library. It is a deep learning framework developed primarily by Facebook's AI Research lab, and it is used for applications such as computer vision and natural language processing.

The following are the high-level features offered by PyTorch:

- Tensor computing (similar to NumPy) with strong acceleration on graphics processing units (GPUs).

- Deep neural networks built on a tape-based automatic differentiation (autograd) system.
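Both features can be seen in a few lines. The sketch below is a minimal illustration, not a recipe: it moves work to a GPU only if one is available, and uses autograd to differentiate the function y = x² + 2x, which is chosen purely as an example.

```python
import torch

# Use the GPU if one is available, otherwise fall back to the CPU.
device = "cuda" if torch.cuda.is_available() else "cpu"

# requires_grad=True tells autograd to record operations involving x.
x = torch.tensor(3.0, requires_grad=True, device=device)

# y = x^2 + 2x; each operation is recorded on the "tape" as it runs.
y = x ** 2 + 2 * x

# Replay the tape backwards to compute dy/dx = 2x + 2.
y.backward()

print(x.grad)  # dy/dx at x = 3 is 2*3 + 2 = 8
```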

PyTorch was designed with flexibility and speed in mind for implementing and building deep neural networks. Because it is a machine learning library for the Python programming language, it is simple to install, run, and understand. PyTorch is Pythonic (it follows commonly accepted Python idioms rather than requiring you to write Java or C++ code), which makes it easy to construct a neural network model.
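As an illustration of how Pythonic model construction looks, here is a minimal sketch of a small network written as an ordinary Python class. The class name TinyNet and the layer sizes are hypothetical, chosen only for this example; nn.Module, nn.Sequential, nn.Linear and nn.ReLU are standard PyTorch building blocks.

```python
import torch
from torch import nn


class TinyNet(nn.Module):
    """A hypothetical two-layer network, used only for illustration."""

    def __init__(self, in_features: int = 4, hidden: int = 8, out_features: int = 2):
        super().__init__()
        # Layers are plain Python attributes; PyTorch tracks their parameters.
        self.layers = nn.Sequential(
            nn.Linear(in_features, hidden),
            nn.ReLU(),
            nn.Linear(hidden, out_features),
        )

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        # The forward pass is ordinary Python code.
        return self.layers(x)


model = TinyNet()
batch = torch.rand(3, 4)  # 3 samples with 4 features each
out = model(batch)
print(out.shape)  # torch.Size([3, 2])
```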

HISTORY

PyTorch was released in 2016, and a growing number of researchers have adopted it since. Its development was led by Facebook. Facebook also maintained Caffe2, a separate deep learning framework (Caffe stands for Convolutional Architecture for Fast Feature Embedding). Converting a model defined in PyTorch to Caffe2 was difficult, so in September 2017 Facebook and Microsoft introduced the Open Neural Network Exchange (ONNX), a format created for transferring models between frameworks. Caffe2 was merged into PyTorch in March 2018.

Why PyTorch

What makes PyTorch well suited to building deep learning models? PyTorch is a flexible library that can be used in many ways. It is also dynamic: the computation graph is built as the code runs, so you can change a model's behavior with ordinary Python code according to your needs. It is widely used in Kaggle competitions, including by top finishers.
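To make "dynamic" concrete, the sketch below uses a plain Python loop and if-statement inside a hypothetical forward function. The graph autograd differentiates is whatever actually ran on that call; the function and its constants are made up for illustration.

```python
import torch


def forward(x: torch.Tensor, n_steps: int) -> torch.Tensor:
    # The graph is built while this code runs, so ordinary Python
    # loops and if-statements decide its shape on each call.
    for _ in range(n_steps):
        x = x * 2
    if x.sum() > 10:
        x = x - 1
    return x.sum()


x = torch.ones(2, requires_grad=True)

# With 3 steps: x becomes [8, 8], its sum 16 > 10, so 1 is subtracted -> [7, 7].
out = forward(x, n_steps=3)
out.backward()
print(out.item(), x.grad)  # 14.0 tensor([8., 8.])
```

Calling forward with a different n_steps would build a different graph, with no separate compilation step.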

There are numerous features that encourage deep learning scientists to use it in the development of deep learning models.

PyTorch Basics

Before working with PyTorch, it is important to understand its fundamental concepts. Tensors are the foundation of PyTorch, and most of the work you do is performed on them. Beyond tensors, there are several other concepts you will need to grasp along the way.

Matrices or Tensors

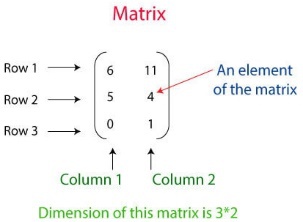

Tensors are PyTorch's main components; PyTorch can be said to be built entirely on them. In mathematical terms, a matrix is a rectangular array of numbers. In the NumPy library, such arrays are called ndarray; in PyTorch, they are called tensors. A tensor is an n-dimensional data container. For example, in PyTorch a vector is a 1-D tensor, a matrix is a 2-D tensor, a cube is a 3-D tensor, and a vector of cubes is a 4-D tensor.

The matrix above is a two-dimensional tensor with three rows and two columns.
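The number of dimensions can be checked directly. This sketch builds zero-filled tensors of each kind described above; the sizes are arbitrary except for the matrix, which matches the three-row, two-column example.

```python
import torch

vector = torch.zeros(3)                # 1-D tensor
matrix = torch.zeros(3, 2)             # 2-D tensor: 3 rows, 2 columns
cube = torch.zeros(2, 3, 2)            # 3-D tensor
cube_vector = torch.zeros(4, 2, 3, 2)  # 4-D tensor: a "vector" of 4 cubes

for name, t in [("vector", vector), ("matrix", matrix),
                ("cube", cube), ("cube vector", cube_vector)]:
    # dim() returns the number of dimensions; shape gives the size of each.
    print(name, t.dim(), tuple(t.shape))
```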

A tensor can be created in three ways:

- From a Python array (a nested list).

- Filled with ones, or with random numbers.

- From a NumPy array.

Let's take a look at how Tensors are made.

Create a PyTorch Tensor

First define the array (here, a nested Python list), then pass it as an argument to torch.Tensor().

FOR EXAMPLE

import torch

arr = [[3, 4], [8, 5]]

pyTensor = torch.Tensor(arr)

print(pyTensor)

OUTPUT

tensor([[3., 4.],
        [8., 5.]])
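The decimal points in that output come from torch.Tensor(), which always produces the default floating-point type. For building a tensor from existing data, the PyTorch documentation points to the lowercase torch.tensor() function, which infers the data type instead. A short comparison:

```python
import torch

arr = [[3, 4], [8, 5]]

a = torch.Tensor(arr)  # always the default float type (float32 unless changed)
b = torch.tensor(arr)  # infers the dtype from the data: int64 for Python ints

print(a.dtype)  # torch.float32
print(b.dtype)  # torch.int64
```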

Create a Tensor with random numbers and all ones

Use torch.rand() to create a tensor of random numbers drawn uniformly from [0, 1), and torch.ones() to create a tensor of all ones. Calling torch.manual_seed(0) before torch.rand() seeds the random number generator, so the same random values are produced on every run.

EXAMPLE

import torch

ones_t = torch.ones((2, 2))

torch.manual_seed(0)  # seed the generator so rand() returns the same values on every run

rand_t = torch.rand((2, 2))

print(ones_t)

print(rand_t)

OUTPUT

tensor([[1., 1.],
        [1., 1.]])

tensor([[0.4963, 0.7682],
        [0.0885, 0.1320]])
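Seeding is what makes the random output above repeatable. The sketch below shows that resetting the seed reproduces the same values, while a call without reseeding continues from the generator's current state and gives different ones.

```python
import torch

torch.manual_seed(0)
first = torch.rand((2, 2))

torch.manual_seed(0)  # reset the generator to the same starting state
second = torch.rand((2, 2))

third = torch.rand((2, 2))  # no reseed: the generator has moved on

print(torch.equal(first, second))  # True
print(torch.equal(second, third))  # False
```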

Create a Tensor from a NumPy array

To create a tensor from a NumPy array, first build the array, then pass it as an argument to torch.from_numpy(), which converts it to a tensor. The reverse conversion uses the tensor's numpy() method.

EXAMPLE

import torch

import numpy as np

numpy_arr = np.ones((2, 2))

pyTensor = torch.from_numpy(numpy_arr)  # NumPy array -> tensor

np_arr_from_Tensor = pyTensor.numpy()  # tensor -> NumPy array

print(np_arr_from_Tensor)

OUTPUT

[[1. 1.]
 [1. 1.]]
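One detail worth knowing: according to the PyTorch documentation, torch.from_numpy() does not copy the data, so the tensor and the array share the same memory. This sketch contrasts it with torch.tensor(), which makes an independent copy.

```python
import numpy as np
import torch

numpy_arr = np.ones((2, 2))
shared = torch.from_numpy(numpy_arr)  # shares memory with numpy_arr
copied = torch.tensor(numpy_arr)      # makes an independent copy

numpy_arr[0, 0] = 5.0  # modify the original NumPy array in place

print(shared[0, 0].item())  # 5.0: the change is visible through the tensor
print(copied[0, 0].item())  # 1.0: the copy is unaffected
print(shared.dtype)         # torch.float64, inherited from the NumPy array
```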

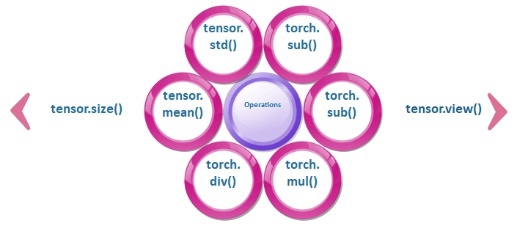

Tensor Operations

Since tensors are similar to arrays, most operations that can be performed on a NumPy array can also be performed on a tensor.
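For example, NumPy-style indexing, slicing, transposing, and boolean masking carry over directly. The values in this sketch are arbitrary.

```python
import torch

t = torch.tensor([[1, 2, 3],
                  [4, 5, 6]])

print(t[0])      # first row: tensor([1, 2, 3])
print(t[:, 1])   # second column: tensor([2, 5])
print(t[1, 2])   # single element: tensor(6)
print(t.T)       # transpose, shape (3, 2)
print(t[t > 3])  # boolean mask: tensor([4, 5, 6])
```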

Resizing a Tensor

Tensor.view() is used to resize (reshape) a tensor. Resizing changes a tensor's shape while keeping the same number of elements: for example, converting a 2x2 tensor to a 4x1 tensor, or a 4x4 tensor to a 16x1 tensor. The Tensor.size() method returns the tensor's current shape, which is useful for checking the result.

Let's take a look at how to resize a Tensor.

import torch

pyt_Tensor = torch.ones((2, 2))

print(pyt_Tensor.size())  # shows the size of this Tensor

pyt_Tensor = pyt_Tensor.view(4)  # reshape the 2x2 Tensor into a 1-D Tensor of 4 elements

print(pyt_Tensor)

OUTPUT

torch.Size([2, 2])

tensor([1., 1., 1., 1.])
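The 2x2 to 4x1 and 4x4 to 16x1 conversions described above can be written explicitly, and passing -1 lets PyTorch infer one dimension from the element count. The sketch also shows a caveat: view() only works on tensors whose memory layout is contiguous, while reshape() falls back to copying when it has to.

```python
import torch

t = torch.ones((2, 2))

col = t.view(4, 1)                    # 2x2 -> 4x1 (one column, four rows)
flat = t.view(-1)                     # -1 lets PyTorch infer the size: 4 elements
big = torch.ones((4, 4)).view(16, 1)  # 4x4 -> 16x1

print(col.size())   # torch.Size([4, 1])
print(flat.size())  # torch.Size([4])
print(big.size())   # torch.Size([16, 1])

# Transposing makes a tensor non-contiguous, so view() would fail here;
# reshape() copies the data when needed and succeeds.
tt = torch.ones((2, 3)).t()
print(tt.is_contiguous())    # False
print(tt.reshape(6).size())  # torch.Size([6])
```

In both cases the total number of elements must stay the same; asking for t.view(3) on a 4-element tensor raises an error.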

Mathematical Operations

Tensors support all the basic arithmetic operations: addition, subtraction, multiplication, and division. They can be performed with the operators +, -, * and /, or with the functions torch.add(), torch.sub(), torch.mul(), and torch.div().

Consider the following example of mathematical operations:

import numpy as np

import torch

Tensor_a = torch.ones((2, 2))

Tensor_b = torch.ones((2, 2))

result = Tensor_a + Tensor_b

result1 = torch.add(Tensor_a, Tensor_b)  # another way of addition

Tensor_a.add_(Tensor_b)  # in-place addition: modifies Tensor_a itself

print(result)

print(result1)

print(Tensor_a)

OUTPUT

tensor([[2., 2.],
        [2., 2.]])

tensor([[2., 2.],
        [2., 2.]])

tensor([[2., 2.],
        [2., 2.]])
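The other three functions follow the same pattern as torch.add(). Note that torch.mul() multiplies element by element; the matrix product is a separate operation, torch.matmul() (or the @ operator). The input values in this sketch are arbitrary.

```python
import torch

a = torch.tensor([[1., 2.], [3., 4.]])
b = torch.tensor([[2., 2.], [2., 2.]])

diff = torch.sub(a, b)    # same as a - b
prod = torch.mul(a, b)    # element-wise product, same as a * b
quot = torch.div(a, b)    # element-wise division, same as a / b
mat = torch.matmul(a, b)  # matrix product, same as a @ b

print(diff.tolist())  # [[-1.0, 0.0], [1.0, 2.0]]
print(prod.tolist())  # [[2.0, 4.0], [6.0, 8.0]]
print(quot.tolist())  # [[0.5, 1.0], [1.5, 2.0]]
print(mat.tolist())   # [[6.0, 6.0], [14.0, 14.0]]
```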

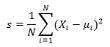

Mean and Standard Deviation

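A tensor's mean and standard deviation can be computed over all of its elements, or along a single dimension of a multi-dimensional tensor. To compute the standard deviation by hand, first calculate the mean $\mu$ of the $N$ values $x_1, \dots, x_N$, then apply the following formula to the data and its mean:

$$\mu = \frac{1}{N}\sum_{i=1}^{N} x_i, \qquad \sigma = \sqrt{\frac{1}{N-1}\sum_{i=1}^{N}(x_i - \mu)^2}$$

The $N-1$ denominator (Bessel's correction) is what PyTorch's Tensor.std() uses by default.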

In practice, you do not need to apply the formula yourself: Tensor.mean() returns the mean of a tensor and Tensor.std() returns its standard deviation (Tensor.var() returns the variance).
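The sketch below computes both statistics over a whole tensor and along each dimension, then checks Tensor.std() against the formula with the N - 1 denominator. Both methods require a floating-point tensor, which is why the values are written as 1., 2., and so on; the data itself is arbitrary.

```python
import torch

x = torch.tensor([[1., 2., 3.],
                  [4., 5., 6.]])

# Over all elements.
print(x.mean().item())           # 3.5
print(round(x.std().item(), 4))  # 1.8708 (uses N - 1 by default)

# Along one dimension of the 2-D tensor.
print(x.mean(dim=0).tolist())  # [2.5, 3.5, 4.5]  column means
print(x.mean(dim=1).tolist())  # [2.0, 5.0]       row means
print(x.std(dim=1).tolist())   # [1.0, 1.0]       row standard deviations

# The same standard deviation from the formula, step by step.
mu = x.mean()
manual_std = torch.sqrt(((x - mu) ** 2).sum() / (x.numel() - 1))
print(torch.allclose(manual_std, x.std()))  # True
```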